
🔥🔥🔥 [2023/09/07] We released CodeFuse-CodeLlama-34B, which achieves 74.4% Python Pass@1 (greedy decoding) on the HumanEval benchmark, surpassing GPT-4 (2023/03/15) and ChatGPT-3.5.

🔥 [2023/08/26] We released MFTCoder, which supports fine-tuning Code Llama, Llama, Llama 2, StarCoder, ChatGLM2, CodeGeeX2, Qwen, and GPT-NeoX models with LoRA/QLoRA.

| Model | HumanEval (Pass@1) | Date |
|---|---|---|
| CodeFuse-CodeLlama-34B | 74.4% | 2023/09 |
| WizardCoder-Python-34B-V1.0 | 73.2% | 2023/08 |
| GPT-4 (zero-shot) | 67.0% | 2023/03 |
| PanGu-Coder2 15B | 61.6% | 2023/08 |
| CodeLlama-34b-Python | 53.7% | 2023/08 |
| CodeLlama-34b | 48.8% | 2023/08 |
| GPT-3.5 (zero-shot) | 48.1% | 2022/11 |
| OctoCoder | 46.2% | 2023/08 |
| StarCoder-15B | 33.6% | 2023/05 |
| LLaMA 2 70B (zero-shot) | 29.9% | 2023/07 |
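
The Pass@1 scores above are measured on HumanEval, where each generated completion is run against the problem's unit tests. As an illustrative sketch (not the evaluation harness used to produce these numbers), the unbiased pass@k estimator from the original HumanEval paper can be written as follows. With greedy decoding there is a single deterministic sample per problem, so pass@1 reduces to the fraction of problems solved.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: number of samples generated, c: samples passing all unit tests, k: budget.
    """
    if n - c < k:
        # Every size-k subset of the samples contains at least one correct one.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average the per-problem pass@k over a benchmark of (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Greedy decoding: one sample per problem (n = 1), so c is 0 or 1.
greedy = [(1, 1), (1, 0), (1, 1), (1, 1)]
print(benchmark_pass_at_k(greedy, k=1))  # 0.75
```

For example, 74.4% on HumanEval's 164 problems corresponds to about 122 solved problems (122 / 164 ≈ 0.744).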


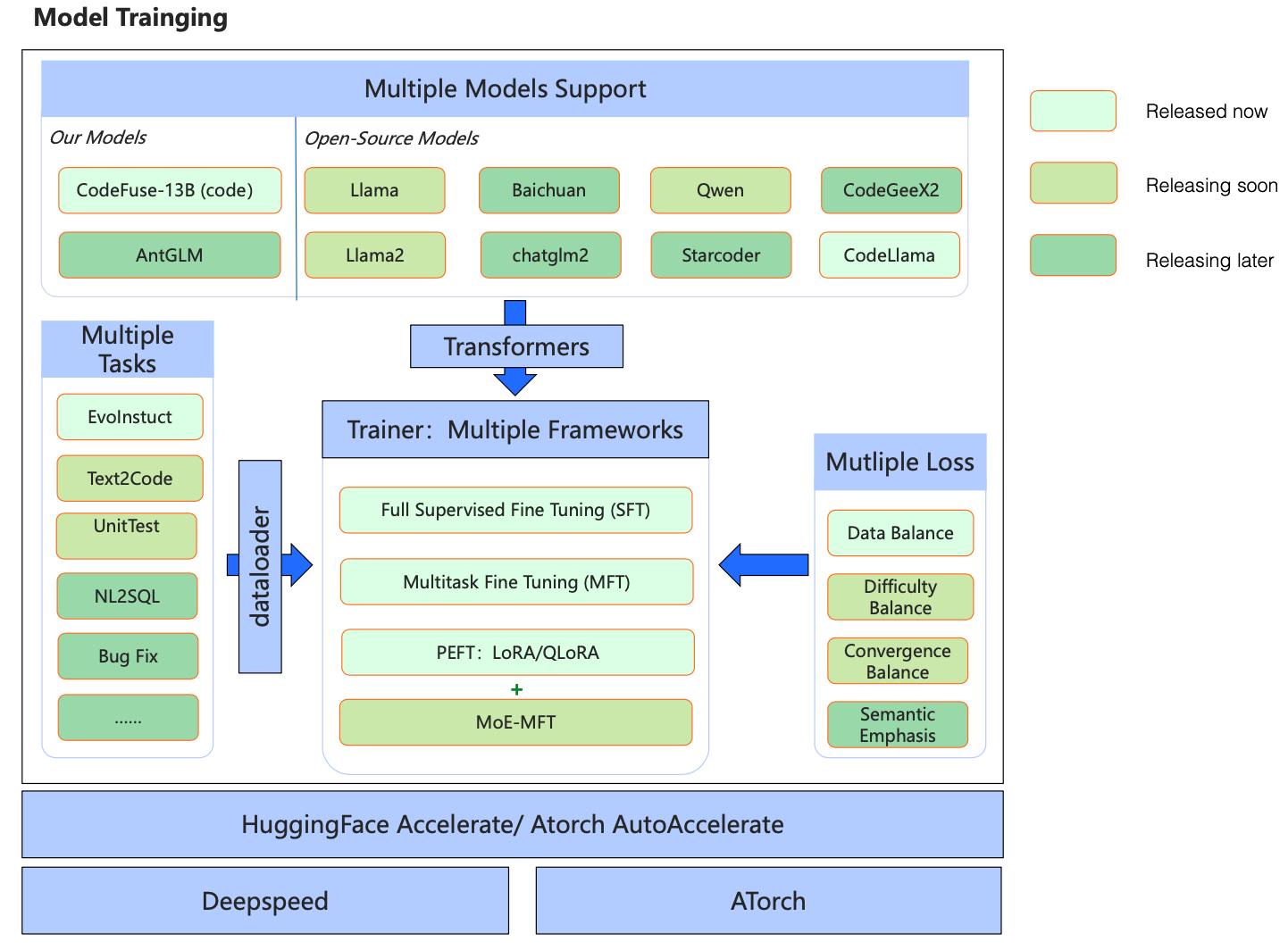

CodeFuse-MFTCoder is an open-source project from CodeFuse for multi-task Code LLMs (large language models for code tasks). It includes models, datasets, training codebases, and inference guides. MFTCoder provides two codebases for fine-tuning large language models:

- `mft_peft_hf` is based on the HuggingFace Accelerate and DeepSpeed frameworks.
- `mft_atorch` is based on ATorch, a fast distributed training framework for LLMs.

The aim of this project is to foster collaboration and share advancements in large language models, particularly within the domain of code development.

✅ Multi-task: Train models on multiple tasks while maintaining a balance between them. The models can even generalize to new, previously unseen tasks.

✅ Multi-model: It integrates state-of-the-art open-source models such as GPT-NeoX, LLaMA, LLaMA 2, Baichuan, Qwen, ChatGLM2, and more. (The fine-tuned models will be released in the near future.)

✅ Multi-framework: It supports both HuggingFace Accelerate (with DeepSpeed) and ATorch.

✅ Efficient fine-tuning: It supports LoRA and QLoRA, enabling fine-tuning of large models with minimal resources. The training speed is sufficient for most fine-tuning scenarios.
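
LoRA freezes a pretrained weight matrix W and trains only a low-rank update scaled by α/r, so an adapted layer computes Wx + (α/r)·B(Ax). Because this update is linear, it can be folded into W after training, which is what merging a LoRA adapter into the base model amounts to. The pure-Python sketch below uses hypothetical helper names (not MFTCoder's API) and checks on a toy layer that the merged weight gives the same output as the unmerged adapter. It does not model QLoRA's quantized base weights.

```python
def matmul(x, y):
    """Multiply matrices given as lists of rows: (m x k) @ (k x n) -> (m x n)."""
    return [[sum(x[i][t] * y[t][j] for t in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]


def lora_forward(w, a, b, alpha, r, x):
    """Unmerged LoRA layer: W x + (alpha / r) * B (A x).

    w: d_out x d_in (frozen), a: r x d_in and b: d_out x r (trainable).
    """
    scale = alpha / r
    base = matvec(w, x)
    update = matvec(b, matvec(a, x))
    return [h + scale * u for h, u in zip(base, update)]


def merge_lora(w, a, b, alpha, r):
    """Fold the adapter into the base weight: W' = W + (alpha / r) * B A."""
    scale = alpha / r
    delta = matmul(b, a)
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


# Toy layer: d_out = 2, d_in = 3, rank r = 1.
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
A = [[1.0, 2.0, 3.0]]
B = [[0.5], [0.0]]
x = [1.0, 1.0, 1.0]

merged = merge_lora(W, A, B, alpha=2, r=1)
print(merged)                                    # [[2.0, 2.0, 3.0], [0.0, 1.0, 0.0]]
print(matvec(merged, x))                         # [7.0, 1.0]
print(lora_forward(W, A, B, alpha=2, r=1, x=x))  # [7.0, 1.0]
```

In practice this merge is usually done with a library rather than by hand; for example, the PEFT library exposes `merge_and_unload()` on LoRA models.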

The main components of this project include:

- Support for both SFT (Supervised Fine-Tuning) and MFT (Multi-task Fine-Tuning). The current MFTCoder balances data across multiple tasks; future releases will also balance task difficulty and convergence speed during training.

- Support for QLoRA instruction fine-tuning, as well as LoRA fine-tuning.

- Support for most mainstream open-source large models, particularly those relevant to Code LLMs, such as Code Llama, StarCoder, CodeGeeX2, Qwen, GPT-NeoX, and more.

- Support for merging LoRA adapter weights into the base model, which simplifies inference.

- Release of two high-quality code-related instruction fine-tuning datasets: Evol-instruction-66k and CodeExercise-Python-27k.

- Release of two models: CodeFuse-13B and CodeFuse-CodeLlama-34B.
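
One simple way to keep tasks with many tokens from dominating a multi-task loss is to average token losses within each task first and then average across tasks. The sketch below illustrates that idea only; it is an assumption for illustration and not necessarily the exact weighting MFTCoder uses, and the task names are hypothetical.

```python
from collections import defaultdict


def task_balanced_loss(token_losses, task_ids):
    """Average each task's mean token loss, then average across tasks.

    token_losses: per-token losses for one batch (padding already removed).
    task_ids: task label for each token; same length as token_losses.
    """
    if not token_losses or len(token_losses) != len(task_ids):
        raise ValueError("need equal-length, non-empty token_losses and task_ids")
    sums = defaultdict(float)
    counts = defaultdict(int)
    for loss, task in zip(token_losses, task_ids):
        sums[task] += loss
        counts[task] += 1
    per_task = [sums[t] / counts[t] for t in sums]
    return sum(per_task) / len(per_task)


# Toy batch: "code_completion" contributes 6 tokens, "text2sql" only 2.
losses = [1.0] * 6 + [3.0] * 2
tasks = ["code_completion"] * 6 + ["text2sql"] * 2

plain_mean = sum(losses) / len(losses)
print(plain_mean)                         # 1.5: dominated by the larger task
print(task_balanced_loss(losses, tasks))  # 2.0: each task weighted equally
```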

To begin, ensure that you have successfully installed CUDA (version >= 11.4, preferably 11.7) along with the necessary drivers. Additionally, make sure you have installed torch (version 2.0.1).

Next, we have provided an init_env.sh script to simplify the installation of required packages. Execute the following command to run the script:

```bash
sh init_env.sh
```

If you need FlashAttention, please refer to https://github.com/Dao-AILab/flash-attention for installation instructions.
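
Before training, you may want to confirm the CUDA and torch requirements listed above. The helper below is a hypothetical pre-flight check (it is not part of MFTCoder); it only compares version strings, using CUDA >= 11.4 and torch 2.0.1 as stated in this README.

```python
import re

MIN_CUDA = (11, 4)          # "CUDA (version >= 11.4)"
REQUIRED_TORCH = (2, 0, 1)  # "torch (version 2.0.1)"


def parse_version(text):
    """Turn '2.0.1+cu117' into (2, 0, 1) and '11.7' into (11, 7)."""
    match = re.match(r"\d+(?:\.\d+)*", text)
    if match is None:
        raise ValueError(f"unrecognized version string: {text!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def check_env(cuda_version, torch_version):
    """Return a list of problems; an empty list means the versions look fine."""
    problems = []
    if cuda_version is None:
        problems.append("torch was built without CUDA support")
    elif parse_version(cuda_version) < MIN_CUDA:
        problems.append(f"CUDA {cuda_version} is older than 11.4")
    if parse_version(torch_version)[:3] != REQUIRED_TORCH:
        problems.append(f"torch {torch_version} is not 2.0.1")
    return problems


print(check_env("11.7", "2.0.1+cu117"))  # []
print(check_env("11.3", "1.13.1"))       # ['CUDA 11.3 is older than 11.4', 'torch 1.13.1 is not 2.0.1']
```

With torch installed, `check_env(torch.version.cuda, torch.__version__)` checks the current environment; `torch.version.cuda` is `None` on CPU-only builds.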

🚀 HuggingFace Accelerate + DeepSpeed codebase for MFT (Multi-task Fine-tuning)

🚀 ATorch codebase for MFT (Multi-task Fine-tuning)

We are excited to release the following two CodeLLMs trained by MFTCoder, now available on Hugging Face:

| Model | Base Model | Training Examples | Batch Size | Seq Length |
|---|---|---|---|---|
| 🔥🔥🔥 CodeFuse-CodeLlama-34B | CodeLlama-34b-Python | 600k | 80 | 4096 |
| 🔥 CodeFuse-13B | CodeFuse-13B | 66k | 64 | 4096 |

We are also pleased to release two code-related instruction datasets, meticulously selected from a range of datasets to facilitate multitask training. Moving forward, we are committed to releasing additional instruction datasets covering various code-related tasks.

| Dataset | Introduction |
|---|---|
| ⭐ Evol-instruction-66k | Derived from open-evol-instruction-80k by filtering out low-quality and repeated instructions, as well as instructions similar to HumanEval problems, yielding a high-quality code instruction dataset. |
| ⭐ CodeExercise-Python-27k | Python code exercise instruction dataset generated by ChatGPT. |
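
The Evol-instruction-66k description mentions removing repeated instructions and instructions similar to HumanEval, but the exact criteria are not given here. The sketch below shows one plausible approach under stated assumptions: exact duplicates are detected after normalizing case and whitespace, and "similar" means a word 3-gram Jaccard similarity of at least 0.5 to any benchmark prompt. The n-gram size, threshold, and example prompts are all hypothetical, and low-quality filtering is not covered.

```python
import re


def word_ngrams(text, n):
    """Set of lower-cased word n-grams in text."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a, b):
    """Jaccard similarity of two sets (0.0 when both are empty)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def filter_instructions(instructions, benchmark_prompts, n=3, threshold=0.5):
    """Drop exact duplicates and instructions too similar to any benchmark prompt."""
    bench = [word_ngrams(p, n) for p in benchmark_prompts]
    seen = set()
    kept = []
    for inst in instructions:
        key = " ".join(inst.lower().split())  # case- and whitespace-insensitive key
        if key in seen:
            continue
        seen.add(key)
        grams = word_ngrams(inst, n)
        if any(jaccard(grams, b) >= threshold for b in bench):
            continue
        kept.append(inst)
    return kept


benchmark = ["Return the sum of all even numbers in a list of integers."]
candidates = [
    "Write a function that reverses a string.",
    "write a function  that reverses a string.",
    "Return the sum of all even numbers in a list of integers.",
    "Explain the difference between a list and a tuple in Python.",
]
print(filter_instructions(candidates, benchmark))
# ['Write a function that reverses a string.', 'Explain the difference between a list and a tuple in Python.']
```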