
CodeSearchNet is a collection of datasets and a deep learning framework built on TensorFlow to explore the problem of code retrieval using natural language. This research is a continuation of some ideas presented in this blog post and is a joint collaboration between GitHub and the Deep Program Understanding group at Microsoft Research - Cambridge. We intend to provide the community with a platform for this research by offering the following:

- Instructions for obtaining large corpora of relevant data

- Open source code for a range of baseline models, along with pre-trained weights

- Baseline evaluation metrics and utilities.

- Mechanisms to track progress on the community benchmark. This is hosted by Weights & Biases, which is free for open source projects.

We hope that CodeSearchNet is a good step toward engaging the broader machine learning and NLP community in developing new machine learning models that understand source code and natural language. Although we describe a specific task here, we expect and welcome many other uses of our dataset.

More context on the motivation for this problem is in our blog post.

The primary dataset consists of 2 million (comment, code) pairs from open source libraries. Concretely, a comment is a top-level function or method comment (e.g. docstrings in Python), and code is an entire function or method. Currently, the dataset contains Python, JavaScript, Ruby, Go, Java, and PHP code. Throughout this repo, we refer to the terms docstring and query interchangeably. We partition the data into train, validation, and test splits such that code from the same repository can only exist in one partition. Currently this is the only dataset on which we train our model. Summary statistics about this dataset can be found in this notebook.
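The split procedure itself is not described here. One way to get the same guarantee (no repository appears in more than one partition) is to assign each repository to a partition by hashing its name. The sketch below does that; the SHA-256 bucketing, the 80/10/10 ratios, and the helper names `assign_partition` and `split_by_repo` are assumptions for illustration, not the dataset's actual procedure.

```python
import hashlib
from typing import Dict, Iterable, List


def assign_partition(repo: str, valid_frac: float = 0.1, test_frac: float = 0.1) -> str:
    """Deterministically map a repository name ("owner/repo") to a partition.

    Hypothetical helper: the hash scheme and fractions are illustrative assumptions.
    """
    # Hash the repo name to a stable number in [0, 1).
    digest = hashlib.sha256(repo.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000
    if bucket < test_frac:
        return "test"
    if bucket < test_frac + valid_frac:
        return "valid"
    return "train"


def split_by_repo(examples: Iterable[Dict]) -> Dict[str, List[Dict]]:
    """Group examples (dicts with a 'repo' key) so each repo lands in one partition."""
    splits: Dict[str, List[Dict]] = {"train": [], "valid": [], "test": []}
    for ex in examples:
        splits[assign_partition(ex["repo"])].append(ex)
    return splits
```

Because the partition depends only on the repository name, every function from `soimort/you-get` ends up in the same split no matter how many functions it contributes.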

Our model ingests a parallel corpus of (comment, code) pairs and learns to retrieve a code snippet given a natural language query.

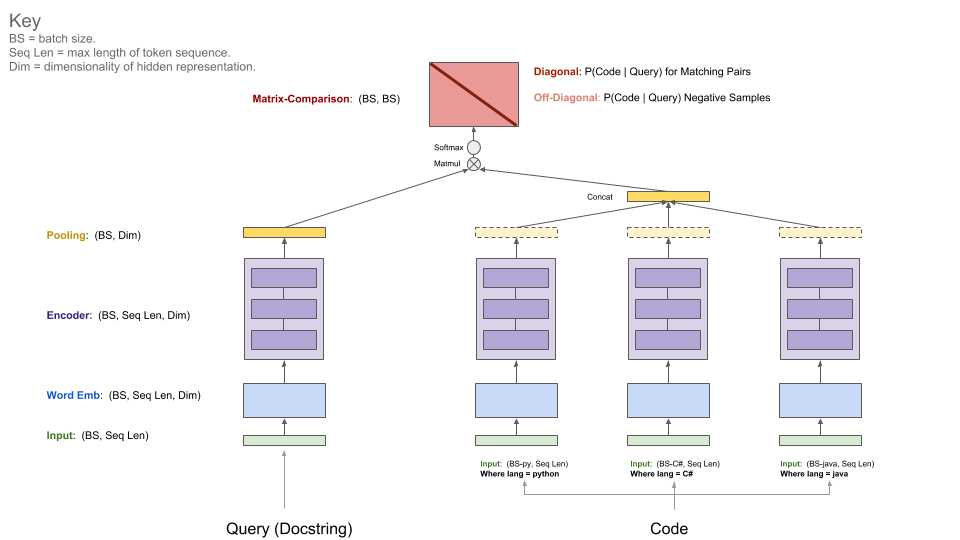

The query has a single encoder, whereas each programming language has its own encoder. Our initial release has three languages: Python, Java, and C#. The available encoders are Neural-Bag-Of-Words, RNN, 1D-CNN, Self-Attention (BERT), and a 1D-CNN+Self-Attention Hybrid.

The diagram below illustrates the general architecture of our model:

The metric we use for evaluation is Mean Reciprocal Rank (MRR). To calculate MRR at evaluation time, we use the other examples in the same batch as negative samples (distractors), with a fixed batch size of 1,000. (Note: we fix the batch size to 1,000 at evaluation time to standardize the MRR calculation; we do not do this at training time.)

For example, consider a dataset of 10,005 (comment, code) pairs. For every (comment, code) pair in each of the 10 full batches (the remaining 5 examples are excluded), we use the comment to retrieve the code, with the other code snippets in the batch serving as distractors. We then average the MRR across all 10 batches to compute the MRR for the dataset. In general, if the dataset size is not divisible by 1,000, the final partial batch (any remainder of fewer than 1,000 examples) is excluded from the MRR calculation.
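The batching arithmetic above can be sketched as follows. `full_batches` and `dataset_mrr` are hypothetical helpers written for this illustration, not functions from this repository; the sketch only shows how the remainder is dropped and how per-batch scores are averaged.

```python
from typing import List, Sequence

EVAL_BATCH_SIZE = 1000  # fixed evaluation batch size described above


def full_batches(num_examples: int, batch_size: int = EVAL_BATCH_SIZE) -> List[range]:
    """Index ranges of the complete batches; a remainder smaller than batch_size is dropped."""
    num_full = num_examples // batch_size
    return [range(i * batch_size, (i + 1) * batch_size) for i in range(num_full)]


def dataset_mrr(batch_mrrs: Sequence[float]) -> float:
    """Dataset-level MRR as the unweighted mean of the per-batch MRRs."""
    if not batch_mrrs:
        raise ValueError("need at least one full batch")
    return sum(batch_mrrs) / len(batch_mrrs)


batches = full_batches(10_005)
print(len(batches))                              # 10 full batches
print(10_005 - len(batches) * EVAL_BATCH_SIZE)   # 5 examples excluded
```

Since every retained batch has exactly 1,000 examples, the mean of the batch MRRs equals the mean reciprocal rank over all retained queries.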

Since our model architecture is designed to learn a common representation for both code and natural language, we rank results for the MRR calculation by the similarity between these representations. By default, we use cosine similarity.
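A minimal NumPy sketch of the per-batch calculation, assuming query and code embeddings for one batch have already been computed and that row i of each matrix belongs to the same (comment, code) pair. `batch_mrr` is a hypothetical helper, and resolving ties in favor of the correct snippet is an assumption of this sketch.

```python
import numpy as np


def batch_mrr(query_emb: np.ndarray, code_emb: np.ndarray) -> float:
    """MRR for one batch where query i should retrieve code i.

    Every other code snippet in the batch acts as a distractor.
    """
    # L2-normalize rows so that dot products are cosine similarities.
    q = query_emb / np.linalg.norm(query_emb, axis=1, keepdims=True)
    c = code_emb / np.linalg.norm(code_emb, axis=1, keepdims=True)
    sims = q @ c.T                     # sims[i, j] = cos(query_i, code_j)
    correct = np.diag(sims)[:, None]   # similarity of each query to its own code
    # Rank = 1 + number of distractors scored strictly higher (ties favor the correct snippet).
    ranks = 1 + (sims > correct).sum(axis=1)
    return float(np.mean(1.0 / ranks))
```

With a batch of 1,000 pairs, `query_emb` and `code_emb` would each have shape `(1000, d)`, and the result is the value averaged across batches.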

We annotated retrieval results for the six languages for 99 general queries. This dataset is used as ground-truth data for evaluation only. One task is to predict the top 100 results per language per query from all functions (without comments). NDCG (Normalized Discounted Cumulative Gain) is our main metric.
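For reference, here is a sketch of NDCG@k for a single query, using the common gain rel_i / log2(i + 1) at 1-based rank i. The relevance scale, the treatment of unannotated results as irrelevant (gain 0), and the helper names `dcg` and `ndcg_at_k` are assumptions; the benchmark's exact formulation may differ.

```python
import math
from typing import Dict, List, Sequence


def dcg(relevances: Sequence[float]) -> float:
    """Discounted cumulative gain: sum of rel_i / log2(i + 1) over 1-based ranks i."""
    return sum(rel / math.log2(rank + 1) for rank, rel in enumerate(relevances, start=1))


def ndcg_at_k(ranked_ids: List[str], judgments: Dict[str, float], k: int = 100) -> float:
    """NDCG@k for one query.

    ranked_ids: the model's ranked function ids (only the top k are scored).
    judgments: annotated relevance per function id; unannotated ids count as 0 here.
    """
    gains = [judgments.get(fid, 0.0) for fid in ranked_ids[:k]]
    ideal = sorted(judgments.values(), reverse=True)[:k]
    ideal_dcg = dcg(ideal)
    return dcg(gains) / ideal_dcg if ideal_dcg > 0 else 0.0
```

A per-language score would then be the mean of `ndcg_at_k` over the annotated queries.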

We are using a community benchmark for this project to encourage collaboration and improve reproducibility. It is hosted by Weights & Biases (W&B), which is free for open source projects. Our entries in the benchmark link to detailed logs of our training and evaluation metrics, as well as model artifacts, and we encourage other participants to provide as much transparency as possible. Here is the current state of the benchmark:

We invite the community to improve on these baselines by submitting PRs with new performance metrics. Please see these instructions for submitting to the benchmark. Some requirements for submission:

- Results must be reproducible with clear instructions.

- Code must be open sourced and clearly licensed.

- Model must demonstrate an improvement on at least one of the auxiliary tests.

We are excited to offer the community useful tools for the challenging research tasks of learning representations of code and retrieving code using natural language: datasets, baseline models, and a collaboration forum via the Weights & Biases benchmark. We encourage you to contribute by improving on our baseline models, sharing your ideas with others, and submitting your results to the collaborative benchmark.

We anticipate that the community will design custom architectures and use frameworks other than TensorFlow. Furthermore, we anticipate that additional datasets will be useful. It is not our intention to integrate these models, approaches, and datasets into this repository as a superset of all available ideas. Rather, we intend to maintain the baseline models and a central place of reference with links to related repositories from the community. We are accepting PRs that update the documentation, link to your project(s) with improved benchmarks, fix bugs, or make minor improvements to the code. Here are more specific guidelines for contributing to this repository. Please open an issue if you are unsure of the best course of action.

You should only have to perform the setup steps once to download the data and prepare the environment.

- Due to the complexity of installing all dependencies, we prepared Docker containers to run this code. You can find instructions on how to install Docker in the official docs. Additionally, you must install Nvidia-Docker to satisfy GPU-compute related dependencies. For those who are new to Docker, this blog post provides a gentle introduction focused on data science.

- After installing Docker, you need to download the pre-processed datasets, which are hosted on S3. You can do this by running:

  script/setup

  This will build Docker containers and download the datasets. By default, the data is downloaded into the resources/data/ folder inside this repository, with the directory structure described here.

  The datasets you will download (most of them compressed) have a combined size of only ~3.5 GB.

  For more about the data, see Data Details below as well as this notebook.

The training steps below assume that you have a suitable NVIDIA GPU with CUDA v9.0 installed. We used AWS P3-V100 instances (a p3.2xlarge is sufficient).

- Start the model run environment by running:

  script/console

  This will drop you into the shell of a Docker container with all necessary dependencies installed, including the code in this repository, along with the data that you downloaded in the previous step. By default you will be placed in the src/ folder of this GitHub repository. From here you can execute commands to run the model.

- Set up W&B (free for open source projects) per the instructions below if you would like to share your results on the community benchmark. This is optional but highly recommended.

- The entry point to this model is src/train.py. You can see various options by executing the following command:

  python train.py --help

  To test if everything is working on a small dataset, you can run the following command:

  python train.py --testrun

- Now you are prepared for a full training run. Example commands to kick off training runs:

- Training a neural-bag-of-words model on all languages:

  python train.py --model neuralbow

  The above command assumes default values for the location(s) of the training data and for the destination where you would like to save the output model. The default locations for training data are specified in /src/data_dirs_{train,valid,test}.txt. These files each contain a list of paths where data for the corresponding partition exists. If more than one path is specified (separated by a newline), the data from all the paths will be concatenated together. For example, this is the content of src/data_dirs_train.txt:

  $ cat data_dirs_train.txt
  ../resources/data/python/final/jsonl/train
  ../resources/data/javascript/final/jsonl/train
  ../resources/data/java/final/jsonl/train
  ../resources/data/php/final/jsonl/train
  ../resources/data/ruby/final/jsonl/train
  ../resources/data/go/final/jsonl/train

  By default, models are saved in the resources/saved_models folder of this repository.

- Training a 1D-CNN model on Python data only:

  python train.py --model 1dcnn /trained_models ../resources/data/python/final/jsonl/train ../resources/data/python/final/jsonl/valid ../resources/data/python/final/jsonl/test

  The above command overrides the default location for saving the model (to /trained_models) and also overrides the source of the train, validation, and test sets.
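The data_dirs_{train,valid,test}.txt files described above are plain newline-separated lists of directories. The sketch below shows one way to read such a list and concatenate the files found in each directory, in listed order. Resolving relative paths against the folder that holds the list file, the *.jsonl.gz glob pattern, and the helper names `read_data_dirs` and `list_shards` are assumptions for illustration; train.py's own loading code may differ.

```python
from pathlib import Path
from typing import List


def read_data_dirs(list_file: str) -> List[Path]:
    """Return the directories listed one per line in a data_dirs_*.txt file.

    Blank lines are skipped; relative paths are resolved against the folder
    containing the list file (an assumption of this sketch).
    """
    base = Path(list_file).parent
    dirs = []
    for line in Path(list_file).read_text().splitlines():
        line = line.strip()
        if line:
            dirs.append((base / line).resolve())
    return dirs


def list_shards(data_dirs: List[Path], pattern: str = "*.jsonl.gz") -> List[Path]:
    """Concatenate the data files found in every listed directory, in listed order."""
    shards: List[Path] = []
    for d in data_dirs:
        shards.extend(sorted(d.glob(pattern)))
    return shards
```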

Additional notes:

- Options for --model are currently listed in src/model_restore_helper.get_model_class_from_name.

- Hyperparameters are specific to the respective model/encoder classes; a simple trick to discover them is to kick off a run without specifying hyperparameter choices, as that will print a list of all used hyperparameters with their default values (in JSON format).

- By default, models are saved in the /resources/saved_models folder of this repository, but this can be overridden as shown above.

To initialize W&B:

- Navigate to the /src directory in this repository.

- If it's your first time using W&B on a machine, you will need to log in:

  $ wandb login

- You will be asked for your API key, which appears on your W&B profile page.

There are two options for acquiring the data.

- Recommended: obtain the preprocessed dataset.

We recommend this option because parsing all the code from source can require a considerable amount of computation. However, there may be an opportunity to parse, clean and transform the original data in new ways that can increase performance. If you have run the setup steps above you will already have the preprocessed files, and nothing more needs to be done. The data will be available in the /resources/data folder of this repository, with this directory structure.

- Extract the data from source and parse, annotate, and deduplicate the data. To do this, see the data extraction README.

Data is stored in jsonlines format. Each line in the uncompressed file represents one example (usually a function with an associated comment). A prettified example of one row is illustrated below.

- repo: the owner/repo

- path: the full path to the original file

- func_name: the function or method name

- original_string: the raw string before tokenization or parsing

- language: the programming language

- code: the part of the original_string that is code

- code_tokens: tokenized version of code

- docstring: the top-level comment or docstring, if it exists in the original string

- docstring_tokens: tokenized version of docstring

- sha: this field is not used (it matches the commit hash that appears in url)

- partition: a flag indicating which partition this datum belongs to ({train, valid, test, etc.}). This is not used by the model. Instead, we rely on the directory structure to denote the partition of the data.

- url: the URL for this code snippet, including the line numbers

Code, comments, and docstrings are extracted in a language-specific manner, removing artifacts of that language.

{
  'code': 'def get_vid_from_url(url):\n'
          ' """Extracts video ID from URL.\n'
          ' """\n'
          " return match1(url, r'youtu\\.be/([^?/]+)') or \\\n"
          " match1(url, r'youtube\\.com/embed/([^/?]+)') or \\\n"
          " match1(url, r'youtube\\.com/v/([^/?]+)') or \\\n"
          " match1(url, r'youtube\\.com/watch/([^/?]+)') or \\\n"
          " parse_query_param(url, 'v') or \\\n"
          " parse_query_param(parse_query_param(url, 'u'), 'v')",
  'code_tokens': ['def', 'get_vid_from_url', '(', 'url', ')', ':', 'return',
                  'match1', '(', 'url', ',', "r'youtu\\.be/([^?/]+)'", ')', 'or',
                  'match1', '(', 'url', ',', "r'youtube\\.com/embed/([^/?]+)'", ')', 'or',
                  'match1', '(', 'url', ',', "r'youtube\\.com/v/([^/?]+)'", ')', 'or',
                  'match1', '(', 'url', ',', "r'youtube\\.com/watch/([^/?]+)'", ')', 'or',
                  'parse_query_param', '(', 'url', ',', "'v'", ')', 'or',
                  'parse_query_param', '(', 'parse_query_param', '(', 'url', ',', "'u'", ')', ',', "'v'", ')'],
  'docstring': 'Extracts video ID from URL.',
  'docstring_tokens': ['Extracts', 'video', 'ID', 'from', 'URL', '.'],
  'func_name': 'YouTube.get_vid_from_url',
  'language': 'python',
  'original_string': 'def get_vid_from_url(url):\n'
                     ' """Extracts video ID from URL.\n'
                     ' """\n'
                     " return match1(url, r'youtu\\.be/([^?/]+)') or \\\n"
                     " match1(url, r'youtube\\.com/embed/([^/?]+)') or "
                     '\\\n'
                     " match1(url, r'youtube\\.com/v/([^/?]+)') or \\\n"
                     " match1(url, r'youtube\\.com/watch/([^/?]+)') or "
                     '\\\n'
                     " parse_query_param(url, 'v') or \\\n"
                     " parse_query_param(parse_query_param(url, 'u'), "
                     "'v')",
  'partition': 'test',
  'path': 'src/you_get/extractors/youtube.py',
  'repo': 'soimort/you-get',
  'sha': 'b746ac01c9f39de94cac2d56f665285b0523b974',
  'url': 'https://github.com/soimort/you-get/blob/b746ac01c9f39de94cac2d56f665285b0523b974/src/you_get/extractors/youtube.py#L135-L143'
}
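Each row like the one above can be read with the Python standard library. This sketch assumes the shards are gzip-compressed JSON Lines files (.jsonl.gz). The helper `read_examples` and the shard name in the commented usage line are hypothetical; point the call at an actual file under resources/data/.

```python
import gzip
import json
from typing import Dict, Iterator


def read_examples(path: str) -> Iterator[Dict]:
    """Yield one example dict per non-empty line of a gzip-compressed JSON Lines file."""
    with gzip.open(path, "rt", encoding="utf-8") as f:
        for line in f:
            if line.strip():
                yield json.loads(line)


# Hypothetical shard name; adjust to a real file under resources/data/.
# for ex in read_examples("../resources/data/python/final/jsonl/test/python_test_0.jsonl.gz"):
#     print(ex["func_name"], ex["docstring_tokens"][:5])
```

Streaming line by line keeps memory use flat, which matters when iterating over every shard of a language.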

Furthermore, summary statistics such as row counts and token length histograms can be found in this notebook.

The shell script /script/setup will automatically download these files into the /resources/data directory. Here are the links to the relevant files for reference:

The s3 links follow this pattern:

https://s3.amazonaws.com/code-search-net/CodeSearchNet/v2/{python,java,go,php,javascript,ruby}.zip

For example, the link for the Java data is:

https://s3.amazonaws.com/code-search-net/CodeSearchNet/v2/java.zip
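script/setup already handles the download. If you want to fetch a single language by hand, here is a minimal standard-library sketch built on the URL pattern above. The helper names and the default destination folder are assumptions, and the folder layout inside each archive is not described here, so check the result before pointing train.py at it.

```python
import urllib.request
import zipfile
from pathlib import Path

LANGUAGES = ("python", "java", "go", "php", "javascript", "ruby")
URL_PATTERN = "https://s3.amazonaws.com/code-search-net/CodeSearchNet/v2/{}.zip"


def dataset_url(language: str) -> str:
    """Build the S3 URL for one language's pre-processed archive."""
    if language not in LANGUAGES:
        raise ValueError(f"unknown language: {language!r}")
    return URL_PATTERN.format(language)


def download_and_extract(language: str, dest: str = "resources/data") -> Path:
    """Download <language>.zip and unpack it under dest (requires network access)."""
    dest_dir = Path(dest)
    dest_dir.mkdir(parents=True, exist_ok=True)
    archive = dest_dir / f"{language}.zip"
    urllib.request.urlretrieve(dataset_url(language), str(archive))
    with zipfile.ZipFile(archive) as zf:
        zf.extractall(dest_dir)
    return dest_dir
```

For example, download_and_extract("java") fetches the Java archive shown above.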

The size of the pre-processed dataset is 1.8 GB.

We also provide all functions (without comments), about 6M functions in total. This data is located in the following S3 bucket:

For example, the link for the Python file is:

https://s3.amazonaws.com/code-search-net/CodeSearchNet/v2/python_dedupe_definitions_v2.pkl

The size of the raw filtered dataset is 17 GB.
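A minimal sketch for opening one of these pickle files in Python, assuming a file such as python_dedupe_definitions_v2.pkl has been downloaded locally. load_definitions is a hypothetical helper. The structure of the unpickled object is not described here, so inspect it before use. Unpickling may also require whichever libraries were used to create the file.

```python
import pickle
from typing import Any


def load_definitions(path: str) -> Any:
    """Load a *_dedupe_definitions_v2.pkl file and return the unpickled object.

    Only unpickle files from sources you trust: pickle can execute arbitrary code.
    """
    with open(path, "rb") as f:
        return pickle.load(f)


# defs = load_definitions("python_dedupe_definitions_v2.pkl")
# print(type(defs))  # inspect the structure before relying on it
```

Given the 17 GB total size, load one language at a time.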

Here are related projects from the community that leverage these ideas. PRs featuring other projects are welcome!

- Repository recommendations using idi-o-matic.

This project is released under the MIT License.

Container images built with this project include third party materials. See the third party notice for details.