Master Spark's core APIs with Scala.

- https://rockthejvm.com/p/spark-essentials

- https://github.com/rockthejvm/spark-essentials

- https://github.com/rockthejvm/spark-essentials/releases/tag/start

- https://docs.docker.com/desktop/install/ubuntu/

- https://docs.docker.com/engine/install/ubuntu/#set-up-the-repository

$ docker compose up

In another shell:

$ ./psql.sh

Build Spark images for master, worker and submit (do this once):

$ cd spark-cluster

$ ./build-images.sh

Start a Spark cluster with 1 worker:

$ docker compose up --scale spark-worker=1

Connect to the master node and run the Spark SQL shell:

$ docker exec -it spark-cluster-spark-master-1 bash

$ cd spark/

$ ./bin/spark-sql

Or run a Spark shell:

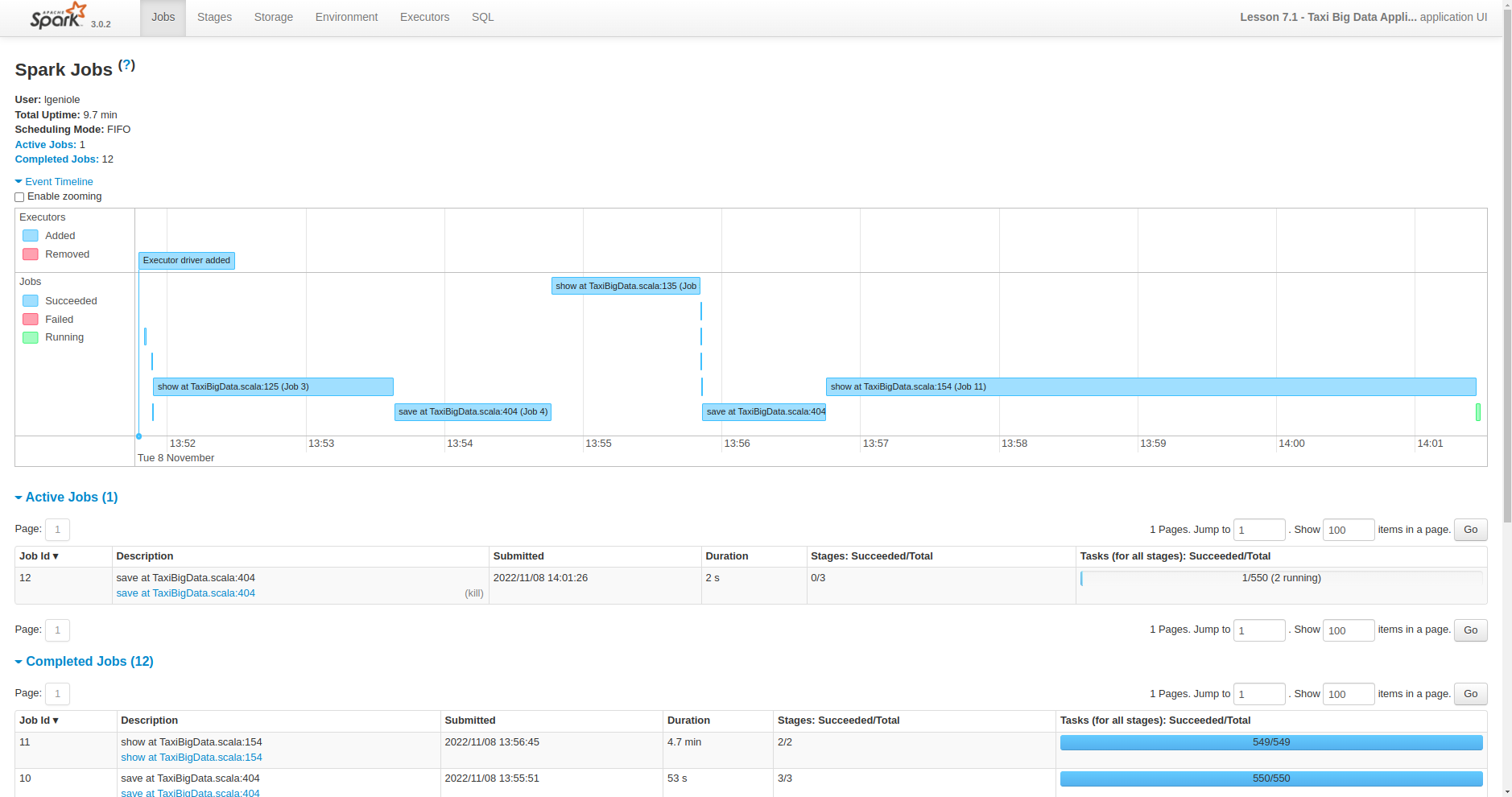

$ ./bin/spark-shell

This starts a web UI listening on localhost:4040.
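The cluster start command earlier passes the worker count through `--scale spark-worker=1`. As a minimal sketch, the hypothetical helper `scale_cmd` below (not part of the course repository) builds that command for a different worker count and only prints it, so it can be inspected before being run from the `spark-cluster` directory. It assumes the compose service is named `spark-worker`, as in the command above.

```shell
# Hypothetical helper: print the compose command for a given worker count.
# It does not start anything; it only echoes the command line.
scale_cmd() {
  workers="${1:-1}"   # default to 1 worker, as in the notes above
  case "$workers" in
    ''|*[!0-9]*)
      echo "worker count must be a non-negative integer" >&2
      return 1
      ;;
  esac
  printf 'docker compose up --scale spark-worker=%s\n' "$workers"
}

scale_cmd 3
```

Whether the compose file supports more than one worker without port conflicts depends on how the repository maps the worker ports, so check that before scaling up.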

The same bin directory contains other tools, including the R and Python shells:

$ /spark/bin/sparkR

$ /spark/bin/pyspark

$ /spark/bin/spark-submit

$ /spark/bin/beeline

$ /spark/bin/find-spark-home

$ /spark/bin/spark-class

$ /spark/bin/spark-class2.cmd

$ /spark/bin/run-example
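Not every image ships every launcher, so it can help to check which ones are present before using them. The sketch below is a hypothetical helper, `list_spark_tools`, that prints the launchers from the list above that exist and are executable in a given directory. The default path `/spark/bin` is taken from the commands above; the set of names checked is an assumption and can be adjusted.

```shell
# Hypothetical helper: list the launchers that exist and are executable
# in the given bin directory (defaults to /spark/bin, as used above).
list_spark_tools() {
  bin_dir="${1:-/spark/bin}"
  for tool in sparkR pyspark spark-submit beeline spark-shell spark-sql run-example; do
    if [ -x "$bin_dir/$tool" ]; then
      echo "$tool"
    fi
  done
}

# Prints nothing if the directory does not exist.
list_spark_tools
```

Inside the master container, calling `list_spark_tools` with no argument checks `/spark/bin`; pass another directory as the first argument to check a different installation.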