In this repo, we introduce two approaches to training transformers to capture semantic and lexical text representations for robust dense passage retrieval.

- Aggretriever: A Simple Approach to Aggregate Textual Representation for Robust Dense Passage Retrieval. Sheng-Chieh Lin, Minghan Li, and Jimmy Lin.

- A Dense Representation Framework for Lexical and Semantic Matching. Sheng-Chieh Lin and Jimmy Lin.

This repo contains three parts: (1) densify, (2) training (tevatron), and (3) retrieval. Our training code is mainly adapted from Tevatron with minor revisions.

pip install "torch>=1.7.0"

pip install transformers==4.15.0

pip install pyserini

pip install beir

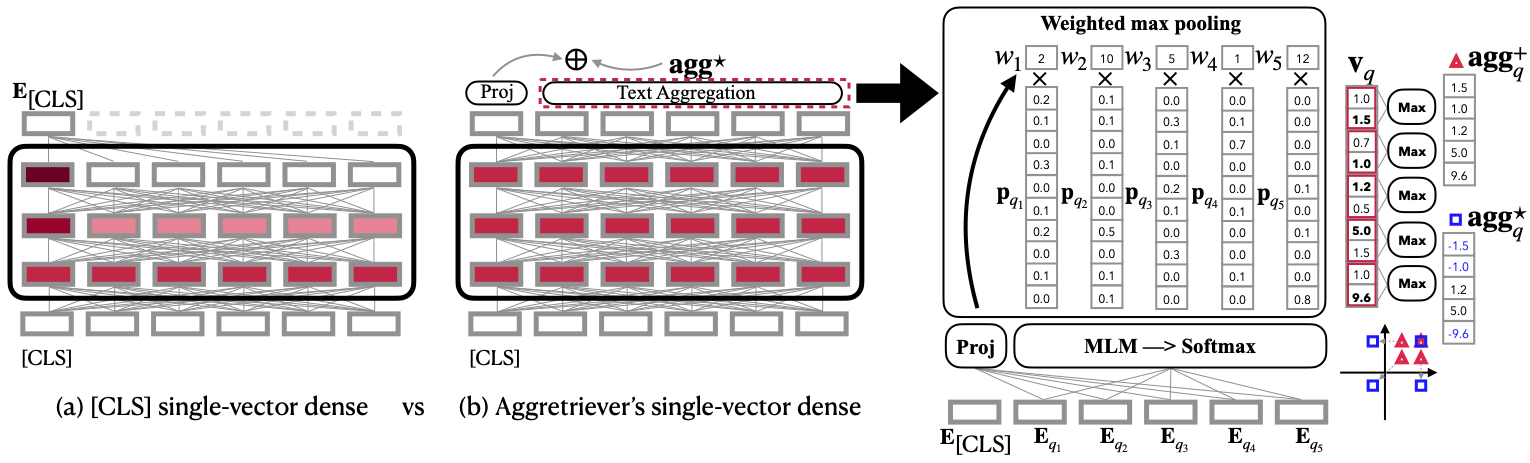

In this paper, we introduce a simple approach to aggregating token-level information into a single-vector dense representation. We provide instructions for model training and evaluation on the MS MARCO passage ranking dataset in the document. We also provide instructions for evaluation on the BEIR datasets in the document.
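To make the aggregation idea concrete, here is a toy, pure-Python sketch. It assumes per-token vocabulary weights have already been computed (for example from an MLM head), max-pools them over tokens, compresses the |V|-dimensional result by taking the maximum of each contiguous slice, and concatenates it with a [CLS] vector. The function names, the zero padding, and the slicing scheme are illustrative assumptions, not the repo's implementation.

```python
from typing import List


def aggregate_tokens(token_weights: List[List[float]]) -> List[float]:
    """Max-pool per-token vocabulary weights into one |V|-dim vector.

    token_weights[t][v] is the (assumed precomputed) weight that token t
    assigns to vocabulary entry v.
    """
    vocab_size = len(token_weights[0])
    return [max(tok[v] for tok in token_weights) for v in range(vocab_size)]


def slice_max(vec: List[float], n_slices: int) -> List[float]:
    """Compress vec to n_slices dims by max pooling contiguous slices."""
    size = -(-len(vec) // n_slices)  # ceil(|V| / n_slices)
    # Zero padding is safe only because weights are assumed non-negative.
    padded = vec + [0.0] * (size * n_slices - len(vec))
    return [max(padded[s * size:(s + 1) * size]) for s in range(n_slices)]


def aggregate_representation(
    cls_vec: List[float], token_weights: List[List[float]], n_slices: int
) -> List[float]:
    """Concatenate a [CLS] vector with the compressed lexical aggregate."""
    return cls_vec + slice_max(aggregate_tokens(token_weights), n_slices)


def dot(a: List[float], b: List[float]) -> float:
    """Plain inner product used to score query/passage vectors."""
    return sum(x * y for x, y in zip(a, b))


if __name__ == "__main__":
    weights = [[0.1, 0.0, 0.7, 0.2], [0.5, 0.3, 0.0, 0.1]]
    print(aggregate_tokens(weights))  # [0.5, 0.3, 0.7, 0.2]
    print(aggregate_representation([1.0, -1.0], weights, n_slices=2))  # [1.0, -1.0, 0.5, 0.7]
```

In this sketch, scoring reduces to a plain dot product between the query and passage vectors, so the result can be searched like any other single-vector dense representation.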

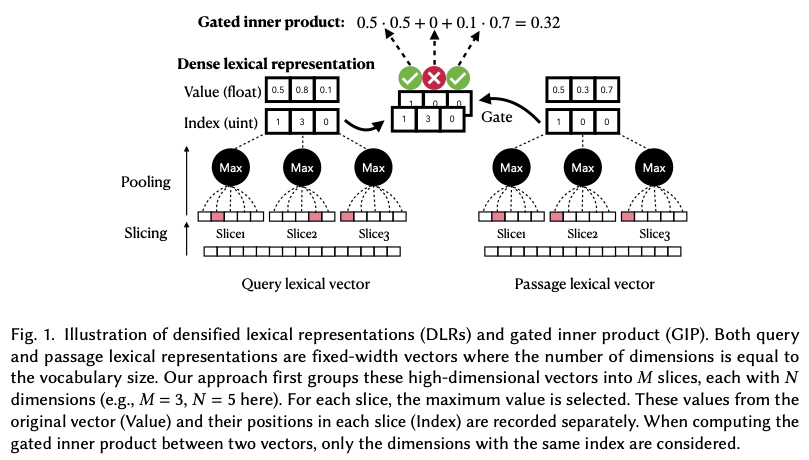

In this paper, we introduce a unified representation framework for lexical and semantic matching. We first describe how to use our framework to conduct retrieval with high-dimensional (lexical) representations and how to combine them with single-vector dense (semantic) representations for hybrid search.

We can densify any existing lexical matching model and conduct lexical matching on GPUs. In the document, we demonstrate how to conduct end-to-end BM25 and uniCOIL retrieval under our framework. A detailed description can be found in our paper.
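A minimal sketch of densifying a lexical vector, assuming non-negative term weights and contiguous vocabulary slices: each slice keeps its maximum weight and that weight's position within the slice, and two densified vectors are scored with a gated inner product that only multiplies values whose positions match. The names `densify` and `gated_inner_product` are hypothetical; see the paper and the densify code for the actual procedure.

```python
from typing import List, Tuple

Densified = Tuple[List[float], List[int]]  # (values, positions), one entry per slice


def densify(vec: List[float], n_slices: int) -> Densified:
    """Keep the max weight of each contiguous slice and its position in the slice."""
    size = -(-len(vec) // n_slices)  # ceil(|V| / n_slices)
    padded = vec + [0.0] * (size * n_slices - len(vec))  # weights assumed >= 0
    values, positions = [], []
    for s in range(n_slices):
        chunk = padded[s * size:(s + 1) * size]
        pos = max(range(size), key=chunk.__getitem__)  # first argmax on ties
        values.append(chunk[pos])
        positions.append(pos)
    return values, positions


def gated_inner_product(q: Densified, d: Densified) -> float:
    """Sum value products only for slices whose stored positions agree."""
    (qv, qp), (dv, dp) = q, d
    return sum(a * b for a, b, i, j in zip(qv, dv, qp, dp) if i == j)


if __name__ == "__main__":
    query = [0.0, 2.0, 0.0, 0.0, 1.0, 0.0]
    doc = [0.0, 3.0, 0.0, 0.0, 0.0, 4.0]
    q, d = densify(query, 2), densify(doc, 2)
    print(q)  # ([2.0, 1.0], [1, 1])
    print(gated_inner_product(q, d))  # 6.0
    print(sum(x * y for x, y in zip(query, doc)))  # 6.0, the exact sparse dot product
```

Under these assumptions, when every slice holds at most one non-zero weight, the gated score equals the exact sparse dot product, as in the example. When two terms collide in one slice, only the larger survives, so the score becomes an approximation.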

With the densified lexical representations, we can easily conduct lexical and semantic hybrid retrieval with independent neural models. A document for hybrid retrieval is coming soon.
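Hybrid retrieval with independent models is commonly done by linearly interpolating the two scores. The sketch below assumes you already have per-passage lexical scores (for example from densified BM25) and dense scores for one query; the weight `alpha`, the zero default for passages missing from one list, and the function name `hybrid_rank` are hypothetical choices for illustration, not the procedure the upcoming document will describe.

```python
from typing import Dict, List, Tuple


def hybrid_rank(
    lexical: Dict[str, float],
    dense: Dict[str, float],
    alpha: float = 1.0,
    k: int = 10,
) -> List[Tuple[str, float]]:
    """Rank passages by lexical + alpha * dense score (hypothetical fusion rule).

    A passage missing from one score dict contributes 0 for that component.
    Ties are broken by passage id so the output is deterministic.
    """
    fused = {
        pid: lexical.get(pid, 0.0) + alpha * dense.get(pid, 0.0)
        for pid in set(lexical) | set(dense)
    }
    return sorted(fused.items(), key=lambda item: (-item[1], item[0]))[:k]


if __name__ == "__main__":
    lex = {"p1": 6.0, "p2": 2.0}
    sem = {"p1": 0.5, "p3": 3.0}
    print(hybrid_rank(lex, sem))  # [('p1', 6.5), ('p3', 3.0), ('p2', 2.0)]
    print(hybrid_rank(lex, sem, alpha=2.0, k=2))  # [('p1', 7.0), ('p3', 6.0)]
```

Because lexical and dense scores usually live on different scales, a weight like `alpha` generally has to be tuned on held-out queries.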

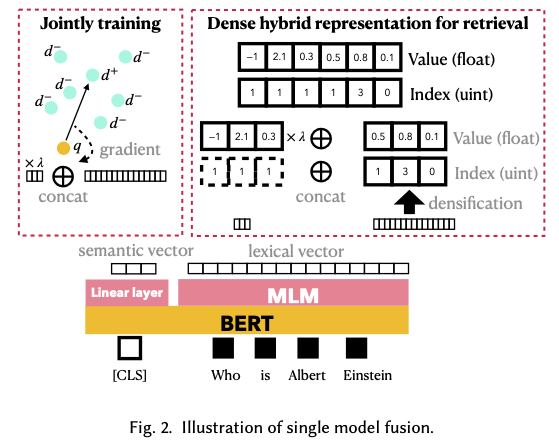

In our paper, we propose a single-model fusion approach: we train the lexical and semantic components of a single transformer, and at inference time we combine the densified lexical representations and the dense representations into dense hybrid representations. Instead of training the models yourself, you can also download our trained DeLADE-CLS-P, DeLADE-CLS, and DeLADE models and directly perform inference on the MS MARCO passage dataset (see document) or the BEIR datasets (see document).
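One way to picture a dense hybrid representation is as a single record holding the densified lexical part (values and slice positions) next to the dense [CLS] vector, scored as the gated inner product of the lexical parts plus the dot product of the dense parts. This is a sketch under the assumption of an unweighted sum; the class `DenseHybridRep` and its field names are hypothetical, not the repo's API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DenseHybridRep:
    """Hypothetical container for one query's or passage's hybrid representation."""

    lex_values: List[float]  # densified lexical weight per slice
    lex_positions: List[int]  # position of that weight within its slice
    dense: List[float]  # semantic ([CLS]) vector

    def lexical_score(self, other: "DenseHybridRep") -> float:
        # Gated inner product: only slices with matching positions contribute.
        return sum(
            a * b
            for a, b, i, j in zip(
                self.lex_values, other.lex_values, self.lex_positions, other.lex_positions
            )
            if i == j
        )

    def semantic_score(self, other: "DenseHybridRep") -> float:
        # Ordinary inner product between the dense vectors.
        return sum(a * b for a, b in zip(self.dense, other.dense))

    def score(self, other: "DenseHybridRep") -> float:
        # Assumed unweighted sum of the lexical and semantic components.
        return self.lexical_score(other) + self.semantic_score(other)


if __name__ == "__main__":
    q = DenseHybridRep([2.0, 1.0], [1, 1], [0.5, 0.5])
    p = DenseHybridRep([3.0, 4.0], [1, 2], [1.0, 1.0])
    print(q.lexical_score(p), q.semantic_score(p), q.score(p))  # 6.0 1.0 7.0
```

Because both parts live in one fixed-size record, a single index can store a passage's lexical and semantic signals together, and one scoring pass covers both.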