MAXIM: Multi-Axis MLP for Image Processing (CVPR 2022 Oral)

This repo is a PyTorch re-implementation of the CVPR 2022 Oral paper "MAXIM: Multi-Axis MLP for Image Processing" by Zhengzhong Tu, Hossein Talebi, Han Zhang, Feng Yang, Peyman Milanfar, Alan Bovik, and Yinxiao Li.

Google Research, University of Texas at Austin

Disclaimer: This repo is currently a work in progress. No timelines are guaranteed.

- April 12, 2022: Initialized the PyTorch repo for MAXIM.

- March 29, 2022: The official JAX code and models have been released at google-research/maxim.

- March 29, 2022: MAXIM is selected for an ORAL presentation at CVPR 2022 🎉

- March 3, 2022: Paper accepted at CVPR 2022.

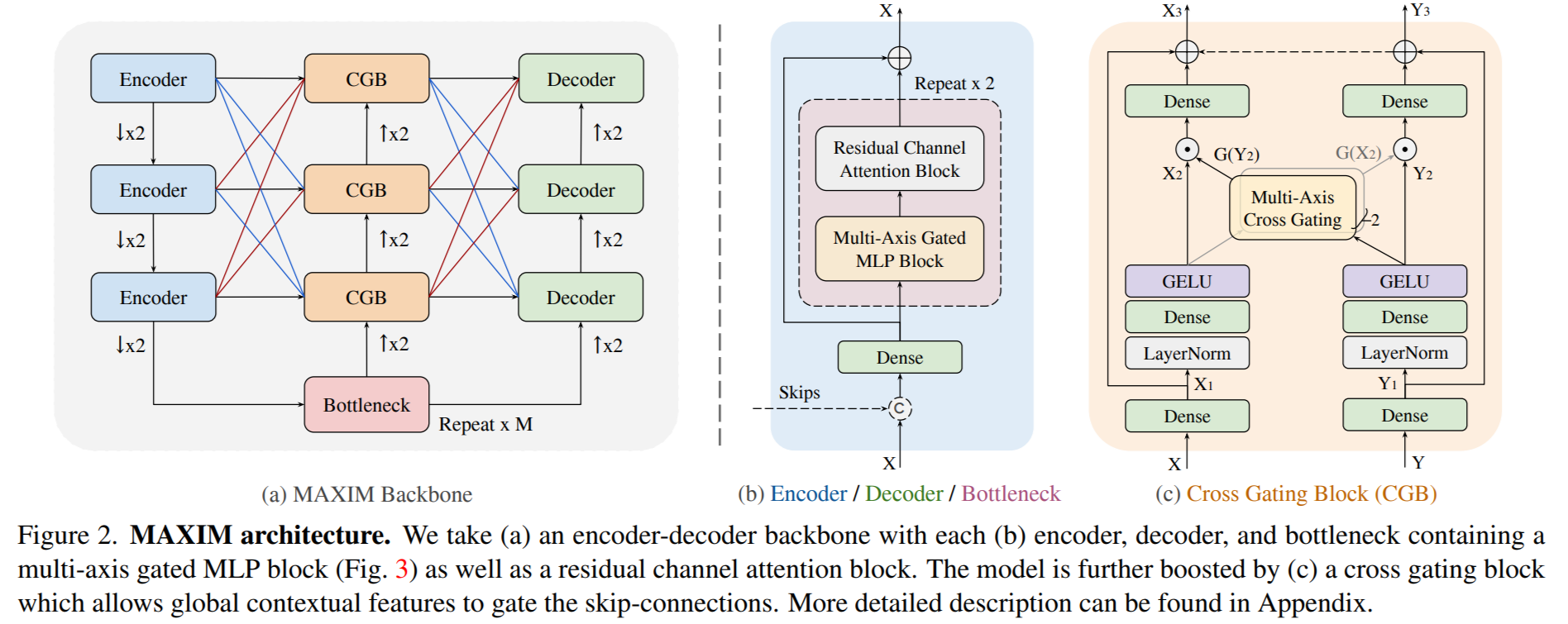

Abstract: Recent progress on Transformers and multi-layer perceptron (MLP) models provides new network architectural designs for computer vision tasks. Although these models proved to be effective in many vision tasks such as image recognition, there remain challenges in adapting them for low-level vision. The inflexibility to support high-resolution images and limitations of local attention are perhaps the main bottlenecks. In this work, we present a multi-axis MLP based architecture called MAXIM, which can serve as an efficient and flexible general-purpose vision backbone for image processing tasks. MAXIM uses a UNet-shaped hierarchical structure and supports long-range interactions enabled by spatially-gated MLPs. Specifically, MAXIM contains two MLP-based building blocks: a multi-axis gated MLP that allows for efficient and scalable spatial mixing of local and global visual cues, and a cross-gating block, an alternative to cross-attention, which accounts for cross-feature conditioning. Both modules are exclusively based on MLPs, but also benefit from being both global and "fully-convolutional", two properties that are desirable for image processing. Our extensive experimental results show that the proposed MAXIM model achieves state-of-the-art performance on more than ten benchmarks across a range of image processing tasks, including denoising, deblurring, deraining, dehazing, and enhancement, while requiring fewer or comparable numbers of parameters and FLOPs relative to competitive models.
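The abstract describes the multi-axis gated MLP only at a high level. The following is a minimal NumPy sketch of the idea as we understand it from the paper: the channels are split in half, one half is mixed locally inside fixed b×b windows ("block" branch) and the other half is mixed globally across a fixed d×d grid ("grid" branch), each with a gMLP-style spatial gating unit. This is not this repository's PyTorch code. The helper names (`block_images`, `unblock_images`, `spatial_gating`, `multi_axis_gating`), the random weights, and the default 2×2 block and grid sizes are illustrative assumptions, and the channel projections, GELU, and residual connections of the full block are omitted. The reshape/transpose calls map directly onto `torch.reshape` and `Tensor.permute`.

```python
import numpy as np


def block_images(x, fh, fw):
    """(N, H, W, C) -> (N, gh*gw, fh*fw, C) using non-overlapping fh x fw patches.

    Axis 1 indexes patches (the "grid" axis); axis 2 indexes pixels inside a patch
    (the "block" axis).
    """
    n, h, w, c = x.shape
    assert h % fh == 0 and w % fw == 0, "H and W must be divisible by the patch size"
    gh, gw = h // fh, w // fw
    x = x.reshape(n, gh, fh, gw, fw, c).transpose(0, 1, 3, 2, 4, 5)
    return x.reshape(n, gh * gw, fh * fw, c)


def unblock_images(x, h, w, fh, fw):
    """Inverse of block_images: (N, gh*gw, fh*fw, C) -> (N, H, W, C)."""
    n, _, _, c = x.shape
    gh, gw = h // fh, w // fw
    x = x.reshape(n, gh, gw, fh, fw, c).transpose(0, 1, 3, 2, 4, 5)
    return x.reshape(n, h, w, c)


def spatial_gating(x, weight, axis):
    """gMLP-style gating: split channels into (u, v), mix v along `axis`, return u * v."""
    u, v = np.split(x, 2, axis=-1)
    # LayerNorm over channels (no learned scale/shift in this sketch).
    v = (v - v.mean(-1, keepdims=True)) / np.sqrt(v.var(-1, keepdims=True) + 1e-6)
    # Bias-free dense layer applied along the chosen token axis.
    v = np.moveaxis(np.moveaxis(v, axis, -1) @ weight, -1, axis)
    return u * v


def multi_axis_gating(x, block=(2, 2), grid=(2, 2), seed=0):
    """Hypothetical sketch: local (block) + global (grid) gating on two channel halves."""
    rng = np.random.default_rng(seed)  # random weights stand in for learned ones
    n, h, w, c = x.shape
    x_local, x_global = np.split(x, 2, axis=-1)

    # Local branch: fixed b x b windows; tokens are mixed within each window.
    bh, bw = block
    wb = rng.standard_normal((bh * bw, bh * bw)) * 0.02
    y_local = spatial_gating(block_images(x_local, bh, bw), wb, axis=2)
    y_local = unblock_images(y_local, h, w, bh, bw)

    # Global branch: fixed d x d grid; tokens at the same offset in every
    # (H/d) x (W/d) cell are mixed, giving a sparse, dilated global interaction.
    gh, gw = grid
    fh, fw = h // gh, w // gw
    wg = rng.standard_normal((gh * gw, gh * gw)) * 0.02
    y_global = spatial_gating(block_images(x_global, fh, fw), wg, axis=1)
    y_global = unblock_images(y_global, h, w, fh, fw)

    # No channel projections in this sketch, so the output has C // 2 channels.
    return np.concatenate([y_local, y_global], axis=-1)
```

On an 8×8 input with a 2×2 grid, the global branch mixes pixel (0, 0) with (0, 4), (4, 0), and (4, 4), while the local branch mixes it only with the rest of its 2×2 window. Because the mixing matrices are sized by the fixed window (b²) and grid (d²) rather than by H×W, the same weights apply to any input whose height and width are divisible by those sizes, and the cost in this sketch grows linearly with the number of pixels.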

TBD

TBD

Should you find this repository useful, please consider citing:

    @article{tu2022maxim,
      title={MAXIM: Multi-Axis MLP for Image Processing},
      author={Tu, Zhengzhong and Talebi, Hossein and Zhang, Han and Yang, Feng and Milanfar, Peyman and Bovik, Alan and Li, Yinxiao},
      journal={CVPR},
      year={2022},
    }

This repository is built on Restormer. Our work is also inspired by HiT, MPRNet, and HINet.