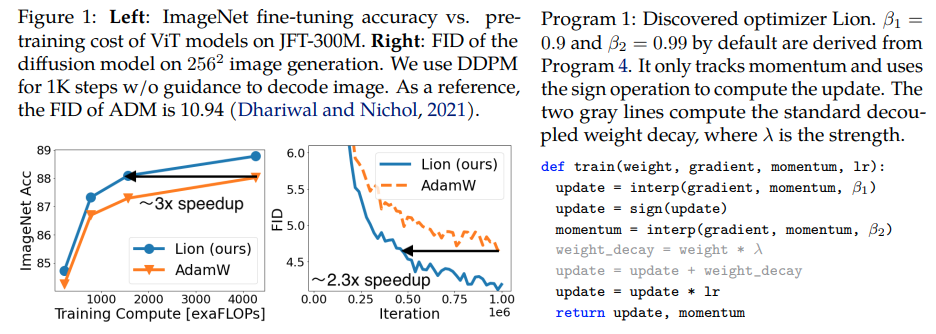

Lion, EvoLved Sign Momentum, a new optimizer discovered by Google Brain that is purportedly better than Adam(w), in PyTorch. This is nearly a straight copy from here, with a few minor modifications.

It is so simple, we may as well get it accessible and used asap by everyone to train some great models, if it really works 🤞
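As a rough illustration of how little there is to the algorithm, the update described in the paper can be sketched in plain Python. This is not the code from this repository: `sign` and `lion_step` are hypothetical names, Python lists stand in for tensors, and the defaults `beta1 = 0.9` and `beta2 = 0.99` are assumed to match the paper.

```python
def sign(x: float) -> float:
    """Return -1.0, 0.0, or 1.0 depending on the sign of x."""
    return float((x > 0) - (x < 0))


def lion_step(params, grads, momentum, lr=1e-4, beta1=0.9, beta2=0.99, weight_decay=0.0):
    """Apply one Lion update to plain Python lists (illustrative sketch only).

    Returns a (new_params, new_momentum) tuple; inputs are left unmodified.
    """
    new_params, new_momentum = [], []
    for p, g, m in zip(params, grads, momentum):
        # Interpolate between momentum and gradient, then keep only the sign.
        update = sign(beta1 * m + (1.0 - beta1) * g)
        # Decoupled weight decay is added to the sign update before scaling by lr.
        new_params.append(p - lr * (update + weight_decay * p))
        # The stored momentum is tracked with a second, slower coefficient.
        new_momentum.append(beta2 * m + (1.0 - beta2) * g)
    return new_params, new_momentum


params, momentum = lion_step(
    params=[1.0, -2.0, 0.5],
    grads=[0.3, -0.001, 0.0],
    momentum=[0.0, 0.0, 0.0],
    lr=0.1,
)
print(params, momentum)
```

Note that the first two coordinates both move by exactly `lr`, even though their gradients differ by a factor of 300. Only the sign of the interpolated value matters, so the only state the optimizer needs per parameter is the single momentum buffer.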

Regarding the learning rate, the authors write in section 5: "Based on our experience, a suitable learning rate for Lion is typically 10x smaller than that for AdamW, although sometimes a learning rate that is 3x smaller may perform slightly better."

At the end of section 1, they also note that a larger decoupled weight decay is needed: "Users should be aware that the uniform update calculated using the sign function usually yields a larger norm compared to those generated by SGD and adaptive methods. Therefore, Lion requires a smaller learning rate lr, and a larger decoupled weight decay λ to maintain the effective weight decay strength."
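One way to read these two quotes together is as a starting point for converting AdamW hyperparameters. The sketch below assumes that "effective weight decay strength" means the product `lr * weight_decay`, so the weight decay is scaled up by the same factor the learning rate is scaled down. That reading is an interpretation, not something the quotes spell out, and `adamw_to_lion_hparams` is a hypothetical helper that is not part of `lion-pytorch`.

```python
def adamw_to_lion_hparams(lr: float, weight_decay: float, factor: float = 10.0):
    """Suggest starting Lion hyperparameters from AdamW ones (hypothetical helper).

    The learning rate is divided by `factor` and the weight decay multiplied by it,
    which keeps the product lr * weight_decay unchanged (an assumed interpretation
    of "effective weight decay strength").
    """
    if factor <= 0:
        raise ValueError("factor must be positive")
    return lr / factor, weight_decay * factor


# The quoted rule of thumb mentions both 3x and 10x, so try each as a candidate.
adamw_lr, adamw_wd = 3e-4, 1e-2  # example AdamW settings, chosen arbitrarily
for factor in (3.0, 10.0):
    lion_lr, lion_wd = adamw_to_lion_hparams(adamw_lr, adamw_wd, factor)
    print(f"factor {factor:g}: lr = {lion_lr:.2e}, weight_decay = {lion_wd:.2e}")
```

These values are only candidates for a sweep. Neither factor is guaranteed to be the best setting for a given model.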

Update: seems to work for my local enwik8 autoregressive language modeling

Update 2: in experiments, it seems much worse than Adam if the learning rate is held constant

Update 3: dividing the learning rate by 3, I am seeing better early results than Adam. Maybe Adam has been dethroned, after nearly a decade.

Update 4: using the 10x smaller learning rate rule of thumb from the paper resulted in the worst run, so I guess it still takes a bit of tuning

```bash
$ pip install lion-pytorch
```

```python
# toy model

import torch
from torch import nn

model = nn.Linear(10, 1)

# import Lion and instantiate with parameters

from lion_pytorch import Lion

opt = Lion(model.parameters(), lr = 1e-4)

# forward and backwards

loss = model(torch.randn(10))
loss.backward()

# optimizer step

opt.step()
opt.zero_grad()
```

To use a fused kernel for updating the parameters, first `pip install triton -U --pre`, then

```python
opt = Lion(
    model.parameters(),
    lr = 1e-4,
    use_triton = True # set this to True to use cuda kernel w/ Triton lang (Tillet et al)
)
```

- Stability.ai for the generous sponsorship to work and open source cutting edge artificial intelligence research

```bibtex
@misc{https://doi.org/10.48550/arxiv.2302.06675,
    url       = {https://arxiv.org/abs/2302.06675},
    author    = {Chen, Xiangning and Liang, Chen and Huang, Da and Real, Esteban and Wang, Kaiyuan and Liu, Yao and Pham, Hieu and Dong, Xuanyi and Luong, Thang and Hsieh, Cho-Jui and Lu, Yifeng and Le, Quoc V.},
    title     = {Symbolic Discovery of Optimization Algorithms},
    publisher = {arXiv},
    year      = {2023}
}
```

```bibtex
@article{Tillet2019TritonAI,
    title   = {Triton: an intermediate language and compiler for tiled neural network computations},
    author  = {Philippe Tillet and H. Kung and D. Cox},
    journal = {Proceedings of the 3rd ACM SIGPLAN International Workshop on Machine Learning and Programming Languages},
    year    = {2019}
}
```