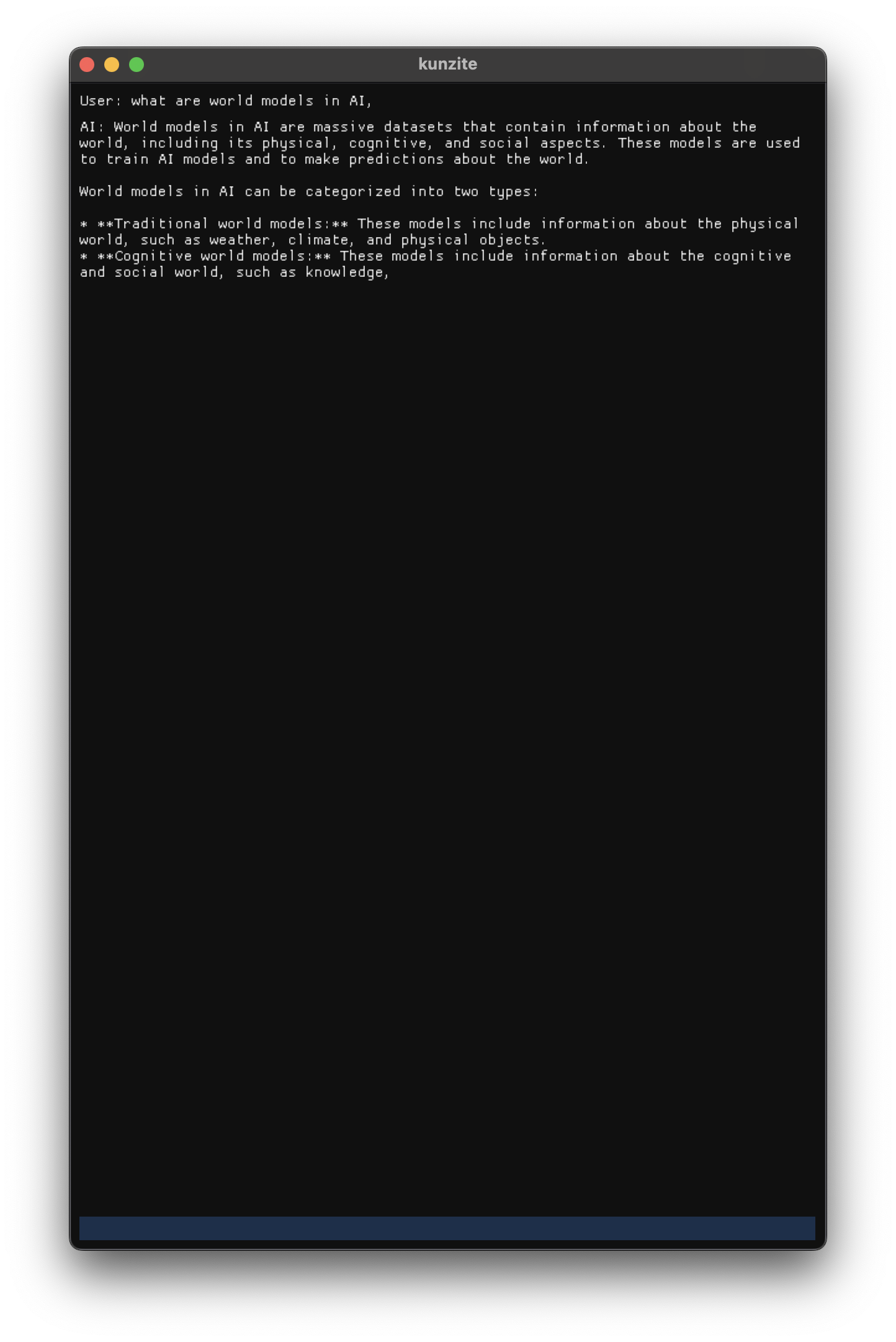

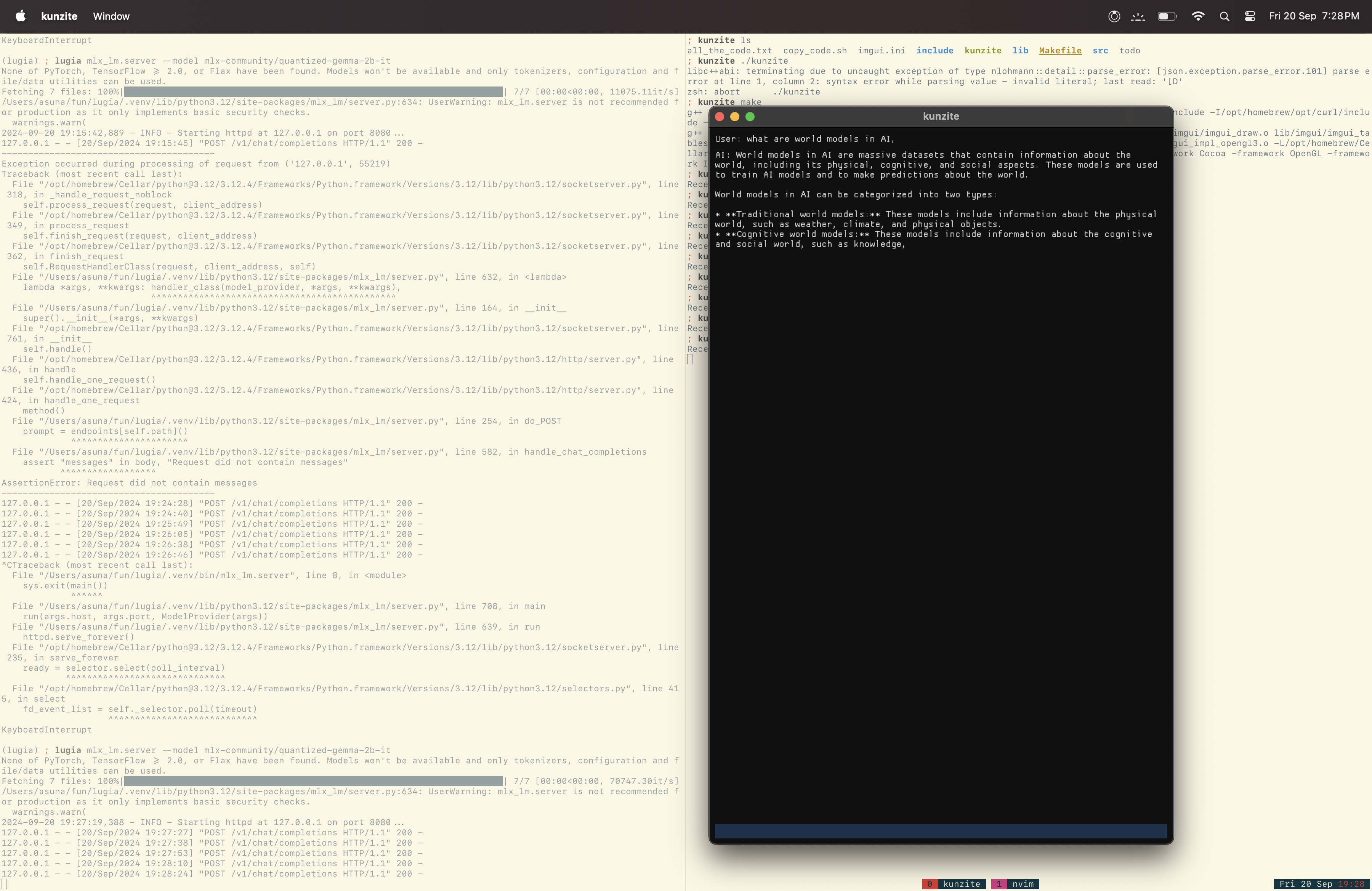

Kunzite is a lightweight, bloat-free GUI application for local AI chat, powered by the lugia inference engine (built on MLX).

- Local AI: Run AI chat completions entirely on your device

- MLX Inference Engine: Utilize Apple's MLX framework for fast, efficient inference

- On-Device Inference: Keeps your data private and works offline

- Bloat-Free GUI: Minimalist interface for distraction-free work

- Clone the repository

- Install the dependencies: Dear ImGui and lugia

- Run `make` to build the project

- Execute `./kunzite` to start the application

Kunzite uses a simple client-server architecture:

- GUI: Implemented with Dear ImGui for a lightweight, cross-platform interface

- LLM Client: Handles communication with the local inference server

- MLX Server: Runs the language model with the lugia inference engine, which is built on the MLX framework
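The component split above can be sketched in C++ roughly as follows. This is a minimal illustration under stated assumptions, not Kunzite's actual code: `ChatMessage`, `InferenceServer`, `EchoServer`, and `LlmClient` are hypothetical names, and none of them mirror lugia's real API. The README does not say how the client reaches the server, so this sketch uses a direct in-process call, and an echo server stands in for the MLX/lugia backend so the example runs without a model.

```cpp
#include <memory>
#include <string>
#include <utility>
#include <vector>

// One turn of a chat conversation (hypothetical type for this sketch).
struct ChatMessage {
    std::string role;     // "user" or "assistant"
    std::string content;
};

// Hypothetical interface for the local inference server. In Kunzite this
// role is played by the MLX server running lugia; this is NOT lugia's API.
class InferenceServer {
public:
    virtual ~InferenceServer() = default;
    // Produce the assistant's reply given the full conversation so far.
    virtual std::string complete(const std::vector<ChatMessage>& history) = 0;
};

// Stand-in server that echoes the last message, so the sketch runs
// without loading a model.
class EchoServer : public InferenceServer {
public:
    std::string complete(const std::vector<ChatMessage>& history) override {
        if (history.empty()) return "";
        return "echo: " + history.back().content;
    }
};

// LLM client: owns the conversation history and forwards it to the server.
class LlmClient {
public:
    explicit LlmClient(std::unique_ptr<InferenceServer> server)
        : server_(std::move(server)) {}

    // Called by the GUI when the user submits a prompt; returns the reply
    // and records both turns in the history.
    std::string send(const std::string& prompt) {
        history_.push_back({"user", prompt});
        std::string reply = server_->complete(history_);
        history_.push_back({"assistant", reply});
        return reply;
    }

    // Read-only view of the conversation, e.g. for the GUI to render.
    const std::vector<ChatMessage>& history() const { return history_; }

private:
    std::unique_ptr<InferenceServer> server_;
    std::vector<ChatMessage> history_;
};
```

In this sketch the GUI layer would only call `client.send(prompt)` when the user submits text and then draw `client.history()`; replacing `EchoServer` with a real backend touches only the `InferenceServer` implementation, which is the point of keeping the client and server behind a narrow interface.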

This project is licensed under the MIT License.