A pure Julia machine learning framework.

Call for help. MLJ is getting attention but its small project team needs help to ensure its success. This depends crucially on:

- Existing and developing ML algorithms implementing the MLJ model interface
- Improvements to existing but poorly maintained Julia ML algorithms

The MLJ model interface is now relatively stable and well-documented, and the core team is happy to respond to issue requests for assistance. Please click here for more details on contributing.

MLJ is presently supported by a small Alan Turing Institute grant and is looking for new funding sources to grow the project.

MLJ aims to be a flexible framework for combining and tuning machine learning models, written in the high performance, rapid development, scientific programming language, Julia.

The MLJ project is partly inspired by MLR.

A list of models implementing the MLJ interface: MLJRegistry

In the Julia REPL:

]add MLJ

add MLJModels

A docker image with installation instructions is also available.
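For scripted or non-interactive setups, the same two packages can be installed through the functional `Pkg` API instead of the package REPL mode. This is a minimal sketch that assumes both packages are available from a registry known to your Julia installation:

```julia
# Functional equivalent of the `]add` commands shown in the REPL instructions.
using Pkg

Pkg.add("MLJ")        # core framework
Pkg.add("MLJModels")  # model interfaces for third-party packages

# Loading the package confirms that the installation succeeded.
using MLJ
```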

- Automated tuning of hyperparameters, including composite models with nested parameters. Tuning implemented as a wrapper, allowing composition with other meta-algorithms. ✔
- Option to tune hyperparameters using gradient descent and automatic differentiation (for learning algorithms written in Julia).
- Data agnostic: Train models on any data supported by the Tables.jl interface. ✔
- Intuitive syntax for building arbitrarily complicated learning networks. ✔
- Learning networks can be exported as self-contained composite models ✔, but common networks (e.g., linear pipelines, stacks) come ready to plug-and-play.
- Performant parallel implementation of large homogeneous ensembles of arbitrary models (e.g., random forests). ✔
- Task interface matches machine learning problem to available models. ✔
- Benchmarking a battery of assorted models for a given task.
- Automated estimates of CPU and memory requirements for given task/model.

See here.

- The ScikitLearn SVM models will not work under Julia 1.0.3 but do work under Julia 1.1 due to Issue #29208.
- When MLJRegistry is updated with new models you may need to force a new precompilation of MLJ to make new models available.

Get started here, or take the MLJ tour.

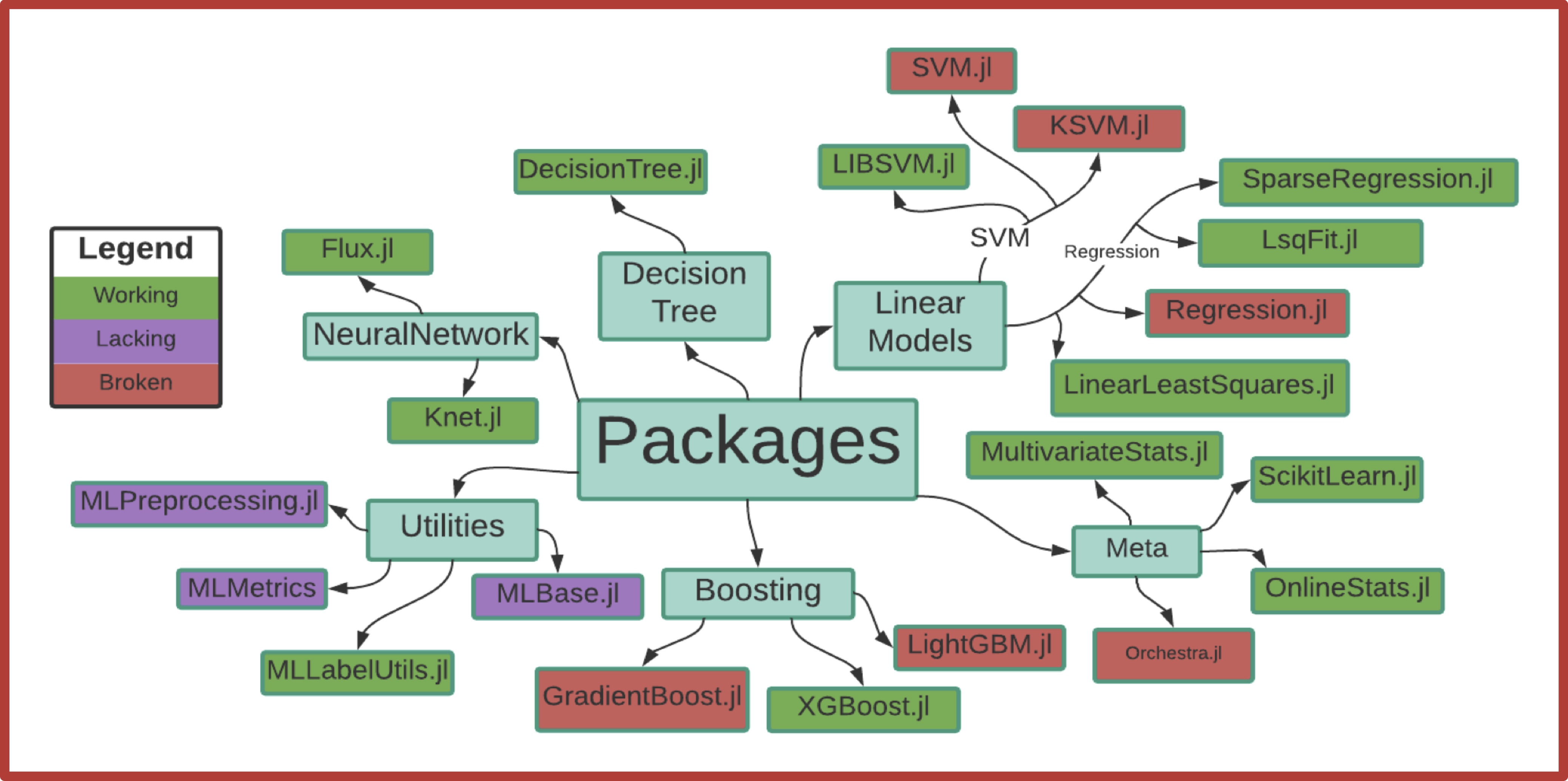

Predecessors of the current package are AnalyticalEngine.jl, Orchestra.jl, and Koala.jl. Work continued as a research study group at the University of Warwick, beginning with a review of the ML modules available in Julia at the time (in-depth, overview).

Further work culminated in the first MLJ proof-of-concept