🚙📷 Drive&Segment: Unsupervised Semantic Segmentation of Urban Scenes via Cross-modal Distillation (ECCV'22 oral)

This repository hosts the inference code for Drive&Segment, a method for unsupervised semantic segmentation of urban scenes.

The paper was accepted to ECCV'22 as an oral presentation (2.7% of submissions).

Drive&Segment: Unsupervised Semantic Segmentation of Urban Scenes via Cross-modal Distillation

Antonin Vobecky, David Hurych, Oriane Siméoni, Spyros Gidaris, Andrei Bursuc, Patrick Pérez, and Josef Sivic

arXiv preprint (arXiv 2203.11160, full paper)


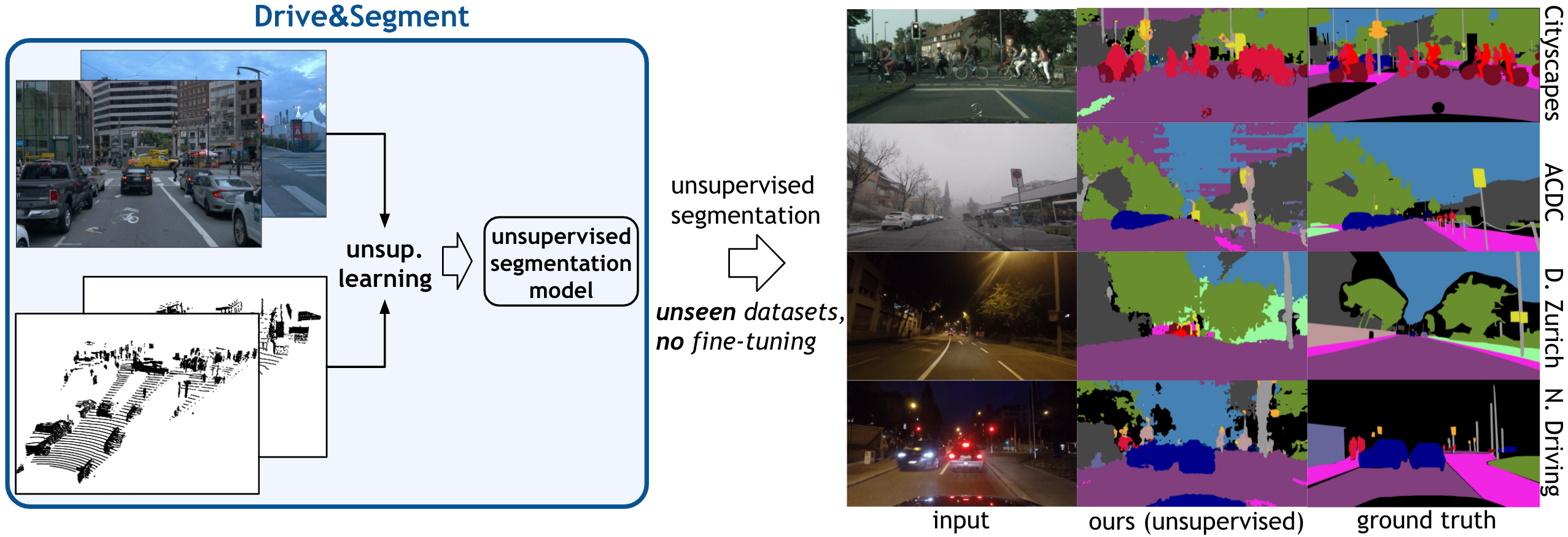

- 🚫🔬 Unsupervised semantic segmentation: Drive&Segment learns semantic segmentation of urban scenes without any manual annotation, using only raw, non-curated data collected by cars equipped with 📷 cameras and 💥 LiDAR sensors.

- 📷💥 Multi-modal training: At training time, our method takes 📷 images and 💥 LiDAR scans as input and learns a semantic segmentation model without using manual annotations.

- 📷 Image-only inference: At inference time, Drive&Segment takes only images as input.

- 🏆 State-of-the-art performance: Our best single model, based on the Segmenter architecture, achieves 21.8% mIoU on Cityscapes (without any fine-tuning).

- 🚀 Gradio Application: We provide an interactive Gradio application so that everyone can try our model.

Example of pseudo segmentation.

Two examples of pseudo segmentation mapped to the 19 ground-truth classes of the Cityscapes dataset using the Hungarian algorithm.

Please refer to requirements.txt for the required packages.

To create and activate a conda environment with the required packages, run:

conda create --name DaS --file requirements.txt

conda activate DaS

We provide our Segmenter model trained on the nuScenes dataset.

Run

python3 inference.py --input-path [path to image/folder with images] --output-dir [where to save the outputs] --cuda

where:

- --input-path specifies either a path to a single image or a path to a folder with images,
- --output-dir (optional) specifies the output directory, and
- --cuda is a flag denoting whether to run the code on the GPU (strongly recommended).

Example: python3 inference.py --input-path sources/img1.jpeg --output-dir ./outputs --cuda
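The `--input-path` behaviour described above (a single image or a folder of images) can be sketched with the standard library. This is only an illustration of how such a script might parse its options and collect its inputs, not the actual contents of `inference.py`. The helper names `build_parser` and `collect_images`, the default output directory, and the list of accepted file extensions are all assumptions.

```python
import argparse
from pathlib import Path

# Hypothetical set of accepted image extensions (an assumption, not from the repo).
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def build_parser():
    """Build a parser exposing the three options documented above."""
    parser = argparse.ArgumentParser(description="Sketch of an inference CLI")
    parser.add_argument("--input-path", required=True,
                        help="path to a single image or to a folder with images")
    parser.add_argument("--output-dir", default="./outputs",  # assumed default
                        help="directory where the outputs are saved")
    parser.add_argument("--cuda", action="store_true",
                        help="run on the GPU")
    return parser


def collect_images(input_path):
    """Return a sorted list of image paths for a single file or a folder."""
    path = Path(input_path)
    if path.is_file():
        return [path]
    if path.is_dir():
        return sorted(p for p in path.iterdir()
                      if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS)
    raise FileNotFoundError(f"No such file or directory: {input_path}")


# Parse an explicit argument list instead of sys.argv for demonstration.
args = build_parser().parse_args(["--input-path", "sources/img1.jpeg", "--cuda"])
```

With this sketch, `args.input_path` holds the given path, `args.cuda` is `True`, and `args.output_dir` falls back to the assumed default because the option was omitted.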

We provide weights and config files (CPU / GPU) for the Segmenter model trained on the nuScenes dataset.

The weights and config files are downloaded automatically when running inference.py. If you prefer to download them manually, please place them in the ./weights folder.
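A "download only if missing" check of the kind described above could look like the following sketch. It is not the repository's implementation: the helper name `ensure_file` and the `fetch` callback are hypothetical, and the real download location is deliberately left out.

```python
from pathlib import Path


def ensure_file(path, fetch):
    """Return `path`, calling `fetch(path)` first only if the file is missing.

    `fetch` is any callable that writes the file to `path`,
    for example a function that downloads it (hypothetical here).
    """
    path = Path(path)
    if not path.exists():
        # Create the target folder (e.g. ./weights) before fetching.
        path.parent.mkdir(parents=True, exist_ok=True)
        fetch(path)
    return path
```

Under this scheme, files placed manually in the ./weights folder are found on the first check, so `fetch` is never called for them.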

Due to the Waymo Open dataset license terms, we cannot openly share the trained weights. If you are interested in using the model trained on the Waymo Open dataset, please register at the Waymo Open and send the confirmation of your agreement to the license terms to the authors (antonin (dot) vobecky (at) cvut (dot) cz).

In the table below, we report the results of our best model (Segmenter trained on the Waymo Open dataset) evaluated in the unsupervised setup (i.e., the mapping between pseudo-classes and ground-truth classes is obtained with the Hungarian algorithm).

| Dataset | mIoU (%) |
|---|---|
| Cityscapes (19 cls) | 21.8 |
| Cityscapes (27 cls) | 15.3 |
| Dark Zurich | 14.2 |
| Nighttime Driving | 18.9 |
| ACDC (night) | 13.8 |
| ACDC (fog) | 14.5 |
| ACDC (rain) | 14.9 |
| ACDC (snow) | 14.6 |
| ACDC (mean) | 16.7 |
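The unsupervised evaluation described above (Hungarian matching between pseudo-classes and ground-truth classes, followed by mIoU) can be sketched as follows. This is a minimal illustration, not the authors' evaluation code. It assumes as many pseudo-classes as ground-truth classes, no ignore label, and a matching that maximizes the summed per-class IoU; the criterion used in the paper may differ. The function names are hypothetical, and the matching relies on SciPy's `linear_sum_assignment`.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def confusion_matrix(pred, gt, num_classes):
    """Count pixels for every (pseudo-class, ground-truth class) pair."""
    pred = np.asarray(pred).ravel()
    gt = np.asarray(gt).ravel()
    index = pred * num_classes + gt
    counts = np.bincount(index, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)


def hungarian_miou(pred, gt, num_classes):
    """Match pseudo-classes to ground-truth classes, then compute mIoU.

    Returns (mapping, miou), where mapping[i] is the ground-truth class
    assigned to pseudo-class i.
    """
    conf = confusion_matrix(pred, gt, num_classes)
    # IoU of every (pseudo-class, ground-truth class) pair.
    pred_area = conf.sum(axis=1, keepdims=True)
    gt_area = conf.sum(axis=0, keepdims=True)
    union = pred_area + gt_area - conf
    iou = conf / np.maximum(union, 1)  # pairs with an empty union get IoU 0
    # One-to-one assignment maximizing the total IoU (assumed criterion).
    rows, cols = linear_sum_assignment(iou, maximize=True)
    mapping = np.empty(num_classes, dtype=np.int64)
    mapping[rows] = cols
    return mapping, float(iou[rows, cols].mean())


# Toy example: the prediction is a pure relabeling of the ground truth.
gt = np.array([[0, 0, 1], [1, 2, 2]])
pred = np.array([[1, 1, 2], [2, 0, 0]])
mapping, miou = hungarian_miou(pred, gt, num_classes=3)
print(mapping.tolist(), miou)  # [2, 0, 1] 1.0
```

In the toy example the pseudo-labels differ from the ground truth only by a permutation of class IDs, so the matching recovers that permutation and the mIoU is 1.0.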

If this project helps your research, please consider citing our paper. The BibTeX reference is as follows.

@inproceedings{vobecky2022drivesegment,
  title={Drive&Segment: Unsupervised Semantic Segmentation of Urban Scenes via Cross-modal Distillation},
  author={Antonin Vobecky and David Hurych and Oriane Siméoni and Spyros Gidaris and Andrei Bursuc and Patrick Pérez and Josef Sivic},
  booktitle={Proceedings of the European Conference on Computer Vision {(ECCV)}},
  month={October},
  year={2022}
}