TRex is a traffic generator for Stateful and Stateless use cases.

Traditionally, network infrastructure devices have been tested using commercial traffic generators, while the performance was measured using metrics like packets per second (PPS) and No Drop Rate (NDR). As the network infrastructure functionality has become more complex, stateful traffic generators have become necessary in order to test with more realistic application traffic pattern scenarios. Realistic and Stateful traffic generators are needed in order to:

- Test and provide more realistic performance numbers
- Design and architect SW and HW based on realistic use cases

Current challenges:

- Cost: Commercial stateful traffic generators are expensive
- Scale: Bandwidth does not scale up well with feature complexity
- Standardization: Lack of standardization of traffic patterns and methodologies
- Flexibility: Commercial tools do not allow agility when flexibility and changes are needed

Implications:

- High capital expenditure (CapEx) spent by different teams
- Testing at low scale and extrapolating became common practice; it is not accurate and hides real-life bottlenecks and quality issues
- Different feature/platform teams use different benchmark and results methodologies
- Delays in development and testing due to dependency on testing tool features
- Resource and effort investment in developing different ad hoc tools and test methodologies

TRex addresses these problems through an innovative and extendable software implementation, leveraging standard and open software and running on COTS x86/ARM servers.

- Fueled by DPDK
- Generates L3-7 traffic and provides in one tool the capabilities provided by commercial tools
- Stateful/Stateless traffic generator
- Scales to 200Gb/sec
- Python automation API
- Low cost
- Virtualization support. TRex can be used in a fully virtual environment without physical NICs, with the following example use cases:
  - Amazon AWS
  - TRex on your laptop
  - Docker
  - Self-contained packaging
- Cisco Pioneer Award Winner 2015
- Supports physical DPDK 1/2.5/10/25/40/50/100Gbps interfaces (Broadcom/Intel/Mellanox/Cisco VIC/Napatech/Amazon ENA)
- Virtualization interfaces support (virtio/VMXNET3/E1000)
- SR-IOV support for best performance

Stateful mode is for testing stateful features that inspect the traffic.

- High scale of realistic traffic (number of clients, number of servers, bandwidth)
- Latency/jitter measurements
- Flow ordering checks
- NAT, PAT dynamic translation learning
- Learn TCP SYN sequence randomization - vASA/Firepower use case
- Cluster mode for Controller tests
- IPv6 inline replacement
- Some cross-flow support (e.g. RTSP/SIP)

Stateless mode is for testing stateless features that do routing/switching, e.g. Cisco VPP/OVS. It is more packet based.

-

Large-scale - Supports about 10-30 million packets per second (Mpps) per core, scalable with the number of cores

-

Profile can support multiple streams, scalable to 10K parallel streams

-

Supported for each stream:

-

Packet template - ability to build any packet (including malformed) using Scapy (example: MPLS/IPv4/Ipv6/GRE/VXLAN/NSH)

-

Field engine program

-

Ability to change any field inside the packet (example: src_ip = 10.0.0.1-10.0.0.255)

-

Ability to change the packet size (example: random packet size 64-9K)

-

-

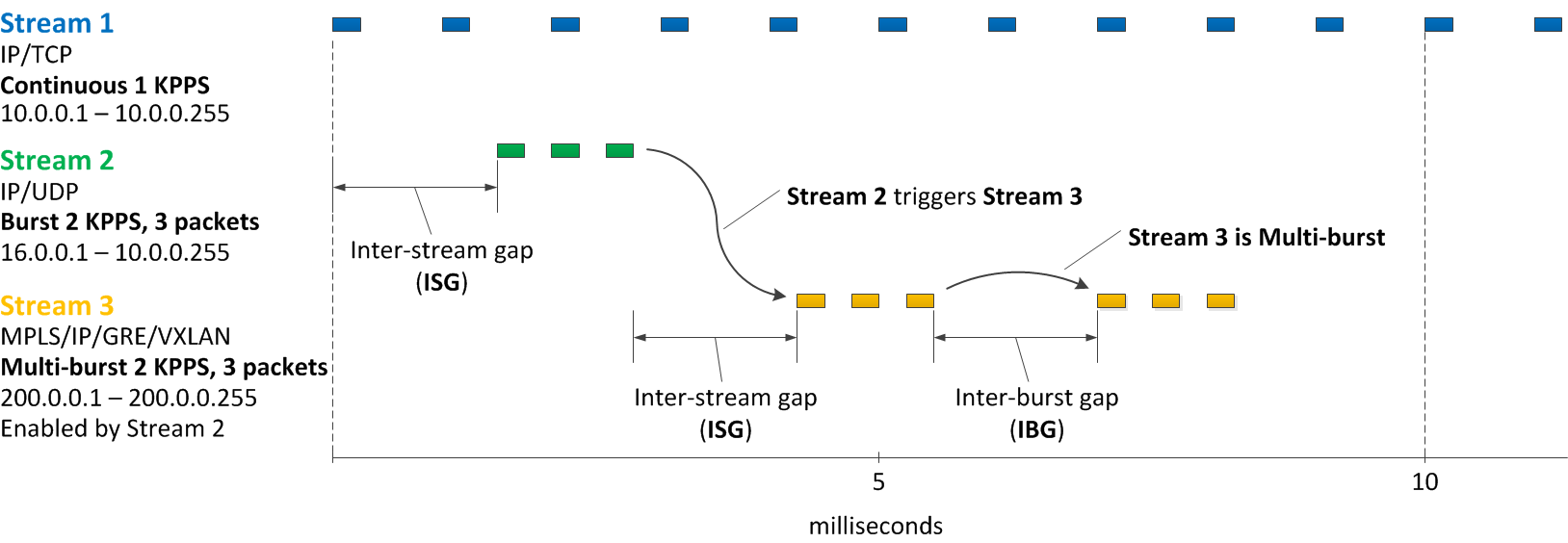

Mode - Continuous/Burst/Multi-burst support

-

Rate can be specified as:

-

Packets per second (example: 14MPPS)

-

L1/L2 bandwidth (example: 500Mb/sec)

-

Interface link percentage (example: 10%)

-

-

Support for basic HLTAPI-like profile definition

-

Action - stream can trigger a stream

-

-

Interactive support - Fast Console, GUI

-

Statistics per interface

-

Statistics per stream done in hardware/software

-

Latency and Jitter per stream

-

Blazingly fast Python automation API

-

L2 Emulation Python event-driven framework with examples of ARP/ICMP/ICMPv6/IPv6ND/DHCP and more. The framework can be extendable with new protocols

-

Capture/Monitor traffic with BPF filters - no need for Wireshark

-

Capture network traffic by redirecting the traffic to Wireshark

-

Functional tests

-

PCAP file import/export

-

Huge pcap file transmission (e.g. 1TB pcap file) for DPI

-

Multi-user support

-

Routing protocol support BGP/OSPF/RIP using BIRD integration
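The three ways of specifying a stream rate listed above (packets per second, L1/L2 bandwidth, link percentage) are related by simple arithmetic. The sketch below is not TRex code; the function names are hypothetical, and it assumes `frame_bytes` is the full Ethernet frame including the FCS and that L1 adds 20 bytes per frame (8 bytes of preamble/SFD plus a 12-byte inter-frame gap). TRex's own accounting may differ in detail.

```python
# Illustrative rate conversions; names are hypothetical, not part of the TRex API.
# Assumption: frame_bytes is the Ethernet frame size including the 4-byte FCS.
L1_OVERHEAD_BYTES = 20  # 8 bytes preamble/SFD + 12 bytes inter-frame gap


def pps_to_l2_bps(pps, frame_bytes):
    """L2 bandwidth: only the frame bytes are counted."""
    return pps * frame_bytes * 8


def pps_to_l1_bps(pps, frame_bytes):
    """L1 bandwidth: frame bytes plus per-frame wire overhead."""
    return pps * (frame_bytes + L1_OVERHEAD_BYTES) * 8


def l1_bps_to_pps(bps, frame_bytes):
    """Packet rate that fills the given L1 bandwidth."""
    return bps / ((frame_bytes + L1_OVERHEAD_BYTES) * 8)


def link_percent_to_pps(percent, link_bps, frame_bytes):
    """Packet rate for a percentage of the link's L1 capacity."""
    return l1_bps_to_pps(link_bps * percent / 100.0, frame_bytes)
```

Under these assumptions, 64-byte frames on a 10Gb/sec link give a line rate of about 14.88 Mpps, so a 10% link percentage is about 1.49 Mpps.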

The following example shows three streams configured for Continuous, Burst, and Multi-burst traffic.

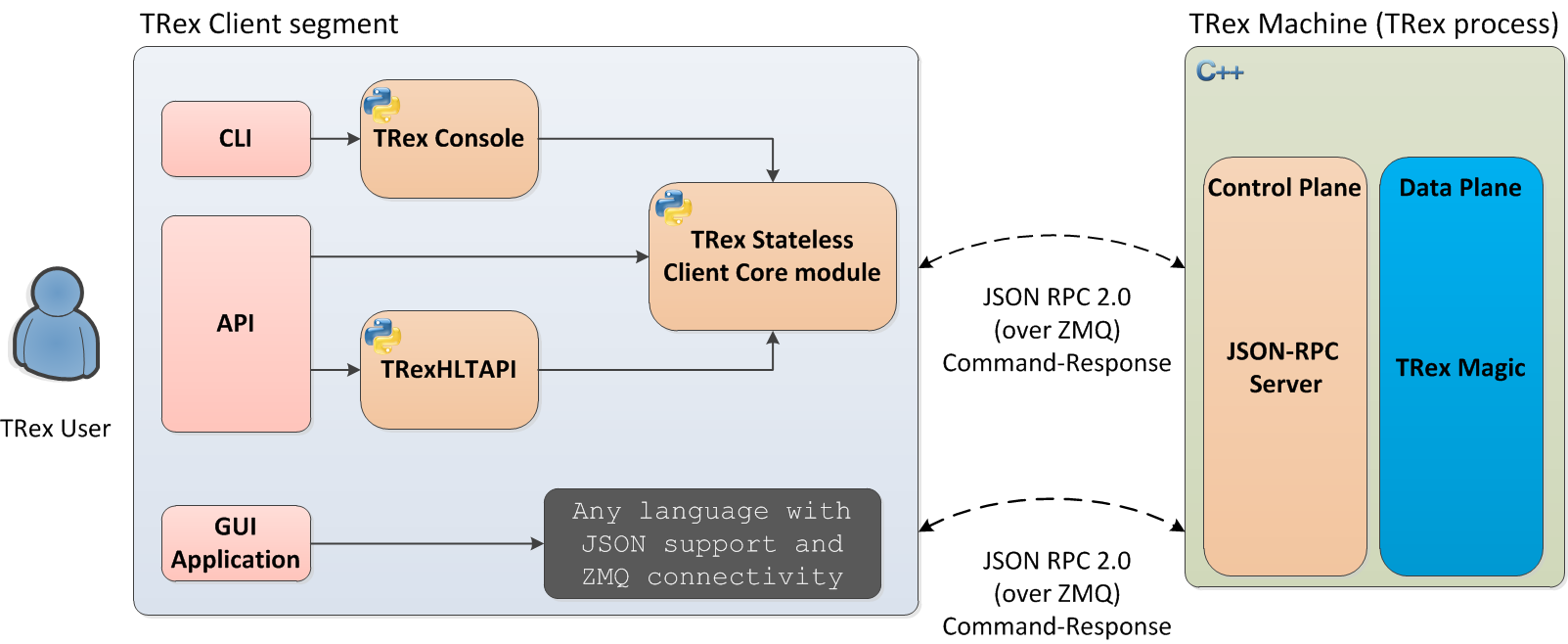
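To make the three transmit modes concrete, here is a minimal timing model in plain Python. It is only a sketch: the function names and parameters are hypothetical and are not the TRex API. It assumes the inter-burst gap is measured from the last packet of one burst to the first packet of the next, and it models Continuous mode over a finite window for illustration.

```python
# Toy model of stream transmit modes; timestamps are integer microseconds.
# All names here are illustrative assumptions, not TRex functions.
US_PER_SEC = 1_000_000


def _gap_us(pps):
    """Spacing between consecutive packets at the given rate."""
    return US_PER_SEC // pps


def continuous(pps, duration_us):
    """Fixed-rate transmission, observed over a finite window."""
    count = pps * duration_us // US_PER_SEC
    return [i * _gap_us(pps) for i in range(count)]


def single_burst(pps, total_pkts):
    """Send total_pkts packets at the given rate, then stop."""
    return [i * _gap_us(pps) for i in range(total_pkts)]


def multi_burst(pps, pkts_per_burst, count, ibg_us):
    """Send `count` bursts (each at least one packet) separated by ibg_us."""
    gap = _gap_us(pps)
    times = []
    start = 0
    for _ in range(count):
        times.extend(start + i * gap for i in range(pkts_per_burst))
        start = times[-1] + ibg_us  # next burst starts after the idle gap
    return times
```

For example, `multi_burst(1000, 3, 2, 10000)` yields two groups of three timestamps spaced 1 ms apart, with a 10 ms idle gap between the groups.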

A new JSON-RPC2 Architecture provides support for interactive mode

More information can be found in the Documentation.
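For readers unfamiliar with JSON-RPC 2.0, the sketch below shows the generic request and response envelope defined by that specification. The helper names are hypothetical, and the method name used in the usage note is only a placeholder; the actual TRex RPC methods and transport are described in the project documentation and are not reproduced here.

```python
import itertools
import json

# Generic JSON-RPC 2.0 envelope helpers; names are illustrative assumptions.
_request_ids = itertools.count(1)


def make_request(method, params=None):
    """Serialize a JSON-RPC 2.0 request with a fresh numeric id."""
    request = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        request["params"] = params
    return json.dumps(request)


def parse_response(raw, expected_id):
    """Return the result of a JSON-RPC 2.0 response or raise on error."""
    message = json.loads(raw)
    if message.get("jsonrpc") != "2.0" or message.get("id") != expected_id:
        raise ValueError("not a matching JSON-RPC 2.0 response")
    if "error" in message:
        error = message["error"]
        raise RuntimeError(f"RPC error {error['code']}: {error['message']}")
    return message["result"]
```

A client would send something like `make_request("ping")` (a placeholder method name) and pass the reply to `parse_response` together with the id it used.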

With the new advanced scalable TCP/UDP support, TRex uses the TCP/UDP layer to generate the L7 data. This opens the following new capabilities:

- Ability to work when the DUT terminates the TCP stack (e.g. compress/uncompress). In this case, there is a different TCP session on each side, but the L7 data are almost the same.
- Ability to work in either client mode or server mode. This way the TRex client side can be installed in one physical location on the network and the TRex server in another.
- Performance and scale
  - High bandwidth - 200Gb/sec with many realistic flows (not one elephant flow)
  - High connection rate - on the order of millions of connections per second (MCPS)
  - Scale to millions of active established flows
- Emulate L7 applications, e.g. HTTP/HTTPS/Citrix - there is no need to implement the exact protocol.
- Accurate TCP implementation
- Ability to change fields in the L7 application - for example, change the HTTP User-Agent field

more information can be found here:

- Benchmark/stress stateful features:
  - NAT
  - DPI
  - Load balancer
  - Network cache devices
  - Firewall
  - IPS/IDS
- Mixing application-level traffic/profiles (HTTP/SIP/Video)
- Unlimited concurrent flows, limited only by memory

Internal Wiki

Our old bug report/request tool is YouTrack; it is better to use GitHub issues.

Blog posts can be found on the TRex blog.

- Cross-platform - runs on Windows, Linux, Mac OS X
- Written in JavaFX; uses the TRex RPC API
- Scapy-based packet builder to build any type of packet using the GUI
  - Very easy to add new protocol builders (using Scapy)
- Open and edit PCAP files, replay and save back
- Visual latency/jitter/per-stream statistics
- Free

The GitHub repository is trex-stateless-gui.

The objective is to implement client-side L3 protocols, i.e. ARP, IPv6, ND, MLD, and IGMP, in order to simulate a scale of clients and servers. This project is not limited to client protocols, but they are a good start. The project provides a framework to implement and use client protocols.

The framework is fast enough for control plane protocols and works with the TRex server. Very fast L7 applications (on top of TCP/UDP) run on the TRex server. A single TRex-EMU thread can achieve a high rate of client creation/teardown. Each of the aforementioned protocols is implemented as a plugin. These plugins are self-contained and can signal events to one another, or to the framework, using an event bus (e.g. DHCP signals that it has a new IPv6 address). The framework has an event-driven architecture, which allows it to scale. The framework also provides infrastructure to protocol plugins, for example RPC, timers, packet parsers, simulation and more.
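The plugin and event-bus idea can be illustrated with a small model. This is a conceptual Python sketch, not TRex-EMU code: the class names, the `addr_added` topic, and the method names are all hypothetical. It mirrors the example in the text, where a DHCP plugin signals that a client has a new IPv6 address and another plugin reacts to it.

```python
from collections import defaultdict


class EventBus:
    """Minimal publish/subscribe bus shared by all plugins (illustrative)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, **payload):
        # Copy the handler list so handlers may subscribe during dispatch.
        for handler in list(self._subscribers.get(topic, ())):
            handler(**payload)


class Dhcpv6Plugin:
    """Hypothetical plugin that announces newly leased addresses."""

    def __init__(self, bus):
        self._bus = bus

    def on_lease(self, client, addr):
        self._bus.publish("addr_added", client=client, addr=addr)


class NdPlugin:
    """Hypothetical plugin that reacts to new addresses from other plugins."""

    def __init__(self, bus):
        self.announced = []
        bus.subscribe("addr_added", self.on_addr_added)

    def on_addr_added(self, client, addr):
        # A real plugin would start neighbor discovery for this address.
        self.announced.append((client, addr))
```

Neither plugin holds a reference to the other; they only share the bus, which is what keeps each plugin self-contained and testable on its own.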

The main properties:

- Fast client creation/teardown: ~3K/sec for one thread.
- The number of active clients/namespaces is limited only by the memory on the server.
- Packets per second (PPS) in the range of 3-5 Mpps.
- Python 2.7/3.0 client API exposed through JSON-RPC.
- Interactive support - integrated with the TRex console.
- Modular design. Each plugin is self-contained and can be tested on its own.

TRex-EMU supports the following protocols:

| Plug-in | Description |
|---|---|
| ARP | RFC 826 |
| ICMP | RFC 777 |
| DHCPv4 | RFC 2131 client side |
| IGMP | IGMP v3/v2/v1 RFC 3376 |
| IPv6 | IPv6 ND, RFC 4443, RFC 4861, RFC 4862 and MLD and MLDv2 RFC 3810 |
| DHCPv6 | RFC 8415 client side |

More information is available at trex-emu.

BIRD Internet Routing Daemon is a project that aims to develop a fully functional dynamic IP routing daemon for Linux. It was integrated into TRex to run alongside it, so that its features can be used together with the Python automation API.

- Runs on top of IPv4 and IPv6 (using kernel veth)
- BGP (eBGP/iBGP) and RPKI (RFC 6480/RFC 6483); record types are ipv4, ipv6, vpn4, vpn6, multicast, flow4, flow6
  - RFC 4271 - Border Gateway Protocol 4 (BGP)
  - RFC 1997 - BGP Communities Attribute
  - RFC 2385 - Protection of BGP Sessions via TCP MD5 Signature
  - RFC 2545 - Use of BGP Multiprotocol Extensions for IPv6
  - RFC 2918 - Route Refresh Capability
  - RFC 3107 - Carrying Label Information in BGP
  - RFC 4360 - BGP Extended Communities Attribute
  - RFC 4364 - BGP/MPLS IPv4 Virtual Private Networks
  - RFC 4456 - BGP Route Reflection
  - RFC 4486 - Subcodes for BGP Cease Notification Message
  - RFC 4659 - BGP/MPLS IPv6 Virtual Private Networks
  - RFC 4724 - Graceful Restart Mechanism for BGP
  - RFC 4760 - Multiprotocol extensions for BGP
  - RFC 4798 - Connecting IPv6 Islands over IPv4 MPLS
  - RFC 5065 - AS confederations for BGP
  - RFC 5082 - Generalized TTL Security Mechanism
  - RFC 5492 - Capabilities Advertisement with BGP
  - RFC 5549 - Advertising IPv4 NLRI with an IPv6 Next Hop
  - RFC 5575 - Dissemination of Flow Specification Rules
  - RFC 5668 - 4-Octet AS Specific BGP Extended Community
  - RFC 6286 - AS-Wide Unique BGP Identifier
  - RFC 6608 - Subcodes for BGP Finite State Machine Error
  - RFC 6793 - BGP Support for 4-Octet AS Numbers
  - RFC 7311 - Accumulated IGP Metric Attribute for BGP
  - RFC 7313 - Enhanced Route Refresh Capability for BGP
  - RFC 7606 - Revised Error Handling for BGP UPDATE Messages
  - RFC 7911 - Advertisement of Multiple Paths in BGP
  - RFC 7947 - Internet Exchange BGP Route Server
  - RFC 8092 - BGP Large Communities Attribute
  - RFC 8203 - BGP Administrative Shutdown Communication
  - RFC 8212 - Default EBGP Route Propagation Behavior without Policies
- OSPF (v2/v3): RFC 2328/RFC 5340
- RIP: RIPv1 (RFC 1058), RIPv2 (RFC 2453), RIPng (RFC 2080), and RIP cryptographic authentication (RFC 4822)
- Scale of millions of routes (depending on the protocol scale, e.g. BGP) in a few seconds
- Integration with the Multi-RX software model (--software and -c higher than 1) to support dynamic filters for BIRD protocols while keeping high rates of traffic
- Can support up to 10K veth (virtual interfaces), each with a different QinQ/VLAN configuration
- Simple Python automation API for pushing configuration and reading statistics

Try the new DevNet Sandbox: TRex Sandbox.

Follow us on the TRex traffic generator Google group, or contact us via the group mailing list (trex-tgn@googlegroups.com).

- VPP performance/functional tests, fd.io
- VNF tests, OPNFV-NFVBENCH
- Napatech delivers 100Gb/sec, Napatech