The following results have been obtained from an agent trained for the Unity Obstacle Tower Challenge. The agent needs to navigate a maze of rooms to reach a door that leads him one floor up. On the way he can collect blue spheres, which give him more time. Starting at level 5, the agent needs to find and pick up a key in order to open certain doors. Starting at level 10, the agent needs to solve puzzles in which he has to push a box onto a designated area to open a door. The agent shown here reaches level 8 on average.

Here you can find the Jupyter Notebook used to generate the following figures and animations.

Further data analysis can be found here. The corresponding data is publicly available:

Clay, Viviane (2020), “Data from Neural Network Training in the Obstacle Tower Environment to Investigate Embodied, Weakly Supervised Learning”, Mendeley Data, V2, https://doi.org/10.17632/zdh4d5ws2z.2 .

Cite the paper: Clay, V., König, P., Kühnberger, K.-U. & Pipa, G. Learning sparse and meaningful representations through embodiment. Neural Networks (2020). https://doi.org/10.1016/j.neunet.2020.11.004 .

Further research on using the learned representation for few-shot learning can be found in this repository: https://github.com/vkakerbeck/FastConceptMapping

Also check out this follow-up paper: https://ieeexplore.ieee.org/document/10274870

V. Clay, G. Pipa, K. -U. Kühnberger and P. König, "Development of Few-Shot Learning Capabilities in Artificial Neural Networks When Learning Through Self-Supervised Interaction," in IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 46, no. 1, pp. 209-219, Jan. 2024, doi: 10.1109/TPAMI.2023.3323040.

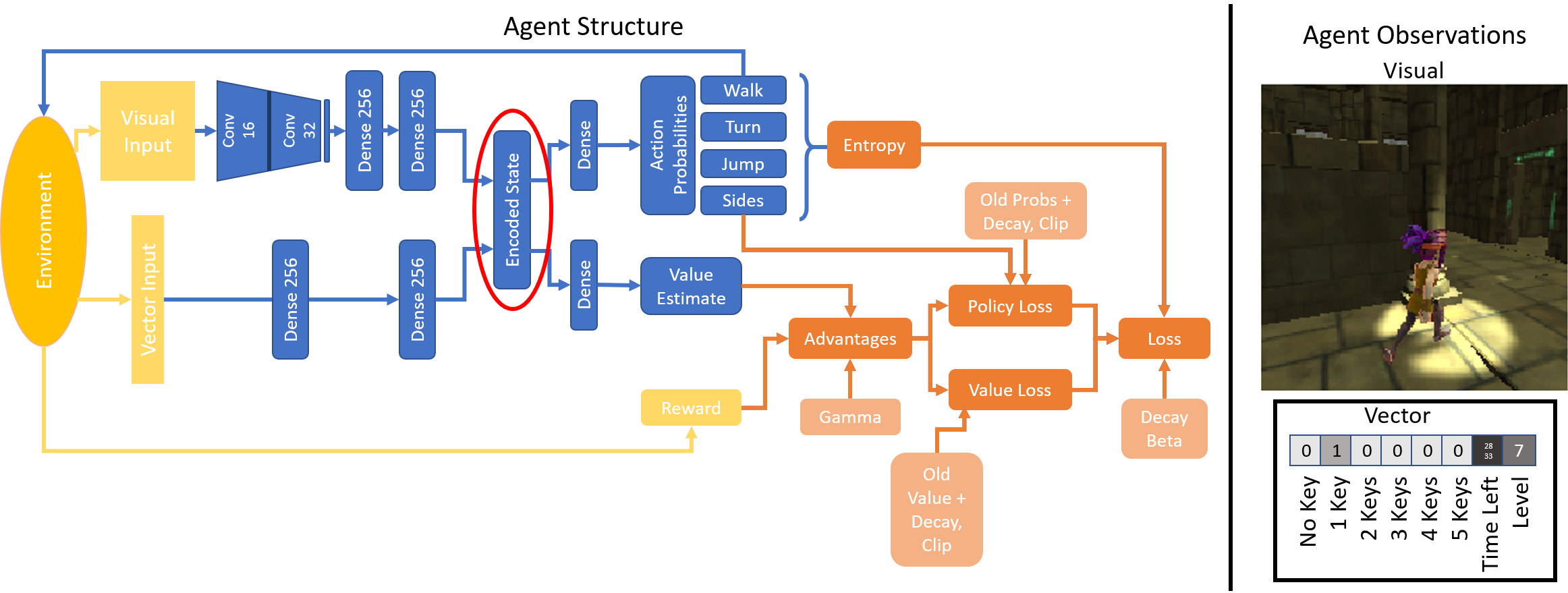

I use proximal policy optimization (PPO) to train the agent. Figure 1 shows the underlying network structure (blue). The agent makes decisions based on visual and vector observations provided by the environment (yellow). The vector observations consist of the time left, the number of keys the agent holds, and the level he is currently on.
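For readers unfamiliar with PPO, the core of the method is its clipped surrogate objective. The sketch below computes that objective in NumPy for a batch of sampled actions; it is not the training code used for this agent. The function name `ppo_clipped_objective` and the clip value of 0.2 (a common default) are assumptions, and the value-function and entropy terms of the full PPO loss are left out.

```python
import numpy as np


def ppo_clipped_objective(new_log_probs, old_log_probs, advantages, clip_eps=0.2):
    """PPO clipped surrogate objective (to be maximised), averaged over a batch.

    Hypothetical helper for illustration; not taken from the agent's training code.
    """
    # Probability ratio r_t = pi_new(a_t | s_t) / pi_old(a_t | s_t), computed in log space.
    ratio = np.exp(np.asarray(new_log_probs, dtype=float) - np.asarray(old_log_probs, dtype=float))
    advantages = np.asarray(advantages, dtype=float)
    unclipped = ratio * advantages
    # Clipping the ratio removes the incentive to move the policy far from the old one.
    clipped = np.clip(ratio, 1.0 - clip_eps, 1.0 + clip_eps) * advantages
    # Pessimistic bound: elementwise minimum of the two terms, then the batch mean.
    return float(np.mean(np.minimum(unclipped, clipped)))
```

For example, a probability ratio of 1.5 with an advantage of 1.0 contributes 1.2 rather than 1.5, because the ratio is clipped at 1 + 0.2.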

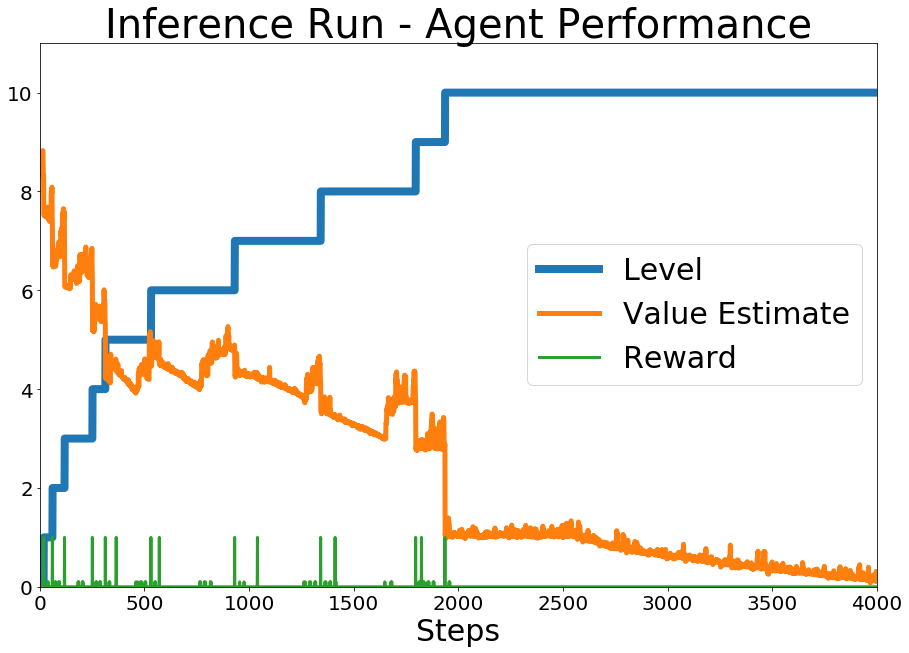

We will now look at the agent's brain in one particular run. Figure 2 shows the overall statistics of this run. The agent reached level 10 in fewer than 2000 steps.

The following animation shows the embedded layer activations in the agent's brain (left), the corresponding visual observations (right), and the actions the agent selects. R and V show the reward obtained from the environment and the value estimate, respectively.

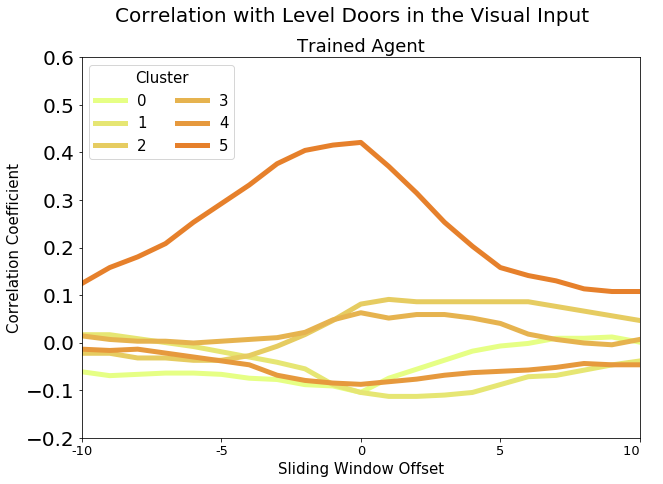

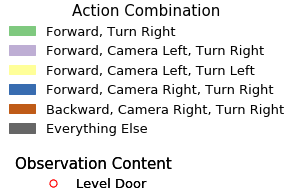

In the figure below (left) you can see the distribution of images across the 6 clusters computed with k-means clustering. On the right you can see the within-class variance for each cluster. The clustering is performed on the embedding of the visual observations (256 dimensions). The images in the different clusters show some semantic patterns. Some clusters contain images in which the agent walks through doors or sees upcoming rewarding events such as blue spheres or keys (see, for instance, cluster 5), other clusters contain frames in which the agent simply walks through rooms (cluster 1), and others contain, for example, hard right/left turns or backward motion (cluster 2). Feel free to also explore the images in the 6-cluster solution (the number of clusters with the least within-class variance).
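The clustering step can be sketched as follows, assuming the 256-dimensional visual embeddings have been exported as a NumPy array of shape `(n_frames, 256)`. This is plain k-means (k-means++ seeding followed by Lloyd iterations) written out in NumPy for illustration; the notebook may use a library implementation instead. The function names and the definition of within-class variance as the mean squared distance of a cluster's members to its centroid are my assumptions.

```python
import numpy as np


def kmeans_pp_init(x, k, rng):
    """k-means++ seeding: spread the initial centroids out over the data."""
    centroids = [x[rng.integers(len(x))]]
    for _ in range(1, k):
        # Squared distance from every frame to its nearest already-chosen centroid.
        d2 = ((x[:, None, :] - np.array(centroids)[None, :, :]) ** 2).sum(axis=2).min(axis=1)
        if d2.sum() == 0:  # all points coincide with a centroid: pick uniformly
            idx = rng.integers(len(x))
        else:
            idx = rng.choice(len(x), p=d2 / d2.sum())
        centroids.append(x[idx])
    return np.array(centroids, dtype=float)


def assign(x, centroids):
    """Index of the nearest centroid (squared Euclidean distance) for every frame."""
    return ((x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2).argmin(axis=1)


def kmeans(x, k, n_iter=100, seed=0):
    """Lloyd's k-means on an (n_frames, n_dims) embedding matrix (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    rng = np.random.default_rng(seed)
    centroids = kmeans_pp_init(x, k, rng)
    for _ in range(n_iter):
        labels = assign(x, centroids)
        # Move each centroid to the mean of its members; keep it if the cluster is empty.
        new_centroids = np.array([
            x[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        converged = np.allclose(new_centroids, centroids)
        centroids = new_centroids
        if converged:
            break
    # Recompute labels so they match the final centroids.
    return assign(x, centroids), centroids


def within_cluster_variance(x, labels, centroids):
    """Mean squared distance of each cluster's members to its centroid (0 for empty clusters)."""
    x = np.asarray(x, dtype=float)
    return np.array([
        ((x[labels == j] - centroids[j]) ** 2).sum(axis=1).mean() if np.any(labels == j) else 0.0
        for j in range(len(centroids))
    ])
```

With a hypothetical array `visual_embeddings`, `labels, centroids = kmeans(visual_embeddings, k=6)` assigns each frame to one of 6 clusters, and `within_cluster_variance(visual_embeddings, labels, centroids)` gives one value per cluster. The cluster indices are 0-based and arbitrary, so they will not necessarily match the cluster numbers in the figure.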

The figure below shows that the clusters are meaningfully structured not only with respect to the actions they contain but also with respect to the content of the images. For example, whether a level door is visible is represented in the visual encoding, and in this clustering those frames fall mainly into cluster 5.
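One way to check how door frames are distributed across the clusters is a simple cross-tabulation of cluster labels against a per-frame annotation. The boolean array `door_visible` and the helper name `door_statistics` are hypothetical; how door frames were labelled for the figure is not described here.

```python
import numpy as np


def door_statistics(labels, door_visible, k):
    """Cross-tabulate k-means cluster labels against a hypothetical 'door visible' flag.

    Returns (share, rate):
      share[j] = fraction of all door frames that fall into cluster j (sums to 1),
      rate[j]  = fraction of cluster j's frames that show a door.
    """
    labels = np.asarray(labels)
    door = np.asarray(door_visible, dtype=bool)
    frames_per_cluster = np.bincount(labels, minlength=k)
    doors_per_cluster = np.bincount(labels[door], minlength=k)
    share = doors_per_cluster / max(door.sum(), 1)
    # Avoid dividing by zero for empty clusters; those get a rate of 0.
    rate = np.divide(doors_per_cluster, frames_per_cluster,
                     out=np.zeros(k), where=frames_per_cluster > 0)
    return share, rate
```

If door frames concentrate in one cluster, that cluster's entry in `share` will be close to 1.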

Hover your cursor over the data points to see the agent's observation corresponding to each point.

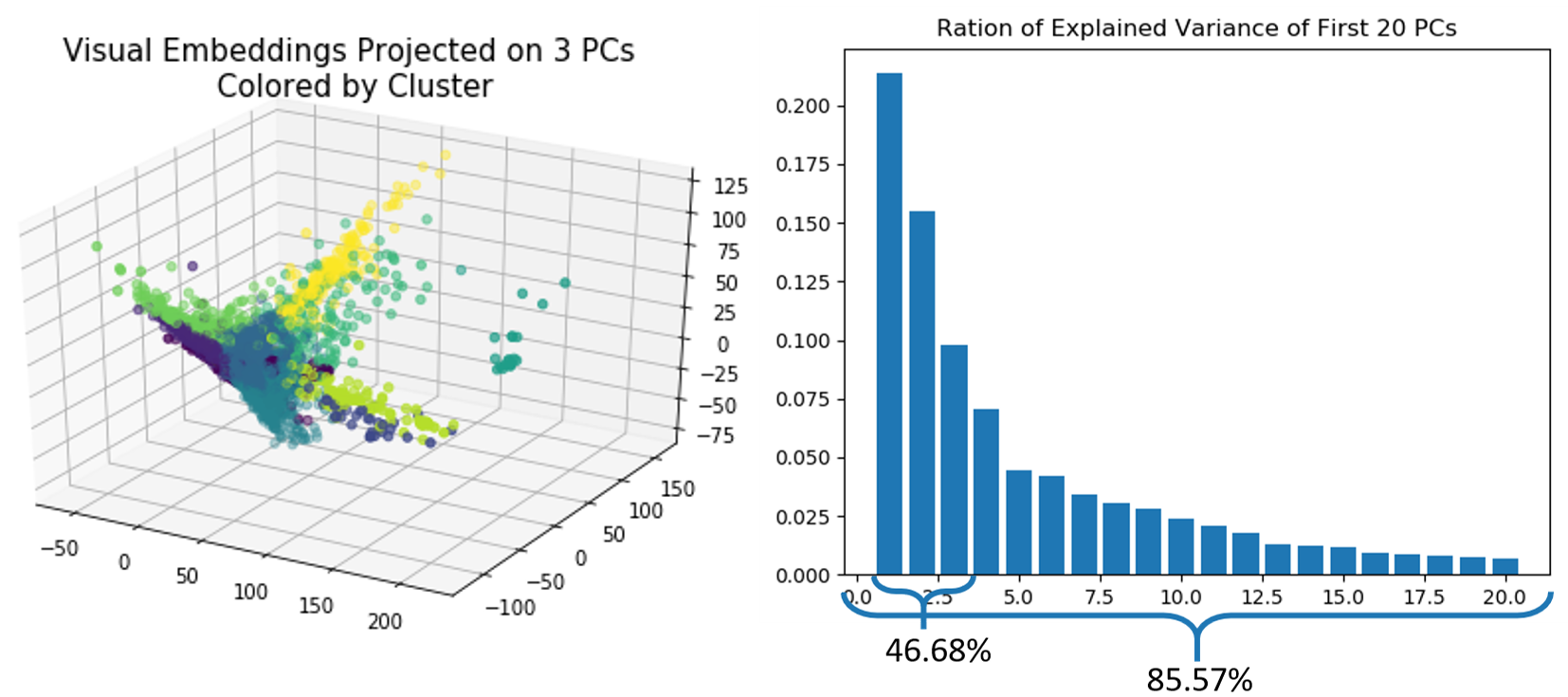

Principal component analysis shows that the variance in the activations is spread over many dimensions: the first three components explain only 46.68% of the variance, and even the first 20 principal components explain only 85.57%.
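Explained-variance figures like these can be computed with a few lines of NumPy, doing PCA through the singular value decomposition of the mean-centred activation matrix. This is a sketch assuming the activations are stored as an array of shape `(n_frames, n_units)`; the function names are hypothetical and the notebook may use a library PCA instead.

```python
import numpy as np


def explained_variance_ratio(acts):
    """Share of the total variance captured by each principal component.

    acts: (n_frames, n_units) activation matrix. Components are sorted by variance.
    """
    acts = np.asarray(acts, dtype=float)
    centered = acts - acts.mean(axis=0)
    # Squared singular values of the centred data are proportional to the PC variances.
    s = np.linalg.svd(centered, compute_uv=False)
    var = s ** 2
    return var / var.sum()


def cumulative_explained(acts, n_components):
    """Fraction of variance explained by the first n_components components together."""
    return float(np.cumsum(explained_variance_ratio(acts))[n_components - 1])
```

On the activations from this run, `cumulative_explained(acts, 3)` and `cumulative_explained(acts, 20)` should correspond to the 46.68% and 85.57% reported above, provided the same activations and preprocessing are used.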

When the k-means clustered data is projected onto the principal components, somewhat distinct point clouds become visible.
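Projecting the activations onto the leading principal components, and summarizing each k-means cluster by its centroid in that space, can be sketched as follows. The helper names are hypothetical, and the cluster labels are assumed to come from a k-means run on the same frames. The sign of each principal axis returned by the SVD is arbitrary, so plots may appear mirrored between runs or libraries.

```python
import numpy as np


def project_onto_pcs(acts, n_components=3):
    """Coordinates of every frame on the first n_components principal axes."""
    acts = np.asarray(acts, dtype=float)
    centered = acts - acts.mean(axis=0)
    # Rows of vt are the principal axes, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T


def cluster_centroids_in_pc_space(projected, labels):
    """Mean projected position of each cluster, keyed by cluster label."""
    labels = np.asarray(labels)
    return {int(c): projected[labels == c].mean(axis=0) for c in np.unique(labels)}
```

The three columns of `project_onto_pcs(acts, 3)` can be passed to any 3D scatter plot, colored by cluster label.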

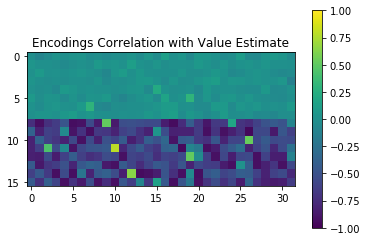

Figure 7 shows the correlation between the neuron activations in the hidden layer and the value estimate.
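A correlation map like Figure 7 amounts to one Pearson correlation per hidden unit. Here is a minimal NumPy sketch, assuming the activations are an `(n_frames, n_units)` array and the value estimates a matching `(n_frames,)` array; the function names are hypothetical.

```python
import numpy as np


def correlate_with_signal(acts, signal):
    """Pearson correlation between each unit's activation and a 1-D signal.

    acts: (n_frames, n_units); signal: (n_frames,), e.g. the value estimate.
    Units (or signals) with zero variance get a correlation of 0.
    """
    a = np.asarray(acts, dtype=float)
    s = np.asarray(signal, dtype=float)
    a = a - a.mean(axis=0)
    s = s - s.mean()
    denom = np.sqrt((a ** 2).sum(axis=0) * (s ** 2).sum())
    return np.divide(a.T @ s, denom, out=np.zeros(a.shape[1]), where=denom > 0)


def most_correlated_unit(r, units=None):
    """Index of the unit with the largest |r|, optionally restricted to a subset of units."""
    r = np.asarray(r)
    idx = np.arange(len(r)) if units is None else np.asarray(units)
    return int(idx[np.argmax(np.abs(r[idx]))])
```

Restricting the search to the visual part of the embedding is then a matter of passing its unit indices, e.g. `most_correlated_unit(r, units=np.arange(256))`, if (as assumed here, not stated in the source) the 256 visual units come first in the embedding.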

The activation of the most strongly correlated neuron in the visual part of the embedding shows spikes that coincide with the agent going through level doors or obtaining other rewards.
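The link between spikes and rewarding events can be quantified roughly: mark time steps where the activation exceeds its mean by `z` standard deviations, and count how many of those spikes fall within `window` steps of a nonzero reward. The threshold rule, the default values of `z` and `window`, the helper name, and the use of the reward signal as a proxy for doors and spheres are all assumptions for illustration.

```python
import numpy as np


def spike_reward_overlap(activation, rewards, z=3.0, window=5):
    """Fraction of activation spikes lying within `window` steps of a nonzero reward.

    A spike is any step where the activation exceeds mean + z * std (hypothetical rule).
    """
    activation = np.asarray(activation, dtype=float)
    rewards = np.asarray(rewards, dtype=float)
    threshold = activation.mean() + z * activation.std()
    spikes = np.flatnonzero(activation > threshold)
    reward_steps = np.flatnonzero(rewards != 0)
    if spikes.size == 0 or reward_steps.size == 0:
        return 0.0
    # Distance (in steps) from each spike to the nearest reward, before or after it.
    nearest = np.abs(spikes[:, None] - reward_steps[None, :]).min(axis=1)
    return float(np.mean(nearest <= window))
```

A symmetric window is used because a value-related neuron may fire slightly before a reward (anticipation) as well as at the moment it is collected.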

Looking at the visual observations that activate this neuron, we can see that many of them contain doors and spheres.
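Collecting the frames that drive a neuron most strongly is a simple top-k selection. In this sketch the returned indices would be used to look up the stored visual observations, e.g. `frames[top_activating_frames(acts, unit, k=20)]`, where `frames` is a hypothetical array of saved observations aligned with the activations and the helper name is also hypothetical.

```python
import numpy as np


def top_activating_frames(acts, unit, k=10):
    """Indices of the k frames with the highest activation of one unit, strongest first."""
    column = np.asarray(acts, dtype=float)[:, unit]
    k = min(k, column.size)
    if k <= 0:
        return np.array([], dtype=int)
    # argpartition finds the k largest values in linear time; only those k are then sorted.
    idx = np.argpartition(column, -k)[-k:]
    return idx[np.argsort(column[idx])[::-1]]
```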

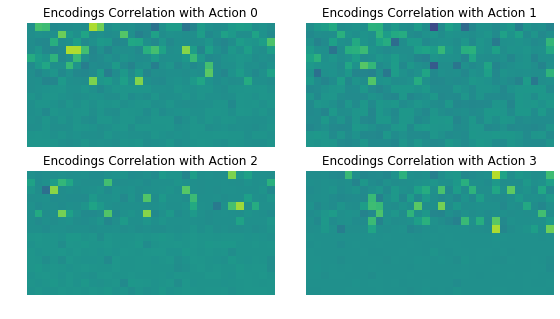

Figure 8 shows the correlation between network activations and the four action branches. The actions correlate more strongly with the visual part of the embedding than with the vector part.
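Since the actions are discrete, one plausible way to correlate them with continuous activations is to one-hot encode each option of each action branch and compute a Pearson (point-biserial) correlation per unit and option. The source does not say how Figure 8 was computed, so this encoding, the helper names, and the assumed split of units into visual and vector parts are all hypothetical.

```python
import numpy as np


def _pearson_columns(acts, target):
    """Pearson r between every column of acts and a 1-D target (0 where undefined)."""
    a = acts - acts.mean(axis=0)
    t = target - target.mean()
    denom = np.sqrt((a ** 2).sum(axis=0) * (t ** 2).sum())
    return np.divide(a.T @ t, denom, out=np.zeros(acts.shape[1]), where=denom > 0)


def action_correlations(acts, actions, n_choices):
    """Correlation of every unit with every option of every action branch.

    acts: (n_frames, n_units); actions: (n_frames, n_branches) integer choices;
    n_choices: number of options per branch. Returns one (n_units, n_options) array per branch.
    """
    acts = np.asarray(acts, dtype=float)
    actions = np.asarray(actions, dtype=int)
    out = []
    for b, n in enumerate(n_choices):
        onehot = np.eye(n)[actions[:, b]]  # (n_frames, n) indicator matrix
        out.append(np.stack([_pearson_columns(acts, onehot[:, c]) for c in range(n)], axis=1))
    return out


def mean_abs_corr(corrs, units):
    """Mean |r| over the given units, pooled across all branches and options."""
    idx = np.asarray(units)
    return float(np.mean(np.concatenate([np.abs(c[idx]).ravel() for c in corrs])))
```

Comparing `mean_abs_corr(corrs, np.arange(256))` with `mean_abs_corr(corrs, np.arange(256, n_units))` would then contrast the visual and vector parts, assuming the first 256 units are the visual ones.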

This display shows the correlation of each neuron with all other neurons. To see the visual observations that made the selected neuron active, click the "Active Frames" button and they will appear in the display below.
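The neuron-by-neuron correlation display and the "Active Frames" view can be approximated with NumPy as below. Treating a unit as active when its activation exceeds 0 is an assumption (it fits ReLU-style units) and not necessarily the criterion used in the display; the function names are hypothetical. `np.corrcoef` returns NaN for units that never change, which `most_similar_units` maps to 0.

```python
import numpy as np


def unit_correlation_matrix(acts):
    """(n_units, n_units) Pearson correlation matrix between hidden units."""
    # np.corrcoef treats each row as a variable, so units go in the rows.
    return np.corrcoef(np.asarray(acts, dtype=float).T)


def active_frames(acts, unit, threshold=0.0):
    """Indices of frames in which `unit` is active (activation above threshold)."""
    return np.flatnonzero(np.asarray(acts, dtype=float)[:, unit] > threshold)


def most_similar_units(corr, unit, n=5):
    """The n other units whose activation correlates most strongly (by |r|) with `unit`."""
    r = np.abs(np.nan_to_num(corr[unit]))  # copy; constant units (NaN) count as 0
    r[unit] = -1.0  # exclude the unit itself
    return np.argsort(r)[::-1][:n]
```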

The following animation shows the embedded layer activations in the agent's brain (left), the corresponding visual observations (right), and the actions the agent selects during a generalization run. In this run the environment is rendered under new lighting conditions that were not seen during training. Levels 0, 1, 2, 3, and 5 are shown.