A Portuguese version of this README is available here: README Português

This project extracts weather data from the OpenWeatherMap API and traffic data from the OSRM API, transforms and cleans the data, and loads it into a PostgreSQL database. Queries are then executed to generate reports based on the extracted data.
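As a rough illustration of the transform step described above, the sketch below flattens one OpenWeatherMap current-weather response and one OSRM route response into rows ready for loading. The function names, the selected fields, and the sample payloads are assumptions made for illustration; the project's actual logic may differ.

```python
from datetime import datetime, timezone


def transform_weather(payload: dict) -> dict:
    """Flatten an OpenWeatherMap current-weather payload into one row."""
    return {
        "city": payload["name"],
        "temperature": payload["main"]["temp"],
        "humidity": payload["main"]["humidity"],
        "description": payload["weather"][0]["description"],
        # "dt" is a Unix timestamp in UTC
        "observed_at": datetime.fromtimestamp(payload["dt"], tz=timezone.utc),
    }


def transform_route(payload: dict) -> dict:
    """Flatten the first route of an OSRM route payload into one row."""
    route = payload["routes"][0]
    return {
        "distance_km": round(route["distance"] / 1000, 2),  # OSRM reports metres
        "duration_min": round(route["duration"] / 60, 2),   # OSRM reports seconds
    }


# Hand-written sample payloads, trimmed to the fields used above
sample_weather = {
    "name": "Sao Paulo",
    "main": {"temp": 295.15, "humidity": 60},
    "weather": [{"description": "clear sky"}],
    "dt": 1700000000,
}
sample_route = {"routes": [{"distance": 12500.0, "duration": 1500.0}]}

weather_row = transform_weather(sample_weather)
route_row = transform_route(sample_route)
print(weather_row)
print(route_row)
```

Keeping each transform as a small pure function makes it easy to test without calling either API.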

- Git

- Docker

- Docker Compose

Set the environment variables for the API keys in the docker-compose files. Obtain your API keys from:

- OpenWeatherMap: https://home.openweathermap.org/api_keys
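Inside the container, the Python code can read the key from the environment. A minimal sketch, assuming the variable is called `OPENWEATHERMAP_API_KEY` (a hypothetical name; use whatever name the docker-compose files define):

```python
import os


def get_api_key(name: str = "OPENWEATHERMAP_API_KEY") -> str:
    """Return the API key stored in the given environment variable.

    The default variable name is hypothetical; match it to the
    docker-compose files.
    """
    key = os.environ.get(name, "").strip()
    if not key:
        # Fail early with a clear message instead of sending an empty key
        raise RuntimeError(f"Environment variable {name} is not set")
    return key
```

Failing at startup when the key is missing tends to be easier to debug than an authentication error returned by the API later in the run.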

- Clone the repository:

  `git clone https://github.com/vitorjpc10/etl-weather_traffic_data.git`

- Move to the newly cloned repository:

  `cd etl-weather_traffic_data`

- Build and run the Docker containers:

  `docker-compose up --build`

- The data will be extracted, transformed, and loaded into the PostgreSQL database based on the logic in `scripts/main.py`.

- Once the containers are built and running, run the following command to execute the queries on both the weather and traffic tables:

  `docker exec -it etl-weather_traffic_data-db-1 psql -U postgres -c "\i queries/queries.sql"`

  Type `\q` in the terminal to quit each query; there are 2 queries in total.

- To generate the pivoted report, access the PostgreSQL database and execute the `query.sql` SQL file:

  `docker exec -it etl-gdp-of-south-american-countries-using-the-world-bank-api-db-1 psql -U postgres -c "\i query.sql"`

- Move to the Airflow directory:

  `cd airflow`

- Build and run the Docker containers:

  `docker-compose up --build`

-

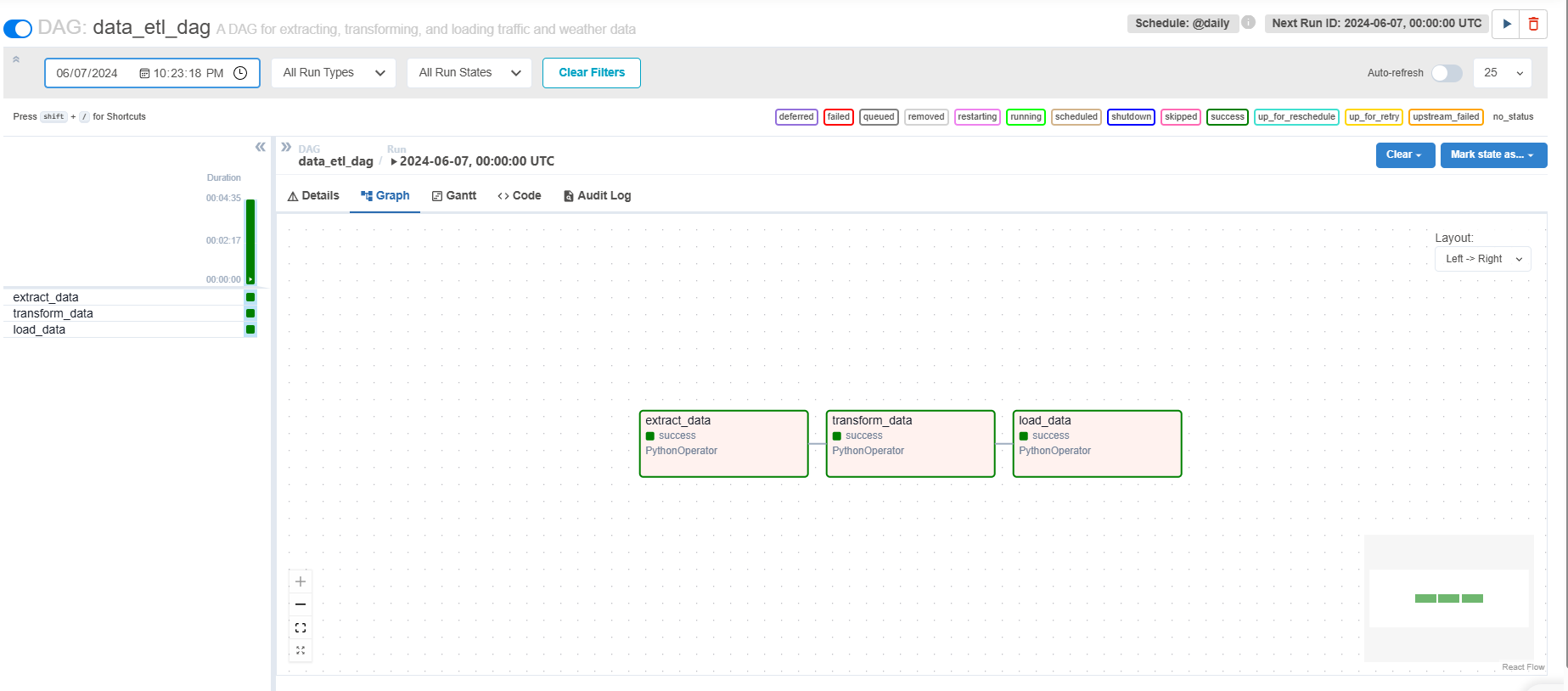

Once all containers are built access local (http://localhost:8080/) and trigger etl_dag DAG (username and password are admin by default)

- Once the DAG run completes successfully, run the following command to execute the queries on both the weather and traffic tables:

  `docker exec -it etl-weather_traffic_data-db-1 psql -U airflow -c "\i queries/queries.sql"`

  Type `\q` in the terminal to quit each query; there are 2 queries in total.

- The project uses Docker and Docker Compose for containerization and orchestration to ensure consistent development and deployment environments.

- Docker volumes are utilized to persist PostgreSQL data, ensuring that the data remains intact even if the containers are stopped or removed.

- The PostgreSQL database is selected for data storage due to its reliability, scalability, and support for SQL queries.

- Pure Python, SQL, and PySpark are used for data manipulation to ensure lightweight and efficient data processing.

- The SQL queries for generating reports are stored in separate files (e.g., `queries.sql`). This allows for easy modification of the queries and provides a convenient way to preview the results.

- To generate the reports, the SQL queries are executed within the PostgreSQL database container. This approach simplifies the process and ensures that the queries can be easily run and modified as needed.
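The same idea of keeping report queries in a separate script and running them in order can also be sketched from Python. The example below is an illustration only: `sqlite3` stands in for PostgreSQL so that it runs without a database server, and the helper `run_sql_script`, the table layouts, and the query text are all hypothetical rather than taken from the project.

```python
import sqlite3


def run_sql_script(conn, script: str) -> list:
    """Run each semicolon-terminated statement and collect the result rows.

    Naive splitting on ';' is enough for simple report queries, but it would
    break on semicolons inside string literals.
    """
    results = []
    for statement in script.split(";"):
        statement = statement.strip()
        if not statement:
            continue  # skip the empty chunk after the final ';'
        cursor = conn.execute(statement)
        results.append(cursor.fetchall())
    return results


# sqlite3 stands in for PostgreSQL; table and column names are hypothetical
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE weather (city TEXT, temperature REAL)")
conn.execute("CREATE TABLE traffic (origin TEXT, duration_min REAL)")
conn.execute("INSERT INTO weather VALUES ('Sao Paulo', 22.0)")
conn.execute("INSERT INTO traffic VALUES ('Sao Paulo', 25.0)")

# Stand-in for the contents of a queries file with two report queries
queries = """
SELECT city, temperature FROM weather;
SELECT origin, duration_min FROM traffic;
"""

weather_rows, traffic_rows = run_sql_script(conn, queries)
print(weather_rows)
print(traffic_rows)
```

Because the queries live in plain text, they can be edited and rerun without touching the Python code.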