Code and data of the paper:

Lucas E. Resck, Marcos M. Raimundo, Jorge Poco. "Exploring the Trade-off Between Model Performance and Explanation Plausibility of Text Classifiers Using Human Rationales," NAACL Findings 2024 (to appear).

Check out the paper on arXiv: https://arxiv.org/abs/2404.03098

Thank you for your interest in our paper and code.

Steps to reproduce our experiments:

- Setup: Conda environment, data download, and optional model access request.

- Experiments: raw experimental data generation with the code in experiments/.

- Figures and Tables: final results generation with the code in notebooks/.

Clone and enter the repository:

git clone https://github.com/visual-ds/plausible-nlp-explanations.git

cd plausible-nlp-explanations

Ensure anaconda or miniconda is installed. Then, create and activate the Conda environment:

cd conda

conda env create -f export.yml

conda activate plausible-nlp-explanations

In case of package inconsistencies, you may also create the environment from no_builds.yml (which contains package versions without builds) or history.yml (package installation history).

Install moopt:

cd ../..

git clone https://github.com/marcosmrai/moopt.git

cd moopt

git checkout e2ab0d7b25e8d7072899a38dd2458e65e392c9f0

python setup.py install

This is a necessary package for multi-objective optimization. For details, check its repository.
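As a quick sanity check after the installation steps above, the following minimal sketch reports whether the package can be found by the active Python environment. It assumes that the importable module name is `moopt`, which is inferred from the repository name and not confirmed here; the helper `is_installed` is our own illustrative name.

```python
import importlib.util


def is_installed(package_name: str) -> bool:
    """Return True if the import system can locate `package_name`."""
    return importlib.util.find_spec(package_name) is not None


if __name__ == "__main__":
    # Assumption: the package installs a top-level module named "moopt".
    print("moopt installed:", is_installed("moopt"))
```

Run it with the Conda environment activated, since the check only looks at the interpreter that executes it.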

We point the reader to the original dataset sources and their respective licenses:

- Download HateXplain from authors' GitHub and save it into data/hatexplain/.

- Download Movie Reviews from authors' webpage and extract it into data/movie_reviews/.

- Download Tweet Sentiment Extraction from Kaggle and extract it into data/tweet_sentiment_extraction/.

- Download HatEval dataset at the author's instantaneous data request form and extract it into data/hateval2019/. If the link is unavailable, we can share it under CC BY-NC 4.0; contact us.

data should look like this:

explainability-experiments

├── conda

├── data

│ ├── hateval2019

│ ├── hatexplain

│ ├── movie_reviews

│ └── tweet_sentiment_extraction

├── experiments

├── ...
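The layout above can be checked before launching any experiment. The sketch below only verifies that the four dataset folders exist under data/; it does not validate their contents. The function name `missing_data_dirs` and the default repository root (`.`) are illustrative assumptions.

```python
from pathlib import Path
from typing import List

# Folder names taken from the directory tree in this README.
EXPECTED_DATA_DIRS = (
    "hateval2019",
    "hatexplain",
    "movie_reviews",
    "tweet_sentiment_extraction",
)


def missing_data_dirs(repo_root: str = ".") -> List[str]:
    """Return the expected dataset folders that are absent from <repo_root>/data."""
    data_dir = Path(repo_root) / "data"
    return [name for name in EXPECTED_DATA_DIRS if not (data_dir / name).is_dir()]


if __name__ == "__main__":
    missing = missing_data_dirs()
    if missing:
        print("Missing dataset folders:", ", ".join(missing))
    else:
        print("All dataset folders are in place.")
```

Run it from the repository root, or pass the root path explicitly.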

Request access to the models fine-tuned on the HateXplain dataset at Hugging Face: DistilBERT and BERT-Mini. Make sure you have set up a Hugging Face user access token and added it to your machine by running

huggingface-cli login

We do not release HateXplain–fine-tuned models publicly because of ethical concerns. These models are only necessary for specific experiments though.

To generate the raw experimental data, you can use the following command:

python experiments.py --datasets hatexplain movie_reviews \

--explainers lime shap --models tf_idf distilbert \

--negative_rationales 2 5 --experiments_path data/experiments/ \

--performance_metrics accuracy recall \

--explainability_metrics auprc sufficiency comprehensiveness \

--random_state 42 --gpu_device 0 --batch_size 128

You can change the parameters as you wish. For example, to run the same experiment but with BERT-Mini, you can change --models tf_idf distilbert to --models bert_mini. For details, please check the help:

python experiments.py -h
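When running several variants of the command above, it may help to assemble the argument list programmatically. The following is a minimal sketch, not part of the repository: `build_command` and `BASE_OPTIONS` are hypothetical names, and the flag names and values are copied from the example command in this README. The script only prints the commands; it does not execute them.

```python
import shlex
from typing import Dict, List, Sequence


def build_command(
    script: str, options: Dict[str, Sequence[object]], python: str = "python"
) -> List[str]:
    """Turn {"flag": [values]} into [python, script, "--flag", value, ...]."""
    command = [python, script]
    for flag, values in options.items():
        command.append(f"--{flag}")
        command.extend(str(value) for value in values)
    return command


# Options shared by every run, taken from the example command above.
BASE_OPTIONS = {
    "datasets": ["hatexplain", "movie_reviews"],
    "explainers": ["lime", "shap"],
    "negative_rationales": [2, 5],
    "experiments_path": ["data/experiments/"],
    "performance_metrics": ["accuracy", "recall"],
    "explainability_metrics": ["auprc", "sufficiency", "comprehensiveness"],
    "random_state": [42],
    "gpu_device": [0],
    "batch_size": [128],
}

if __name__ == "__main__":
    # One run with TF-IDF and DistilBERT, another with BERT-Mini.
    for models in (["tf_idf", "distilbert"], ["bert_mini"]):
        options = {**BASE_OPTIONS, "models": models}
        command = build_command("experiments.py", options)
        print(" ".join(shlex.quote(part) for part in command))
```

The printed lines can be pasted into a shell, or the list returned by `build_command` can be passed to `subprocess.run` if you prefer to launch the runs from Python.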

To run the BERT-HateXplain comparison, you can use the following command:

python comparison.py --datasets hatexplain_all --explainers lime \

--models bert_attention --negative_rationales 2 \

--experiments_path data/experiments/ \

--performance_metrics accuracy recall \

--explainability_metrics auprc sufficiency comprehensiveness \

--random_state 42 --gpu_device 0 --batch_size 128 --n_jobs 1

You can also change the parameters as you wish. For details, please check the help:

python comparison.py -h

To run the out-of-distribution experiments, simply run

python out_of_distribution.py

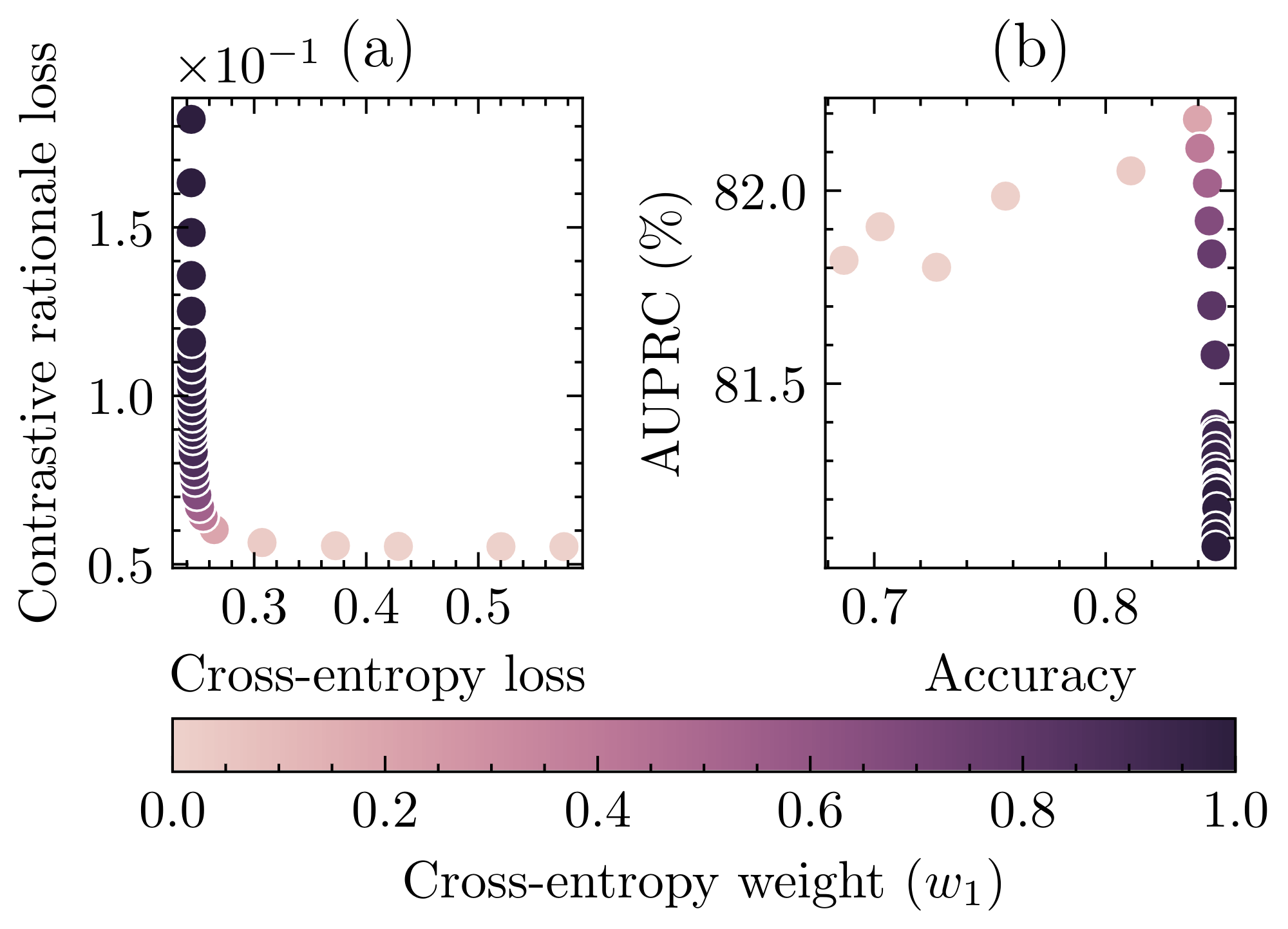

To generate the final figures and tables, run the Jupyter notebooks in the notebooks/ folder. LaTeX must be installed on your machine to generate the figures. For example, to generate Figure 3 (DistilBERT and HateXplain's trade-offs), run the notebook notebooks/complete_graphic.ipynb. For details, please check the notebooks.
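Since the figures depend on a LaTeX installation, a quick check before opening the notebooks can save a failed run. The sketch below looks for a LaTeX executable on the PATH. Which executable the notebooks actually call is an assumption here (`latex` and `pdflatex` are common candidates), and `find_latex` is an illustrative helper name.

```python
import shutil
from typing import Optional, Sequence


def find_latex(candidates: Sequence[str] = ("latex", "pdflatex")) -> Optional[str]:
    """Return the path of the first candidate executable found on PATH, or None."""
    for name in candidates:
        path = shutil.which(name)
        if path is not None:
            return path
    return None


if __name__ == "__main__":
    location = find_latex()
    if location is None:
        print("No LaTeX executable found on PATH.")
    else:
        print("LaTeX found at:", location)
```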

A new dataset should be a new Python module at experiments/datasets/ following the Dataset base class at experiments/dataset.py.

Then, it should be easy to add it to experiments.py.

The same applies to new models and explainers; metrics are handled similarly in metrics.py.
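To illustrate the general pattern of subclassing a base class, here is a purely hypothetical sketch. It does not reproduce the actual interface in experiments/dataset.py: the class names `DatasetSketch` and `ToyDataset`, the `load` method, and its return format are all assumptions made for illustration. Please check the real base class for the methods it requires.

```python
from abc import ABC, abstractmethod
from typing import List, Tuple


class DatasetSketch(ABC):
    """Hypothetical stand-in for a dataset base class (not the real interface)."""

    @abstractmethod
    def load(self) -> Tuple[List[str], List[int], List[List[int]]]:
        """Return texts, labels, and one binary rationale mask per text."""


class ToyDataset(DatasetSketch):
    """A tiny in-memory dataset with one rationale flag per whitespace token."""

    def load(self) -> Tuple[List[str], List[int], List[List[int]]]:
        texts = ["great movie", "awful plot"]
        labels = [1, 0]
        # 1 marks a token that a human annotator highlighted as a rationale.
        rationales = [[1, 0], [1, 0]]
        return texts, labels, rationales


if __name__ == "__main__":
    texts, labels, rationales = ToyDataset().load()
    for text, label, mask in zip(texts, labels, rationales):
        print(label, list(zip(text.split(), mask)))
```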

Feel free to open issues and pull requests.

We release this code under the MIT License.

@inproceedings{resck_exploring_2024,

address = {Mexico City, Mexico},

title = {Exploring the {Trade}-off {Between} {Model} {Performance} and {Explanation} {Plausibility} of {Text} {Classifiers} {Using} {Human} {Rationales}},

copyright = {Copyright © 2024 Association for Computational Linguistics (ACL). All Rights Reserved. Licensed under the CC BY 4.0 license (https://creativecommons.org/licenses/by/4.0/).},

url = {https://arxiv.org/abs/2404.03098},

booktitle = {Findings of the {Association} for {Computational} {Linguistics}: {NAACL} 2024},

publisher = {Association for Computational Linguistics},

author = {Resck, Lucas E. and Raimundo, Marcos M. and Poco, Jorge},

month = jun,

year = {2024},

note = {To appear},

}