Numerai learning

This repo contains materials for getting started on Numerai's ML problems. Topics include:

- Convex optimisation

- ML modelling of the Numerai tournament

- Quant Club notes

Resources

Convex optimisation for portfolio allocation

- IPython notebook from Boyd's Stanford short course

- Slides on the application of convex optimisation.

- Linear algebra: quadratic forms

Convex optimisation

Portfolio maximisation formulation

Read through

- the IPython notebook from Boyd's Stanford short course

- slides on the application of convex optimisation.

The following is almost an exact rewrite of the notebook.

We formulate the portfolio allocation across a set of assets as a convex optimisation problem where we want to maximise return and minimise risk.

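We have a fixed budget normalised to $\mathbf{1}^T w = 1$, where $w_i$ is the fraction of the budget allocated to asset $i$ and $w_i < 0$ denotes a short position. Writing the long and short parts as $w_+ = \max(w, 0)$ and $w_- = \max(-w, 0)$ (elementwise), so that $w = w_+ - w_-$, the leverage is

$$\text{leverage} = \|w\|_1 = \mathbf{1}^T w_+ + \mathbf{1}^T w_-$$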

We can short sell and use the proceeds to go long, so the gross position (the leverage) can exceed our total budget.
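A quick numeric check of the budget and leverage definitions, using a made-up three-asset allocation (the numbers are illustrative and chosen to be exact in floating point). Here the long part is $w_+ = \max(w, 0)$ and the short part is $w_- = \max(-w, 0)$:

```python
import numpy as np

# Hypothetical allocation: 75% and 50% long in two assets, 25% short in a third
w = np.array([0.75, 0.5, -0.25])

w_plus = np.maximum(w, 0)    # long part w_+
w_minus = np.maximum(-w, 0)  # short part w_- (stored as positive amounts)

budget = w.sum()                         # 1^T w, equals 1 for a fully invested portfolio
leverage = w_plus.sum() + w_minus.sum()  # 1^T w_+ + 1^T w_-, equals ||w||_1

print(f"budget={budget}, leverage={leverage}")
```

Shorting 25% of the budget funds an extra 25% of long exposure, so the gross position is 1.5 times the budget.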

Asset return

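Now we define the return of asset $i$ over a period as $r_i = (p_i^{\text{new}} - p_i)/p_i$, and model the vector of asset returns $r \in \mathbf{R}^n$ as random with mean $\mathbf{E}[r] = \mu$ and covariance $\mathbf{E}[(r - \mu)(r - \mu)^T] = \Sigma$.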

Portfolio Return

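We define the portfolio return as $R = r^T w$, with expected value $\mathbf{E}[R] = \mu^T w$ and variance (risk) $\mathbf{var}(R) = w^T \Sigma w$.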
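A sketch of estimating $\mu$ and $\Sigma$ from a price history and then evaluating the portfolio's expected return and risk. The prices are a synthetic random walk and the allocation is arbitrary; with real data you would substitute actual price series.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical daily prices for n = 4 assets over 250 days (synthetic random walk)
prices = 100 * np.cumprod(1 + 0.01 * rng.standard_normal((250, 4)), axis=0)

# Asset returns r_i = (p_new - p) / p for each day
returns = (prices[1:] - prices[:-1]) / prices[:-1]

mu = returns.mean(axis=0)              # estimate of E[r]
Sigma = np.cov(returns, rowvar=False)  # estimate of cov(r), shape (4, 4)

w = np.array([0.4, 0.3, 0.2, 0.1])     # allocation with 1^T w = 1

expected_return = mu @ w               # E[R] = mu^T w
variance = w @ Sigma @ w               # var(R) = w^T Sigma w
print(expected_return, np.sqrt(variance))
```

Because $\Sigma$ here is the sample covariance, $w^T \Sigma w$ equals the sample variance of the daily portfolio returns `returns @ w`.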

Objective

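We want to maximise return while minimising risk. We can set this up as the classical Markowitz portfolio optimisation problem

$$\begin{aligned} \text{maximise} \quad & \mu^T w - \gamma\, w^T \Sigma w \\ \text{subject to} \quad & \mathbf{1}^T w = 1, \quad w \in \mathcal{W} \end{aligned}$$

where $\gamma > 0$ is the risk-aversion parameter and $\mathcal{W}$ is the set of allowed portfolios (e.g. with a leverage limit).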

Risk vs Return trade-off curve

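Here we sweep the risk-aversion parameter $\gamma$ and plot the resulting risk (standard deviation $\sqrt{w^T \Sigma w}$) against the expected return $\mu^T w$.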
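The code later in this section solves the problem with CVXPY. As a dependency-free sketch, if we keep only the budget constraint $\mathbf{1}^T w = 1$ (dropping any leverage limit, an assumption made here so the problem has a closed-form solution), the optimality conditions give $w = \Sigma^{-1}(\mu - \nu \mathbf{1})/(2\gamma)$, with the multiplier $\nu$ chosen so the weights sum to one. Sweeping $\gamma$ on synthetic data then traces the risk-return curve:

```python
import numpy as np


def markowitz_budget_only(mu, Sigma, gamma):
    """Maximise mu^T w - gamma * w^T Sigma w subject to 1^T w = 1 (no leverage limit)."""
    ones = np.ones_like(mu)
    Sinv_mu = np.linalg.solve(Sigma, mu)
    Sinv_1 = np.linalg.solve(Sigma, ones)
    # Lagrange multiplier of the budget constraint, chosen so that 1^T w = 1
    nu = (ones @ Sinv_mu - 2 * gamma) / (ones @ Sinv_1)
    return (Sinv_mu - nu * Sinv_1) / (2 * gamma)


rng = np.random.default_rng(1)
n = 10
mu = np.abs(rng.standard_normal(n))     # synthetic mean returns
A = rng.standard_normal((n, n))
Sigma = A.T @ A + 0.1 * np.eye(n)       # synthetic positive definite covariance

gammas = np.logspace(-2, 2, 20)         # risk-aversion values to sweep
curve = []
for gamma in gammas:
    w = markowitz_budget_only(mu, Sigma, gamma)
    curve.append((np.sqrt(w @ Sigma @ w), mu @ w))  # (risk as std dev, expected return)

for risk, ret in curve[::5]:
    print(f"risk={risk:.3f}  return={ret:.3f}")
```

As $\gamma$ grows, both risk and expected return fall monotonically towards the minimum-variance portfolio.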

Choosing the optimal risk-return trade-off

We approximate the portfolio return by a normal distribution, $R \sim \mathcal{N}(\mu^T w, w^T \Sigma w)$, and plot its distribution for different risk-aversion values.
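Under this normal approximation, the probability of a loss is $P(R < 0) = \Phi(-\mu^T w / \sqrt{w^T \Sigma w})$. A standard-library sketch, with two hypothetical (return, risk) pairs standing in for a low and a high risk-aversion solution:

```python
from statistics import NormalDist


def prob_of_loss(expected_return, risk_std):
    """P(R < 0) when the portfolio return R is approximated as N(expected_return, risk_std^2)."""
    return NormalDist(mu=expected_return, sigma=risk_std).cdf(0.0)


# Hypothetical (expected return, std dev) pairs for two risk-aversion settings
for label, ret, std in [("low gamma", 0.10, 0.20), ("high gamma", 0.05, 0.05)]:
    print(label, round(prob_of_loss(ret, std), 3))
```

The riskier portfolio has the higher expected return but also a noticeably higher chance of losing money.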

Other constraints

- Max leverage constraint $\|w\|_1 \leq L^{\max}$. As we increase leverage, we can get higher returns for the same risk (measured by standard deviation), and higher leverage also allows us to take on more risk.

- Set max leverage and max risk as constraints and maximise return. Here we show optimal asset allocations for different maximum leverage values at a fixed max risk.

- Market neutral constraint. We want the portfolio returns to be uncorrelated with the market returns:

$m^T \Sigma w = 0$, where $m_i$ is the capitalisation weight of asset $i$ and $M = \mathbf{m}^T \mathbf{r}$ is the market return. That is, $m_i$ is the fraction of the total market cap that asset $i$ holds (think of what fraction of the S&P 500 Apple makes up). Since $m^T \Sigma w = \mathbf{cov}(M, R)$, setting it to zero makes the portfolio uncorrelated with the market. Geometrically, it is $\Sigma w$ (not $w$ itself) that is orthogonal to the market-cap fraction vector: $m \perp \Sigma w \iff m^T (\Sigma w) = 0$.

Variations

- Fix a minimum return, $\mu^T w \geq R^{\min}$, and minimise risk $w^T \Sigma w$.

- Include broker costs for short positions, $\mathbf{s}^T w_-$.

- Include a transaction fee for moving from the current portfolio as a penalty, $\kappa^T |w - w^{\text{cur}}|^{\eta}$ with $\kappa \geq 0$. Usual values are $\eta = 1, 1.5, 2$ (the penalty is convex for $\eta \geq 1$).

- Factor covariance model, explained below.
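The cost terms are simple to evaluate for a given allocation. A minimal NumPy sketch with made-up fee vectors `s` and `kappa` (all numbers hypothetical), evaluating the transaction penalty for the usual exponents:

```python
import numpy as np

w_cur = np.array([0.5, 0.5, 0.0])          # current portfolio (hypothetical)
w = np.array([0.75, 0.5, -0.25])           # proposed portfolio, 1^T w = 1
s = np.array([0.01, 0.01, 0.02])           # hypothetical shorting fee per unit short
kappa = np.array([0.001, 0.001, 0.001])    # hypothetical transaction fee rates

w_minus = np.maximum(-w, 0)
short_cost = s @ w_minus                   # s^T w_-


def transaction_cost(w, w_cur, kappa, eta):
    """kappa^T |w - w_cur|^eta, convex in w for eta >= 1."""
    return kappa @ np.abs(w - w_cur) ** eta


for eta in (1, 1.5, 2):
    print(eta, transaction_cost(w, w_cur, kappa, eta))
print("short cost", short_cost)
```

Larger $\eta$ penalises small trades less and large trades more, relative to $\eta = 1$.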

Variation - Factor Covariance model

In a factor covariance model, we assume

- Each of the $n$ stocks belongs to $k$ factors ($k \ll n$, e.g. $k \approx 10$) with different proportions (a linear weighting). A factor can be thought of as an industry sector (tech vs energy vs finance, etc.).

- Individual stocks are not directly correlated with other stocks, only indirectly through their factors.

We can thus factorise the covariance matrix as

$$\Sigma = F \tilde\Sigma F^T + D$$

where

- $F_{[n\times k]}$ is the factor loading matrix, and $F_{ij}$ is the loading of asset $i$ on factor $j$,

- $D_{[n \times n]}$ is a diagonal matrix with $D_{ii} > 0$, the individual risk of each stock independent of the factor covariance, and

- $\tilde\Sigma_{[k \times k]} \succ 0$ is the factor covariance matrix (positive definite).

Portfolio factor exposure:

$$f = F^T w \in \mathbf{R}^k$$
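A small NumPy check that the factor form of the risk matches the full quadratic form, $w^T \Sigma w = f^T \tilde\Sigma f + w^T D w$ with $f = F^T w$. The sizes and random matrices are illustrative only; the point is that the right-hand side never needs the dense $n \times n$ matrix.

```python
import numpy as np

rng = np.random.default_rng(3)
n, k = 200, 5                                  # assets and factors (small, for illustration)
F = rng.standard_normal((n, k))                # factor loadings
A = rng.standard_normal((k, k))
Sigma_tilde = A.T @ A                          # factor covariance (positive semidefinite)
d = rng.uniform(0, 0.9, n)                     # idiosyncratic variances, the diagonal of D

w = rng.standard_normal(n)                     # any allocation works for this identity

Sigma = F @ Sigma_tilde @ F.T + np.diag(d)     # full n x n covariance
f = F.T @ w                                    # portfolio factor exposure, length k

risk_full = w @ Sigma @ w                             # O(n^2) with the dense matrix
risk_factor = f @ Sigma_tilde @ f + np.sum(d * w**2)  # O(nk) overall
print(risk_full, risk_factor)
```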

Formulation

The computational complexity of solving the problem falls from $O(n^3)$ to $O(nk^2)$.

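To take advantage of this, we keep the exposure $f$ as an extra variable and define the problem as

$$\begin{aligned} \text{maximise} \quad & \mu^T w - \gamma \left( f^T \tilde\Sigma f + w^T D w \right) \\ \text{subject to} \quad & \mathbf{1}^T w = 1, \quad f = F^T w, \quad \|w\|_1 \leq L^{\max} \end{aligned}$$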

Python code for standard and factor portfolio optimisation

```python
import cvxpy as cp
import numpy as np
import scipy.sparse as sp

n = 3000  # number of stocks / assets
m = 50  # number of factors (k in the text)
np.random.seed(1)

mu = np.abs(np.random.randn(n, 1))  # average return = (p_new - p)/p over days
Sigma_tilde = np.random.randn(m, m)  # factor covariance matrix (Sigma tilde in the text)
Sigma_tilde = Sigma_tilde.T.dot(Sigma_tilde)  # A^T A is positive semidefinite
D = sp.diags(np.random.uniform(0, 0.9, size=n))  # stock idiosyncratic risk (independent of factors)
F = np.random.randn(n, m)  # factor loading matrix: how much each stock relates to each factor

#######################################
# Standard model portfolio optimisation
#######################################
w = cp.Variable(n)  # portfolio allocation
gamma = cp.Parameter(nonneg=True)  # risk-aversion parameter
Lmax = cp.Parameter(nonneg=True)  # maximum leverage

Sigma = F @ Sigma_tilde @ F.T + D.toarray()  # [n*n] = [n*k] x [k*k] x [k*n] + [n*n]
preturn = mu.T @ w  # [1*1] = [1*n] x [n*1]
risk = cp.quad_form(w, Sigma)  # w^T Sigma w; quad_form lets CVXPY recognise the convex quadratic

prob_std = cp.Problem(
    objective=cp.Maximize(preturn - gamma * risk),
    constraints=[cp.sum(w) == 1, cp.norm(w, 1) <= Lmax],  # constraints passed as a list
)

# Solve it: takes ~15 minutes
gamma.value = 0.1
Lmax.value = 2
prob_std.solve(verbose=True)
print(f"Return: {preturn.value[0]}, Risk: {np.sqrt(risk.value)}")

#####################################
# Factor model portfolio optimisation
#####################################
w = cp.Variable(n)
f = cp.Variable(m)  # make the exposure a variable and constrain it to F^T w = f
gamma, Lmax = cp.Parameter(nonneg=True), cp.Parameter(nonneg=True)

retn_factor = mu.T @ w
# f^T Sigma_tilde f + w^T D w, using w^T D w = sum_i D_ii * w_i^2 for diagonal D
risk_factor = cp.quad_form(f, Sigma_tilde) + cp.sum_squares(cp.multiply(np.sqrt(D.diagonal()), w))

prob_factor = cp.Problem(
    objective=cp.Maximize(retn_factor - gamma * risk_factor),
    constraints=[
        cp.sum(w) == 1,
        cp.norm(w, 1) <= Lmax,
        F.T @ w == f,  # NOTE: this is the new constraint
    ],
)

# Solve it: takes ~1.2 seconds
gamma.value = 0.1
Lmax.value = 2
prob_factor.solve(verbose=True)
print(f"Return: {retn_factor.value[0]}, Risk: {np.sqrt(risk_factor.value)}")
print(prob_factor.solver_stats.solve_time)
```

Numerai modelling

Learnings from loading data

(Updated: 21st March 2023)

- There are several versions of the data, the most recent being v4.1.

- There are three types of datasets:

| Data split | Rows | Features | Eras | Notes |
| ---------- | ---- | -------- | ---- | ----- |
| Training | 2.4M | ~1600 | [0, 574] | Pandas df: 7.5GB, forums contain xval code |
| Validation | 2.4M | ~1600 | [575, 1054] | Pandas df: 7.5GB, this is the test data |
| Live | 5k | ~1600 | current week | Most recent week's data to predict on |

- Each training or validation row is indexed by (era, stock_id). Eras are incremented every week.

features.json file

Contains

targets: These are simply the target column names. The `target` column points to `target_nomi_v4_20`. We can train on multiple targets and build a meta model to predict the final target.

| Target type | Number of targets |
| ----------- | ----------------- |
| All | 28 |
| 20 day | 14 |
| 60 day | 14 |

feature_sets: Provides preset features that we can use to quickly bootstrap modelling on a smaller feature set or previous versions' feature sets.

| Feature set | Count |
|---|---|
| small | 32 |
| medium | 641 |
| v2_equivalent_features | 304 |
| v3_equivalent_features | 1040 |
| fncv3_features | 416 |

feature_stats: Contains useful correlation information to rank features. We can load the feature stats into a pandas dataframe with `pd.DataFrame(features_json["feature_stats"])`. Example output:

| statistic | feature_honoured_observational_balaamite | feature_polaroid_vadose_quinze | ... |
|---|---|---|---|
| legacy_uniqueness | nan | nan | ... |
| spearman_corr_w_target_nomi_20_mean | -0.000868326 | 0.000162301 | ... |
| spearman_corr_w_target_nomi_20_sharpe | -0.084973 | 0.0161156 | ... |
| spearman_corr_w_target_nomi_20_reversals | 7.04619e-05 | 8.11128e-05 | ... |
| spearman_corr_w_target_nomi_20_autocorr | 0.0326726 | -0.0128228 | ... |
| spearman_corr_w_target_nomi_20_arl | 3.92019 | 3.55319 | ... |
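A small helper for pulling a preset feature set and the target names out of `features.json`. It assumes the layout described above (`feature_sets` maps a set name to a list of feature names, and `targets` is a list of column names); the function names are hypothetical, and the commented parquet call additionally assumes the training file has an `era` column.

```python
import json


def load_features_json(path):
    """Parse features.json (e.g. data/v4.1/features.json from the download script)."""
    with open(path) as fh:
        return json.load(fh)


def select_columns(features_json, feature_set="small"):
    """Return (feature names, target names) for one preset feature set.

    Assumes "feature_sets" maps a set name to a list of feature names and
    "targets" is a list of target column names.
    """
    features = list(features_json["feature_sets"][feature_set])
    targets = list(features_json["targets"])
    return features, targets


# Usage with the real file (not run here; reading parquet needs pandas + pyarrow):
# features_json = load_features_json("data/v4.1/features.json")
# features, targets = select_columns(features_json, "medium")
# train = pd.read_parquet("data/v4.1/train_int8.parquet", columns=["era"] + features + targets)
# stats = pd.DataFrame(features_json["feature_stats"])  # rows: statistics, columns: features
```

Reading only the selected columns keeps memory well below the ~7.5GB of the full training dataframe.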

Below is a table of the overlap between the top 100 features according to different statistics and the preset feature sets provided by Numerai.

| statistic | feature set | overlap | feature set size | Percent overlap with fset |
| --------------------------------------- | ---------------------- | ------- | ---------------- | ------------------------- |
| corr_w_target_nomi_20_mean | small | 9 | 32 | 28% |
| corr_w_target_nomi_20_mean | medium | 42 | 641 | 7% |
| corr_w_target_nomi_20_mean | v2_equivalent_features | 40 | 304 | 13% |
| * | | | | |
| corr_w_target_nomi_20_autocorr | small | 3 | 32 | 9% |
| corr_w_target_nomi_20_autocorr | medium | 33 | 641 | 5% |
| corr_w_target_nomi_20_autocorr | v2_equivalent_features | 16 | 304 | 5% |
| * | | | | |
| corr_w_target_nomi_20_arl | small | 1 | 32 | 3% |
| corr_w_target_nomi_20_arl | medium | 46 | 641 | 7% |
| corr_w_target_nomi_20_arl | v2_equivalent_features | 8 | 304 | 3% |

The overlap for the Sharpe statistic (`corr_sharpe`) is similar to `corr_mean`, while `corr_reversals` has a small overlap, similar to `corr_arl`.
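The overlap numbers in the table can be computed along these lines. This is a sketch: it assumes `feature_stats` is the parsed `features_json["feature_stats"]` dict (feature name mapped to a dict of statistics), and it ranks by absolute value, which is an assumption since the notes don't say whether signed or absolute values were used. The percentage is relative to the feature set size, as in the last column of the table.

```python
import math


def top_features(feature_stats, statistic, top_n=100):
    """Names of the top_n features ranked by |statistic|, skipping missing/NaN values.

    feature_stats: {feature_name: {statistic_name: value}}, i.e. features_json["feature_stats"].
    Ranking by absolute value is an assumption; drop abs() to rank by the signed value.
    """
    scored = []
    for name, stats in feature_stats.items():
        value = stats.get(statistic)
        if value is None or math.isnan(value):
            continue
        scored.append((abs(value), name))
    scored.sort(reverse=True)
    return {name for _, name in scored[:top_n]}


def overlap_with_set(top, feature_set):
    """Return (overlap count, overlap as a percentage of the feature set size)."""
    overlap = len(top & set(feature_set))
    return overlap, 100 * overlap / len(feature_set)


# Usage (not run here):
# stats = features_json["feature_stats"]
# top = top_features(stats, "spearman_corr_w_target_nomi_20_mean")
# overlap_with_set(top, features_json["feature_sets"]["small"])
```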

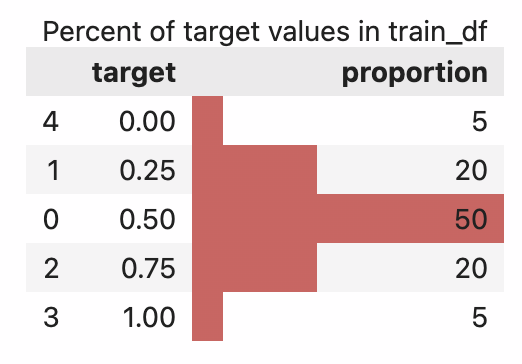

Target distribution

See the GitHub gist for generating the plot below.

Downloading data

See the script `download_numerai_dataset.py` in this folder.

```python
from pathlib import Path

from numerapi import NumerAPI

napi = NumerAPI()  # an unauthenticated client is enough for downloading data


def download_data(cur_round=None, version="v4.1"):
    """Downloads training, validation and tournament-live data.

    It stores them in the following folder structure (live data is kept per
    round, e.g. round 444):

        data/v4.1/train_int8.parquet
        data/v4.1/validation_int8.parquet
        data/444/v4.1/live_int8.parquet
        data/v4.1/features.json
    """
    cur_round = cur_round or napi.get_current_round()

    fl_to_downpath = {
        f"{version}/live_int8.parquet": f"data/{cur_round}/{version}/live_int8.parquet",
        f"{version}/train_int8.parquet": f"data/{version}/train_int8.parquet",
        f"{version}/validation_int8.parquet": f"data/{version}/validation_int8.parquet",
        f"{version}/features.json": f"data/{version}/features.json",
    }

    for filename, dest_path in fl_to_downpath.items():
        Path(dest_path).parent.mkdir(exist_ok=True, parents=True)  # create target folder
        napi.download_dataset(filename=filename, dest_path=dest_path)

    print(f"Current round: \t{cur_round}\n")
    downd_fls = "\n\t".join(str(pt) for pt in Path("data").rglob("*.*"))
    print(f"Downloaded files: \n\t{downd_fls}")

    return cur_round, fl_to_downpath
```

Model metrics

Cross validation

We need custom cross validation code because

- we want an entire era to act as a group that either appears in training or in the validation split

- we only want to use past eras as training and never train on future eras and test on past eras.

See mdo's post for an implementation.
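The two requirements above can be sketched as a walk-forward splitter over eras. This is not mdo's implementation; it is a minimal illustration that assumes era labels sort chronologically (integers or zero-padded strings such as "0001"). The optional `gap` parameter, an embargo of whole eras between train and validation, is an extra assumption, motivated by the 20- and 60-day targets likely overlapping neighbouring weekly eras.

```python
import numpy as np


def era_walk_forward_splits(eras, n_splits=4, gap=0):
    """Yield (train_idx, val_idx) row indices for walk-forward validation over eras.

    - Whole eras stay together: an era is either in training or in validation.
    - All training eras come before all validation eras.
    - `gap` (an assumption, not from the notes) drops that many eras at the end of
      the training window as an embargo before validation.
    """
    eras = np.asarray(eras)
    unique_eras = np.unique(eras)  # sorted era labels
    blocks = np.array_split(unique_eras, n_splits + 1)
    for i in range(1, n_splits + 1):
        train_eras = np.concatenate(blocks[:i])  # expanding window of past eras
        if gap > 0:
            train_eras = train_eras[:-gap]
        val_eras = blocks[i]
        train_idx = np.flatnonzero(np.isin(eras, train_eras))
        val_idx = np.flatnonzero(np.isin(eras, val_eras))
        yield train_idx, val_idx
```

Each split trains on an expanding window of past eras and validates on the next block, so the model is never trained on eras that come after the ones it is scored on.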

Quant club notes

22 Mar 2023

Questions

Normalisation

The weighted-average signal is passed to the optimiser and no neutralisation is done on it; the optimiser's constraints enforce neutrality. Even if the signal is neutral, the portfolio may not be, so constraints need to be specified at the portfolio level.

New targets?

New targets are going to be shared. Scoring is moving away from rank correlation to Pearson correlation, with the predictions gaussianised first. With the new metric we can get a perfect correlation of 1.
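A minimal sketch of the idea described above: rank the predictions, map the ranks to standard-normal quantiles (gaussianise), then take the Pearson correlation with the target. This only illustrates the concept and is not Numerai's official scoring function, which includes further steps. Because only the ranks of the predictions enter, any monotone transform of the predictions gives the same score.

```python
from statistics import NormalDist

import numpy as np


def gaussianised_pearson(preds, target):
    """Pearson correlation between rank-gaussianised predictions and the target.

    Illustrative sketch only; ties in preds are broken arbitrarily by argsort.
    """
    preds = np.asarray(preds, dtype=float)
    target = np.asarray(target, dtype=float)
    n = len(preds)
    ranks = preds.argsort().argsort() + 1      # ranks 1..n
    uniform = (ranks - 0.5) / n                # strictly inside (0, 1)
    inv_cdf = NormalDist().inv_cdf             # standard-normal quantile function
    gauss = np.array([inv_cdf(u) for u in uniform])
    return np.corrcoef(gauss, target)[0, 1]
```

If the target itself has the shape of gaussianised ranks and the predictions order the rows correctly, this score reaches exactly 1.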

New targets closer to TC?

- Some metrics are easier to compute now that you have access to the meta model score.

Hitting max capacity with higher AUMs

- Any update on max capacity?

- Max capacity decreases with increasing AUM. Gradual and smooth shift. No clear fall off.

- Daily trading can help here.

- Hoping to get to 1B USD AUM by the end of the year. There is still headroom.

Main talk: LightGBM settings

- Some interesting LightGBM settings. Some of them have equivalents in XGBoost, but not all.

- Monotone constraints: a list with one entry per feature, each 1, -1 or 0 (e.g. `[1, -1, 0]`). It enforces that the prediction is monotonically increasing (1) or decreasing (-1) in that feature, or leaves it unconstrained (0). This is especially useful for composite features. There are more advanced approaches in recent papers, but they are similar. mdo's experience: they don't necessarily help raw model performance, but they can help with Sharpe and act as TC regularisation.

- Linear tree: fits a linear model in each leaf instead of a constant. This makes the model more linear at the extremes, where a standard tree's output flattens out. There are several lambda parameters to regularise these linear models. Available in LightGBM but not in XGBoost.
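A sketch of how the two LightGBM settings above are passed as parameters. `monotone_constraints`, `linear_tree` and `linear_lambda` are LightGBM parameter names; the helper `monotone_constraint_list`, the placeholder feature names and the numeric values are hypothetical. The two settings are shown in separate parameter dicts, and the training call is commented out so the snippet runs without LightGBM installed.

```python
def monotone_constraint_list(feature_names, directions):
    """Build a LightGBM `monotone_constraints` list with one entry per feature.

    `directions` is a hypothetical {feature_name: 1 or -1} mapping
    (1 = increasing, -1 = decreasing); features not listed get 0 (unconstrained).
    """
    for name, direction in directions.items():
        if direction not in (-1, 1):
            raise ValueError(f"direction for {name} must be 1 or -1, got {direction}")
    return [directions.get(name, 0) for name in feature_names]


feature_names = ["feature_a", "feature_b", "feature_c"]  # placeholder names

# Monotone constraints: increasing in feature_a, decreasing in feature_b
params_monotone = {
    "objective": "regression",
    "monotone_constraints": monotone_constraint_list(
        feature_names, {"feature_a": 1, "feature_b": -1}
    ),
}

# Linear trees: a linear model in each leaf, regularised by linear_lambda
params_linear = {
    "objective": "regression",
    "linear_tree": True,
    "linear_lambda": 1.0,  # arbitrary value for illustration
}

# With LightGBM installed (not run here):
# import lightgbm as lgb
# model = lgb.train(params_monotone, lgb.Dataset(X, label=y, feature_name=feature_names))
```

Building the list from a name-to-direction mapping avoids silently misaligning constraints when the feature order changes.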

Main talk: XGB as a feature selection & weighting (OHE)

- XGBoost as discretisation and weighting of features.

- Imagine a depth-5 binary decision tree classifier. It splits the input space into $2^5 = 32$ bins (leaves). Mixed bins with roughly equal class counts predict about 0.5.

- Pure bins are different, e.g. in the Numerai dataset: a leaf with a very high weight but very few data points might not generalise.

- With ensembling, you learn a series of features and feature subspaces, and each tree gives a one-hot-encoded output over its leaves.

- XGBoost lets you dump the model with the `get_dump` function, so you can convert the model's prediction logic into Python code and compute the leaves yourself. At the end of XGBoost you can think of it like a DNN: it applies a weighting to the input space and creates an embedding.

- XGBoost has an `apply` function which returns the index of the leaf node each row lands in. If the input is n rows × p features and you fit T trees, the output is n × T, where each entry is a leaf index in that tree. From this you can build a one-hot encoding of size n × (T × leaves per tree); with T = 100 trees of depth 5 that is 100 × $2^5$ = 3200 columns.

- Multiplying the OHE features by the leaf weights (and adding the base score) gives the prediction of the XGBoost model.

- The plot of feature values shows the average parameter value for each subspace. The spikes could be useful subspaces or could be noise.

- Most average parameter values are close to 0: the instances are highly non-separable, so the impact is almost 0 for most leaves.

- On the final OHE features you could fit a linear regression.

- With L1 regularisation, leaves with small weights could be shrunk to zero.

- Shrink leaves with few data points more than leaves with many data points, e.g. with a penalty proportional to 1/sqrt(n) (like a confidence interval margin). This shrinks out the high-variance leaves.

- The 1/sqrt(n)-weighted shrinkage was better correlated with the unshrunk XGBoost output than unweighted L1.

- Unweighted L1 improved Sharpe by decreasing variance, but the expected gain dropped sharply. The weighted L1 had a much more modest drop in expected gain while retaining most of the variance reduction.
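The OHE view and the 1/sqrt(n) shrinkage can be sketched in NumPy without XGBoost. `leaf_idx` is a synthetic stand-in for the output of XGBoost's `apply` (real node ids would first need remapping to 0..31 within each tree, and real trees need not be full), and `leaf_value` and `base_score` stand in for the fitted leaf weights and base score. Rather than refitting a weighted Lasso on the OHE features as discussed in the talk, this simplification applies the weighted L1 penalty directly to the existing leaf weights by soft-thresholding with threshold `lam / sqrt(n_leaf)`; `lam` is arbitrary.

```python
import numpy as np

rng = np.random.default_rng(4)
n_rows, n_trees, leaves_per_tree = 500, 100, 2 ** 5  # depth-5 trees -> 32 leaves each

# Synthetic stand-ins for a fitted model:
#   leaf_idx[i, t]   = leaf (0..31) that row i falls into in tree t, like the output of `apply`
#   leaf_value[t, j] = output weight of leaf j in tree t
leaf_idx = rng.integers(0, leaves_per_tree, size=(n_rows, n_trees))
leaf_value = 0.01 * rng.standard_normal((n_trees, leaves_per_tree))
base_score = 0.5

# One-hot encoding: n_rows x (n_trees * leaves_per_tree) = 500 x 3200
ohe = np.zeros((n_rows, n_trees * leaves_per_tree))
cols = np.arange(n_trees) * leaves_per_tree + leaf_idx  # global column of each (row, tree) leaf
ohe[np.arange(n_rows)[:, None], cols] = 1.0

# Prediction = base score + OHE features . flattened leaf weights
weights = leaf_value.ravel()  # index t * leaves_per_tree + j matches the OHE columns
pred = base_score + ohe @ weights

# Weighted L1 shrinkage: soft-threshold each leaf weight by lam / sqrt(n_leaf),
# so leaves with few rows (high variance) are shrunk harder than well-populated ones.
lam = 0.01  # arbitrary penalty strength
n_leaf = ohe.sum(axis=0)  # number of rows in each leaf
threshold = lam / np.sqrt(np.maximum(n_leaf, 1))
shrunk = np.sign(weights) * np.maximum(np.abs(weights) - threshold, 0.0)
pred_shrunk = base_score + ohe @ shrunk

print(np.corrcoef(pred, pred_shrunk)[0, 1])
```

The final `print` gives the correlation between the shrunk and unshrunk outputs, the comparison the notes use to rank the two shrinkage schemes.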