Similar to previous "Automated Lab Deployment Scripts" (such as here, here, here, here and here), this script makes it very easy for anyone to deploy a vSphere 7.0 Update 2 environment set up with vSphere with Tanzu and the new NSX Advanced Load Balancer (NSX ALB) in a Nested Lab environment for learning and educational purposes. All required VMware components (ESXi, vCenter Server and NSX ALB VMs) are automatically deployed and configured to allow for the enablement of vSphere with Tanzu. For more details about vSphere with Tanzu, please refer to the official VMware documentation here.

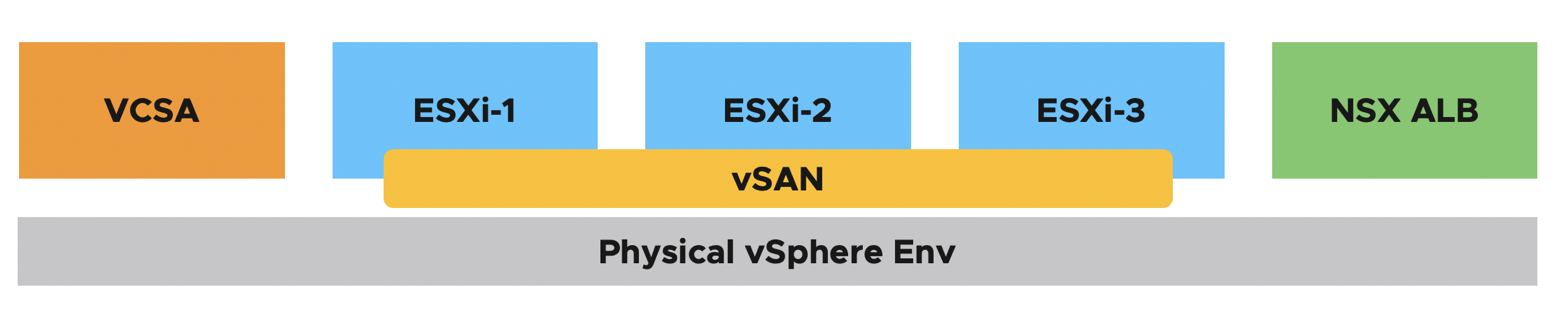

Below is a diagram of what is deployed as part of the solution. You simply need an existing vSphere environment that is managed by vCenter Server and has enough resources (CPU, Memory and Storage) to deploy this "Nested" lab. For workload management enablement (a post-deployment operation), please have a look at the Sample Execution section below.

You are now ready to get your K8s on! 😁

- 04/05/2021

- Initial Release

- vCenter Server running vSphere 6.7 or later
    - If your physical storage is vSAN, please ensure you've applied the following setting as mentioned here

- ESXi Networking
    - Enable either MAC Learning or Promiscuous Mode on your physical ESXi host networking to ensure proper network connectivity for Nested ESXi workloads

- Resource Requirements
    - Compute
        - Ability to provision VMs with up to 8 vCPU
        - Ability to provision up to 108 GB of memory
        - DRS-enabled Cluster (not required, but vApp creation will not be possible without it)
    - Network
        - 1 x Standard or Distributed Portgroup (routable) to deploy all VMs (vSphere Management, NSX ALB + Supervisor Management)
            - 5 x IP Addresses for VCSA, ESXi and NSX ALB VM
            - 5 x Consecutive IP Addresses for Kubernetes Control Plane VMs
            - 8 x IP Addresses for NSX ALB Service Engine VMs
        - 1 x Standard or Distributed Portgroup (routable) for combined Load Balancer IPs + Workload Network (e.g. 172.17.32.128/26)
            - IP Range for NSX ALB VIP/Load Balancer addresses (e.g. 172.17.32.152-172.17.32.159)
            - IP Range for Workload Network (e.g. 172.17.32.160-172.17.32.179)
    - Storage
        - Ability to provision up to 1TB of storage

    Note: For detailed requirements, please refer to the official document here

- Desktop (Windows, Mac or Linux) with the latest PowerShell Core and PowerCLI 12.1 Core installed. See instructions here for more details

- vSphere 7 Update 2 & NSX ALB OVAs:

- Can I reduce the default CPU, Memory and Storage resources?
    - You can, see this blog post for more details. I have not personally tested reducing CPU and Memory resources for NSX ALB, YMMV.

- Can I just deploy vSphere (VCSA, ESXi) and vSAN without NSX ALB and vSphere with Tanzu?
    - Yes, simply search for the following variables and change their values to `0` to skip deploying the Tanzu components and running their configuration:

          $deployNSXAdvLB = 0
          $setupTanzuStoragePolicy = 0
          $setupTanzu = 0
          $setupNSXAdvLB = 0

- How do I enable Workload Management after the script has completed?
    - Please see the Enable Workload Management section for instructions

- How do I troubleshoot enabling or consuming vSphere with Tanzu?
    - Please refer to this troubleshooting tips for vSphere with Kubernetes blog post

- Is there a way to automate the enablement of Workload Management to a vSphere Cluster?
    - Yes, the Workload Management PowerCLI Module for automating vSphere with Tanzu can be used. Please see the Enable Workload Management section for instructions

- Can I deploy vSphere with Tanzu using NSX-T instead of NSX ALB?
    - Yes, you will need to use the previous version of the Automated vSphere with Kubernetes deployment script and substitute the vSphere 7.0 Update 2 images

Before you can run the script, you will need to edit it and update a number of variables to match your deployment environment. Each section is described below, including the actual values used in my home lab environment.

This section describes the credentials for the physical vCenter Server to which the vSphere with Tanzu lab environment will be deployed:

$VIServer = "FILL-ME-IN"

$VIUsername = "FILL-ME-IN"

$VIPassword = "FILL-ME-IN"

This section describes the TKG Content Library required for deploying TKG Clusters.

$TKGContentLibraryName = "TKG-Content-Library"

$TKGContentLibraryURL = "https://wp-content.vmware.com/v2/latest/lib.json"

This section describes the location of the files required for deployment.

$NestedESXiApplianceOVA = "C:\Users\william\Desktop\tanzu\Nested_ESXi7.0u2_Appliance_Template_v1.ova"

$VCSAInstallerPath = "C:\Users\william\Desktop\tanzu\VMware-VCSA-all-7.0.2-17694817"

$NSXAdvLBOVA = "C:\Users\william\Desktop\tanzu\controller-20.1.4-9087.ova"

Note: The path to the VCSA Installer must be the extracted contents of the ISO
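A wrong path is an easy mistake to make here, so a quick pre-flight check before launching the script can save a failed run. The following is a minimal sketch and not part of the deployment script: `Test-DeploymentFile` is a hypothetical helper name, and the checks assume only what is stated above (the two OVAs are files, and the VCSA installer path is a directory holding the extracted ISO contents).

```powershell
# Hypothetical helper (not part of the deployment script): returns $true when the
# path exists and is of the expected kind (a file by default, a directory with -Directory).
function Test-DeploymentFile {
    param(
        [Parameter(Mandatory = $true)][string]$Path,
        [switch]$Directory
    )
    $kind = if ($Directory) { 'Container' } else { 'Leaf' }
    return (Test-Path -LiteralPath $Path -PathType $kind)
}

# Example values from the section above; replace them with your own paths.
$NestedESXiApplianceOVA = "C:\Users\william\Desktop\tanzu\Nested_ESXi7.0u2_Appliance_Template_v1.ova"
$VCSAInstallerPath = "C:\Users\william\Desktop\tanzu\VMware-VCSA-all-7.0.2-17694817"
$NSXAdvLBOVA = "C:\Users\william\Desktop\tanzu\controller-20.1.4-9087.ova"

if (-not (Test-DeploymentFile -Path $NestedESXiApplianceOVA)) {
    Write-Warning "Nested ESXi OVA not found: $NestedESXiApplianceOVA"
}
if (-not (Test-DeploymentFile -Path $NSXAdvLBOVA)) {
    Write-Warning "NSX ALB OVA not found: $NSXAdvLBOVA"
}
# The VCSA installer must be a directory (the extracted ISO), not the .iso file itself.
if (-not (Test-DeploymentFile -Path $VCSAInstallerPath -Directory)) {
    Write-Warning "VCSA installer directory not found: $VCSAInstallerPath"
}
```

The sketch only warns rather than stopping, so all three paths are reported in one pass.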

This section describes the Tanzu Kubernetes Grid vSphere Content Library to subscribe to. Leave these values as they are and just ensure your environment has outbound connectivity to this endpoint.

$TKGContentLibraryName = "TKG-Content-Library"

$TKGContentLibraryURL = "https://wp-content.vmware.com/v2/latest/lib.json"

This section defines the number of Nested ESXi VMs to deploy along with their associated IP address(es). The names are merely the display names of the VMs when deployed. You should deploy at least three hosts, but you can always add more and the script will automatically take care of provisioning them correctly.

$NestedESXiHostnameToIPs = @{

"tanzu-esxi-1" = "172.17.33.4"

"tanzu-esxi-2" = "172.17.33.5"

"tanzu-esxi-3" = "172.17.33.6"

}

This section describes the resources allocated to each Nested ESXi VM. Depending on your usage, you may need to increase the resources. Memory and disk sizes are specified in GB.

$NestedESXivCPU = "4"

$NestedESXivMEM = "24" #GB

$NestedESXiCachingvDisk = "8" #GB

$NestedESXiCapacityvDisk = "200" #GB

This section describes the VCSA deployment configuration such as the VCSA deployment size, networking and SSO configuration. If you have ever used the VCSA CLI Installer, these options should look familiar.

$VCSADeploymentSize = "tiny"

$VCSADisplayName = "tanzu-vcsa-1"

$VCSAIPAddress = "172.17.33.3"

$VCSAHostname = "tanzu-vcsa-1.tshirts.inc" #Change to IP if you don't have valid DNS

$VCSAPrefix = "24"

$VCSASSODomainName = "vsphere.local"

$VCSASSOPassword = "VMware1!"

$VCSARootPassword = "VMware1!"

$VCSASSHEnable = "true"

This section describes the NSX ALB VM configuration

$NSXAdvLBDisplayName = "tanzu-nsx-alb"

$NSXAdvLByManagementIPAddress = "172.17.33.9"

$NSXAdvLBHostname = "tanzu-nsx-alb.tshirts.inc"

$NSXAdvLBAdminPassword = "VMware1!"

$NSXAdvLBvCPU = "8"

$NSXAdvLBvMEM = "24" #GB

$NSXAdvLBPassphrase = "VMware"

$NSXAdvLBIPAMName = "Tanzu-Default-IPAM"

This section describes the Service Engine Network Configuration

$NSXAdvLBManagementNetwork = "172.17.33.0"

$NSXAdvLBManagementNetworkPrefix = "24"

$NSXAdvLBManagementNetworkStartRange = "172.17.33.180"

$NSXAdvLBManagementNetworkEndRange = "172.17.33.187"

This section describes the combined VIP/Frontend and Workload Network Configuration

$NSXAdvLBCombinedVIPWorkloadNetwork = "Nested-Tanzu-Workload"

$NSXAdvLBWorkloadNetwork = "172.17.32.128"

$NSXAdvLBWorkloadNetworkPrefix = "26"

$NSXAdvLBWorkloadNetworkStartRange = "172.17.32.152"

$NSXAdvLBWorkloadNetworkEndRange = "172.17.32.159"

This section describes the self-signed TLS certificate to generate for NSX ALB

$NSXAdvLBSSLCertName = "nsx-alb"

$NSXAdvLBSSLCertExpiry = "365" # Days

$NSXAdvLBSSLCertEmail = "admini@primp-industries.local"

$NSXAdvLBSSLCertOrganizationUnit = "R&D"

$NSXAdvLBSSLCertOrganization = "primp-industries"

$NSXAdvLBSSLCertLocation = "Palo Alto"

$NSXAdvLBSSLCertState = "CA"

$NSXAdvLBSSLCertCountry = "US"

This section describes the location as well as the generic networking settings applied to the Nested ESXi, VCSA and NSX ALB VMs

$VMDatacenter = "San Jose"

$VMCluster = "Compute Cluster"

$VMNetwork = "Nested-Tanzu-Mgmt"

$VMDatastore = "comp-vsanDatastore"

$VMNetmask = "255.255.255.0"

$VMGateway = "172.17.33.1"

$VMDNS = "172.17.31.2"

$VMNTP = "45.87.78.35"

$VMPassword = "VMware1!"

$VMDomain = "tshirts.inc"

$VMSyslog = "172.17.33.3"

$VMFolder = "Tanzu"

# Applicable to Nested ESXi only

$VMSSH = "true"

$VMVMFS = "false"

This section describes the configuration of the new vCenter Server from the deployed VCSA. Default values are sufficient.

$NewVCDatacenterName = "Tanzu-Datacenter"

$NewVCVSANClusterName = "Workload-Cluster"

$NewVCVDSName = "Tanzu-VDS"

$NewVCMgmtPortgroupName = "DVPG-Supervisor-Management-Network"

$NewVCWorkloadPortgroupName = "DVPG-Workload-Network"

This section describes the Tanzu configuration. Default values are sufficient.

$StoragePolicyTagCategory = "tanzu-demo-tag-category"

$StoragePolicyTagName = "tanzu-demo-storage"

$DevOpsUsername = "devops"

$DevOpsPassword = "VMware1!"

Once you have saved your changes, you can run the PowerCLI script as you normally would.

Additional verbose logging is written to tanzu-nsx-adv-lb-lab-deployment.log in your current working directory.
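Before launching the script, it can be useful to compare the edited resource values against the memory requirement listed earlier. The arithmetic below is a rough sketch using the example values from the sections above. The 12 GB figure for a "tiny" VCSA is an assumption about vCenter Server 7.0 sizing and is not taken from this document; `$assumedVcsaTinyMemGB` is a hypothetical variable introduced only for this illustration.

```powershell
# Example values copied from the configuration sections above
$NestedESXiHostnameToIPs = @{
    "tanzu-esxi-1" = "172.17.33.4"
    "tanzu-esxi-2" = "172.17.33.5"
    "tanzu-esxi-3" = "172.17.33.6"
}
$NestedESXivMEM = "24" #GB
$NSXAdvLBvMEM = "24" #GB

# Assumption (not from this document): a "tiny" VCSA 7.0 is allocated 12 GB of memory
$assumedVcsaTinyMemGB = 12

# The values are stored as strings in the script, so cast them before doing arithmetic
$esxiMemGB = $NestedESXiHostnameToIPs.Count * [int]$NestedESXivMEM
$totalMemGB = $esxiMemGB + [int]$NSXAdvLBvMEM + $assumedVcsaTinyMemGB

Write-Output "Nested ESXi hosts: $esxiMemGB GB"
Write-Output "Total (ESXi + NSX ALB + VCSA): $totalMemGB GB"
```

With the example values this works out to 3 x 24 + 24 + 12 = 108 GB, which lines up with the memory requirement listed earlier. Adding a fourth host to `$NestedESXiHostnameToIPs` raises the total by another 24 GB.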

In the example below, I will be using two /24 VLANs (172.17.33.0/24 and 172.17.32.0/24). The first network is used to provision all VMs under a typical vSphere Management network configuration; 5 IPs from this range are allocated to the Supervisor Control Plane and 8 IPs to the NSX ALB Service Engines. The second network combines the IP range for the NSX ALB VIP/Frontend function with the IP range for Workloads. See the table below for the explicit network mappings; it is expected that you have a setup similar to what is outlined.

| Hostname | IP Address | Function |

|---|---|---|

| tanzu-vcsa-1.tshirts.inc | 172.17.33.3 | vCenter Server |

| tanzu-esxi-1.tshirts.inc | 172.17.33.4 | ESXi-1 |

| tanzu-esxi-2.tshirts.inc | 172.17.33.5 | ESXi-2 |

| tanzu-esxi-3.tshirts.inc | 172.17.33.6 | ESXi-3 |

| tanzu-nsx-alb.tshirts.inc | 172.17.33.9 | NSX ALB |

| N/A | 172.17.33.180-172.17.33.187 | Service Engine (8 IPs from Mgmt Network) |

| N/A | 172.17.33.190-172.17.33.194 | Supervisor Control Plane (5 IPs from Mgmt Network) |

| N/A | 172.17.32.152-172.17.32.159 | NSX ALB VIP/Load Balancer IP Range |

| N/A | 172.17.32.160-172.17.32.179 | Workload IP Range |
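Ranges like these are easy to mistype, so it may help to confirm that each one sits inside its network before deploying. The sketch below is an illustration only and is not part of the deployment script: `ConvertTo-IPv4Number`, `Test-IPv4RangeInSubnet` and `Get-IPv4RangeCount` are hypothetical helper names, and the check assumes the network value is the first address of the subnet (as in the example variables `$NSXAdvLBManagementNetwork` and `$NSXAdvLBWorkloadNetwork`).

```powershell
# Hypothetical helpers for illustration; none of these exist in the deployment script.

# Convert a dotted IPv4 address to a number so that addresses can be compared.
function ConvertTo-IPv4Number {
    param([Parameter(Mandatory = $true)][string]$Address)
    [long]$number = 0
    # GetAddressBytes() returns the octets in network order (most significant first)
    foreach ($octet in [System.Net.IPAddress]::Parse($Address).GetAddressBytes()) {
        $number = ($number * 256) + $octet
    }
    return $number
}

# Number of addresses in an inclusive start-end range.
function Get-IPv4RangeCount {
    param([string]$RangeStart, [string]$RangeEnd)
    return (ConvertTo-IPv4Number -Address $RangeEnd) - (ConvertTo-IPv4Number -Address $RangeStart) + 1
}

# True when the whole range lies inside the subnet.
# Assumes $Network is the subnet's first (network) address.
function Test-IPv4RangeInSubnet {
    param([string]$Network, [int]$Prefix, [string]$RangeStart, [string]$RangeEnd)
    $first = ConvertTo-IPv4Number -Address $Network
    $last = $first + [long][math]::Pow(2, 32 - $Prefix) - 1
    $startNumber = ConvertTo-IPv4Number -Address $RangeStart
    $endNumber = ConvertTo-IPv4Number -Address $RangeEnd
    return ($startNumber -ge $first) -and ($endNumber -le $last) -and ($startNumber -le $endNumber)
}

# Example values taken from the configuration sections and the table above
$checks = @(
    @{ Name = "Service Engine"; Network = "172.17.33.0";   Prefix = 24; Start = "172.17.33.180"; End = "172.17.33.187" }
    @{ Name = "NSX ALB VIP";    Network = "172.17.32.128"; Prefix = 26; Start = "172.17.32.152"; End = "172.17.32.159" }
    @{ Name = "Workload";       Network = "172.17.32.128"; Prefix = 26; Start = "172.17.32.160"; End = "172.17.32.179" }
)

$results = foreach ($check in $checks) {
    [pscustomobject]@{
        Name     = $check.Name
        InSubnet = Test-IPv4RangeInSubnet -Network $check.Network -Prefix $check.Prefix -RangeStart $check.Start -RangeEnd $check.End
        Count    = Get-IPv4RangeCount -RangeStart $check.Start -RangeEnd $check.End
    }
}
$results
```

With the example values, all three ranges fall inside their networks and contain 8, 8 and 20 addresses respectively.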

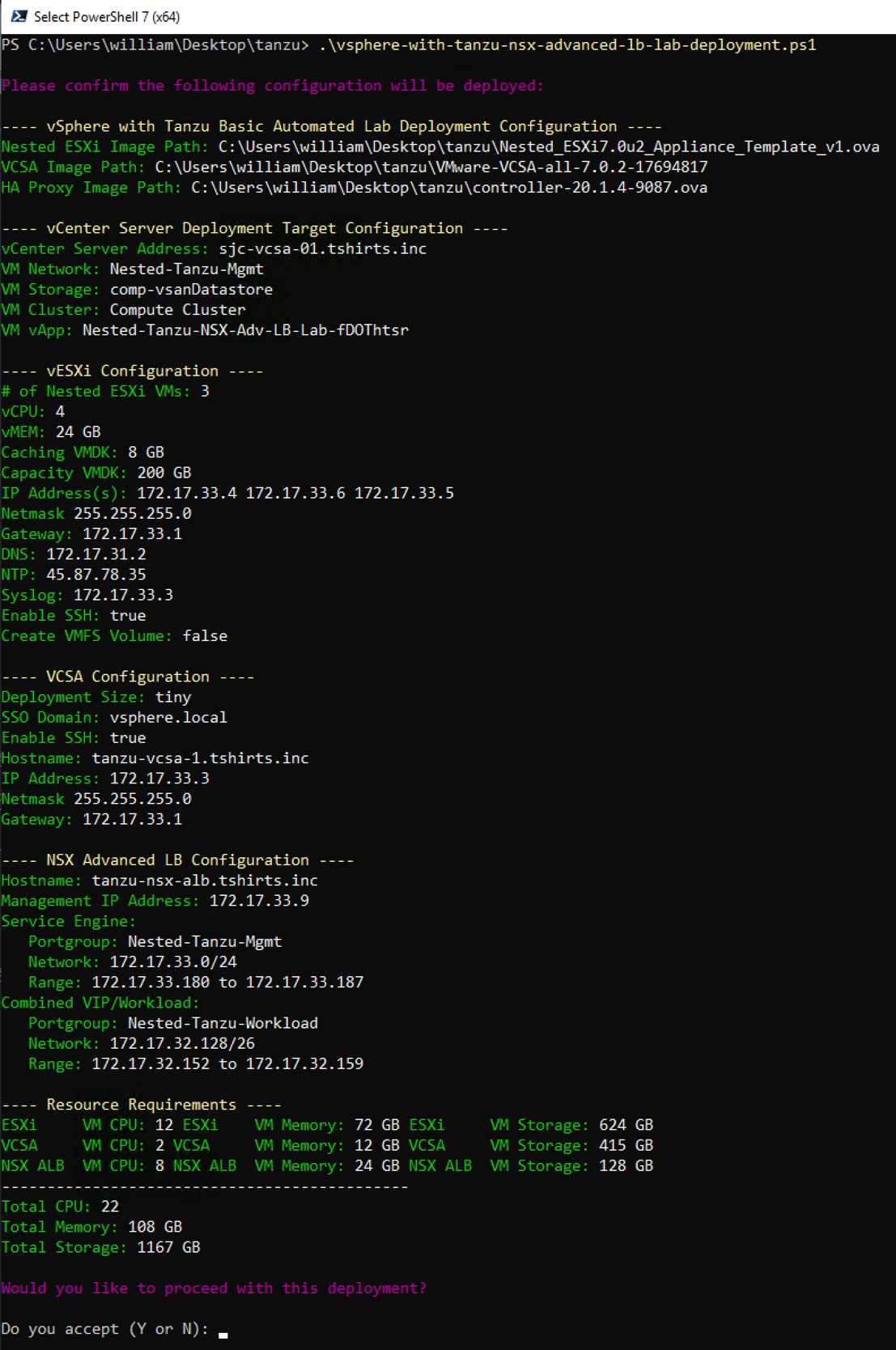

Here is a screenshot of running the script if all basic pre-reqs have been met and the confirmation message before starting the deployment:

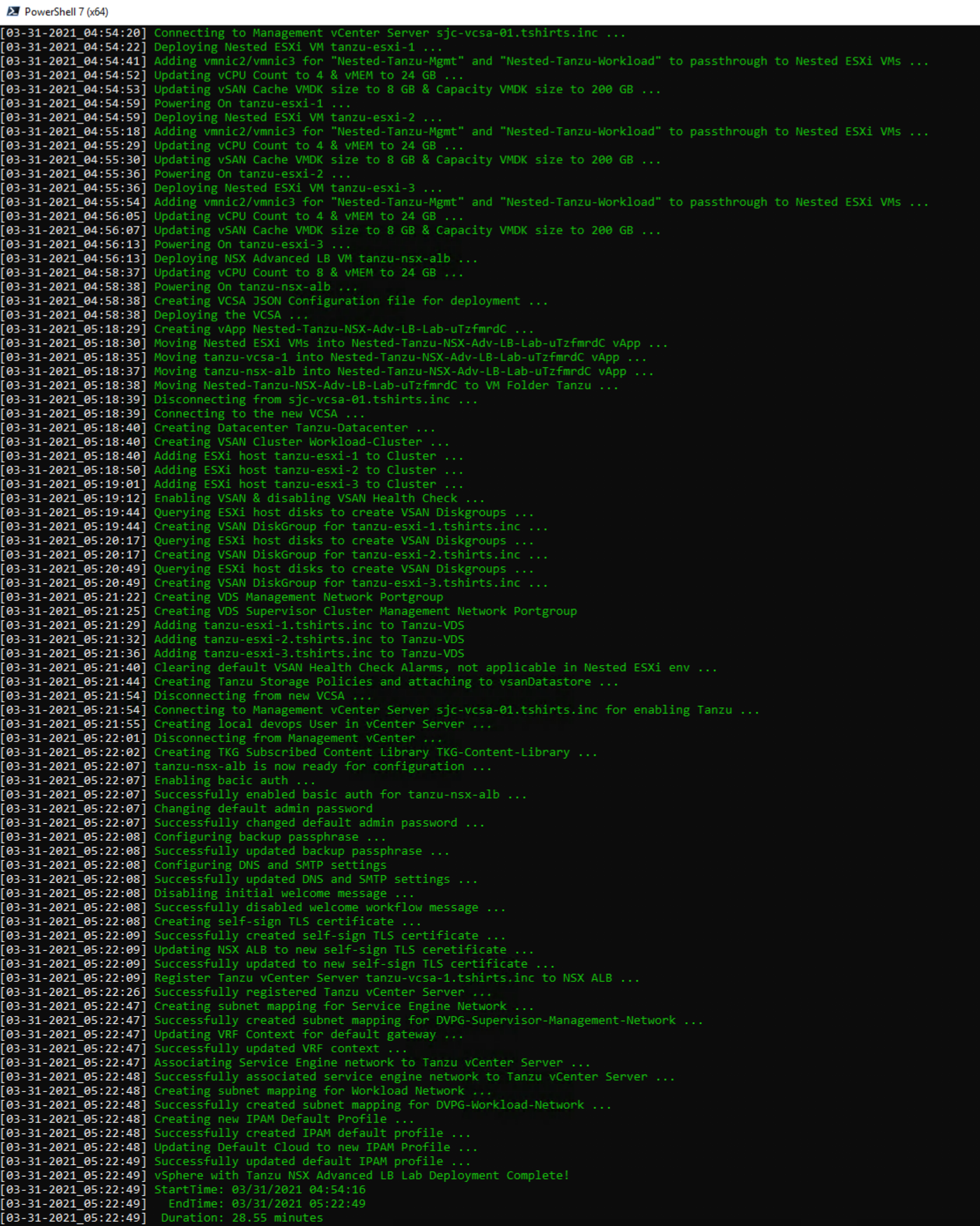

Here is an example output of a complete deployment:

Note: Deployment time will vary based on underlying physical infrastructure resources. In my lab, this took ~29min to complete.

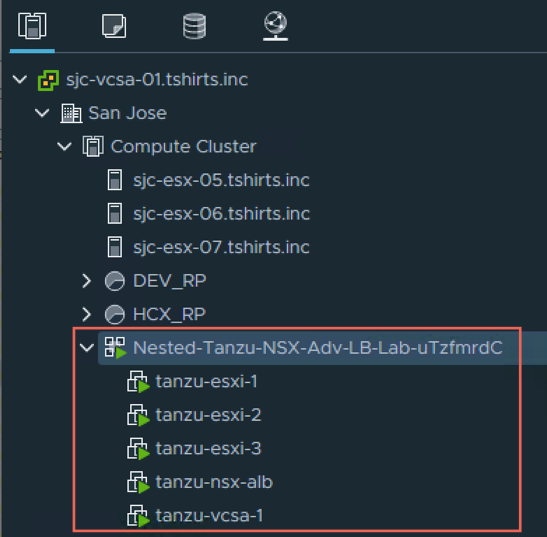

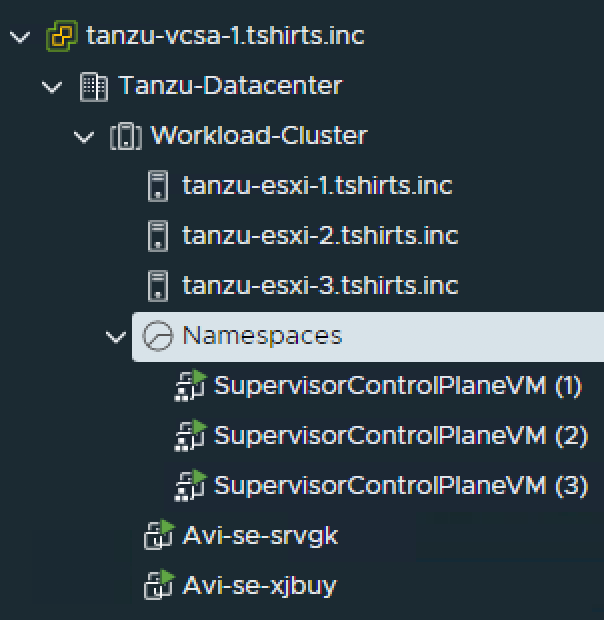

Once completed, you will end up with your deployed vSphere with Tanzu lab, which is placed into a vApp

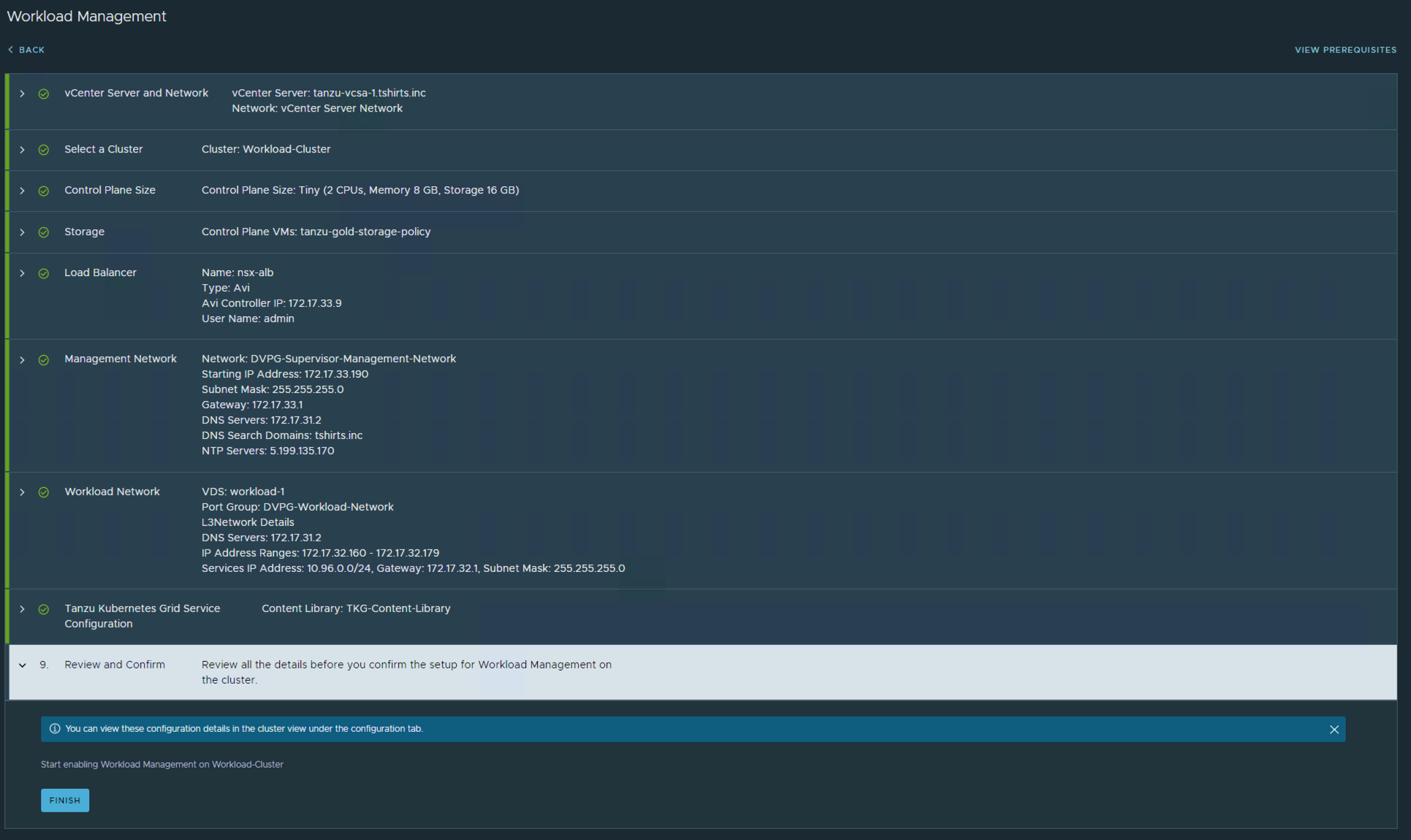

To consume the vSphere with Tanzu capability in vSphere 7.0 Update 2, you must enable workload management on a specific vSphere Cluster, which is not part of this automation script.

You have two options:

- You can enable workload management by using the vSphere UI. For more details, please refer to the official VMware documentation here.

Here is an example output of what the deployment configuration should look like for my network setup:

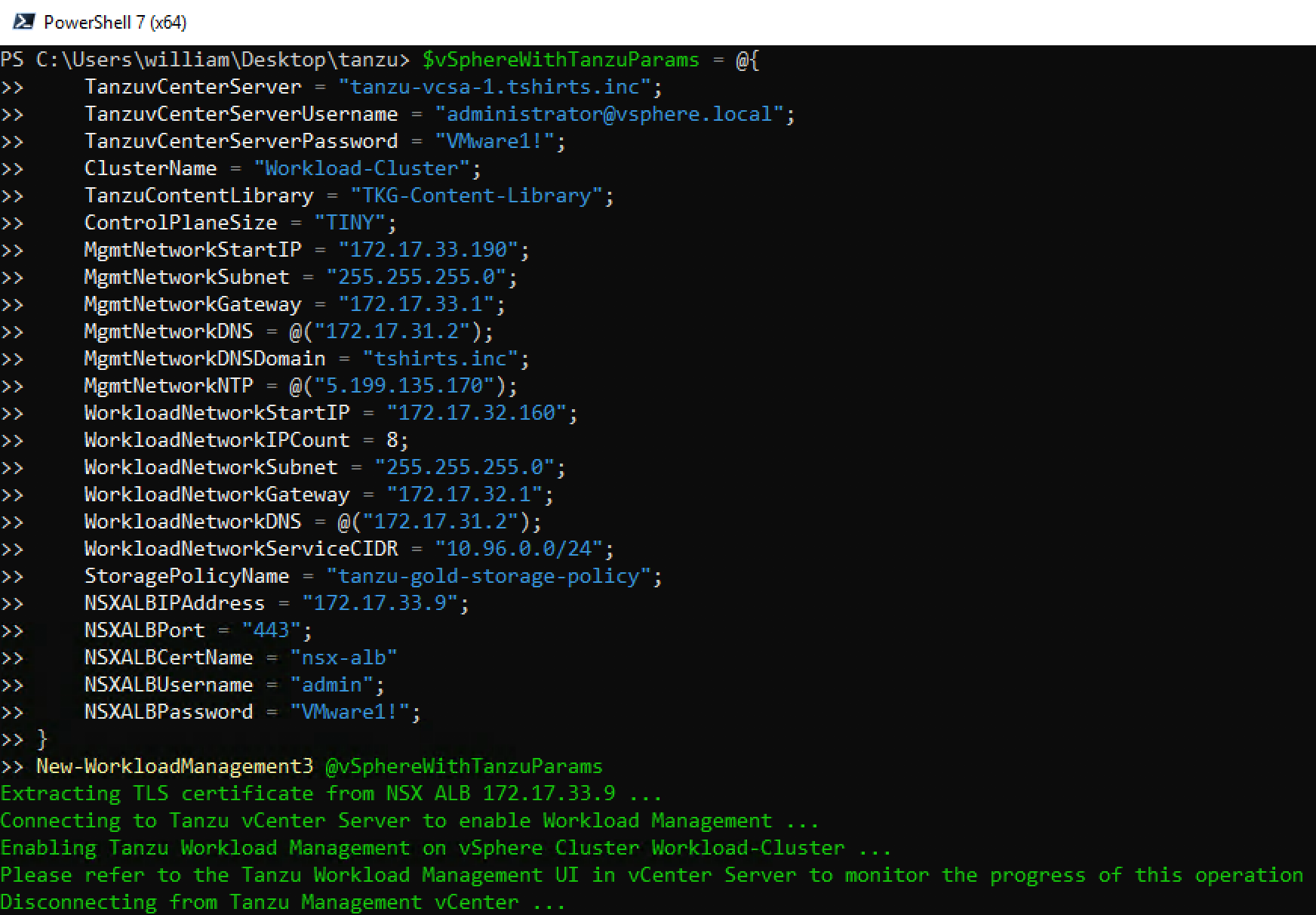

- You can use my community PowerCLI module (VMware.WorkloadManagement) to automate the enablement of workload management:

Here is an example using the New-WorkloadManagement3 function and the same configuration as shown in the UI above to enable workload management:

Import-Module VMware.WorkloadManagement

$vSphereWithTanzuParams = @{

TanzuvCenterServer = "tanzu-vcsa-1.tshirts.inc";

TanzuvCenterServerUsername = "administrator@vsphere.local";

TanzuvCenterServerPassword = "VMware1!";

ClusterName = "Workload-Cluster";

TanzuContentLibrary = "TKG-Content-Library";

ControlPlaneSize = "TINY";

MgmtNetworkStartIP = "172.17.33.190";

MgmtNetworkSubnet = "255.255.255.0";

MgmtNetworkGateway = "172.17.33.1";

MgmtNetworkDNS = @("172.17.31.2");

MgmtNetworkDNSDomain = "tshirts.inc";

MgmtNetworkNTP = @("5.199.135.170");

WorkloadNetworkStartIP = "172.17.32.160";

WorkloadNetworkIPCount = 8;

WorkloadNetworkSubnet = "255.255.255.0";

WorkloadNetworkGateway = "172.17.32.1";

WorkloadNetworkDNS = @("172.17.31.2");

WorkloadNetworkServiceCIDR = "10.96.0.0/24";

StoragePolicyName = "tanzu-gold-storage-policy";

NSXALBIPAddress = "172.17.33.9";

NSXALBPort = "443";

NSXALBCertName = "nsx-alb";

NSXALBUsername = "admin";

NSXALBPassword = "VMware1!";

}

New-WorkloadManagement3 @vSphereWithTanzuParams

Here is an example output of running the above snippet:

Upon a successful enablement of workload management, you should have two NSX ALB Service Engine VMs deployed: