Formats chat completion messages for use with llama-cpp-python. The code is essentially the same as here (Meta original code).

NOTE: The output is still not identical to the result of the Meta code. More about that here.

Update: I added an option to use the original Meta tokenizer encoder in order to get the correct result. See the example.py file and its USE_META_TOKENIZER_ENCODER flag.
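As a rough illustration of what a chat completion message format looks like, here is a minimal sketch. It assumes the Meta code referred to above is the Llama 2 chat reference implementation, and it builds the prompt as a plain string instead of token ids. The function name `format_llama2_chat` and the literal `<s>` / `</s>` markers are illustrative only and are not taken from this repository.

```python
from typing import Dict, List

# Markers used by the Llama 2 chat prompt format (assumed here).
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"
# Text stand-ins for the BOS/EOS tokens; a real tokenizer adds these as ids.
BOS, EOS = "<s>", "</s>"


def format_llama2_chat(messages: List[Dict[str, str]]) -> str:
    """Hypothetical helper: turn chat messages into one prompt string."""
    if not messages:
        raise ValueError("messages must not be empty")

    # An optional system message is folded into the first user message.
    if messages[0]["role"] == "system":
        if len(messages) < 2:
            raise ValueError("a system message must be followed by a user message")
        merged = B_SYS + messages[0]["content"] + E_SYS + messages[1]["content"]
        messages = [{"role": messages[1]["role"], "content": merged}] + messages[2:]

    # Roles must alternate user/assistant and end with a user message.
    users, assistants = messages[0::2], messages[1::2]
    if (
        len(messages) % 2 == 0
        or any(m["role"] != "user" for m in users)
        or any(m["role"] != "assistant" for m in assistants)
    ):
        raise ValueError("expected alternating user/assistant messages ending with user")

    # Each completed turn is wrapped in BOS ... EOS.
    parts = [
        f"{BOS}{B_INST} {u['content'].strip()} {E_INST} {a['content'].strip()} {EOS}"
        for u, a in zip(users, assistants)
    ]
    # The final user message is left open for the model to answer.
    parts.append(f"{BOS}{B_INST} {messages[-1]['content'].strip()} {E_INST}")
    return "".join(parts)


if __name__ == "__main__":
    print(format_llama2_chat([
        {"role": "system", "content": "Be brief."},
        {"role": "user", "content": "Hi"},
    ]))
```

Because this sketch works on strings, tokenizing its output in one pass is not guaranteed to give the same token ids as encoding each turn separately.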

Developed using Python 3.10 on Windows.

pip install -r requirements.txt

Check the example.py file.

First, install streamlit:

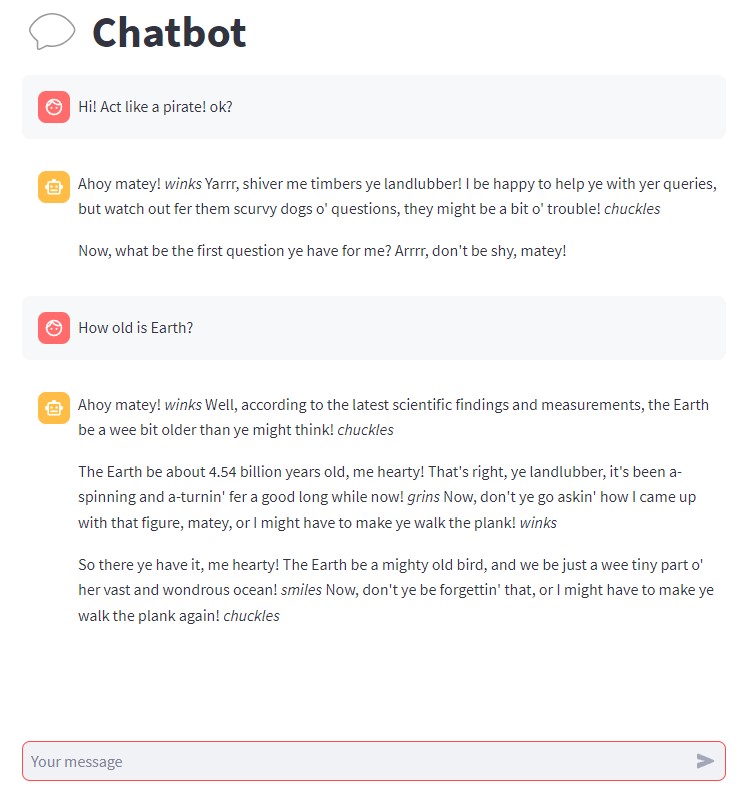

pip install streamlit

Then, run the file streamlit_app.py with:

streamlit run streamlit_app.py