Text classification, image recognition, and speech processing all produce large volumes of high-dimensional data, and reducing its dimensionality while preserving meaningful structure is a hard problem during machine learning stages such as feature engineering. An autoencoder is an artificial neural network that compresses its input into a latent representation capturing the essential patterns. This lets a model identify abstract features in the raw data and reduces overfitting, because learning robust features helps the model generalize and can improve its accuracy. It also avoids processing large sparse vectors directly, making downstream tasks faster and more computationally efficient. Beyond dimensionality, text classification involves several other key challenges:

- Limited Data Availability: Many real-world text classification datasets are small or have imbalanced classes, which leads models to overfit and perform poorly on underrepresented classes.

- No Data Augmentation: Practitioners often have to rely on simple rule-based augmentation techniques that do not capture the true variability of the data.

- Poor Feature Representation: Manual feature engineering techniques and simple embeddings may fail to capture the deeper semantic meaning of the text.

- Reduced Robustness to Noise: Most real-world text is noisy, containing misspellings, informal language, and incomplete sentences that models handle poorly.

A generative model can help overcome these challenges. It learns the underlying patterns or distribution of the data, typically in an unsupervised or semi-supervised way, and uses that knowledge to generate synthetic data similar to its input. Generative models are used in many applications, including text and image synthesis, speech generation, data augmentation, and anomaly detection. For classification, such a model can create synthetic samples to balance imbalanced datasets, improve accuracy by learning abstract latent features, denoise noisy inputs through reconstruction, and handle missing or unlabeled data. When labeled data is scarce, it can still learn from unlabeled data by modeling its distribution. Pre-trained generative models can also be used for transfer learning, which is more computationally efficient and less expensive than building a model from scratch.

Using an autoencoder as a generative model helps overcome many of the challenges described above, and its combination of features can improve efficiency in many text-based and image-based classification tasks.

This project builds an autoencoder on the MNIST dataset using the PyTorch framework, then uses it as a generative model to generate new images of digits.

The MNIST dataset, which contains images of the handwritten digits 0-9, is provided by torchvision.datasets and loaded in batches using the DataLoader class from the torch.utils.data module.
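The loading step might look like the sketch below. The helper names `load_mnist` and `make_loader`, the `./data` directory, and the use of `ToTensor` as the only transform are assumptions made for illustration, not the project's actual code.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset


def load_mnist(root="./data", train=True):
    """Return MNIST as a dataset of (image, label) pairs, downloading it if needed.

    Hypothetical helper: the root directory and transform are assumptions.
    """
    # Imported here so the rest of the module only needs torch.
    from torchvision import datasets, transforms

    return datasets.MNIST(
        root=root,
        train=train,
        download=True,
        transform=transforms.ToTensor(),  # PIL image -> float tensor in [0, 1]
    )


def make_loader(dataset, batch_size=32, shuffle=True):
    """Wrap any dataset in a DataLoader that yields mini-batches."""
    return DataLoader(dataset, batch_size=batch_size, shuffle=shuffle)
```

Calling `make_loader(load_mnist(train=True))` would then yield image batches of shape `[32, 1, 28, 28]` together with their labels; passing `train=False` gives a held-out split that can serve for validation.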

Autoencoders' architecture consists of two main components:

- Encoder – Compresses the input into a lower-dimensional latent space (e.g., reducing a 256×256 image to a 3×3 representation).

- Decoder – Reconstructs the original input from the latent representation, preserving key features.

As shown in the encoder-decoder model, two separate Python classes are defined for the encoder and decoder architectures. These are then combined in a model class to form the complete autoencoder. This setup allows the output of each stage to be inspected: the encoder's compressed representation can be examined on its own, and the decoder's reconstructed output can be compared against the original input image from the dataset to evaluate how closely it matches.
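The three-class layout described above can be sketched as follows. The text does not give the layer types or sizes, so the fully connected layers, the hidden width of 128, and the latent size of 32 are all assumptions chosen for illustration.

```python
import math

import torch
from torch import nn


class Encoder(nn.Module):
    """Compress an image into a latent vector (layer sizes are assumed)."""

    def __init__(self, input_dim=28 * 28, hidden_dim=128, latent_dim=32):
        super().__init__()
        self.net = nn.Sequential(
            nn.Flatten(),                      # (N, 1, 28, 28) -> (N, 784)
            nn.Linear(input_dim, hidden_dim),
            nn.ReLU(),
            nn.Linear(hidden_dim, latent_dim),
        )

    def forward(self, x):
        return self.net(x)


class Decoder(nn.Module):
    """Reconstruct an image from a latent vector (layer sizes are assumed)."""

    def __init__(self, latent_dim=32, hidden_dim=128, output_shape=(1, 28, 28)):
        super().__init__()
        self.output_shape = output_shape
        self.net = nn.Sequential(
            nn.Linear(latent_dim, hidden_dim),
            nn.ReLU(),
            nn.Linear(hidden_dim, math.prod(output_shape)),
            nn.Sigmoid(),                      # keep pixel values in [0, 1]
        )

    def forward(self, z):
        return self.net(z).view(-1, *self.output_shape)


class Autoencoder(nn.Module):
    """Combine the encoder and decoder; both stay accessible for inspection."""

    def __init__(self, latent_dim=32):
        super().__init__()
        self.encoder = Encoder(latent_dim=latent_dim)
        self.decoder = Decoder(latent_dim=latent_dim)

    def forward(self, x):
        return self.decoder(self.encoder(x))
```

Because the encoder and decoder are separate attributes, `model.encoder(x)` returns the compressed representation on its own, while `model(x)` returns the full reconstruction.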

The model was trained for 10 epochs with a batch size of 32, using the Adam optimizer with a learning rate of 0.001. Both training and validation losses were calculated during training to evaluate the model's performance. Both losses settled in the range 0.03-0.05, which suggests the model reconstructs its inputs reasonably well.
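A minimal sketch of such a training loop is shown below. The epoch count, learning rate, and optimizer come from the text; the mean squared error reconstruction loss and the function names `run_epoch` and `fit` are assumptions, since the loss function is not stated.

```python
import torch
from torch import nn


def run_epoch(model, loader, criterion, optimizer=None):
    """Return the mean per-sample loss over one pass through `loader`.

    Weights are updated only when an optimizer is supplied.
    """
    training = optimizer is not None
    model.train(training)
    total, count = 0.0, 0
    with torch.set_grad_enabled(training):
        for images, _ in loader:               # labels are not needed
            recon = model(images)
            loss = criterion(recon, images)    # compare output to its own input
            if training:
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()
            total += loss.item() * images.size(0)
            count += images.size(0)
    return total / count


def fit(model, train_loader, val_loader, epochs=10, lr=1e-3):
    """Train with Adam and record (train_loss, val_loss) for every epoch."""
    criterion = nn.MSELoss()                   # assumed reconstruction loss
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    history = []
    for epoch in range(epochs):
        train_loss = run_epoch(model, train_loader, criterion, optimizer)
        val_loss = run_epoch(model, val_loader, criterion)
        history.append((train_loss, val_loss))
        print(f"epoch {epoch + 1}: train={train_loss:.4f} val={val_loss:.4f}")
    return history
```

Tracking the validation loss next to the training loss makes it easy to spot overfitting: if the training loss keeps falling while the validation loss rises, the model is memorizing rather than generalizing.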

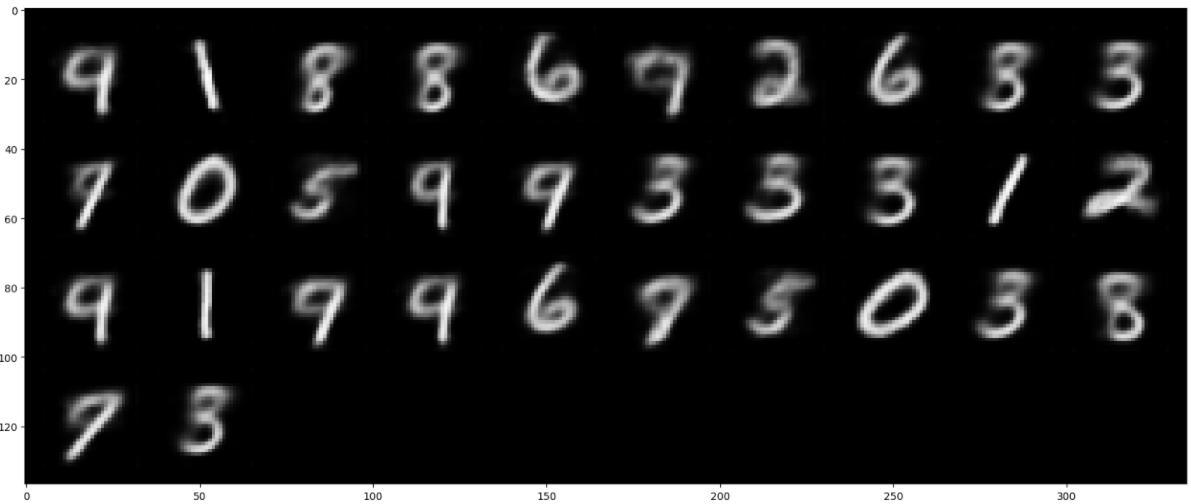

Image 1 : The output of the Autoencoder model


Image 2 : The output of the decoder

The autoencoder successfully generated an image grid that reconstructs digitized images from the MNIST dataset. These reconstructions closely resemble the original inputs, demonstrating the model’s ability to learn and reproduce key features. The decoder component, in particular, functions as a generative model by transforming compressed, lower-dimensional representations back into full images. This highlights the decoder’s capacity to generate synthetic data with similar characteristics to the original inputs. Such generative capabilities are valuable for tasks like data augmentation, noise reduction, and addressing challenges related to unlabeled or imbalanced datasets.
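One way to use a decoder generatively is sketched below. It is an assumption, not necessarily the approach taken in this project: a plain autoencoder does not constrain its latent space, so the sketch fits a simple per-dimension Gaussian to the latent codes of real images and samples from that. The function name `sample_from_decoder` is hypothetical.

```python
import torch
from torch import nn


@torch.no_grad()
def sample_from_decoder(decoder, latents, n_samples=16, generator=None):
    """Decode random latent vectors drawn near the codes of real images.

    `latents` is an (N, latent_dim) tensor of encoder outputs. A per-dimension
    Gaussian is fitted to it, which is a simplifying assumption.
    """
    mean = latents.mean(dim=0)
    std = latents.std(dim=0)
    noise = torch.randn(n_samples, latents.size(1), generator=generator)
    z = mean + std * noise                     # new points in latent space
    decoder.eval()
    return decoder(z)                          # one image per latent vector
```

The returned batch could be arranged into an image grid with `torchvision.utils.make_grid`. Samples drawn far from the codes of real digits may not decode to recognizable digits, which is a known limitation of sampling from an unregularized latent space.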