This is the official implementation of VideoTree.

Authors: Ziyang Wang*, Shoubin Yu*, Elias Stengel-Eskin*, Jaehong Yoon, Feng Cheng, Gedas Bertasius, Mohit Bansal

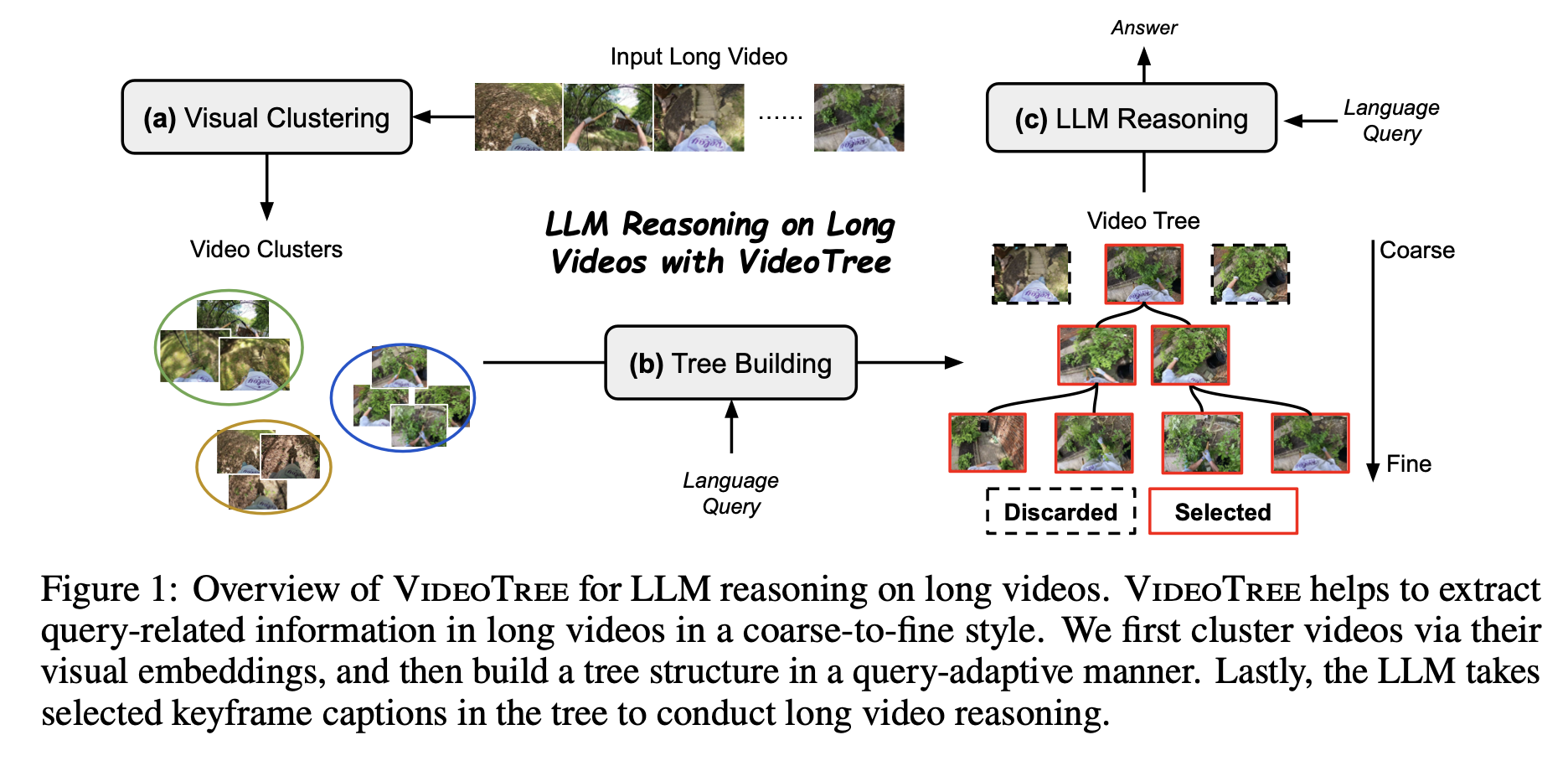

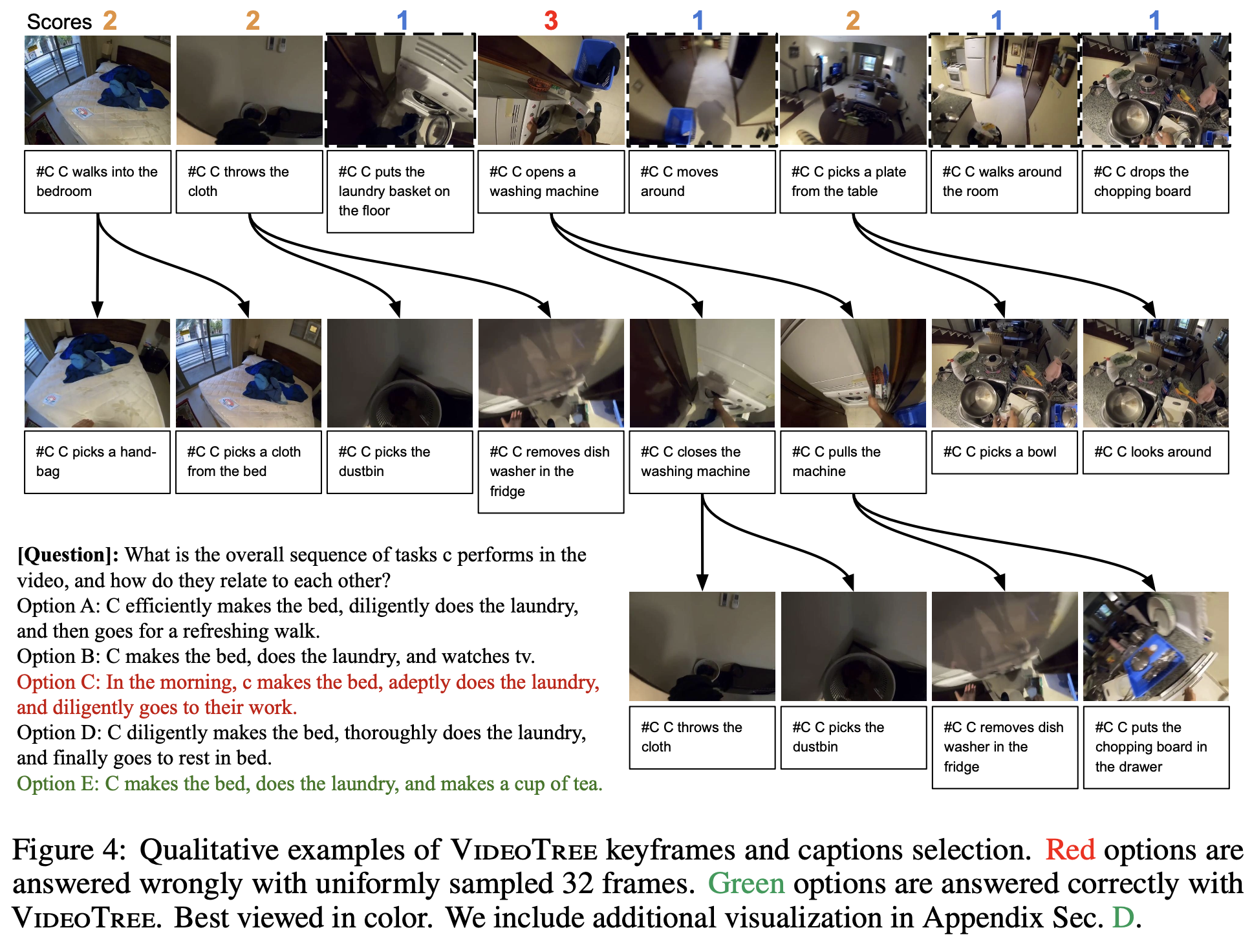

We introduce VideoTree, a query-adaptive and hierarchical framework for long-video understanding with LLMs. Specifically, VideoTree dynamically extracts query-related information from the input video and builds a tree-based video representation for LLM reasoning.
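To make the idea of a tree-based video representation more concrete, here is a minimal, purely illustrative sketch. It is not the implementation in this repository. It assumes, for simplicity, that each node covers a list of frame indices and carries a relevance score (in a real system such a score would come from a model judging the content against the query). `TreeNode`, `expand`, `leaves`, and the threshold value are names and choices invented for this example.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TreeNode:
    """One node of a toy video tree (hypothetical structure, for illustration only)."""
    frames: List[int]                 # frame indices covered by this node
    relevance: int = 1                # assumed query-relevance score (higher = more relevant)
    children: List["TreeNode"] = field(default_factory=list)


def expand(node: TreeNode, branching: int = 2, threshold: int = 2) -> None:
    """Split a node into up to `branching` children, but only if it is relevant enough."""
    if node.relevance < threshold or len(node.frames) < branching:
        return  # not relevant enough, or too small: keep it as a leaf
    size = -(-len(node.frames) // branching)  # ceiling division
    for start in range(0, len(node.frames), size):
        node.children.append(TreeNode(frames=node.frames[start:start + size]))


def leaves(node: TreeNode) -> List[TreeNode]:
    """Collect leaf nodes from left to right."""
    if not node.children:
        return [node]
    return [leaf for child in node.children for leaf in leaves(child)]


# Toy root with two first-level groups; only the second is marked as relevant.
root = TreeNode(frames=list(range(8)), children=[
    TreeNode(frames=[0, 1, 2, 3], relevance=1),
    TreeNode(frames=[4, 5, 6, 7], relevance=3),
])
for child in root.children:
    expand(child)

print([leaf.frames for leaf in leaves(root)])
# → [[0, 1, 2, 3], [4, 5], [6, 7]]
```

The relevant half of the video ends up represented at a finer granularity than the irrelevant half, which is the intuition behind a query-adaptive hierarchy.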

Install environment.

Python 3.8 or above is required.
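A quick way to confirm that the interpreter meets this requirement before installing anything is sketched below. The helper name `python_is_supported` is made up for this example and is not part of the repository.

```python
import sys

MIN_PYTHON = (3, 8)  # minimum version stated in this README


def python_is_supported(version=None) -> bool:
    """Return True if the given (or currently running) interpreter meets the minimum."""
    if version is None:
        version = sys.version_info
    return tuple(version[:2]) >= MIN_PYTHON


if python_is_supported():
    print("Python version OK")
else:
    found = sys.version.split()[0]
    print(f"Python {MIN_PYTHON[0]}.{MIN_PYTHON[1]}+ is required, found {found}")
```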

git clone https://github.com/Ziyang412/VideoTree.git

cd VideoTree

python3 -m venv videetree_env

source videetree_env/bin/activate

pip install openai

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

pip install pandas

pip install transformers

pip install accelerate

Download dataset annotations and extracted captions.

Download data.zip, which is provided by LLoVi.

unzip data.zip

The extracted captions for EgoSchema can then be found in ./data, which also contains the dataset annotations.

Specifically, the EgoSchema captions were extracted with the LaViLa base model at 1 FPS.
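Sampling at 1 FPS means one caption per second of video. As a rough sketch of which frames that corresponds to, consider the helper below. The function name and the choice of taking the frame nearest to each whole second are assumptions for illustration; the pipeline that produced the released captions may select frames differently.

```python
from typing import List


def one_fps_frame_indices(num_frames: int, native_fps: float) -> List[int]:
    """Return the index of one frame per second of video.

    Takes the frame nearest to each whole second (an assumption; other
    pipelines may, for example, take the middle frame of each second).
    """
    if num_frames <= 0 or native_fps <= 0:
        return []
    indices = []
    second = 0
    while True:
        idx = int(round(second * native_fps))
        if idx >= num_frames:
            break
        indices.append(idx)
        second += 1
    return indices


# A 180-second clip stored at 30 FPS yields 180 sampled frames.
indices = one_fps_frame_indices(num_frames=180 * 30, native_fps=30.0)
print(len(indices), indices[:3])
# → 180 [0, 30, 60]
```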

Due to time constraints, the codebase is still under construction. We plan to improve the code structure and incorporate the full framework with adaptive width expansion in the future. We will also add the scripts and captions for NExT-QA and IntentQA.

VideoTree Variant (static tree width + depth expansion + LLM reasoning) on EgoSchema

Please update the feature and output path before running the code.

python tree_expansion/width_expansion.py

Please set the path to the output of the previous step in main_rel.py before running the code.

sh scripts/rel_egoschema.sh

python output_prep/convert_answer_to_pred_relevance.py

Please update the feature and output path before running the code.

python tree_expansion/depth_expansion.py

sh scripts/egoschema.sh

python output_prep/convert_answer_to_pred.py

--save_info: save more information, e.g. token usage, detailed prompts, etc.

--num_examples_to_run: how many examples to run. -1 (default) to run all.

--start_from_scratch: ignore existing output files. Start from scratch.

We thank the developers of LLoVi, LifelongMemory, EVA-CLIP for their public code release. We also thank the authors of VideoAgent for the helpful discussion.
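As an illustration of how the three flags described above (`--save_info`, `--num_examples_to_run`, `--start_from_scratch`) might behave, here is a minimal argparse sketch. It is a guess at their shape based only on the descriptions in this README, not a copy of the repository's parser; `build_parser` and `select_examples` are hypothetical helpers, and treating the two switches as boolean flags is an assumption.

```python
import argparse
from typing import List, Sequence


def build_parser() -> argparse.ArgumentParser:
    """Toy parser mirroring the flags described in the README (assumed shapes)."""
    parser = argparse.ArgumentParser(description="Toy parser for the README flags")
    parser.add_argument("--save_info", action="store_true",
                        help="save extra information such as token usage and prompts")
    parser.add_argument("--num_examples_to_run", type=int, default=-1,
                        help="number of examples to run; -1 runs all")
    parser.add_argument("--start_from_scratch", action="store_true",
                        help="ignore existing output files")
    return parser


def select_examples(examples: Sequence[str], num_examples_to_run: int) -> List[str]:
    """Apply the convention that -1 means 'run everything'."""
    if num_examples_to_run < 0:
        return list(examples)
    return list(examples[:num_examples_to_run])


args = build_parser().parse_args(["--num_examples_to_run", "2", "--save_info"])
print(select_examples(["q1", "q2", "q3"], args.num_examples_to_run))
# → ['q1', 'q2']
```

Running a small subset first (for example two examples with `--save_info`) is a cheap way to inspect prompts and token usage before launching a full run.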

Please cite our paper if you use our models in your work:

@article{wang2024videotree,

title={VideoTree: Adaptive Tree-based Video Representation for LLM Reasoning on Long Videos},

author={Wang, Ziyang and Yu, Shoubin and Stengel-Eskin, Elias and Yoon, Jaehong and Cheng, Feng and Bertasius, Gedas and Bansal, Mohit},

journal={arXiv preprint arXiv:2405.19209},

year={2024}

}