This is the open-source code for our metasurface machine learning series:

- Metasurface Machine Learning Performance Benchmark

- SUTDPRT Dataset and Network Architecture Search

- Symmetry Inspired Metasurface Machine Learning

pip install tensorboardX

pip install scipy

pip install matplotlib

pip install optuna

pip install PyWavelets

pip install pandas

pip install tensorboard

git clone https://github.com/veya2ztn/mltool.git

git clone https://github.com/veya2ztn/SUTD_PRCM_dataset.git

echo "{\"DATAROOT\": \"[the path for your data]\",\"SAVEROOT\": \"[the path for your checkpoint]\"}" > .DATARoot.json
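If shell quoting is awkward on your platform, the same file can be written from Python. This is a minimal sketch: the key names `DATAROOT` and `SAVEROOT` and the file name `.DATARoot.json` come from the command above, while the helper name `write_data_root` is hypothetical and not part of this repository.

```python
import json
from pathlib import Path


def write_data_root(data_root, save_root, target=".DATARoot.json"):
    """Write the data and checkpoint roots to a JSON file and return the config.

    `write_data_root` is an illustrative helper, not a function from this repo.
    """
    config = {"DATAROOT": str(data_root), "SAVEROOT": str(save_root)}
    # json.dumps handles the quoting that the shell one-liner escapes by hand.
    Path(target).write_text(json.dumps(config, indent=2))
    return config
```

Calling `write_data_root("[the path for your data]", "[the path for your checkpoint]")` from the repository root should produce a file equivalent to the one written by the `echo` command.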

- Run `python makeproject.py` to generate task files under `projects/undo`.
- Run `python multi_task_train.py` to start training.

Use `-m` or `--mode` to assign the training mode:

- The `default` mode trains the tasks in `projects/undo` one by one.
- The `test` mode runs only 1 epoch and exits, and reports the maximum batch size for the current GPU (this may fail after PyTorch 1.10).
- The `parallel` flag activates data parallelism. Note that the official `torch.optim.Adam` optimizer is not compatible; `torch.optim.SGD` is recommended instead.
- The `multiTask` flag should be used with `--gpu NUM`. It automatically moves the task files to `project/muliti_set{gpu}` and runs each task on its own GPU.
- The `assign` flag should be used with `--path`, which makes the script run the assigned task.
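The constraints between the options above (`multiTask` needs `--gpu`, `assign` needs `--path`) can be sketched with `argparse`. This is only an illustration and not the parser used by `multi_task_train.py`: it assumes that all five names are values of `-m`/`--mode`, which the description above does not confirm, and the function name `parse_args` is hypothetical.

```python
import argparse

# Assumption: all five names are accepted as values of -m/--mode.
MODES = ("default", "test", "parallel", "multiTask", "assign")


def parse_args(argv=None):
    """Parse a command line shaped like the options described above."""
    parser = argparse.ArgumentParser(description="Illustrative mode parser")
    parser.add_argument("-m", "--mode", choices=MODES, default="default")
    parser.add_argument("--gpu", type=int, default=None)   # used by multiTask
    parser.add_argument("--path", type=str, default=None)  # used by assign
    args = parser.parse_args(argv)

    # Reject combinations that the description says cannot work.
    if args.mode == "multiTask" and args.gpu is None:
        parser.error("multiTask mode should be used with --gpu NUM")
    if args.mode == "assign" and args.path is None:
        parser.error("assign mode should be used with --path")
    return args
```

With this sketch, `parse_args(["-m", "multiTask", "--gpu", "1"])` is accepted, while `parse_args(["-m", "assign"])` exits with a usage error because no `--path` was given.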


- Add models under `./model`.
- Models available now:
  - Modified 2D ResNet18/34/50/101 with different tail layers
  - Modified ResNet18/34/50/101, complex-number version (Half/Full)
  - Modified ResNet+NLP
  - Modified DenseNet/SqueezeNet
  - 1D ResNet for the direct inverse problem
  - Deep Multilayer Perceptron (MLP)
  - DARTS model (with geotype input)
- For the task JSON template, see `makeproject.py`.
- The front script is `train_base.py`.

- For the task JSON template, see `makeNASproject.py`.
- The code has been rewritten, and the unit weight is now split:
  - call `architect_darts.ArchitectDARTS` for shared-weight evolution.
  - call `architect_msdarts.ArchitectMSDARTS` for full-weight evolution (this may allocate much more memory and may be unstable in my tests).
- Note that the drop phase only works during training.
- The front script is `darts_search.py`.

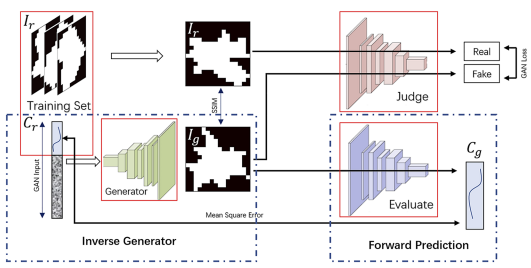

There are two ways to train the GAN model:

- the original Deep Convolutional GAN (DCGAN) at `./GANproject/DCGAN*.py`
- the Wasserstein GAN (WGAN) at `./GANproject/GAN*.py`. However, the Wasserstein distance makes training unstable in this scenario.
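To make the difference between the two objectives concrete, here is a minimal sketch of the textbook discriminator loss of a DCGAN and the critic loss of a WGAN, written in plain Python. It is not taken from the scripts in `./GANproject`, and the function names are hypothetical. The DCGAN discriminator outputs probabilities and is trained with binary cross-entropy, while the WGAN critic outputs unbounded scores, so its loss has no lower bound unless the critic is constrained.

```python
import math


def dcgan_discriminator_loss(d_real, d_fake, eps=1e-12):
    """Binary cross-entropy loss; inputs are probabilities in (0, 1)."""
    real_term = sum(-math.log(p + eps) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - p + eps) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def wgan_critic_loss(c_real, c_fake):
    """Mean fake score minus mean real score; inputs are unbounded scores."""
    return sum(c_fake) / len(c_fake) - sum(c_real) / len(c_real)
```

For example, an undecided discriminator that outputs 0.5 everywhere has a DCGAN loss of about `2 * log(2)`, whereas the WGAN critic loss can be pushed arbitrarily low simply by scaling the scores.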


There are two parts, `DCGANTRAIN` and `DCGANCURVE`, which correspond to `pattern confidence` and `pattern-curve map` respectively.

The whole GAN flow for practical implementation is