⚠️ This is old, untested and undocumented code. Its only use case is to reproduce the experiment below using the original code. The original experiment and the code in experiment.py were written by Hugh Salimbeni for the paper Deep Gaussian Processes with Importance-Weighted Variational Inference. The code under src is an early version of GPflux.

Toy Deep Gaussian Process Experiment

Code to reproduce Fig. 1 of Salimbeni et al. (2018) and Fig. 7 of Leibfried et al. (2021).

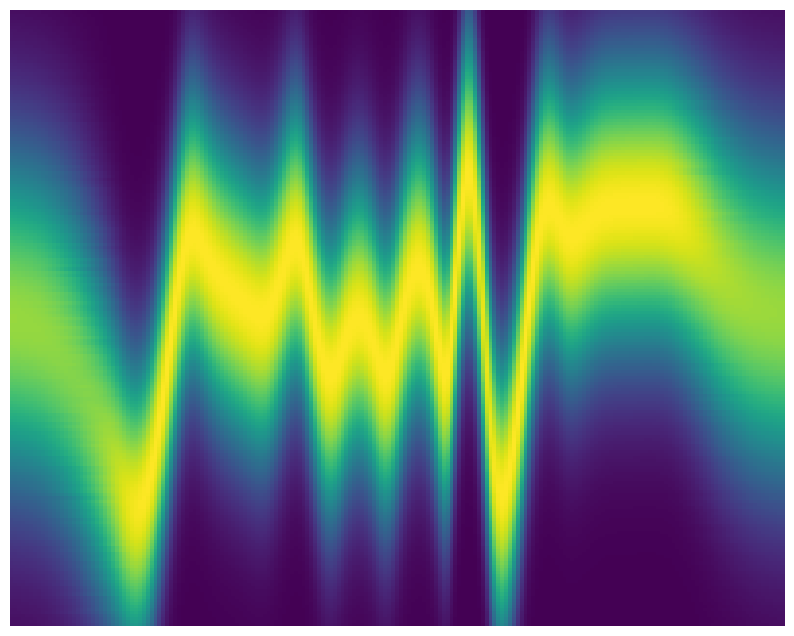

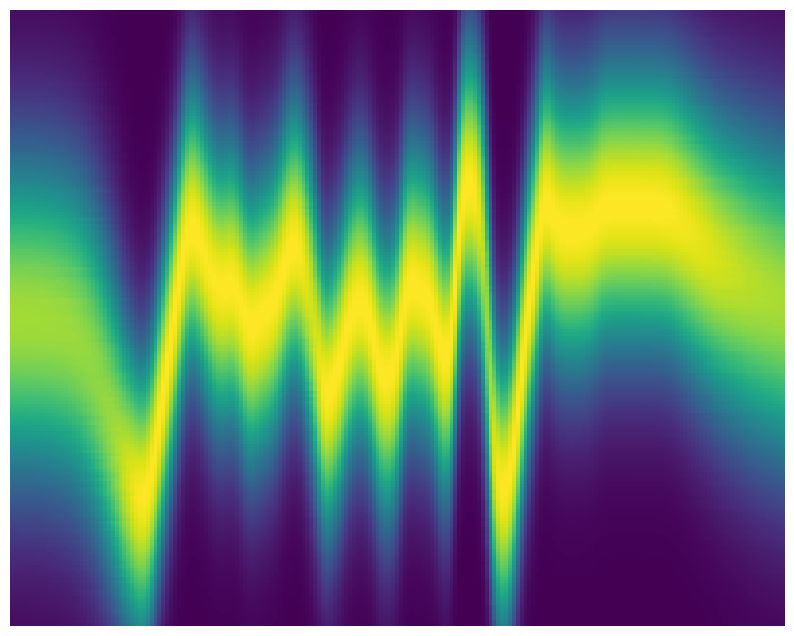

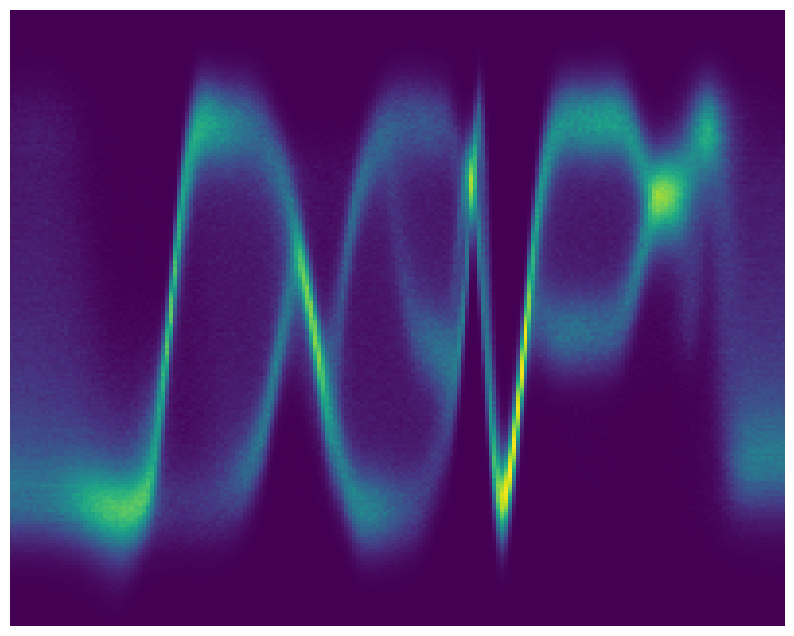

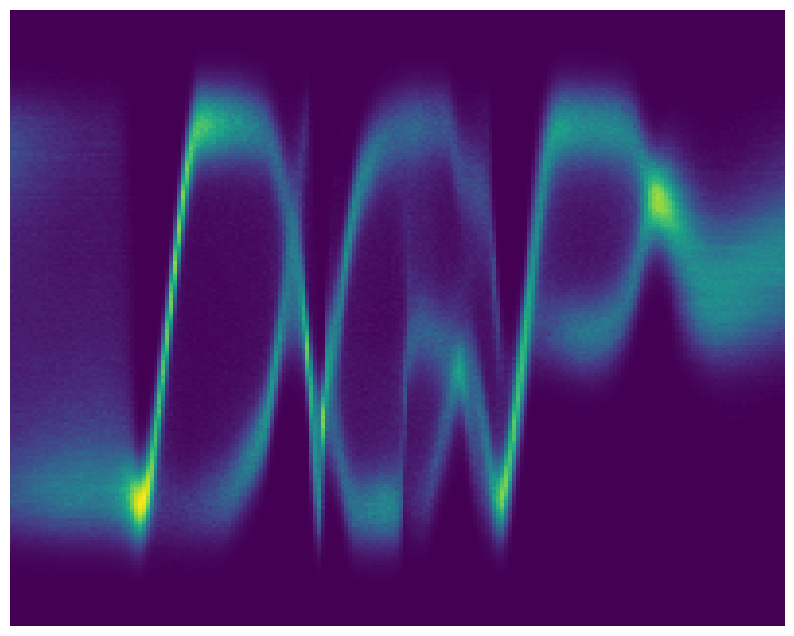

Models shown, in order: GP, GP + GP, Latent Layer (LL) + GP, LL + GP + GP.

Installation

Disclaimer: legacy code written in Python 3.6 and TensorFlow 1.5.

The experiment uses a (very) early version of GPflux (contained in this repo under source) and GPflow 1.2.0 (commit hash).
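Because the code depends on these exact legacy versions, it can help to check the environment once the installation steps below are done. The following is a minimal sketch, not part of the original repository: it assumes the packages are importable as `tensorflow` and `gpflow` and that each exposes a `__version__` attribute. The helper names `matches`, `installed_version` and `report` are hypothetical.

```python
import importlib
import sys

# Versions stated in this README. The module names and the reliance on
# __version__ are assumptions of this sketch.
EXPECTED = {"tensorflow": "1.5", "gpflow": "1.2.0"}
EXPECTED_PYTHON = "3.6"


def matches(actual, expected):
    """True if `actual` begins with the dot-separated components of `expected`."""
    if actual is None:
        return False
    want = expected.split(".")
    return actual.split(".")[: len(want)] == want


def installed_version(module_name):
    """Return the module's __version__, or None if it cannot be imported."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        return None
    return getattr(module, "__version__", None)


def report():
    """Print one line per component, flagging any version mismatch."""
    python_version = "{}.{}".format(*sys.version_info[:2])
    status = "ok" if matches(python_version, EXPECTED_PYTHON) else "MISMATCH"
    print("python", python_version, status)
    for name, expected in EXPECTED.items():
        actual = installed_version(name)
        status = "ok" if matches(actual, expected) else "MISMATCH"
        print(name, actual, status)


if __name__ == "__main__":
    report()
```

Comparing version components rather than raw string prefixes avoids treating, say, `1.50` as a match for `1.5`.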

Step 1.

Create a virtual environment with Python 3.6, for example using Anaconda. Once (Mini)conda is installed, create and activate a new virtual environment with

    conda create -n py36 python=3.6 && conda activate py36

Step 2.

Install the dependencies

    pip install -r requirements.txt

Run the experiments

From the repo's root directory run

    python experiment.py --configuration G1 --mode VI

which will create a shallow sparse GP model, train and evaluate it. The results will be stored in the directory results. For a latent-variable shallow sparse GP model, run

    python experiment.py --configuration L1_G1 --mode VI

and for deep sparse GP versions of the former (with two layers each) run

    python experiment.py --configuration G1_G1 --mode VI

and

    python experiment.py --configuration L1_G1_G1 --mode VI

Note that each experiment will create separate folders in the results directory.
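To run all four configurations in one go, a small driver script can loop over the commands listed above. This is a minimal sketch rather than part of the original code: it assumes it is started from the repo's root directory, next to experiment.py, and uses only the `--configuration` and `--mode` values shown in this README. The helper names `build_commands` and `run_all` are hypothetical.

```python
import subprocess
import sys

# Configurations and mode copied from the commands in this README.
CONFIGURATIONS = ["G1", "L1_G1", "G1_G1", "L1_G1_G1"]
MODE = "VI"


def build_commands(configurations=CONFIGURATIONS, mode=MODE):
    """Return one argv list per configuration."""
    return [
        [sys.executable, "experiment.py", "--configuration", name, "--mode", mode]
        for name in configurations
    ]


def run_all(commands, dry_run=True):
    """Print each command; also execute it when dry_run is False."""
    for argv in commands:
        print(" ".join(argv))
        if not dry_run:
            # check=True stops the loop at the first failing experiment.
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    # Dry run by default: this only prints the four commands.
    run_all(build_commands())
```

Calling `run_all(build_commands(), dry_run=False)` executes the experiments one after another. Using `sys.executable` keeps every run inside the interpreter of the active environment.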