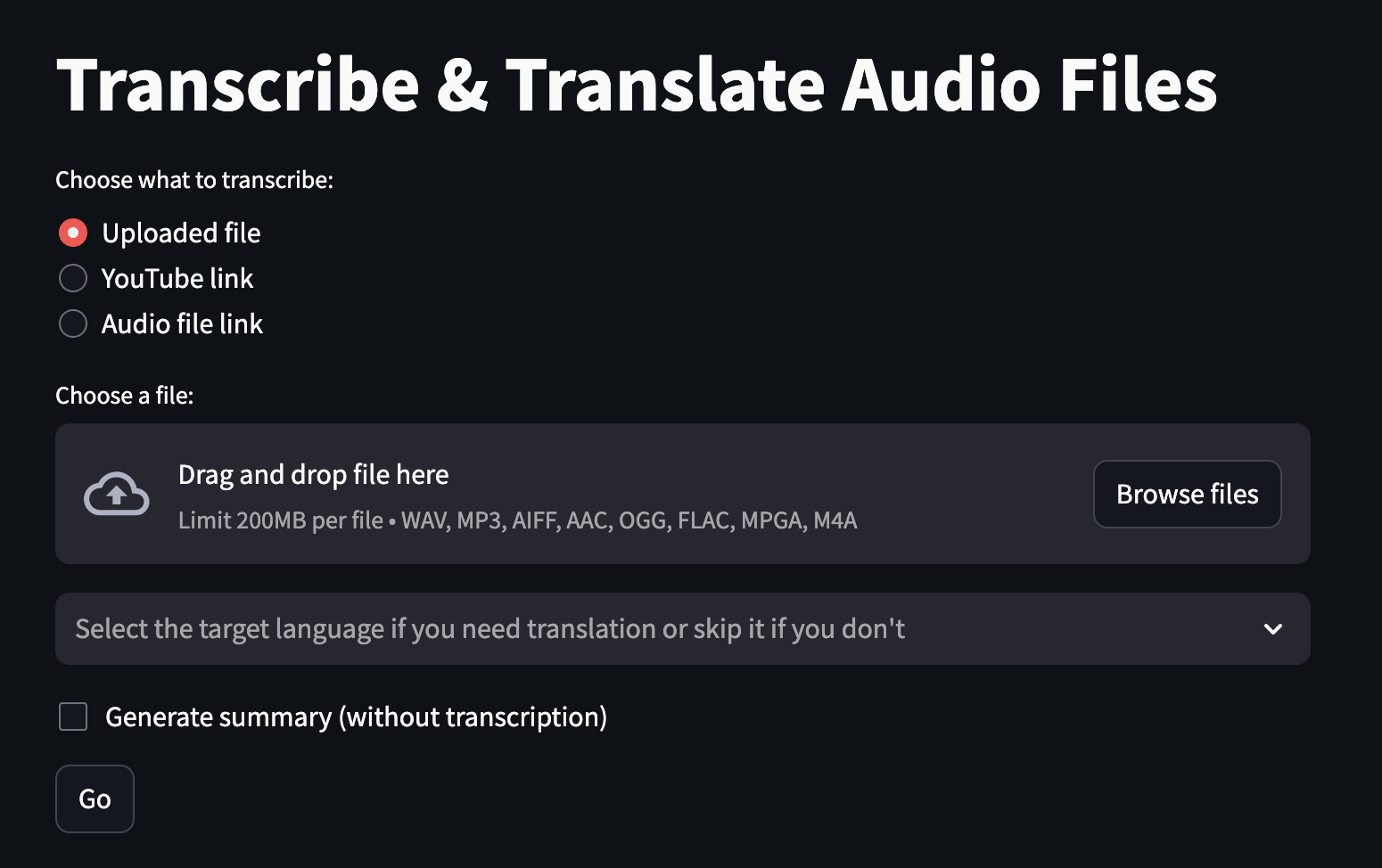

Transcriber & translator for audio files. Like Otter.ai but open-source and almost free.

An Otter.ai monthly subscription is $16.99 per user.

For that you get:

1200 monthly transcription minutes; 90 minutes per conversation

Transcription:

With Replicate's cloud-hosted AI models, at current prices and with the models used here, 1200 minutes will cost approximately $5.50.

At least three times cheaper with the same or even better quality of transcription, in my opinion.

And you pay as you go.
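The comparison above can be checked with a few lines of arithmetic. This is only a sketch using the figures quoted in this README ($16.99 per month for Otter.ai, roughly $5.50 for 1200 minutes on Replicate); the constant and function names are made up for illustration, and real prices change over time.

```python
# Figures quoted in this README; treat them as approximate.
OTTER_MONTHLY_USD = 16.99
REPLICATE_USD_PER_1200_MIN = 5.50
INCLUDED_MINUTES = 1200


def cost_per_minute(total_usd, minutes):
    """Average price of one transcription minute."""
    return total_usd / minutes


def savings_ratio(subscription_usd, pay_as_you_go_usd):
    """How many times cheaper the pay-as-you-go option is."""
    return subscription_usd / pay_as_you_go_usd


if __name__ == "__main__":
    ratio = savings_ratio(OTTER_MONTHLY_USD, REPLICATE_USD_PER_1200_MIN)
    per_minute = cost_per_minute(REPLICATE_USD_PER_1200_MIN, INCLUDED_MINUTES)
    print(f"Replicate: ${per_minute:.4f} per minute, {ratio:.1f}x cheaper")
```

Because billing is pay as you go, the gap grows further in months where fewer than 1200 minutes are used.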

Translation and summarization: Gemini 1.5 Pro is free if you use the Gemini API from a project that has billing disabled, though without the benefits available in the paid plan.

Hosting: Free tiers or trials of Render, Google Cloud, Oracle Cloud, AWS, Azure, IBM Cloud, or low-cost DigitalOcean, or any provider you like.

Total: Pay as you go for 10 hours of audio. Replicate + free Gemini API + DigitalOcean = $2.00 + $0.00 + $0.10 = $2.10

Running the Whisper model on Replicate is much cheaper than using the OpenAI API for Whisper.

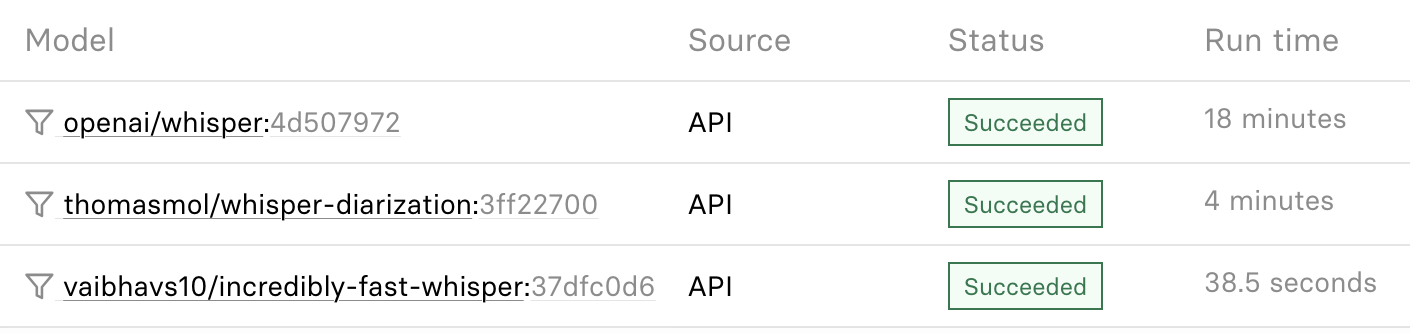

I use two models:

vaibhavs10/incredibly-fast-whisper best for speed

thomasmol/whisper-diarization best for dialogs

Same audio 45 minutes (6 speakers) comparison by model

OpenAI Speech to text Whisper model

File uploads are currently limited to 25 MB.

To stay under this limit, I compress the audio first. (The models I use also compress their input, but in practice I still hit a limit when relying on a model's own compression.) Without compression, 45 minutes of audio is 63 MB; after compression, the same 45 minutes is 4 MB. With compression, we can avoid splitting audio into chunks and raise the limit to approximately 3 hours and 45 minutes of audio without losing transcription quality.
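The exact compression settings are not stated here, so the following is only a sketch of one way to do it with pydub: re-encode to mono, 16 kHz MP3 at a low bitrate. The function names, the `16k` bitrate, and the sample rate are assumptions for illustration, not the settings this project necessarily uses. pydub needs ffmpeg installed to read and write MP3.

```python
def estimated_size_mb(duration_min, bitrate_kbps):
    """Approximate size of a constant-bitrate file in megabytes (10^6 bytes)."""
    return duration_min * 60 * bitrate_kbps * 1000 / 8 / 1_000_000


def compress_audio(src_path, dst_path, bitrate="16k"):
    """Re-encode audio as mono 16 kHz MP3 at a low bitrate (assumed settings)."""
    # Imported here so the size helper above works without pydub installed.
    from pydub import AudioSegment  # requires ffmpeg on the PATH

    audio = AudioSegment.from_file(src_path)
    # Speech survives mono and a reduced sample rate well; music would not.
    audio = audio.set_channels(1).set_frame_rate(16000)
    audio.export(dst_path, format="mp3", bitrate=bitrate)
    return dst_path


if __name__ == "__main__":
    # Rough size of 45 minutes at 16 kbps, before touching any file.
    print(f"{estimated_size_mb(45, 16):.1f} MB")
```

The size helper is handy for checking ahead of time whether a recording of a given length will fit under the 25 MB upload limit at a chosen bitrate.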

If you still need to transcribe longer audio, you can split the file using pydub's silence.split_on_silence(), silence.detect_silence(), or silence.detect_nonsilent(). The speed of these functions is hardware-dependent, but they run about 10 times faster than listening to the entire file.

In my tests, I ran into three main problems:

- These functions do not work as I expect.

- If you split purely by time, you can cut in the middle of a word.

- Post-processing becomes a challenge: it is hard to identify speakers consistently across chunks, and timestamps are lost.

All of this applies only to very long audio.
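One possible way to soften the second and third problems is to cut only inside detected silence and to keep each chunk's start offset, so timestamps can be shifted back after transcription. The sketch below is not what this project does; `group_ranges` and `split_audio` are hypothetical helpers, and the `min_silence_len` and `silence_thresh` values are guesses that usually need tuning per recording.

```python
def group_ranges(nonsilent_ranges, max_chunk_ms):
    """Merge consecutive [start_ms, end_ms] ranges into chunks of at most
    max_chunk_ms, so every cut point falls inside a silent gap.
    A single range longer than max_chunk_ms is kept whole."""
    chunks = []
    for start, end in nonsilent_ranges:
        if chunks and end - chunks[-1][0] <= max_chunk_ms:
            chunks[-1][1] = end  # extend the current chunk
        else:
            chunks.append([start, end])  # start a new chunk
    return [tuple(chunk) for chunk in chunks]


def split_audio(path, max_chunk_ms=30 * 60 * 1000):
    """Return (start_offset_ms, AudioSegment) pairs cut at silences."""
    # Imported here so group_ranges can be used without pydub installed.
    from pydub import AudioSegment
    from pydub.silence import detect_nonsilent

    audio = AudioSegment.from_file(path)
    ranges = detect_nonsilent(
        audio,
        min_silence_len=700,               # assumed: 0.7 s counts as a pause
        silence_thresh=audio.dBFS - 16,    # assumed: relative to average level
    )
    return [(start, audio[start:end]) for start, end in group_ranges(ranges, max_chunk_ms)]
```

Adding each chunk's start offset to the timestamps returned for that chunk restores positions relative to the original file. Speaker labels still have to be reconciled across chunks separately.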

Gemini 1.5 Pro model name and properties

Max output tokens: 8,192

0.75 words per token = ~6,144 words or about 35 minutes of speaking. But for non-English languages, most words are counted as two or more tokens.

The maximum number of output tokens is currently 8,192, so audio post-processing, which includes correction and translation, can only be done in a single pass for files that are approximately 35 minutes long. Other models have a maximum output of 4,096 tokens or less. If you need more than 8,192 output tokens, you may need to process in batches, but this will significantly increase the processing time.

Translation by chunks still works, but the quality is a little lower.
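The word budget above and the batching idea can be sketched as follows. This is an illustration under the README's own rule of thumb (0.75 words per token); `word_budget` and `batch_lines` are hypothetical helpers, and for non-English text a lower words-per-token value would be a safer assumption.

```python
MAX_OUTPUT_TOKENS = 8192
WORDS_PER_TOKEN = 0.75  # rule of thumb for English


def word_budget(max_tokens=MAX_OUTPUT_TOKENS, words_per_token=WORDS_PER_TOKEN):
    """Approximate number of words that fit in one model response."""
    return int(max_tokens * words_per_token)


def batch_lines(lines, max_words):
    """Group transcript lines into batches that stay within max_words.
    Lines are never split, so a single oversized line forms its own batch."""
    batches, current, count = [], [], 0
    for line in lines:
        n = len(line.split())
        if current and count + n > max_words:
            batches.append(current)
            current, count = [], 0
        current.append(line)
        count += n
    if current:
        batches.append(current)
    return batches


if __name__ == "__main__":
    print(word_budget())  # words per single response under these assumptions
```

Batching on whole lines keeps each speaker turn intact, which may help limit the quality drop seen with chunked translation.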

Max audio length: approximately 8.4 hours

It still works well for summarization.

Language support for translation.

Example of .env file:

GEMINI_API_KEY = your_api_key

REPLICATE_API_TOKEN = your_api_key

You need to replace the path to the env_file in compose.yaml
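Inside the container, the two values from the .env file are read as environment variables. A small startup check like the one below can fail early with a clear message when one is missing. It is only a sketch: `load_api_keys` is a hypothetical helper, not a function from this project.

```python
import os

# Variable names taken from the example .env file above.
REQUIRED_KEYS = ("GEMINI_API_KEY", "REPLICATE_API_TOKEN")


def load_api_keys(env=None):
    """Return the required keys, or raise if any is missing or empty."""
    env = os.environ if env is None else env
    missing = [key for key in REQUIRED_KEYS if not env.get(key)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {key: env[key] for key in REQUIRED_KEYS}
```

Passing a plain dict as `env` makes the check easy to exercise without touching the real environment.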

Get Gemini API key

Get Replicate API key

| Platform | Links |
|---|---|
| Render | Deploy from GitHub / GitLab / Bitbucket |
| Google Cloud | Quickstart: Deploy to Cloud Run; Tutorial: Deploy your dockerized application on Google Cloud |
| Oracle Cloud | Container Instances |
| IBM Cloud | IBM Cloud® Code Engine |
| AWS | AWS App Runner |
| Azure | Web App for Containers; Deploy a containerized app to Azure |
| DigitalOcean | How to Deploy from Container Images |