This repository was made as an assignment for the Fall 2020 course EE321. In this project we demonstrate the Bayesian matting algorithm, which was first introduced in the paper "A Bayesian Approach to Digital Matting" [1].

Image matting is the process of accurately estimating the foreground object in images and videos. It is an important technique in image and video editing applications, particularly in film production for creating visual effects. To fully separate the foreground from the background in an image, the alpha values of partial or mixed pixels must be estimated accurately. Bayesian matting models both the foreground and background colour distributions with spatially-varying mixtures of Gaussians, and assumes a fractional blending of the foreground and background colours to produce the final output. It then uses a maximum-likelihood criterion to estimate the optimal opacity, foreground and background simultaneously. The goal of this assignment is to understand the real-life implications of the theory covered in class. The task is to implement Bayesian matting in Python.

This Bayesian framework for solving the matting problem is based on extracting the foreground element from a background image by estimating an opacity (alpha) for each pixel of the foreground element. The approach models both the foreground and background colours as spatially-varying mixtures of Gaussians and assumes a fractional blending of the foreground and background colours to produce the final output. It uses a maximum-likelihood criterion to estimate the optimal opacity, or alpha, at a given pixel.

We were provided the Alpha Matting dataset [2], which can be found on its website.

The Input images are here.

The Trimap images are here.

The Ground truth alpha images are here.

The dataset contains 27 images, with their trimaps and ground truth alpha mattes.
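A trimap labels each pixel as definitely foreground, definitely background, or unknown. The snippet below is a minimal sketch of how such a trimap could be split into three masks. It assumes the common encoding of 255 for foreground and 0 for background, with every other grey value treated as unknown; the function name `split_trimap` and the tiny example array are hypothetical and not taken from the notebook.

```python
import numpy as np

def split_trimap(trimap, fg_value=255, bg_value=0):
    """Return boolean masks (foreground, background, unknown) for a trimap.

    Assumption: fg_value marks known foreground, bg_value marks known
    background, and any other grey level marks the unknown region.
    """
    trimap = np.asarray(trimap)
    fg = trimap == fg_value
    bg = trimap == bg_value
    unknown = ~(fg | bg)
    return fg, bg, unknown

# Hypothetical 3x3 trimap: background top-left, foreground bottom-right.
trimap = np.array([[0,   0,   128],
                   [0,   128, 255],
                   [128, 255, 255]], dtype=np.uint8)
fg, bg, unknown = split_trimap(trimap)
```

Only the pixels in the `unknown` mask need to be solved for; the known regions provide the colour samples for the foreground and background models.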

The project uses Python >= 3.5

Other technologies used

- Jupyter Notebook

- OpenCV

- Pillow

- Matplotlib

- NumPy

* The project is also compatible with Google Colaboratory

To matte an image, we want to determine which parts (pixels) of the image belong to the foreground and which belong to the background. For this, we define an opacity (alpha) parameter for each pixel. The image can then be treated as a blend of two separate foreground and background images.

For every pixel we need 7 values: 3 for the foreground RGB, 3 for the background RGB, and 1 for the opacity (alpha).

For Bayesian matting we model each pixel as a distribution of colour data and then try to solve 3 equations (one compositing equation per colour channel) for our 7 unknowns, using maximum likelihood estimation. We can never recover the actual values of 7 variables from just 3 equations, but we try to get a close estimate of them.
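The blending model and its ambiguity can be illustrated with a short sketch. It uses the standard compositing equation C = alpha * F + (1 - alpha) * B from [1]; the helper name `composite` and the example colours are made up for illustration. The two calls use different foreground, background and alpha values but produce the same observed colour, which is why extra assumptions about F and B are needed.

```python
import numpy as np

def composite(fg, bg, alpha):
    """Blend foreground over background: C = alpha * F + (1 - alpha) * B.

    fg and bg are RGB colours (or HxWx3 images) in [0, 1];
    alpha is a scalar (or HxW matte) in [0, 1].
    """
    fg = np.asarray(fg, dtype=float)
    bg = np.asarray(bg, dtype=float)
    # Add a trailing axis so alpha broadcasts over the colour channels.
    alpha = np.asarray(alpha, dtype=float)[..., None]
    return alpha * fg + (1.0 - alpha) * bg

# Half red over blue ...
c1 = composite([1.0, 0.0, 0.0], [0.0, 0.0, 1.0], 0.5)
# ... and fully opaque purple over an unrelated background
c2 = composite([0.5, 0.0, 0.5], [0.2, 0.9, 0.1], 1.0)
# Both give the same observed colour [0.5, 0.0, 0.5].
```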

We progressively solve for all the unknown pixels based on the distribution of their neighbouring pixels. Each unknown pixel is estimated from the centres of the clusters formed by nearby foreground and background pixels. This way we determine how much of each pixel's value comes from the foreground and how much from the background.
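The per-pixel estimation can be sketched as below. This is a simplified illustration and not the notebook's actual code: it assumes a single foreground cluster and a single background cluster, each summarised by a mean and covariance (`mean_f`, `cov_f`, `mean_b`, `cov_b`), plus a camera noise level `sigma_c`. The function name `solve_pixel` and all default values are hypothetical. Following the update equations in [1], it alternates between solving a 6x6 linear system for F and B with alpha fixed, and projecting the observed colour onto the line between B and F to update alpha.

```python
import numpy as np

def solve_pixel(c, mean_f, cov_f, mean_b, cov_b,
                sigma_c=0.05, alpha=0.5, n_iter=20):
    """Estimate (F, B, alpha) for one observed colour c.

    Simplification: one Gaussian per layer; the paper evaluates several
    foreground/background cluster pairs and keeps the most likely one.
    """
    eye = np.eye(3)
    inv_f = np.linalg.inv(cov_f)
    inv_b = np.linalg.inv(cov_b)
    inv_var = 1.0 / sigma_c ** 2
    for _ in range(n_iter):
        # Step 1: alpha fixed, solve the 6x6 linear system for F and B.
        a = np.zeros((6, 6))
        a[:3, :3] = inv_f + eye * alpha ** 2 * inv_var
        a[:3, 3:] = eye * alpha * (1.0 - alpha) * inv_var
        a[3:, :3] = a[:3, 3:]
        a[3:, 3:] = inv_b + eye * (1.0 - alpha) ** 2 * inv_var
        rhs = np.concatenate([
            inv_f @ mean_f + c * alpha * inv_var,
            inv_b @ mean_b + c * (1.0 - alpha) * inv_var,
        ])
        x = np.linalg.solve(a, rhs)
        f = np.clip(x[:3], 0.0, 1.0)
        b = np.clip(x[3:], 0.0, 1.0)
        # Step 2: F and B fixed, project c onto the segment from B to F.
        diff = f - b
        alpha = float(np.clip((c - b) @ diff / max(diff @ diff, 1e-12),
                              0.0, 1.0))
    return f, b, alpha

# Made-up example: red foreground, blue background, true alpha of 0.3.
mean_f = np.array([1.0, 0.0, 0.0])
mean_b = np.array([0.0, 0.0, 1.0])
cov = 1e-4 * np.eye(3)
c = 0.3 * mean_f + 0.7 * mean_b
f, b, alpha = solve_pixel(c, mean_f, cov, mean_b, cov)
# alpha should end up close to the true value of 0.3
```

In a full implementation the means and covariances would come from clustering the already-known or already-solved pixels in a window around each unknown pixel.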

This project attempts to implement the algorithm introduced in the CVPR 2001 paper [1]. The main algorithm is implemented in BayesianMatting.ipynb, which can also be opened in Google Colaboratory.

This code is heavily inspired by MarcoForte's code on Bayesian Matting. Do have a look!

The cells can be executed in Jupyter Notebook once all the dependencies are installed.

After running the algorithm on each image, we get the following results:

| Sample Name | Absolute Difference from Ground Truth |
|---|---|
| GT01 | 1.11 |
| GT02 | 2.43 |
| GT03 | 7.56 |
| GT04 | 10.63 |
| GT05 | 0.99 |
| GT06 | 2.24 |
| GT07 | 1.81 |
| GT08 | 14.72 |
| GT09 | 2.85 |
| GT10 | 3.65 |
| GT11 | 4.77 |
| GT12 | 1.44 |
| GT13 | 9.99 |
| GT14 | 1.90 |
| GT15 | 3.29 |
| GT16 | 19.67 |
| GT17 | 2.27 |
| GT18 | 2.70 |
| GT19 | 11.50 |
| GT20 | 1.58 |
| GT21 | 5.94 |
| GT22 | 2.81 |
| GT23 | 2.19 |
| GT24 | 5.66 |
| GT25 | 13.6 |
| GT26 | 17.58 |
| GT27 | 9.99 |
| Average | 6.10 |
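For reference, an absolute-difference score between an estimated matte and the ground truth could be computed along these lines. This is only a sketch: the exact normalisation used for the table above is not stated, so the function name `alpha_abs_difference` and the `scale` divisor of 1000 are assumptions, as is the convention that both mattes hold values in [0, 1].

```python
import numpy as np

def alpha_abs_difference(estimate, ground_truth, scale=1000.0):
    """Sum of absolute differences between two alpha mattes, divided by scale.

    Assumption: both mattes have the same shape and values in [0, 1];
    the scale factor is a guess, not the notebook's documented setting.
    """
    estimate = np.asarray(estimate, dtype=float)
    ground_truth = np.asarray(ground_truth, dtype=float)
    if estimate.shape != ground_truth.shape:
        raise ValueError("alpha mattes must have the same shape")
    return float(np.abs(estimate - ground_truth).sum() / scale)

# Worst case on a made-up 10x10 matte: every pixel is off by 1.
gt = np.ones((10, 10))
est = np.zeros((10, 10))
score = alpha_abs_difference(est, gt)  # 100 / 1000 = 0.1
```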

All the final images are stored in the results folder.

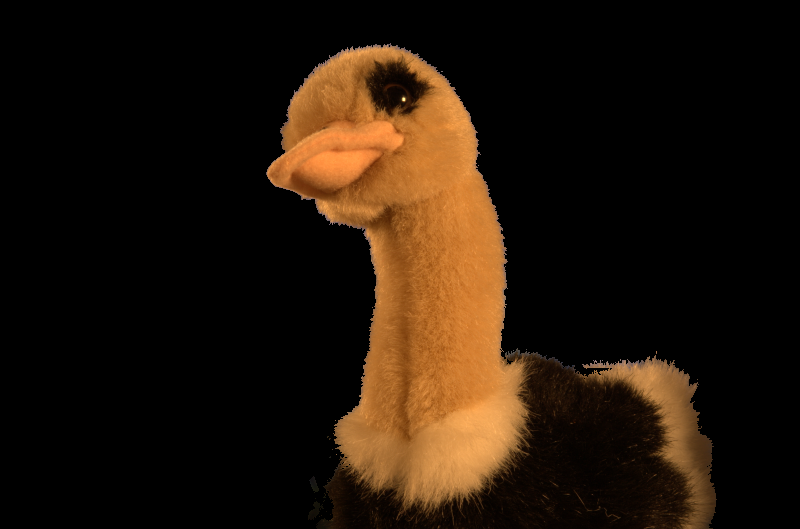

The Input Image -

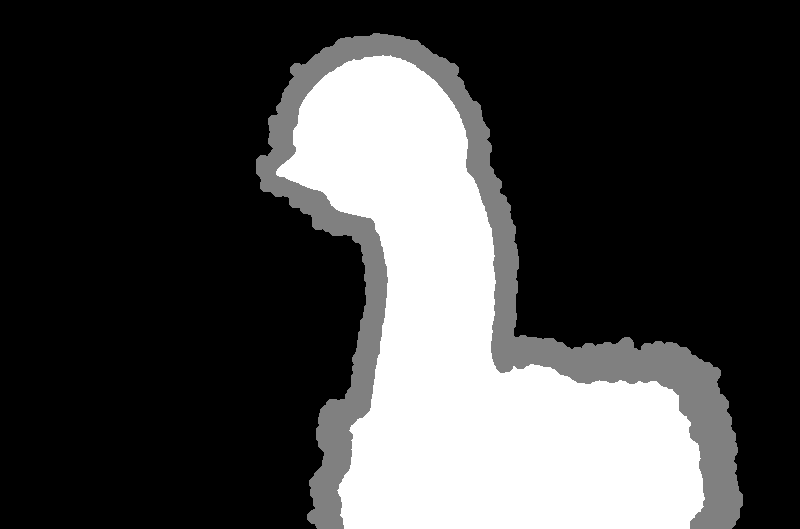

The Trimap Image -

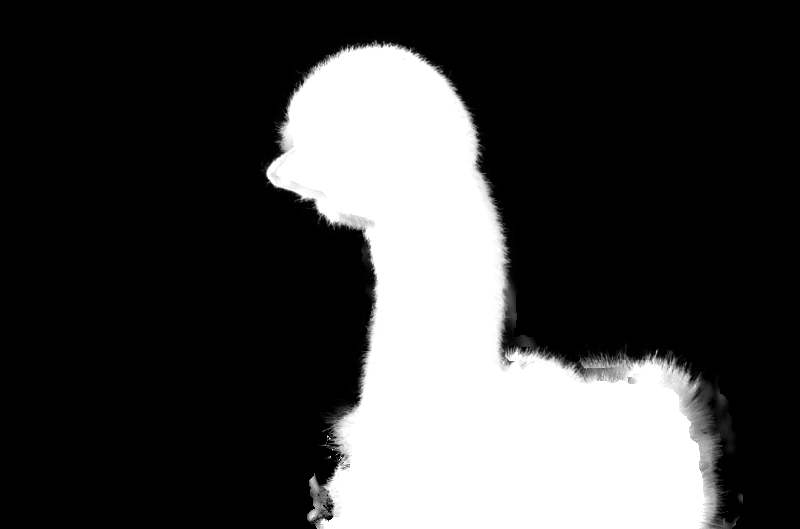

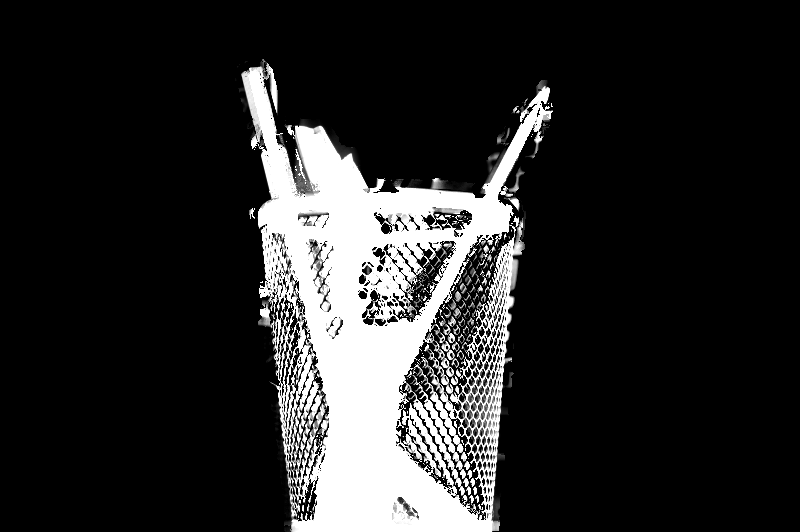

The Solved Alpha Matte -

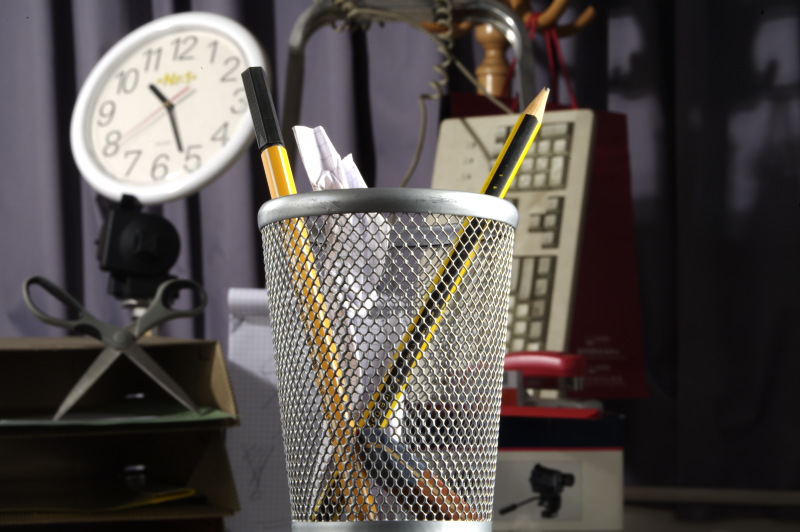

Finally, the mask over the original image -


[1] Yung-Yu Chuang, Brian Curless, David H. Salesin, and Richard Szeliski. A Bayesian Approach to Digital Matting. In Proceedings of IEEE Computer Vision and Pattern Recognition (CVPR 2001), Vol. II, 264-271, December 2001.

[2] Christoph Rhemann, Carsten Rother, Jue Wang, Margrit Gelautz, Pushmeet Kohli, Pamela Rott. A Perceptually Motivated Online Benchmark for Image Matting. Conference on Computer Vision and Pattern Recognition (CVPR), June 2009.