Yiyang Jiang, Wengyu Zhang, Xulu Zhang, Xiao-Yong Wei, Chang Wen Chen, and Qing Li.

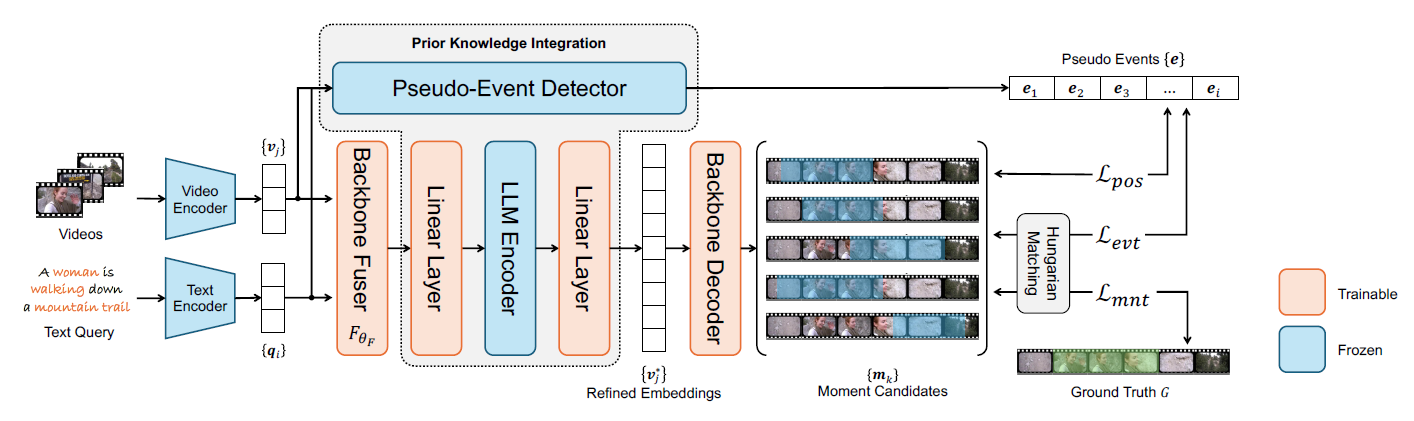

Official PyTorch implementation of 'Prior Knowledge Integration via LLM Encoding and Pseudo-Event Regulation for Video Moment Retrieval'

Installation | Dataset | Training | Evaluation | Model Zoo

[2024.7.21] Our paper has been accepted by ACM Multimedia 2024 (Oral).

[2024.7.10] The code and dataset for related tasks have been released.

[2024.5.10] The repository is public.

[2024.4.10] The repository is created.

- Clone the repository from GitHub.

git clone https://github.com/fletcherjiang/LLMEPET.git

cd LLMEPET

- Create a conda environment.

conda create -n LLMEPET python=3.8

conda activate LLMEPET

- Install the required packages.

pip install -r requirements.txt

For all datasets, we provide extracted features; download them and place them into features/.

.

├── LLMEPET

│ ├── llm_epet

│ ├── data

│ ├── results

│ ├── run_on_video

│ ├── standalone_eval

│ └── utils

├── data

├── features

│ ├── qvhighlight

│ ├── charades

│ ├── tacos

│ ├── tvsum

│ └── youtube_uni

├── llama

│ ├── consolidated.00.pth

│ ├── tokenizer.model

│ └── params.json

├── README.md

└── ···

- You can download the features from Hugging Face OR

- LLaMA

If you want to try LLaMA-2 or LLaMA-3, you can download the corresponding LLaMA-2 or LLaMA-3 checkpoints instead. Note that you will need to edit llm_epet/llama.py yourself to load them.
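Before training, it can help to confirm that the downloaded files ended up where the directory tree above expects them. The function below is a minimal sketch and not part of the repository: the name `check_layout` is hypothetical, its only argument is the directory shown as `.` in the tree, and it checks every feature folder and LLaMA file listed there (drop the dataset folders you do not plan to use).

```shell
# Hypothetical helper, not part of the repository: report which feature
# folders and LLaMA files from the directory tree are still missing.
# $1 is the directory shown as "." in the tree (default: current directory).
check_layout() {
  root="${1:-.}"
  missing=0
  # One extracted-feature folder per dataset, as listed under features/.
  for d in qvhighlight charades tacos tvsum youtube_uni; do
    if [ ! -d "$root/features/$d" ]; then
      echo "missing directory: $root/features/$d" >&2
      missing=1
    fi
  done
  # The three LLaMA checkpoint files listed under llama/.
  for f in consolidated.00.pth tokenizer.model params.json; do
    if [ ! -f "$root/llama/$f" ]; then
      echo "missing file: $root/llama/$f" >&2
      missing=1
    fi
  done
  # Exit status 0 only when nothing was reported missing.
  [ "$missing" -eq 0 ]
}
```

For example, `check_layout . && echo "layout OK"` prints every missing path to stderr and only reports success when nothing is missing.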

bash llm_epet/scripts/train.sh

bash llm_epet/scripts/charades_sta/train.sh

bash llm_epet/scripts/tacos/train.sh

bash llm_epet/scripts/tvsum/train_tvsum.sh

bash llm_epet/scripts/youtube_uni/train.sh
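The five training scripts above can also be chained when you want to retrain on every benchmark in one go. This is a hypothetical convenience wrapper (`train_all` and the `DRY_RUN` variable are our own names, not the repository's); it assumes it is run from the repository root, as the relative script paths imply, and stops at the first script that exits with an error.

```shell
# Hypothetical helper, not part of the repository: run the training scripts
# one after another and stop at the first failure.
# With DRY_RUN=1 it only prints the commands it would run.
train_all() {
  for script in \
    llm_epet/scripts/train.sh \
    llm_epet/scripts/charades_sta/train.sh \
    llm_epet/scripts/tacos/train.sh \
    llm_epet/scripts/tvsum/train_tvsum.sh \
    llm_epet/scripts/youtube_uni/train.sh
  do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "bash $script"
    else
      # Abort the whole chain as soon as one dataset fails.
      bash "$script" || { echo "training failed: $script" >&2; return 1; }
    fi
  done
}
```

Run `DRY_RUN=1 train_all` first to preview the commands, then `train_all` to execute them. Running the scripts sequentially rather than in parallel avoids several jobs competing for the same GPU.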

bash llm_epet/scripts/inference.sh results/{direc}/model_best.ckpt 'val'

bash llm_epet/scripts/inference.sh results/{direc}/model_best.ckpt 'test'
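The `{direc}` placeholder stands for the run directory created under `results/` during training. If you simply want to evaluate your most recent run, a small helper can fill it in. This sketch assumes each run writes its `model_best.ckpt` into its own subdirectory of `results/`, as the path pattern suggests; `latest_ckpt` is a hypothetical name.

```shell
# Hypothetical helper, not part of the repository: print the most recently
# modified results/<run>/model_best.ckpt, i.e. a guess for {direc}.
# $1 is the results directory (default: results).
latest_ckpt() {
  results_dir="${1:-results}"
  # ls -t sorts by modification time, newest first; the error message is
  # silenced so that nothing is printed when no checkpoint exists yet.
  ls -t "$results_dir"/*/model_best.ckpt 2>/dev/null | head -n 1
}
```

For example: `bash llm_epet/scripts/inference.sh "$(latest_ckpt)" 'val'`. Because the helper relies on modification times, touching or copying an older checkpoint makes it the "latest"; pass the path explicitly when in doubt.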

Pack the hl_{val,test}_submission.jsonl files and submit them to CodaLab.
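Packing can be scripted as well. This is a minimal sketch, not the repository's tooling: `pack_submission` is a hypothetical name, and it assumes that both `hl_val_submission.jsonl` and `hl_test_submission.jsonl` were written to the same run directory, that CodaLab accepts a single zip archive with both files at its top level, and that the `zip` command-line tool is installed.

```shell
# Hypothetical helper, not part of the repository: zip the two prediction
# files into one archive for upload.
# $1: directory holding both .jsonl files (assumed to be the same directory)
# $2: output archive name (default: submission.zip, our own choice)
pack_submission() {
  run_dir="$1"
  out_zip="${2:-submission.zip}"
  for f in hl_val_submission.jsonl hl_test_submission.jsonl; do
    if [ ! -f "$run_dir/$f" ]; then
      echo "missing file: $run_dir/$f" >&2
      return 1
    fi
  done
  # Start from a fresh archive so stale entries from an earlier run are not kept.
  rm -f "$out_zip"
  # -j drops directory prefixes so both files sit at the archive's top level;
  # -q suppresses zip's progress output.
  zip -q -j "$out_zip" "$run_dir/hl_val_submission.jsonl" "$run_dir/hl_test_submission.jsonl"
}
```

For example, `pack_submission results/{direc}` writes `submission.zip` to the current directory, ready to upload.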

| Dataset | Model file |
|---|---|
| QVHighlights (Slowfast + CLIP) | checkpoints |
| Charades (Slowfast + CLIP) | checkpoints |
| TACoS | checkpoints |
| TVSum | checkpoints |
| Youtube-HL | checkpoints |

If you find the repository or the paper useful, please use the following entry for citation.

@inproceedings{

jiang2024prior,

title={Prior Knowledge Integration via {LLM} Encoding and Pseudo Event Regulation for Video Moment Retrieval},

author={Yiyang Jiang and Wengyu Zhang and Xulu Zhang and Xiaoyong Wei and Chang Wen Chen and Qing Li},

booktitle={ACM Multimedia 2024},

year={2024},

url={https://arxiv.org/abs/2407.15051}

}