Since Google Summer of Code 2018, FFmpeg has supported the sr filter for
applying super-resolution methods based on convolutional neural networks.
However, compiling FFmpeg with the proper libraries and preparing models for
super-resolution requires expert knowledge. This repository provides a
Dockerfile that makes super-resolution in FFmpeg a breeze!

- Miniconda: For producing pre-trained super-resolution models.

- Docker: For running containerized FFMpeg with super-resolution support.

- NVIDIA Container Toolkit: For GPU acceleration.
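Before starting, it can help to confirm that the command-line tools used in this guide are on your PATH. The helper below is a minimal sketch, not part of this repository: `missing_tools` is a hypothetical name, and `nvidia-smi` (which ships with the NVIDIA driver, not with the NVIDIA Container Toolkit) is used only as an assumed proxy for a working GPU setup.

```sh
# Print the name of every listed command that is not found on PATH.
# (Hypothetical helper; not provided by this repository.)
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}

# Example: check the tools that the steps in this guide invoke.
# An empty result means that everything was found.
# missing_tools conda docker git wget nvidia-smi
```

Finding `docker` on the PATH does not prove that containers can reach the GPU, so treat an empty result as a first sanity check only.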

First, download the pre-built Libtensorflow library from
https://storage.googleapis.com/tensorflow/libtensorflow/. Your version of
Libtensorflow (here 1.15.0) should match your version of CUDA; see the
compatibility table. Your version of CUDA should match your NVIDIA driver; see
the NVIDIA CUDA Toolkit Release Notes, Table 2.
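Because the same Libtensorflow version appears again in later steps, it may be convenient to keep it in a single variable and derive the download URL from it. The sketch below assumes that other versions follow the same file-name pattern as the 1.15.0 archive used in this guide, which is not guaranteed; `TF_VERSION` and `libtensorflow_url` are hypothetical names.

```sh
# Version assumed throughout this guide; change it in one place.
TF_VERSION=1.15.0

# Print the download URL of the pre-built GPU archive for a given version.
# The pattern is inferred from the 1.15.0 URL; an archive for another
# version may not exist under this name.
libtensorflow_url() {
    printf 'https://storage.googleapis.com/tensorflow/libtensorflow/libtensorflow-gpu-linux-x86_64-%s.tar.gz\n' "$1"
}

# Example (left commented out because it needs network access):
# wget "$(libtensorflow_url "$TF_VERSION")"
```

The commands that follow perform the same download with the version written out explicitly.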

$ mkdir -p tensorflow/lib_package/

$ pushd tensorflow/lib_package/

$ wget https://storage.googleapis.com/tensorflow/libtensorflow/libtensorflow-gpu-linux-x86_64-1.15.0.tar.gz

$ popd

If your version of Libtensorflow has not been pre-built, you will need to build it yourself, which takes about 2 hours on a quad-core laptop. Specific steps that need to be taken for some historic versions of Libtensorflow (1.12.3) are described in the issues.

$ git clone https://github.com/tensorflow/tensorflow.git

$ pushd tensorflow/tensorflow/tools/ci_build/linux/

$ git checkout v1.15.0

$ ./libtensorflow_gpu.sh

$ popd

Next, build our FFmpeg Docker image (takes about 5 minutes on a quad-core
laptop). Your nvidia/cuda base image should match your version of CUDA
(here 10.0).

$ git clone https://github.com/MIR-MU/ffmpeg-tensorflow.git

$ tar xzvf tensorflow/lib_package/libtensorflow-gpu*.tar.gz -C ffmpeg-tensorflow/

$ docker build --build-arg FROM_IMAGE=nvidia/cuda:10.0-cudnn7-devel-ubuntu18.04 -t ffmpeg-tensorflow ffmpeg-tensorflow/

You should now see ffmpeg-tensorflow among your Docker images. Remove
auxiliary files and intermediary Docker images downloaded during the
installation:

$ rm -rf ffmpeg-tensorflow/ tensorflow/

$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

ffmpeg-tensorflow latest 5d66a25f140b About an hour ago 5.34GB

tf-tensorflow-gpu latest 7f8c5a76892c 4 hours ago 6.15GB

$ docker rmi 7f8c5a76892c

Create and activate a Miniconda environment named ffmpeg-tensorflow. Your
version of the tensorflow package (here 1.15.0) should match the version of
Libtensorflow that you used during installation:

$ conda create --name ffmpeg-tensorflow tensorflow=1.15.0 numpy python=3

$ conda activate ffmpeg-tensorflow

Next, download the HighVoltageRocknRoll/sr GitHub repository, which contains
TensorFlow checkpoints for models trained on the DIV2K dataset, and convert
the checkpoints into super-resolution models:

$ git clone https://github.com/HighVoltageRocknRoll/sr

$ pushd sr

$ python generate_header_and_model.py --model=espcn --ckpt_path=checkpoints/espcn

$ python generate_header_and_model.py --model=srcnn --ckpt_path=checkpoints/srcnn

$ python generate_header_and_model.py --model=vespcn --ckpt_path=checkpoints/vespcn

$ python generate_header_and_model.py --model=vsrnet --ckpt_path=checkpoints/vsrnet

$ cp espcn.pb srcnn.pb vespcn.pb vsrnet.pb ..

$ popd

Finally, deactivate and remove the ffmpeg-tensorflow Miniconda environment,
and delete your local clone of the HighVoltageRocknRoll/sr GitHub repository:

$ conda deactivate

$ conda env remove --name ffmpeg-tensorflow

$ rm -rf sr/

You should be left with a number of super-resolution models:

$ ls

espcn.pb srcnn.pb vespcn.pb vsrnet.pb

The architectures and experimental results for the super-resolution models
are described in the HighVoltageRocknRoll/sr GitHub repository.
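Since the conversion scripts have been removed by this point, it may be worth checking that all four model files exist and are non-empty before moving on. The following is a minimal sketch; `missing_models` is a hypothetical helper, and it only tests for presence and size, not for whether a file is a valid model.

```sh
# Print the name of every expected model file that is missing or empty.
# $1: directory to check (default: current directory).
# (Hypothetical helper; it does not validate the contents of the files.)
missing_models() {
    dir=${1:-.}
    for model in espcn srcnn vespcn vsrnet; do
        [ -s "$dir/$model.pb" ] || printf '%s\n' "$model.pb"
    done
}

# Example: an empty result means that all four models are in place.
# missing_models .
```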

Download an example video and use the ffmpeg-tensorflow Docker image to
upscale it using one of the super-resolution models (here ESPCN):

$ wget https://media.xiph.org/video/derf/y4m/flower_cif.y4m

$ alias ffmpeg-tensorflow='docker run --rm --gpus all -u $(id -u):$(id -g) -v "$PWD":/data -w /data -it ffmpeg-tensorflow'

$ ffmpeg-tensorflow -i flower_cif.y4m -filter_complex '

> [0:v] format=pix_fmts=yuv420p, extractplanes=y+u+v [y][u][v];

> [y] sr=dnn_backend=tensorflow:scale_factor=2:model=espcn.pb [y_scaled];

> [u] scale=iw*2:ih*2 [u_scaled];

> [v] scale=iw*2:ih*2 [v_scaled];

> [y_scaled][u_scaled][v_scaled] mergeplanes=0x001020:yuv420p [merged]

> ' -map '[merged]' -sws_flags lanczos -c:v libx264 -crf 17 -c:a copy \

> -y flower_cif_2x.mp4

The flower_cif_2x.mp4 file with the upscaled example video should be produced.
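The filter graph in the command above splits the video into its Y, U and V planes, applies the sr filter to the luma plane only, upscales the two chroma planes with the ordinary scale filter, and merges the three planes again. To try another model without retyping the graph, it could be generated by a small function. This is a sketch under assumptions: `sr_filtergraph` is a hypothetical helper, and whether a given model supports a scale factor other than 2 depends on how it was trained, which this guide does not cover.

```sh
# Print a filter graph that upscales luma with the sr filter and chroma
# with the scale filter, mirroring the command in this guide.
# $1: model file (e.g. espcn.pb); $2: integer scale factor (default 2).
# (Hypothetical helper; factors other than 2 are untested assumptions.)
sr_filtergraph() {
    model=$1
    factor=${2:-2}
    printf '%s\n' \
        "[0:v] format=pix_fmts=yuv420p, extractplanes=y+u+v [y][u][v];" \
        "[y] sr=dnn_backend=tensorflow:scale_factor=${factor}:model=${model} [y_scaled];" \
        "[u] scale=iw*${factor}:ih*${factor} [u_scaled];" \
        "[v] scale=iw*${factor}:ih*${factor} [v_scaled];" \
        "[y_scaled][u_scaled][v_scaled] mergeplanes=0x001020:yuv420p [merged]"
}

# Example, using the ffmpeg-tensorflow alias defined earlier:
# ffmpeg-tensorflow -i flower_cif.y4m \
#     -filter_complex "$(sr_filtergraph srcnn.pb)" -map '[merged]' \
#     -sws_flags lanczos -c:v libx264 -crf 17 -y flower_cif_2x_srcnn.mp4
```

Quoting `'[merged]'` keeps the shell from treating the brackets as a file-name pattern.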

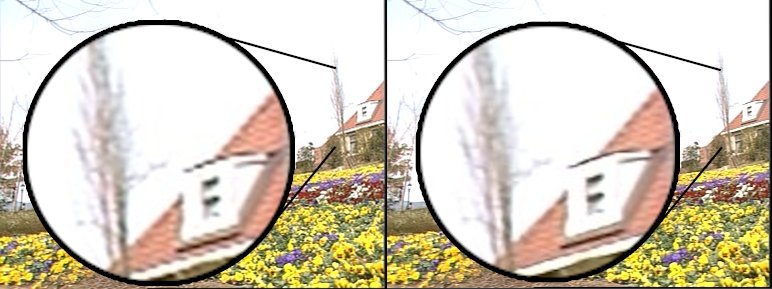

Compare upscaling using Lanczos filtering (left) with upscaling using the ESPCN

super-resolution model (right):