This is the repository for Distributed Task-aware Compression (dtac).

Link to paper: Task-aware Distributed Source Coding under Dynamic Bandwidth

Link to blog: Blog

We design a distributed compression framework that learns low-rank task representations and efficiently distributes bandwidth among sensors to provide a trade-off between performance and bandwidth.


Efficient compression of correlated data is essential to minimize communication overload in multi-sensor networks. In such networks, each sensor independently compresses the data and transmits them to a central node due to limited communication bandwidth. A decoder at the central node decompresses and passes the data to a pre-trained machine learning-based task to generate the final output. Thus, it is important to compress features that are relevant to the task. Additionally, the final performance depends heavily on the total available bandwidth. In practice, it is common to encounter varying availability in bandwidth, and higher bandwidth results in better performance of the task. We design a novel distributed compression framework composed of independent encoders and a joint decoder, which we call neural distributed principal component analysis (NDPCA). NDPCA flexibly compresses data from multiple sources to any available bandwidth with a single model, reducing computing and storage overhead. NDPCA achieves this by learning low-rank task representations and efficiently distributing bandwidth among sensors, thus providing a graceful trade-off between performance and bandwidth. Experiments show that NDPCA improves the success rate of multi-view robotic arm manipulation by 9% and the accuracy of object detection tasks on satellite imagery by 14% compared to an autoencoder with uniform bandwidth allocation.

Task-aware distributed source coding with NDPCA:

- The framework trains neural encoders and their corresponding joint neural decoder to minimize the task loss, which compares the task performance on the reconstructed data, $\phi(\hat{X})$, with the task performance on the uncompressed data, $\phi(X)$.
- The neural autoencoders are encouraged to generate low-rank representations $Z$, which helps achieve a systematic trade-off between latent dimension and performance with a single model.
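To illustrate why this loss is task-aware, here is a minimal sketch that assumes a mean-squared-error task loss and uses a toy linear function as a stand-in for the pre-trained task model $\phi$. The names task_loss and toy_phi are hypothetical and not part of the dtac package, and the low-rank regularization on $Z$ is deliberately left out.

```python
from typing import Callable, Sequence

Vector = Sequence[float]


def task_loss(phi: Callable[[Vector], Sequence[float]], x: Vector, x_hat: Vector) -> float:
    """Mean squared error between the task outputs phi(x) and phi(x_hat).

    phi stands in for the frozen, pre-trained task model (an assumption:
    here it is any function mapping an input vector to an output vector).
    """
    y = phi(x)          # task output on the uncompressed data, phi(X)
    y_hat = phi(x_hat)  # task output on the reconstructed data, phi(X_hat)
    if len(y) != len(y_hat):
        raise ValueError("task outputs must have the same length")
    return sum((a - b) ** 2 for a, b in zip(y, y_hat)) / len(y)


def toy_phi(v: Vector) -> list[float]:
    """Hypothetical toy 'task model' that only looks at the first two features."""
    return [v[0] + v[1], v[0] - v[1]]


x = [1.0, 2.0, 5.0]
x_hat = [1.0, 2.0, 0.0]  # reconstruction error only in a feature the task ignores
print(task_loss(toy_phi, x, x_hat))  # 0.0
```

In this toy case the reconstruction is wrong only in the third feature, which the task never uses, so the task loss is zero; a plain pixel-wise reconstruction loss would still penalize that error.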

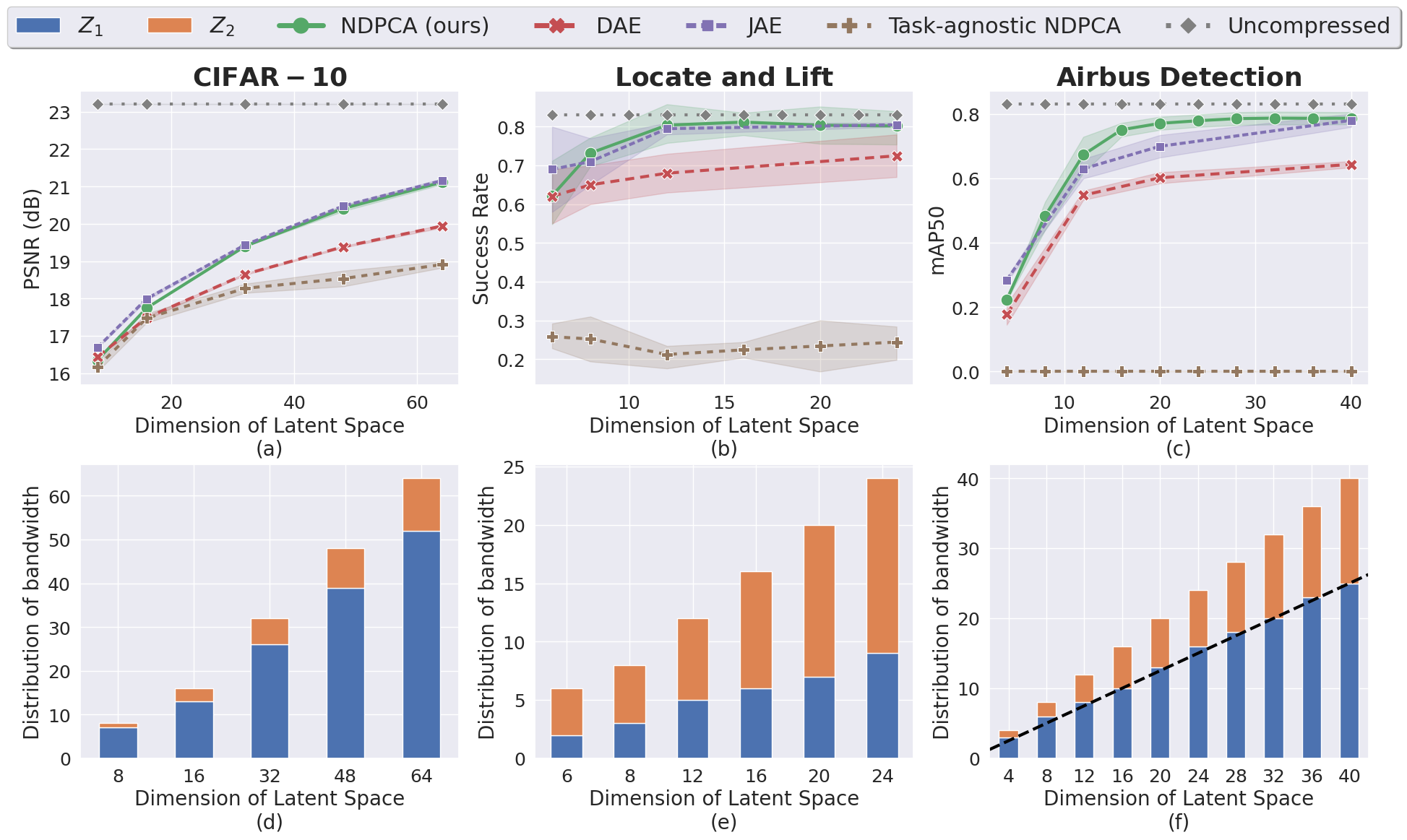

Top: Performance comparison on three different tasks. NDPCA (ours) performs as well as or better than the other methods.

Bottom: Distribution of the total available bandwidth (latent space) between the two views for NDPCA (ours). The unequal allocation reflects the differing importance of the views for a given task.
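The bottom row shows the bandwidth being split unequally between views. A minimal sketch of one way such a split can be computed, assuming each view's latent features have already been decomposed (for example with PCA/SVD) and each view receives as many dimensions as it has singular values among the k largest across all views. The helper allocate_bandwidth and the singular values are hypothetical toy inputs, so the repository's DPCA_torch.py may implement the allocation differently.

```python
from collections import Counter


def allocate_bandwidth(
    singular_values: dict[str, list[float]], total_dims: int
) -> dict[str, int]:
    """Split total_dims latent dimensions among views by global singular-value rank.

    Assumption: a dimension is worth transmitting if its singular value is
    among the total_dims largest across *all* views.
    """
    ranked = sorted(
        ((s, view) for view, values in singular_values.items() for s in values),
        key=lambda pair: pair[0],
        reverse=True,
    )
    counts = Counter(view for _, view in ranked[:total_dims])
    # Views that win no components still appear, with 0 dimensions.
    return {view: counts[view] for view in singular_values}


# Toy singular values for two views (made-up numbers, not from the paper).
views = {"side": [9.0, 5.0, 4.0, 1.0], "arm": [6.0, 0.5, 0.2, 0.1]}
for budget in (2, 4, 6):
    print(budget, allocate_bandwidth(views, budget))
```

With these toy values, a budget of 4 dimensions gives 3 to "side" and 1 to "arm", because the "side" view holds more of the large singular values; the same function serves every budget without retraining.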


To install the required packages, see setup.py or run the following command, which installs the packages listed in requirements.txt:

```bash
pip install -r requirements.txt
```

Then, install the dtac package itself (in editable mode) into your environment:

```bash
pip install -e .
```

The Locate and Lift experiment requires the gym package (see setup.py or requirements.txt) and MuJoCo. To install MuJoCo, see install mujoco.

To collect the demonstration dataset used to train the RL agent and the autoencoders, run the following command:

```bash
python collect_lift_hardcode.py
```

The Airbus experiment requires the Airbus dataset. To download it, see Airbus Aircraft Detection.

After downloading the dataset, place it in the "./airbus_dataset" folder and run the notebook "./airbus_scripts/aircraft-detection-with-yolov8.ipynb".

Then, put the output of the notebook in the following folders: "./airbus_dataset/224x224_overlap28_percent0.3_/train" and "./airbus_dataset/224x224_overlap28_percent0.3_/val".

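Before training, it can help to confirm that the notebook output landed in the expected folders. The following is a small hypothetical helper (missing_airbus_splits is not part of the repository) that uses only the Python standard library and the train/val folder names given in the previous step.

```python
from pathlib import Path

# Folder names from the Airbus setup steps; adjust if your layout differs.
DEFAULT_ROOT = "./airbus_dataset/224x224_overlap28_percent0.3_"
EXPECTED_SPLITS = ("train", "val")


def missing_airbus_splits(root: str = DEFAULT_ROOT) -> list[str]:
    """Return the expected split folders under root that do not exist yet."""
    base = Path(root)
    return [str(base / split) for split in EXPECTED_SPLITS if not (base / split).is_dir()]


if __name__ == "__main__":
    missing = missing_airbus_splits()
    if missing:
        print("Missing folders:", ", ".join(missing))
    else:
        print("Found the Airbus train/val folders.")
```

Running it from the repository root prints which of the two split folders are still missing, if any.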
Finally, apply mosaic augmentation to the dataset by running the following command in the "./mosaic/" folder:

```bash
python main.py --width 224 --height 224 --scale_x 0.4 --scale_y 0.6 --min_area 500 --min_vi 0.3
```

The dtac package contains the following modules:

- ClassDAE.py: autoencoder classes
- DPCA_torch.py: DPCA functions

It also contains other common utility functions.
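DPCA_torch.py is described only as holding the DPCA functions. To show the basic operation that the bandwidth allocation controls, here is a pure-Python sketch that assumes each view already has an orthonormal principal basis (rows sorted by decreasing singular value) and transmits only the first k coefficients of a latent vector. The name project_and_truncate is hypothetical, and the actual module works on PyTorch tensors and may differ.

```python
from typing import Sequence


def project_and_truncate(
    z: Sequence[float], basis: Sequence[Sequence[float]], k: int
) -> tuple[list[float], list[float]]:
    """Keep the first k principal coefficients of z and reconstruct from them.

    Assumes the rows of basis are orthonormal and ordered by decreasing
    singular value (a hypothetical stand-in for the DPCA projection).
    """
    kept = basis[:k]
    # Encoder side: these k coefficients are all that would be transmitted.
    coeffs = [sum(zi * bi for zi, bi in zip(z, row)) for row in kept]
    # Decoder side: linear combination of the kept basis vectors.
    recon = [0.0] * len(z)
    for c, row in zip(coeffs, kept):
        for i, bi in enumerate(row):
            recon[i] += c * bi
    return coeffs, recon


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
z = [3.0, 2.0, 1.0]
for k in (1, 2, 3):
    coeffs, recon = project_and_truncate(z, identity, k)
    print(k, coeffs, recon)
```

In this sketch, a larger k keeps more coefficients and lowers the reconstruction error, so one fixed basis can serve any bandwidth budget.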

To train an RL agent, run the following command:

```bash
python train_behavior_cloning_lift.py -v
```

where -v selects the agent's view(s): "side", "arm", or "2image".

To train NDPCA on the Locate and Lift task, run the following command:

```bash
python train_awaDAE.py -args
```

For example -args, see the main function of train_awaDAE.py.

To evaluate autoencoder models, run the following command in the "./PnP_scripts" folder:

```bash
python eval_DAE.py -args
```

For example -args, see the main function of eval_DAE.py.

To train the object detection (YOLO) model, run the following command:

```bash
python airbus_scripts/yolov1_train_detector.py
```

To train the Airbus NDPCA, run the following command in the "./airbus_scripts" folder:

```bash
python train_od_awaAE.py -args
```

For example -args, see the main function of train_od_awaAE.py.

To evaluate autoencoder models, run the following command in the "./airbus_scripts" folder:

```bash
python dpca_od_awaDAE.py -args
```

For example -args, see the main function of dpca_od_awaDAE.py.

If you find this repo useful, please cite our paper:

```bibtex
@inproceedings{Li2023taskaware,
  title={Task-aware Distributed Source Coding under Dynamic Bandwidth},
  author={Po-han Li and Sravan Kumar Ankireddy and Ruihan Zhao and Hossein Nourkhiz Mahjoub and Ehsan Moradi-Pari and Ufuk Topcu and Sandeep Chinchali and Hyeji Kim},
  booktitle={Thirty-seventh Conference on Neural Information Processing Systems},
  year={2023},
  url={https://openreview.net/forum?id=EJo8lMC5cY}
}
```