Semi-FedSER: Semi-supervised Learning for Speech Emotion Recognition On Federated Learning using Multiview Pseudo-Labeling

This repository contains the official implementation (in PyTorch) of Semi-FedSER. If you have any questions, please email me at tiantiaf@gmail.com or tiantiaf@usc.edu.

We extract a variety of speech representations using the OpenSMILE toolkit and pretrained models. You can refer to the OpenSMILE and SUPERB papers for more information.

Below is a list of the features that we include in the current experiment:

| Publication Date | Model | Name | Paper | Input | Stride | Pre-train Data | Official Repo |
|---|---|---|---|---|---|---|---|
| --- | EmoBase | --- | MM'10 | Speech | --- | --- | EmoBase |
| 5 Apr 2019 | APC | apc | arxiv | Mel | 10ms | LibriSpeech-360 | APC |
| 12 Jul 2020 | TERA | tera | arxiv | Mel | 10ms | LibriSpeech-960 | S3PRL |
| 11 Dec 2020 | DeCoAR 2.0 | decoar2 | arxiv | Mel | 10ms | LibriSpeech-960 | speech-representations |

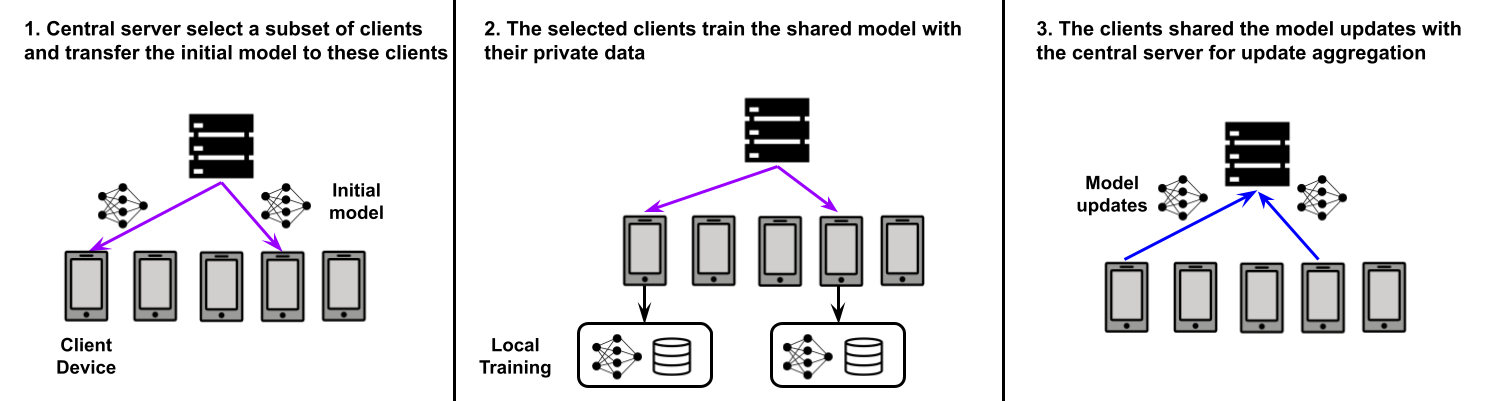

Let's recap the basics of federated learning (FL).

- In a typical FL training round shown in the figure below, a subset of selected clients receive a global model, which they can locally train with their private data.
- Afterward, the clients only share their model updates (model parameters/gradients) to the central server.
- Finally, the server aggregates the model updates to obtain the global model for the next training round.
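The three steps above can be sketched as a single training round. This is a minimal NumPy illustration and not the code in this repository: the linear least-squares client model, the sample-count weighting (in the style of FedAvg), and the names `local_update`, `aggregate`, and `run_round` are all assumptions made for the example.

```python
import numpy as np


def local_update(global_weights, features, labels, lr=0.1, epochs=5):
    """Step 1: a client starts from the global model and trains on private data.

    Assumption: a linear least-squares model stands in for the real SER network.
    """
    w = global_weights.copy()
    for _ in range(epochs):
        grad = features.T @ (features @ w - labels) / len(labels)
        w -= lr * grad
    return w


def aggregate(client_weights, client_sizes):
    """Step 3: the server averages client models, weighted by local sample count."""
    sizes = np.asarray(client_sizes, dtype=float)
    stacked = np.stack(client_weights)
    return (sizes[:, None] * stacked).sum(axis=0) / sizes.sum()


def run_round(global_weights, clients, num_selected, rng):
    """One FL round: select clients, train locally, share updates, aggregate."""
    selected = rng.choice(len(clients), size=num_selected, replace=False)
    updates, sizes = [], []
    for idx in selected:
        features, labels = clients[idx]  # private data never leaves the client
        updates.append(local_update(global_weights, features, labels))  # step 2: only weights are shared
        sizes.append(len(labels))
    return aggregate(updates, sizes)
```

Calling `run_round` repeatedly, each time passing in the weights returned by the previous call, gives the usual multi-round training loop.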

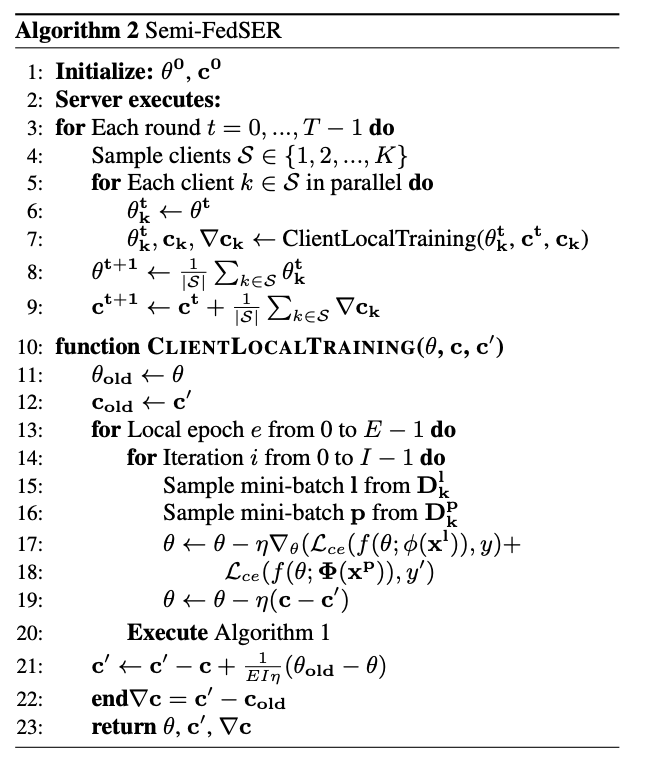

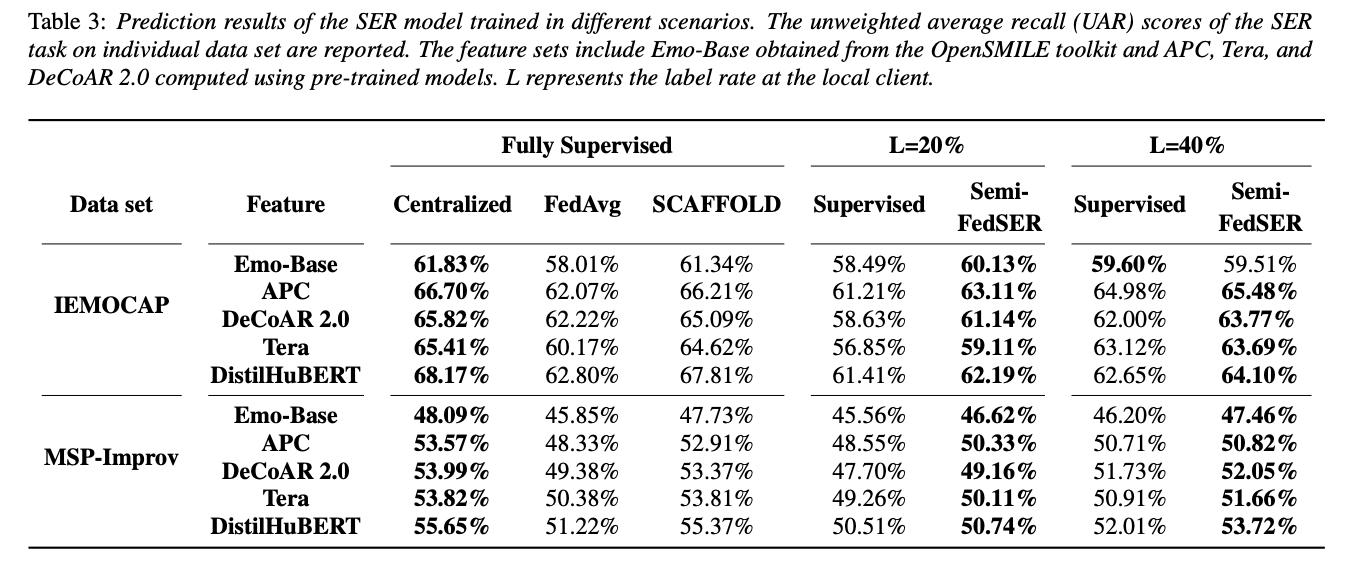

One major challenge in FL is that high-quality labeled data samples are often scarce, and most data samples are unlabeled. To address this, Semi-FedSER trains the model using both labeled and unlabeled data samples at the local client.

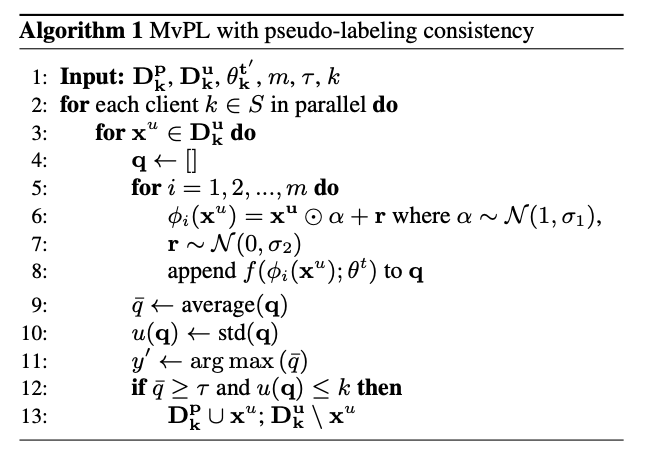

Semi-FedSER also incorporates pseudo-labeling, following the idea of multiview pseudo-labeling, and adopts an efficient yet effective data augmentation technique called Stochastic Feature Augmentation (SFA). The algorithm of the pseudo-labeling process is below.
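As a rough illustration of the idea, the sketch below averages class probabilities over several augmented views of each unlabeled sample and keeps only the confident predictions as pseudo-labels. It is not the repository's algorithm: the `augment` function is a hypothetical stand-in for SFA (simple multiplicative and additive Gaussian noise on the features), and `num_views`, `threshold`, `sigma`, and the `predict_logits` callable are assumed names and values.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def augment(features, rng, sigma=0.1):
    """Hypothetical stand-in for SFA: Gaussian scale and shift on each feature."""
    scale = rng.normal(1.0, sigma, size=features.shape)
    shift = rng.normal(0.0, sigma, size=features.shape)
    return scale * features + shift


def pseudo_label(predict_logits, features, rng, num_views=4, threshold=0.8):
    """Average predictions over augmented views and keep confident samples.

    Returns the pseudo-labels and the indices of the samples that were kept.
    """
    view_probs = [
        softmax(predict_logits(augment(features, rng))) for _ in range(num_views)
    ]
    probs = np.mean(view_probs, axis=0)
    confidence = probs.max(axis=1)
    labels = probs.argmax(axis=1)
    keep = confidence >= threshold
    return labels[keep], np.flatnonzero(keep)
```

Samples that pass the threshold can then be added to the labeled set for local training; samples below it stay unlabeled for that round.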

To further address the gradient drift issue in the non-IID setting of FL, we add an implementation of SCAFFOLD. The final training algorithm is below.
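For readers unfamiliar with SCAFFOLD, here is a minimal NumPy sketch of its control-variate correction, following the update rules from the SCAFFOLD paper (the variant that estimates the client control variate from the local trajectory). It is a sketch under assumptions rather than this repository's training code: `grad_fn`, the function names, and the default learning rates are chosen for illustration only.

```python
import numpy as np


def scaffold_local_update(x_global, c_global, c_local, grad_fn, lr=0.1, steps=5):
    """Run corrected local steps and return (model delta, control-variate delta)."""
    y = x_global.copy()
    for _ in range(steps):
        # Correct the local gradient with the difference of control variates.
        y -= lr * (grad_fn(y) - c_local + c_global)
    # New client control variate, estimated from the distance travelled locally.
    c_local_new = c_local - c_global + (x_global - y) / (steps * lr)
    return y - x_global, c_local_new - c_local


def scaffold_server_update(x_global, c_global, deltas_y, deltas_c, num_clients, server_lr=1.0):
    """Aggregate the deltas from the participating clients on the server."""
    x_new = x_global + server_lr * np.mean(deltas_y, axis=0)
    c_new = c_global + (len(deltas_c) / num_clients) * np.mean(deltas_c, axis=0)
    return x_new, c_new
```

Each client is expected to keep its own control variate between rounds and add the returned control-variate delta to it after every local update.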

@inproceedings{eyben2010opensmile,
  title={Opensmile: the munich versatile and fast open-source audio feature extractor},
  author={Eyben, Florian and W{\"o}llmer, Martin and Schuller, Bj{\"o}rn},
  booktitle={Proceedings of the 18th ACM international conference on Multimedia},
  pages={1459--1462},
  year={2010}
}

@inproceedings{yang21c_interspeech,
  author={Shu-wen Yang and Po-Han Chi and Yung-Sung Chuang and Cheng-I Jeff Lai and Kushal Lakhotia and Yist Y. Lin and Andy T. Liu and Jiatong Shi and Xuankai Chang and Guan-Ting Lin and Tzu-Hsien Huang and Wei-Cheng Tseng and Ko-tik Lee and Da-Rong Liu and Zili Huang and Shuyan Dong and Shang-Wen Li and Shinji Watanabe and Abdelrahman Mohamed and Hung-yi Lee},
  title={{SUPERB: Speech Processing Universal PERformance Benchmark}},
  year={2021},
  booktitle={Proc. Interspeech 2021},
  pages={1194--1198},
  doi={10.21437/Interspeech.2021-1775}
}