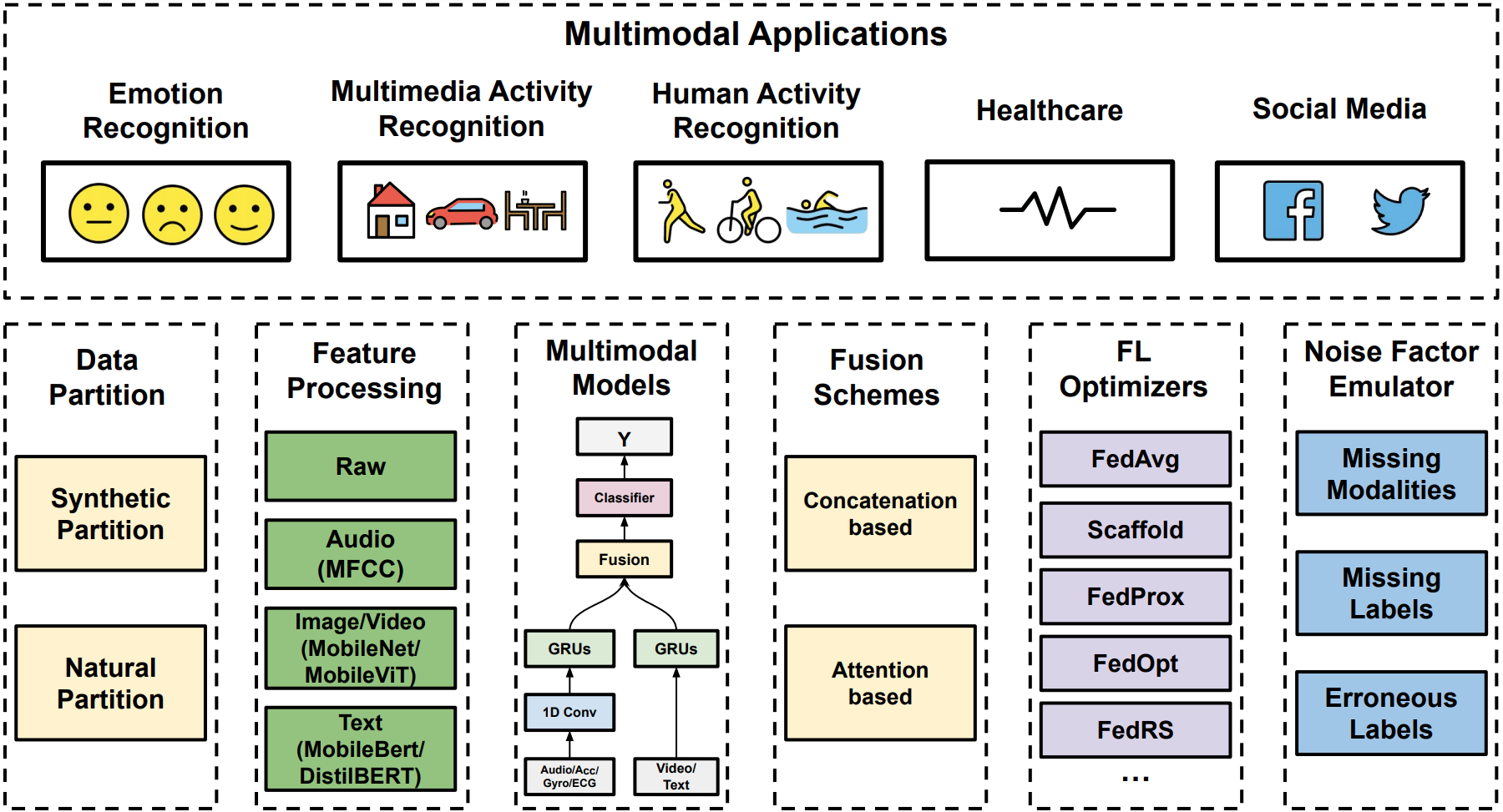

FedMultimodal [Paper Link] is an open-source project for researchers exploring multimodal applications in federated learning (FL). FedMultimodal was accepted to the KDD 2023 Applied Data Science (ADS) track.

The framework figure:

Image credit: https://openmoji.org/

Supported applications:

- Emotion Recognition [CREMA-D] [Meld]

- Multimedia Action Recognition [UCF-101] [MiT-51]

- Human Activity Recognition [UCI-HAR] [KU-HAR]

- Social Media [Crisis-MMD] [Hateful-Memes]


- ECG classification [PTB-XL]

- Ego-4D (To Appear)

- Medical Imaging (To Appear)

To get started, clone this repository:

git clone git@github.com:usc-sail/fed-multimodal.git

Create and activate a conda environment:

cd fed-multimodal

conda create --name fed-multimodal python=3.9

conda activate fed-multimodal

Then install the package with pip in editable mode:

pip install -e .

Feature processing includes 3 steps:

- Data partitioning

- Simulation features

- Feature processing

Here we provide a quick-start example that reproduces the UCI-HAR results from the paper. We fix the random seeds for data partitioning and for training client sampling, so you should ideally obtain exactly the results reported in our paper (see Table 4, attention-based column).
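Fixing the seed is what makes the per-round client selection repeatable across runs. The sketch below illustrates the idea with Python's standard random module; the helper sample_clients, its default seed, and the per-round seeding scheme are hypothetical and are not taken from the repository.

```python
import random

def sample_clients(client_ids, num_sampled, round_idx, seed=0):
    """Return the sorted list of clients selected for one training round.

    Hypothetical helper: a dedicated generator seeded from (seed, round_idx)
    yields the same selection every time the experiment is rerun, while
    different rounds still get different selections.
    """
    rng = random.Random(f"{seed}:{round_idx}")  # str seeds are deterministic
    return sorted(rng.sample(client_ids, num_sampled))

clients = list(range(105))
round0 = sample_clients(clients, num_sampled=10, round_idx=0)
round0_again = sample_clients(clients, num_sampled=10, round_idx=0)
print(round0 == round0_again)  # True
```

Using a separate random.Random instance, rather than the global generator, keeps the selection unaffected by any other random calls made during training.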

You can change the data path in system.cfg to your desired location. Then download the UCI-HAR dataset:

cd fed_multimodal/data

bash download_uci_har.sh

cd ..

The alpha parameter controls how non-IID the partition is: the lower the alpha, the higher the data heterogeneity. Because each subject performs the same number of activities, we partition each subject's data into 5 sub-clients.

python3 features/data_partitioning/uci-har/data_partition.py --alpha 0.1 --num_clients 5

python3 features/data_partitioning/uci-har/data_partition.py --alpha 5.0 --num_clients 5
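A common way for a single alpha to control heterogeneity is to draw, for each class, a Dirichlet(alpha) vector of client proportions and split that class's samples accordingly. The sketch below applies this to one subject's samples, assuming data_partition.py follows this widely used scheme; the function dirichlet_split and its arguments are hypothetical. As a consistency check on the results table, 105 clients / 5 sub-clients per subject = 21 subjects, which matches the 21 subjects in UCI-HAR's standard training split.

```python
import random
from collections import defaultdict

def dirichlet_split(labels, num_clients=5, alpha=0.1, seed=0):
    """Split one subject's sample indices into num_clients sub-clients.

    labels: class label of each sample belonging to this subject.
    For every class, client proportions are drawn from Dirichlet(alpha):
    a small alpha concentrates each class on few sub-clients (more
    heterogeneous), while a large alpha spreads it almost evenly.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    clients = [[] for _ in range(num_clients)]
    for label in sorted(by_class):
        idxs = by_class[label]
        rng.shuffle(idxs)
        # A Dirichlet sample is a vector of Gamma(alpha, 1) draws, normalized.
        gammas = [rng.gammavariate(alpha, 1.0) for _ in range(num_clients)]
        total = sum(gammas) or 1.0  # guard against all draws underflowing to 0
        # Turn cumulative proportions into cut points over this class.
        start, cum = 0, 0.0
        for c in range(num_clients):
            cum += gammas[c] / total
            last = c == num_clients - 1
            end = len(idxs) if last else int(round(cum * len(idxs)))
            clients[c].extend(idxs[start:end])
            start = end
    return clients
```

Running it on the same labels with alpha=0.1 and alpha=5.0 and comparing per-client class counts makes the difference in heterogeneity visible.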

The partition script returns a list in which each item is [key, file_name, label].
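To inspect how skewed a sub-client's data is, one can count the labels in its list of [key, file_name, label] items. A minimal sketch; the helper label_histogram is hypothetical, and the keys and file names in the example are made up for illustration.

```python
from collections import Counter

def label_histogram(items):
    """Count labels in one client's partition.

    items: list of [key, file_name, label] entries from the partition output.
    """
    return Counter(label for _key, _file_name, label in items)

# Hypothetical entries for one sub-client; keys and file names are invented.
client_items = [
    ["subject1_0", "sample_0.npy", 2],
    ["subject1_1", "sample_1.npy", 2],
    ["subject1_2", "sample_2.npy", 5],
]
print(label_histogram(client_items))  # Counter({2: 2, 5: 1})
```

With alpha = 0.1, most sub-clients are expected to show only one or two dominant labels; with alpha = 5.0 the counts should be much more balanced.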

For the UCI-HAR dataset, feature extraction mainly consists of normalization.

python3 features/feature_processing/uci-har/extract_feature.py --alpha 0.1

python3 features/feature_processing/uci-har/extract_feature.py --alpha 5.0
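The exact normalization is not specified here. The sketch below assumes per-channel z-score normalization with statistics computed on training data, a common choice for accelerometer and gyroscope signals; it is not confirmed to be what extract_feature.py does, and the functions zscore_fit and zscore_apply are hypothetical.

```python
from statistics import fmean, pstdev

def zscore_fit(train_frames):
    """Compute per-channel mean and standard deviation.

    train_frames: list of frames, each a list of channel values
    (for example, the x/y/z axes of the accelerometer or gyroscope).
    """
    channels = list(zip(*train_frames))
    means = [fmean(ch) for ch in channels]
    stds = [pstdev(ch) or 1.0 for ch in channels]  # avoid dividing by zero
    return means, stds

def zscore_apply(frames, means, stds):
    """Shift and scale each channel to zero mean and unit variance."""
    return [
        [(x - m) / s for x, m, s in zip(frame, means, stds)]
        for frame in frames
    ]

train = [[0.0, 1.0], [2.0, 3.0]]
means, stds = zscore_fit(train)
print(zscore_apply(train, means, stds))  # [[-1.0, -1.0], [1.0, 1.0]]
```

Fitting the statistics once and reusing them for test data keeps both splits on the same scale.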

By default, the missing modality simulation generates missing rates of 10%, 20%, 30%, 40%, and 50%.

cd features/simulation_features/uci-har

Simulate missing modalities; the output is written to output/mm/ucihar/{client_id}_{mm_rate}.json:

bash run_mm.sh

cd ../../../

The simulation output is a list in which each item is [missing_modalityA, missing_modalityB, new_label, missing_label].

missing_modalityA and missing_modalityB are flags indicating whether each modality is missing, new_label holds the erroneous (noisy) label, and missing_label indicates whether the label is missing for a sample.
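A minimal sketch of how one such record could be generated for a sample. It assumes each modality goes missing independently with probability mm_rate (so a sample can occasionally lose both), and that label noise and missing labels are controlled by two further rates. The function simulate_record and the parameters noise_rate and missing_label_rate are hypothetical; run_mm.sh may implement the simulation differently.

```python
import random

def simulate_record(label, num_classes, mm_rate, noise_rate=0.0,
                    missing_label_rate=0.0, rng=random):
    """Return [missing_modalityA, missing_modalityB, new_label, missing_label].

    Hypothetical sketch: each modality is dropped independently with
    probability mm_rate; with probability noise_rate the label is replaced
    by a different, randomly chosen class; with probability
    missing_label_rate the label is flagged as missing.
    """
    missing_a = rng.random() < mm_rate
    missing_b = rng.random() < mm_rate
    new_label = label
    if rng.random() < noise_rate:
        new_label = rng.choice([c for c in range(num_classes) if c != label])
    missing_label = rng.random() < missing_label_rate
    return [missing_a, missing_b, new_label, missing_label]

# UCI-HAR has 6 activity classes; simulate a 30% missing rate.
rng = random.Random(0)
records = [simulate_record(lbl, 6, mm_rate=0.3, rng=rng) for lbl in range(6)] * 1
rate_a = sum(r[0] for r in records) / len(records)
```

Over many samples, rate_a approaches mm_rate; looping mm_rate over 0.1 to 0.5 mirrors the default rates listed above.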

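Run the baseline experiments from the fed_multimodal directory:

cd experiment/uci-har

bash run_base.sh

From the same experiment/uci-har directory, run the missing modality experiments:

bash run_mm.sh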

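| Dataset | Modality | Paper | Label Size | Num. of Clients | Split | Alpha | FL Algorithm | F1 (Federated) | Learning Rate | Global Epoch |
|---|---|---|---|---|---|---|---|---|---|---|
| UCI-HAR | Acc+Gyro | UCI-Data | 6 | 105 | Natural+Manual | 5.0 | FedAvg | 77.74% | 0.05 | 200 |
| UCI-HAR | Acc+Gyro | UCI-Data | 6 | 105 | Natural+Manual | 5.0 | FedOpt | 85.17% | 0.05 | 200 |
| UCI-HAR | Acc+Gyro | UCI-Data | 6 | 105 | Natural+Manual | 0.1 | FedAvg | 76.66% | 0.05 | 200 |
| UCI-HAR | Acc+Gyro | UCI-Data | 6 | 105 | Natural+Manual | 0.1 | FedOpt | 79.80% | 0.05 | 200 |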
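The table compares FedAvg and FedOpt. The sketch below shows the server-side aggregation step of each on flat parameter lists: FedAvg replaces the global model with the sample-weighted average of client models, while FedOpt treats the difference between that average and the global model as a pseudo-gradient and applies a server optimizer. Adam is used here (the FedAdam variant, without bias correction); the function and class names, the choice of Adam, and the hyperparameter values are assumptions, and the repository's implementation may differ.

```python
import math

def fedavg_aggregate(client_params, client_sizes):
    """FedAvg: sample-size-weighted average of client parameter vectors."""
    total = sum(client_sizes)
    dim = len(client_params[0])
    return [
        sum(p[i] * n for p, n in zip(client_params, client_sizes)) / total
        for i in range(dim)
    ]

class FedAdamServer:
    """FedOpt-style server applying Adam to the averaged pseudo-gradient."""

    def __init__(self, dim, lr=0.01, beta1=0.9, beta2=0.99, eps=1e-3):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = [0.0] * dim  # first-moment estimate
        self.v = [0.0] * dim  # second-moment estimate

    def step(self, global_params, client_params, client_sizes):
        avg = fedavg_aggregate(client_params, client_sizes)
        new_params = []
        for i, w in enumerate(global_params):
            delta = avg[i] - w  # pseudo-gradient, pointing toward the clients
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * delta
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * delta ** 2
            step = self.lr * self.m[i] / (math.sqrt(self.v[i]) + self.eps)
            new_params.append(w + step)
        return new_params

clients = [[1.0, 2.0], [3.0, 6.0]]
sizes = [1, 3]
print(fedavg_aggregate(clients, sizes))  # [2.5, 5.0]
server = FedAdamServer(dim=2)
new_global = server.step([0.0, 0.0], clients, sizes)
```

FedAvg jumps straight to the client average, while the adaptive server step moves the global model by an amount set by its own learning rate and moment estimates, which is one reason the two algorithms can diverge under different alpha values.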

Feel free to contact us or open an issue!

Corresponding Author: Tiantian Feng, University of Southern California

Email: tiantiaf@usc.edu

@article{feng2023fedmultimodal,

title={FedMultimodal: A Benchmark For Multimodal Federated Learning},

author={Feng, Tiantian and Bose, Digbalay and Zhang, Tuo and Hebbar, Rajat and Ramakrishna, Anil and Gupta, Rahul and Zhang, Mi and Avestimehr, Salman and Narayanan, Shrikanth},

journal={arXiv preprint arXiv:2306.09486},

year={2023}

}

FedMultimodal also uses code from our previous work:

@inproceedings{zhang2023fedaudio,

title={Fedaudio: A federated learning benchmark for audio tasks},

author={Zhang, Tuo and Feng, Tiantian and Alam, Samiul and Lee, Sunwoo and Zhang, Mi and Narayanan, Shrikanth S and Avestimehr, Salman},

booktitle={ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},

pages={1--5},

year={2023},

organization={IEEE}

}