Simulated-Annealing

An implementation of simulated annealing, a method for constrained optimization.

About

This experiment uses the simulated annealing algorithm to solve the flow shop scheduling. Each workpiece needs to go through

Background

It is known that there are

Problem Description

Based on the above problem background, the following constraint equations are given, where

The total processing time equation must meet the following constraints:

- The total processing time of the first workpiece on the first machine can be obtained directly from the data set, that is, the $f(W_{1},1)$ function can be called.
- The total processing time of the first $i$ workpieces on the first machine is the sum of the total processing time of the first $i-1$ workpieces on the first machine and the processing time of the $i$-th workpiece on the first machine, namely $T(W_{i-1},1) + f(W_{i},1)$.
- The total processing time of the first workpiece on the first $j$ machines is the sum of the total processing time of the first workpiece on the first $j-1$ machines and the processing time of the first workpiece on the $j$-th machine, namely $T(W_{1},j-1) + f(W_{1},j)$.
- The total processing time of the first $i$ workpieces on the first $j$ machines is the larger of the total processing time of the first $i-1$ workpieces on the first $j$ machines and the total processing time of the first $i$ workpieces on the first $j-1$ machines, plus the processing time of the $i$-th workpiece on the $j$-th machine, i.e. $\max\{T(W_{i-1},j),\,T(W_{i},j-1)\} + f(W_{i},j)$ [1].
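The four constraints above form a recurrence that can be evaluated with a small table. The following is a minimal sketch, not the repository's actual code: the function name `total_processing_time` and the layout of `f` (a list of rows, one per workpiece, with one processing time per machine, 0-based) are assumptions made for illustration.

```python
from typing import List, Sequence


def total_processing_time(order: Sequence[int], f: Sequence[Sequence[int]]) -> int:
    """Return T(W_n, m) for a non-empty machining order.

    f[w][j] is the processing time of workpiece w on machine j
    (hypothetical 0-based layout).
    """
    n, m = len(order), len(f[0])
    # T[i][j]: total processing time of the first i+1 workpieces
    # on the first j+1 machines.
    T: List[List[int]] = [[0] * m for _ in range(n)]
    for i, w in enumerate(order):
        for j in range(m):
            if i == 0 and j == 0:
                T[i][j] = f[w][j]                    # first workpiece, first machine
            elif j == 0:
                T[i][j] = T[i - 1][j] + f[w][j]      # first machine only
            elif i == 0:
                T[i][j] = T[i][j - 1] + f[w][j]      # first workpiece only
            else:
                T[i][j] = max(T[i - 1][j], T[i][j - 1]) + f[w][j]
    return T[n - 1][m - 1]


if __name__ == "__main__":
    # Two workpieces, two machines (made-up processing times).
    f = [[3, 2], [1, 4]]
    print(total_processing_time([0, 1], f))  # 9
    print(total_processing_time([1, 0], f))  # 7
```

In the made-up example, processing workpiece 1 first shortens the total time from 9 to 7, which is the kind of difference the search over machining orders exploits.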

The variables of the constraint equation are the machining order Wi of the first

The following is the mathematical expression of the optimal scheduling problem of the assembly line:

Solution

The core of flow shop scheduling is to minimize the total processing time of all workpieces on all machines, that is, use the accumulation method of the total processing time of the first

Algorithm Explanation

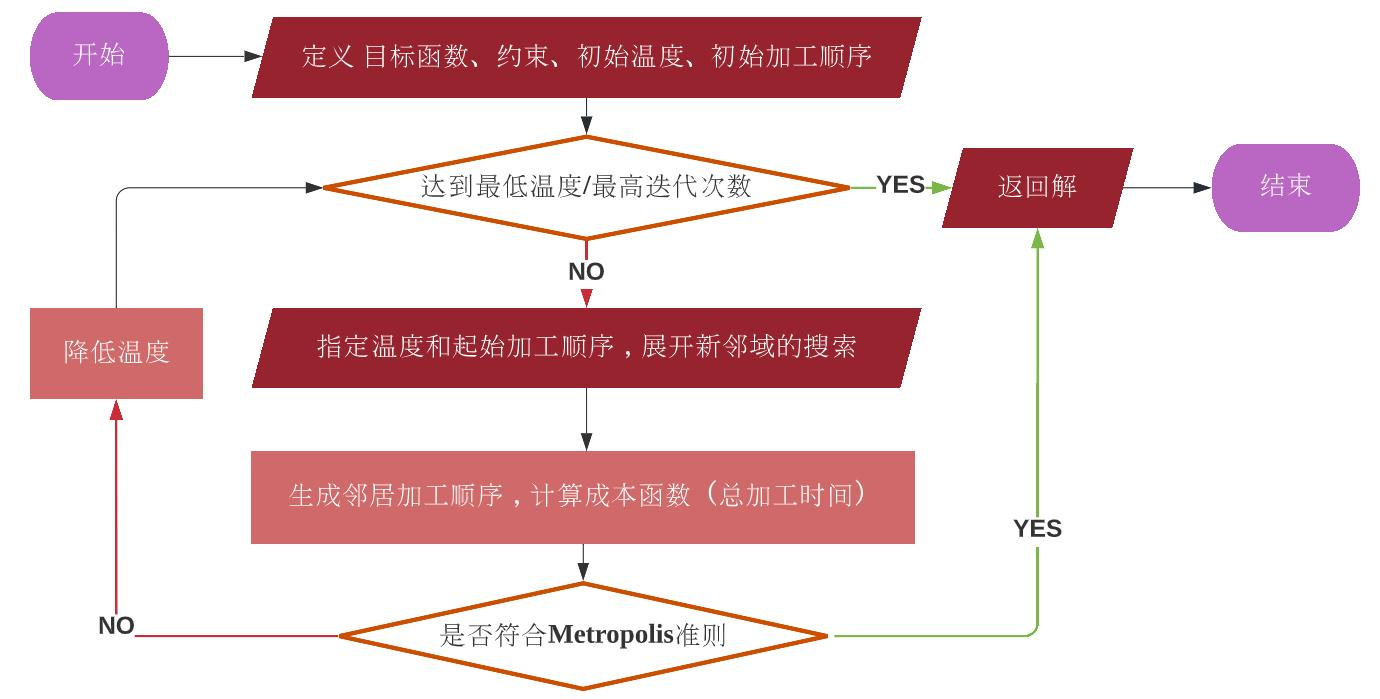

In this experiment, the simulated annealing algorithm is used to solve the flow shop scheduling problem. This probabilistic algorithm finds an approximately optimal solution in a large search space within a limited time. The method simulates the slow cooling of metals, in which atomic motion gradually decreases and the density of lattice defects falls until the lowest energy state is reached.

The simulated annealing algorithm, proposed independently by Kirkpatrick et al. (1983) and Černý (1985), is modeled on the way the crystal structure of a metal reaches a state close to the global minimum energy during annealing; analogously, the objective function approaches a minimum during a stochastic search. The objective function represents the current energy state, and moving to a new candidate solution represents a change in that energy state. At each virtual annealing temperature, simulated annealing generates new candidate solutions, generally neighbors of the current state, by perturbing the current state according to predetermined rules. New states are accepted according to the Metropolis criterion (from the Metropolis-Hastings algorithm), and this process of generating and accepting new states is iterated until convergence [2].
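As an illustration of the acceptance step, the sketch below uses the standard form of the Metropolis criterion for a minimization problem: a candidate that is no worse is always accepted, and a worse candidate is accepted with probability $e^{-\Delta/t}$. The function names are hypothetical and the code is not taken from this repository.

```python
import math
import random


def acceptance_probability(current: float, candidate: float, temperature: float) -> float:
    """Metropolis acceptance probability when minimizing the objective."""
    if candidate <= current:
        return 1.0  # improvements (and ties) are always accepted
    return math.exp(-(candidate - current) / temperature)


def accept(current: float, candidate: float, temperature: float, rng: random.Random) -> bool:
    """Draw a uniform number in [0, 1) and compare it with the probability."""
    return rng.random() < acceptance_probability(current, candidate, temperature)


if __name__ == "__main__":
    # The same worsening step is accepted less often as the temperature falls.
    for t in (100.0, 10.0, 1.0):
        print(t, acceptance_probability(7038, 7048, t))
```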

A conventional local search algorithm iteratively compares the objective function value at the current point with the values at its neighboring points. If a neighboring point gives a better value than the current point, it is kept as the base solution for the next iteration. If no neighboring point is better, the algorithm terminates the search, so conventional local search is prone to getting trapped in a local optimum. The simulated annealing algorithm addresses this weakness by combining two nested iterative loops: the cooling process of simulated annealing and the Metropolis criterion. The basic idea of the Metropolis criterion is to accept a neighborhood solution that is not better with a certain probability, which prevents the algorithm from getting stuck at local extreme points [3].
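The two nested loops can be sketched as follows. This is a minimal illustration under stated assumptions rather than the code used in the experiment: the swap neighborhood, the `chain_length` default, the `seed` argument and the function name are all hypothetical, while geometric cooling by a factor `c` and the stopping temperature of 0.1 follow the description in this document. It assumes at least two workpieces.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple


def simulated_annealing(
    cost: Callable[[Sequence[int]], float],
    n: int,
    t0: float = 100.0,
    c: float = 0.9,
    t_min: float = 0.1,
    chain_length: int = 100,
    seed: int = 0,
) -> Tuple[List[int], float]:
    """Search over permutations of range(n) (n >= 2) for a low-cost order."""
    rng = random.Random(seed)
    current = list(range(n))
    rng.shuffle(current)
    current_cost = cost(current)
    best, best_cost = current[:], current_cost
    t = t0
    while t > t_min:                      # outer loop: cooling schedule
        for _ in range(chain_length):     # inner loop: Markov chain at fixed t
            a, b = rng.sample(range(n), 2)
            candidate = current[:]
            candidate[a], candidate[b] = candidate[b], candidate[a]
            candidate_cost = cost(candidate)
            delta = candidate_cost - current_cost
            # Metropolis criterion: always accept improvements,
            # accept worse candidates with probability exp(-delta / t).
            if delta <= 0 or rng.random() < math.exp(-delta / t):
                current, current_cost = candidate, candidate_cost
                if current_cost < best_cost:
                    best, best_cost = current[:], current_cost
        t *= c                            # geometric temperature decrement
    return best, best_cost


if __name__ == "__main__":
    # Toy objective: number of positions that differ from the sorted order.
    def misplaced(order: Sequence[int]) -> float:
        return float(sum(1 for i, w in enumerate(order) if i != w))

    print(simulated_annealing(misplaced, n=6))
```

For the flow shop problem, `cost` would be the total processing time of a machining order; the toy objective here only keeps the example self-contained.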

Algorithm Flowchart

Time complexity and space complexity analysis

The time complexity of the simulated annealing algorithm depends on two factors, the annealing temperature and its decrement rate, and the length of the Markov chain in it. The time required for the entire algorithm to run is mainly determined by the initial temperature

There is a formula:

The algorithm terminates when

Assuming that the number of iterations of the inner loop is

Environmental setup

Experimental environment : Visual Studio Code 1.67.1 + Python 3.9.1

Experimental parameters :

For cases 0, 1, 2, 5 use parameters (Initial temp = 100, C = 0.9)

For cases 3, 4, 8 use parameters (Initial temp = 500, C = 0.99)

For cases 6, 7, 9, 10 use parameters (Initial temp = 1000, C = 0.99)
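One way to keep these per-case settings in a single place is a lookup table. The sketch below only restates the three parameter groups listed above; the names `_GROUPS` and `PARAMS_BY_CASE` are hypothetical and are not taken from the repository.

```python
from typing import Dict, Tuple

# (cases, (initial temperature, decrement rate C)), copied from the list above.
_GROUPS = [
    ((0, 1, 2, 5), (100.0, 0.9)),
    ((3, 4, 8), (500.0, 0.99)),
    ((6, 7, 9, 10), (1000.0, 0.99)),
]

# Flatten the groups into a case -> parameters mapping.
PARAMS_BY_CASE: Dict[int, Tuple[float, float]] = {
    case: params for cases, params in _GROUPS for case in cases
}

if __name__ == "__main__":
    initial_temp, c = PARAMS_BY_CASE[8]
    print(initial_temp, c)  # 500.0 0.99
```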

Result and Analysis

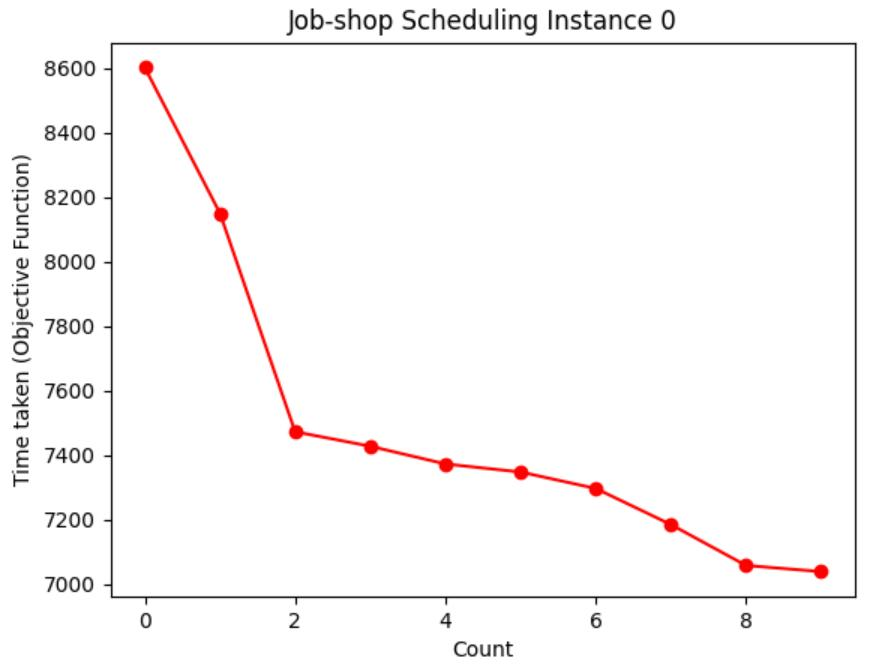

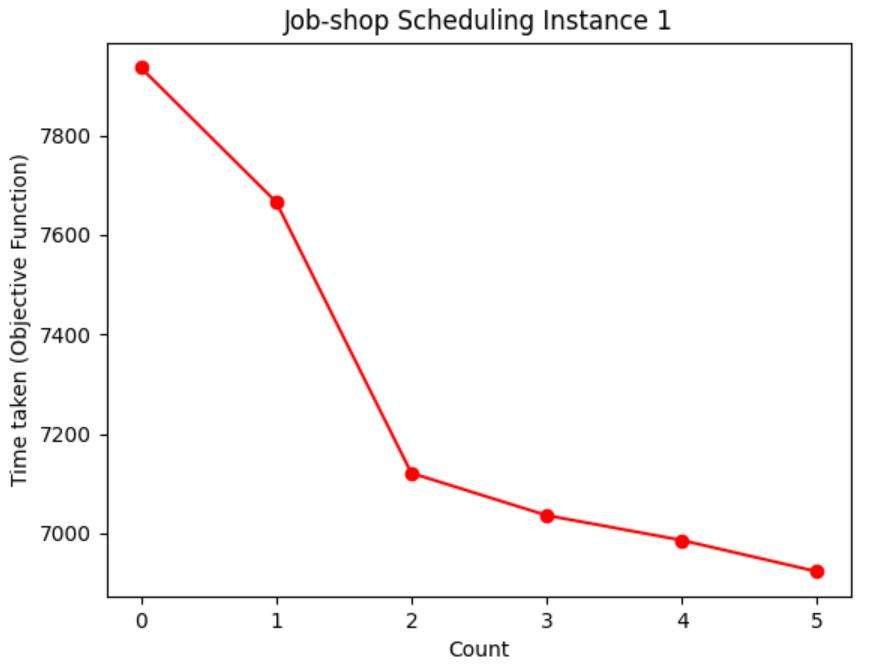

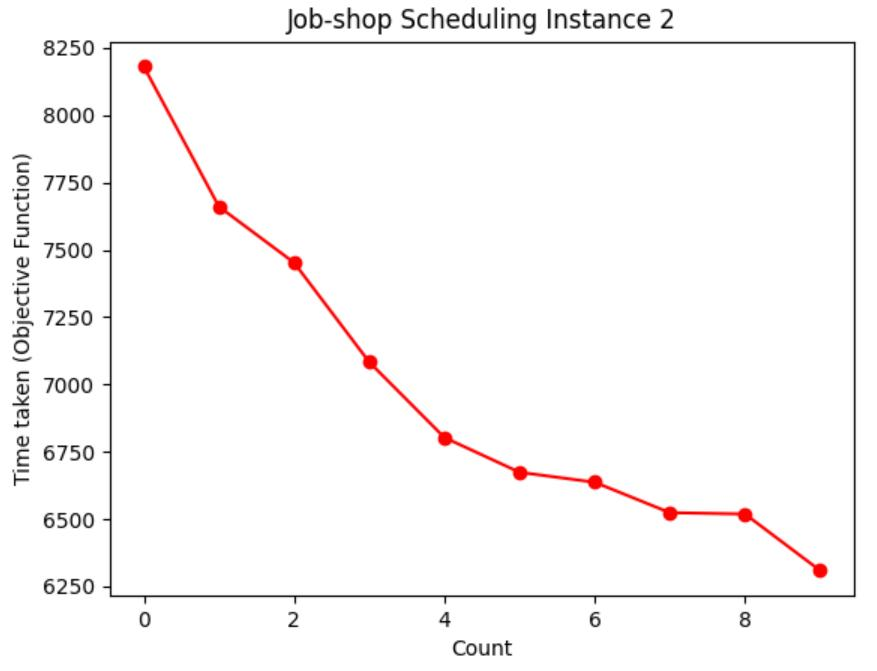

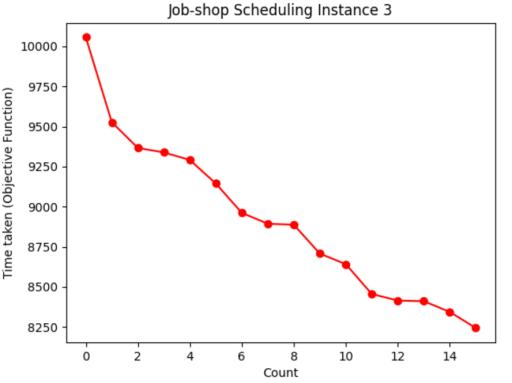

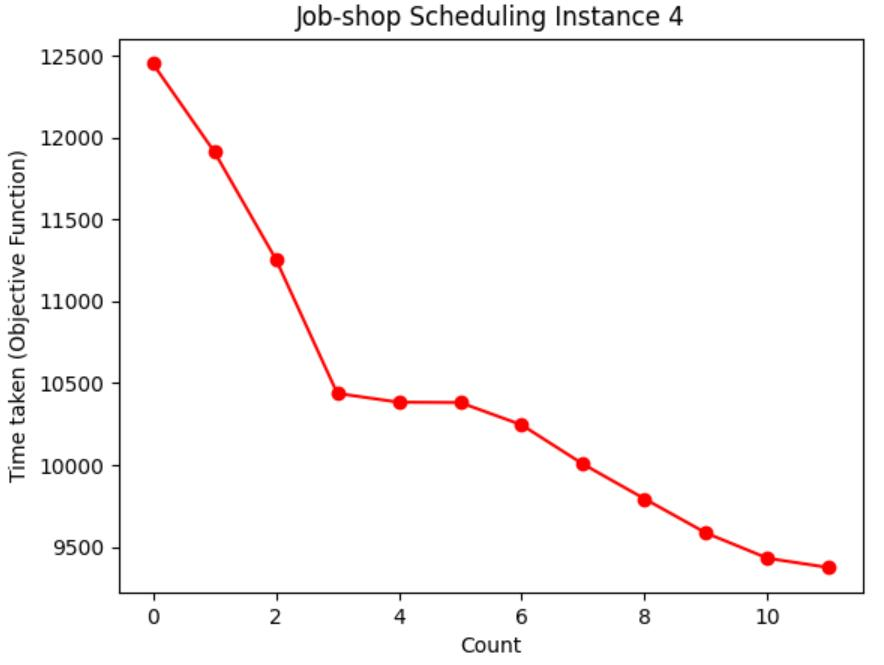

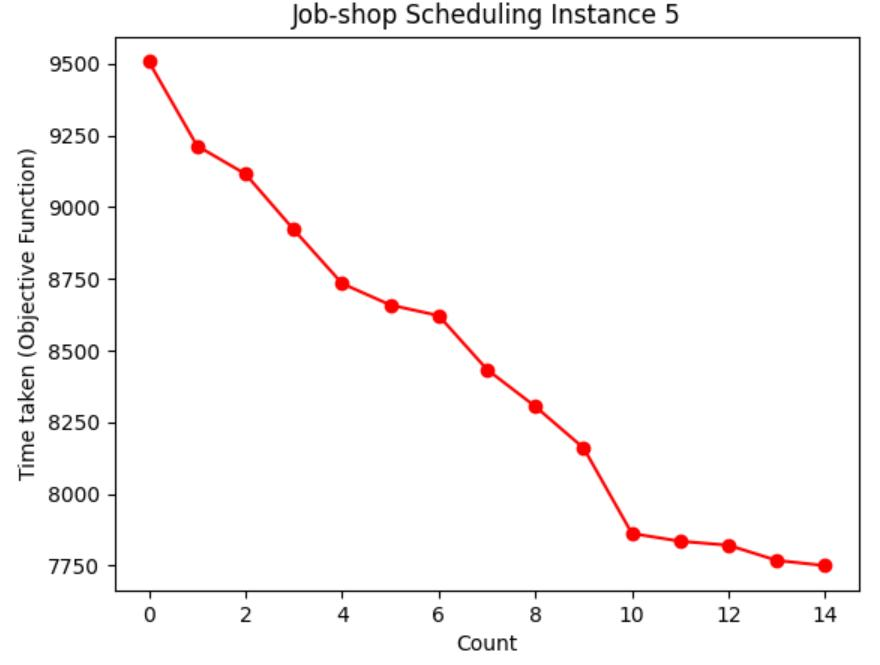

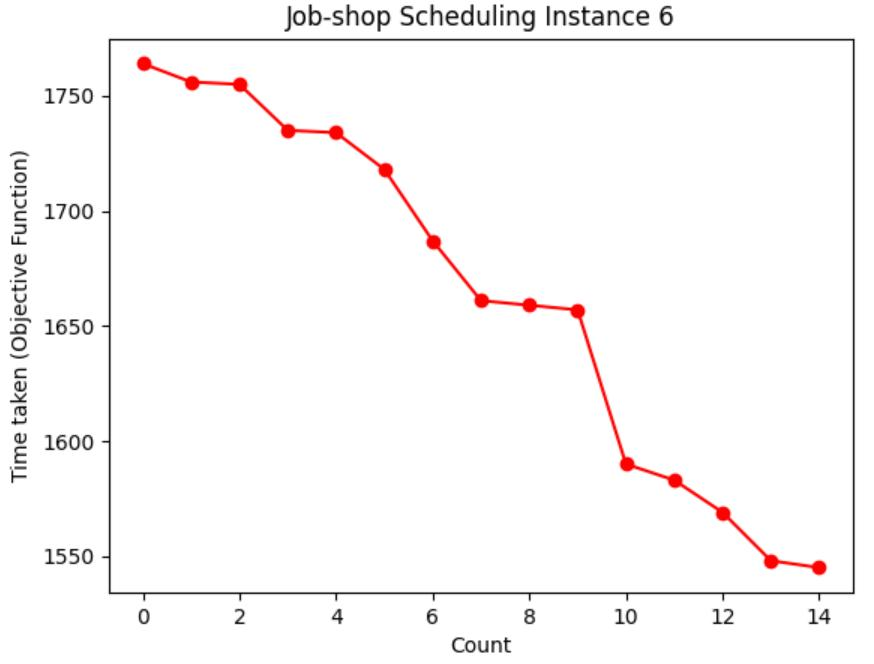

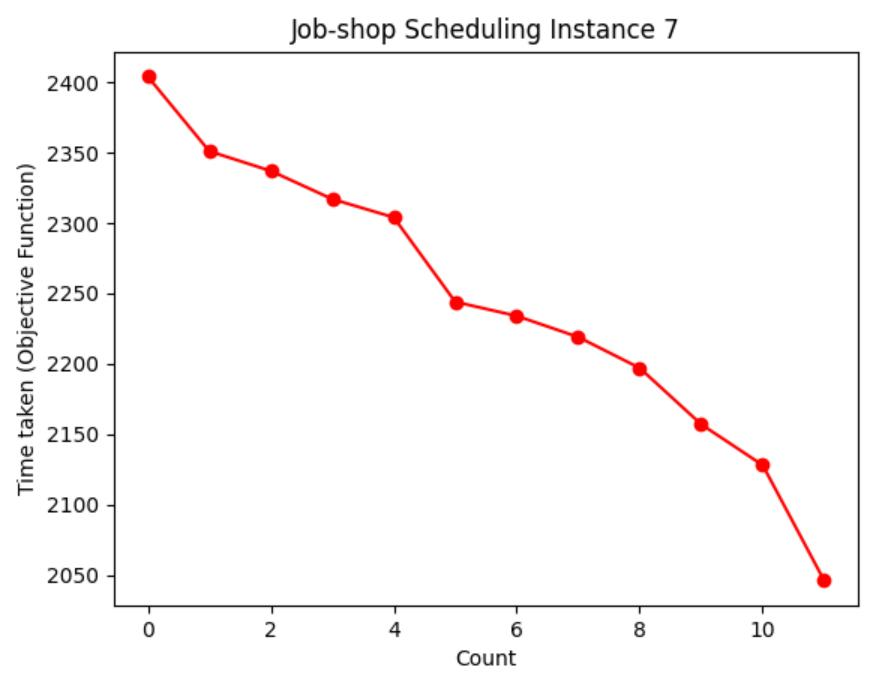

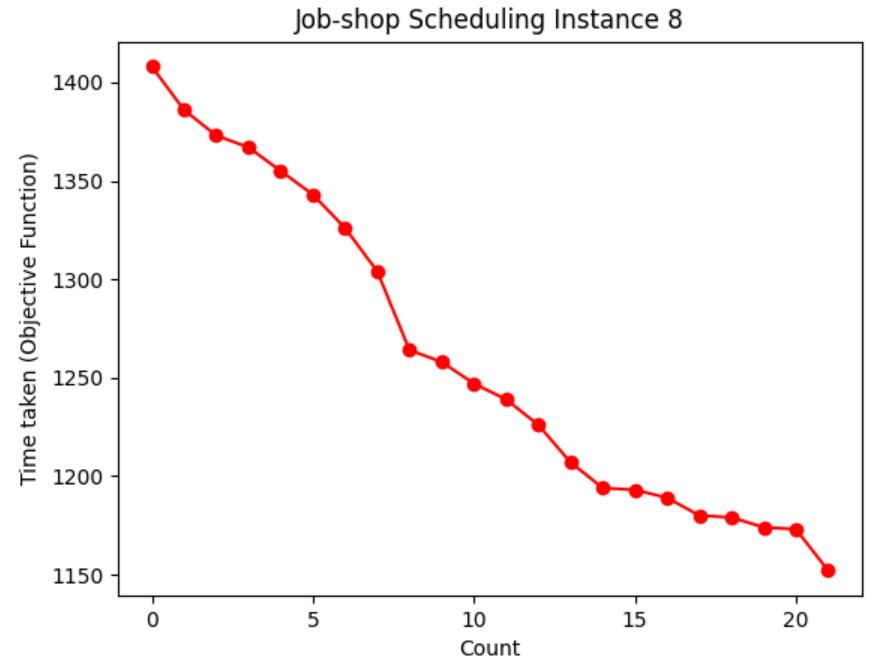

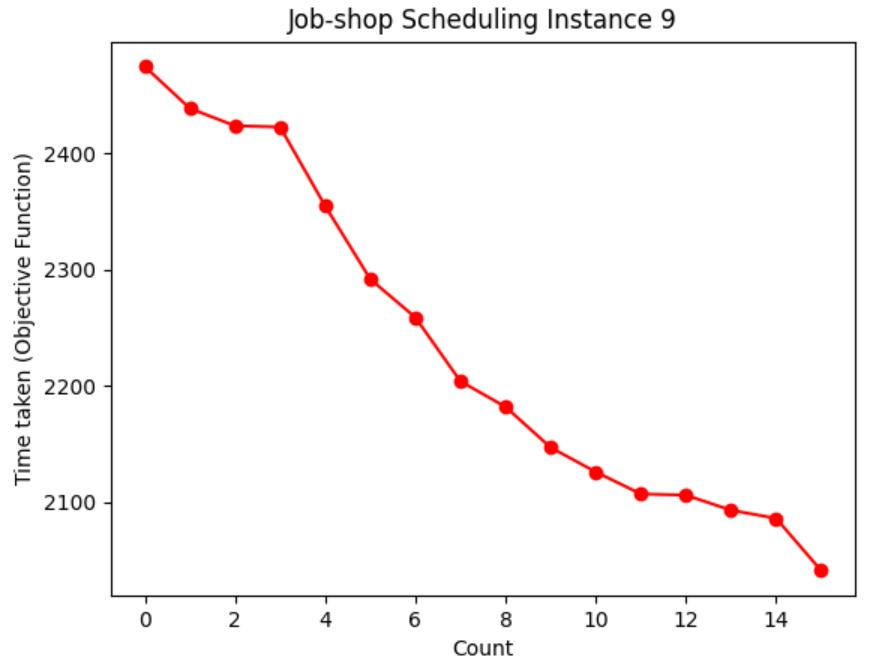

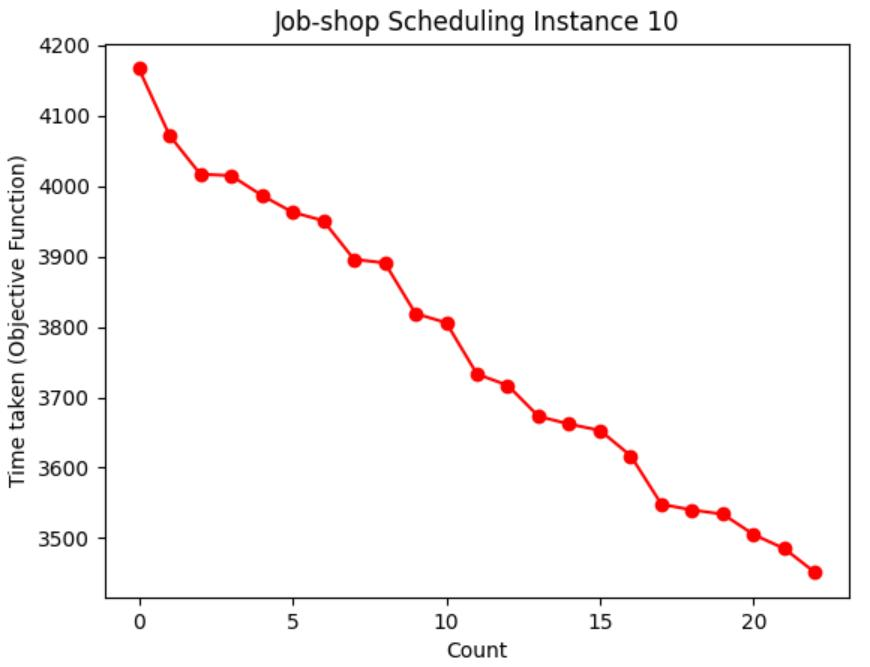

Table 1: Chart visualization of total machining time

Table 2: Optimal machining order and total machining time

| Instance | Optimal machining order | Total machining time |
|---|---|---|
| 0 | [7,6,0,4,10,3,5,9,1,2,8] | 7038 |
| 1 | [4,1,2,0,5,3] | 6923 |
| 2 | [6,2,3,10,1,9,8,7,4,5,0] | 6309 |
| 3 | [0,9,6,8,7,3,13,5,2,10,12,1,4,11] | 8244 |
| 4 | [5,8,10,0,7,3,14,15,9,1,13,6,4,12,11,2] | 8373 |
| 5 | [3,0,1,4,2,6,8,7,5,9] | 7750 |
| 6 | [12,10,15,6,7,17,9,5,0,13,18,8,3,19,2,11,16,14,1,4] | 1545 |
| 7 | [0,9,15,7,8,17,2,18,3,5,11,14,13,12,1,16,19,4,6,10] | 2046 |
| 8 | [12,0,3,19,13,15,6,2,8,4,5,16,11,14,7,9,10,1,17,19] | 1152 |
| 9 | [1,15,12,7,10,16,3,18,17,14,2,6,4,0,5,9,8,11,13,19] | 2041 |
| 10 | [14,24,21,40,13,12,42,9,29,31,6,41,5,32,43,3,23,10,15,39,2,27,49,38,25,26,1,17,22,4,46,19,36,7,47,28,0,8,44,45,18,34,16,35,48,30,33,20,37,11] | 3451 |

Table 1 shows the process of visualizing the objective function using a graph, recording the moment when the objective function value (total processing time) of each case is updated to the lowest value, and visualizing the decreasing route of the total processing time. It can be seen from Table 3 that the higher the initial temperature

However, with a higher initial temperature

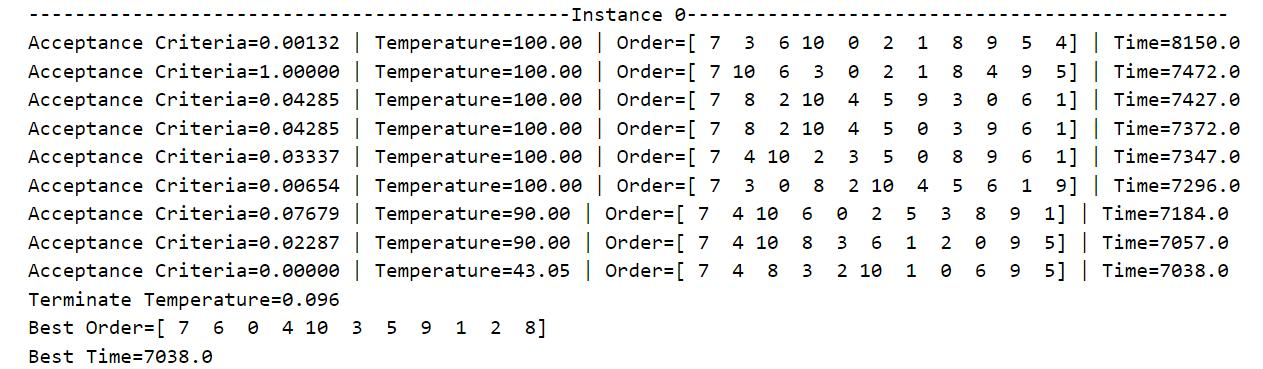

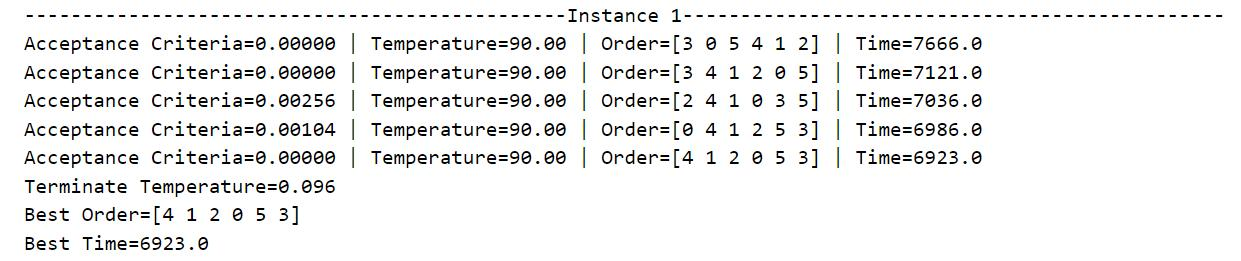

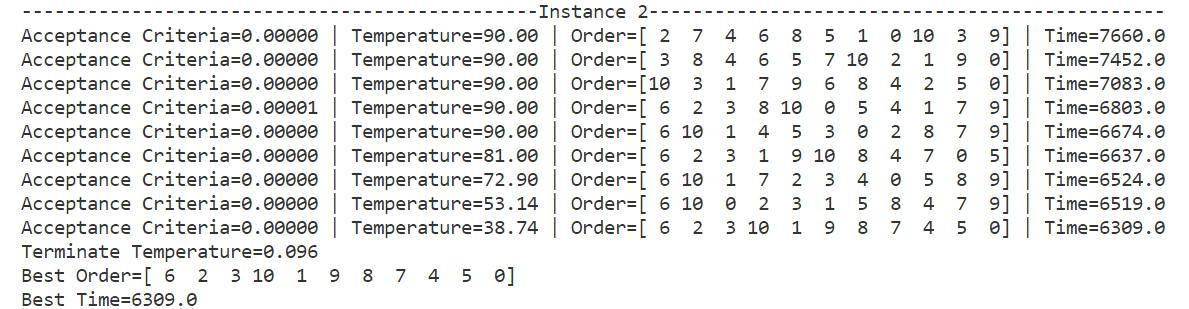

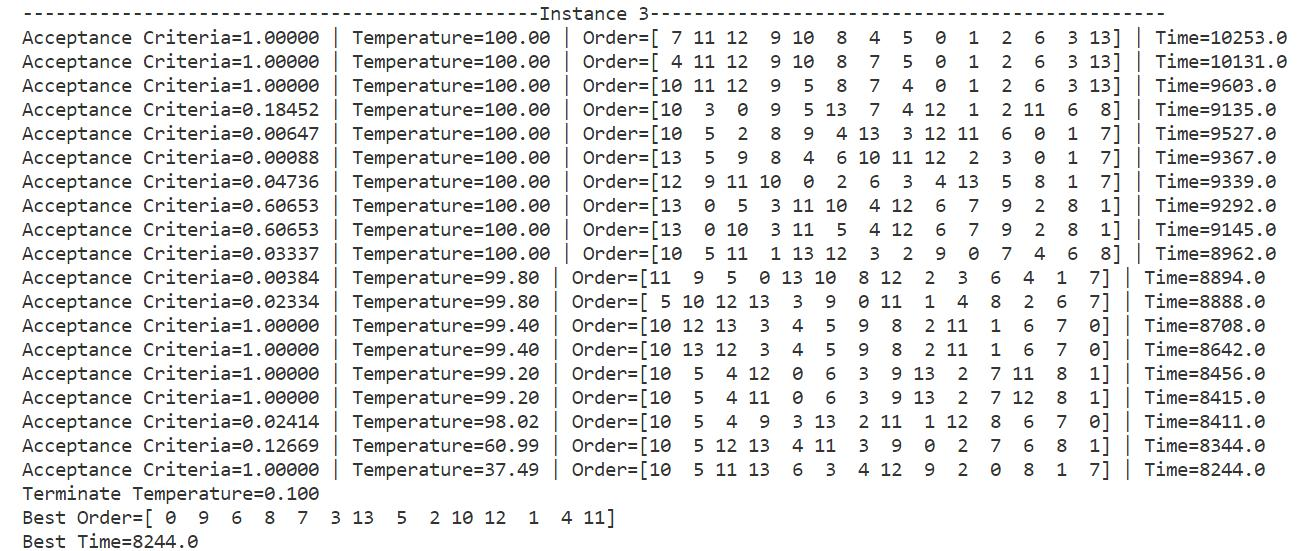

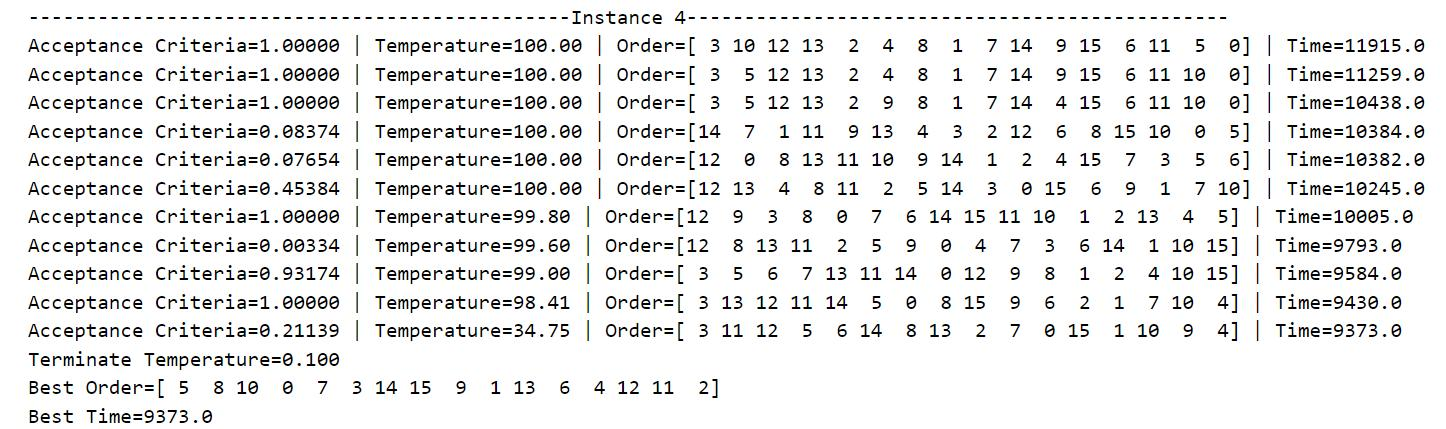

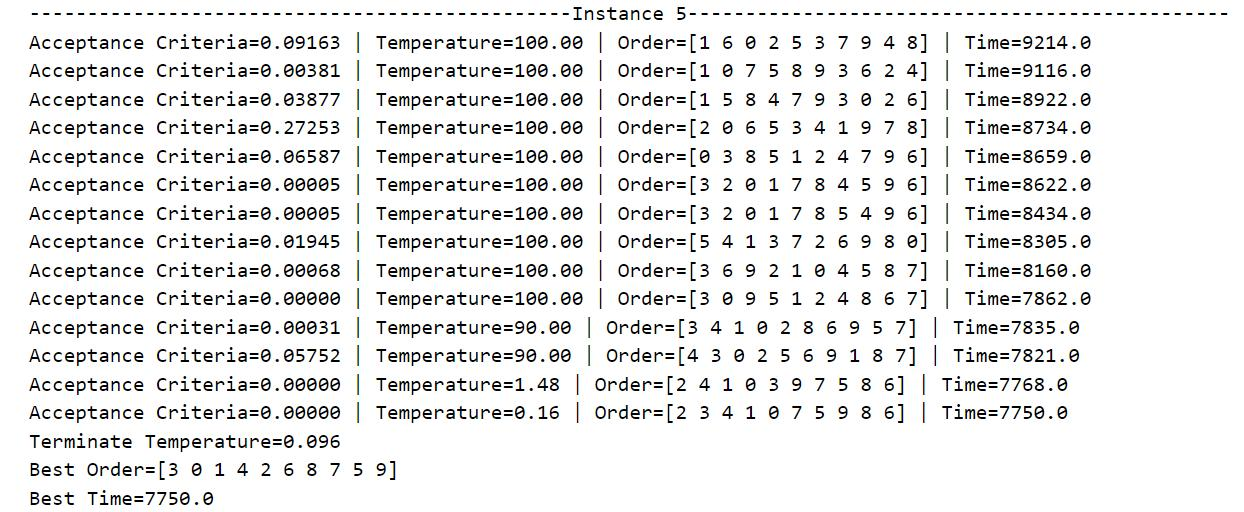

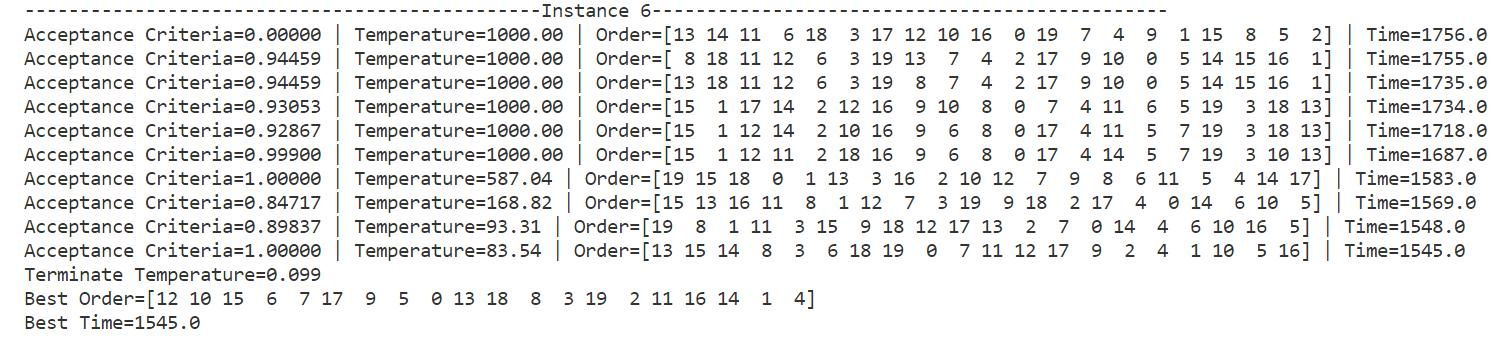

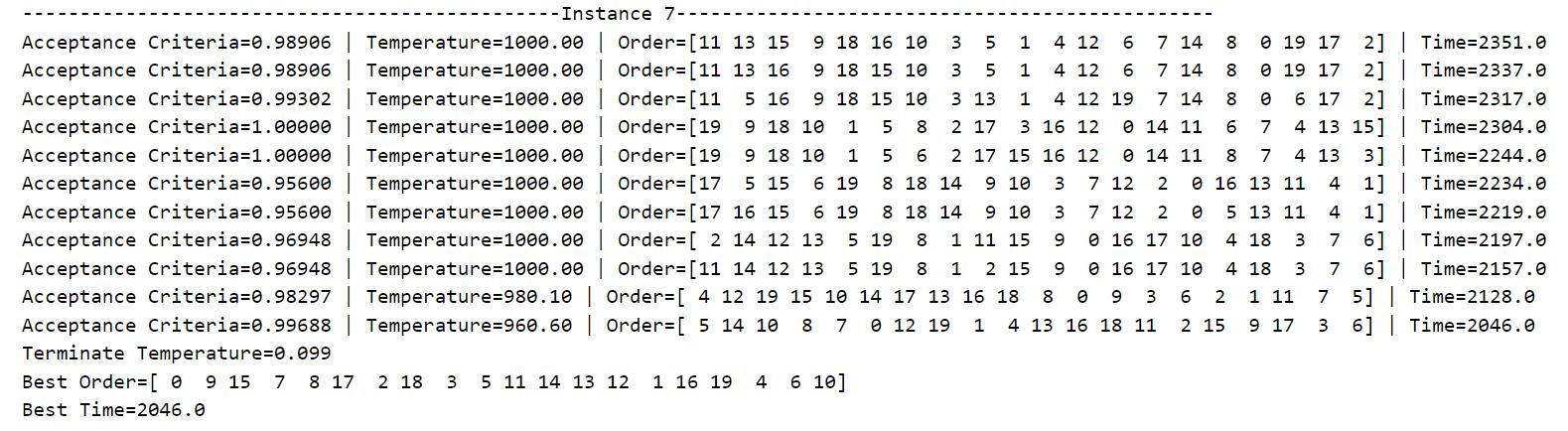

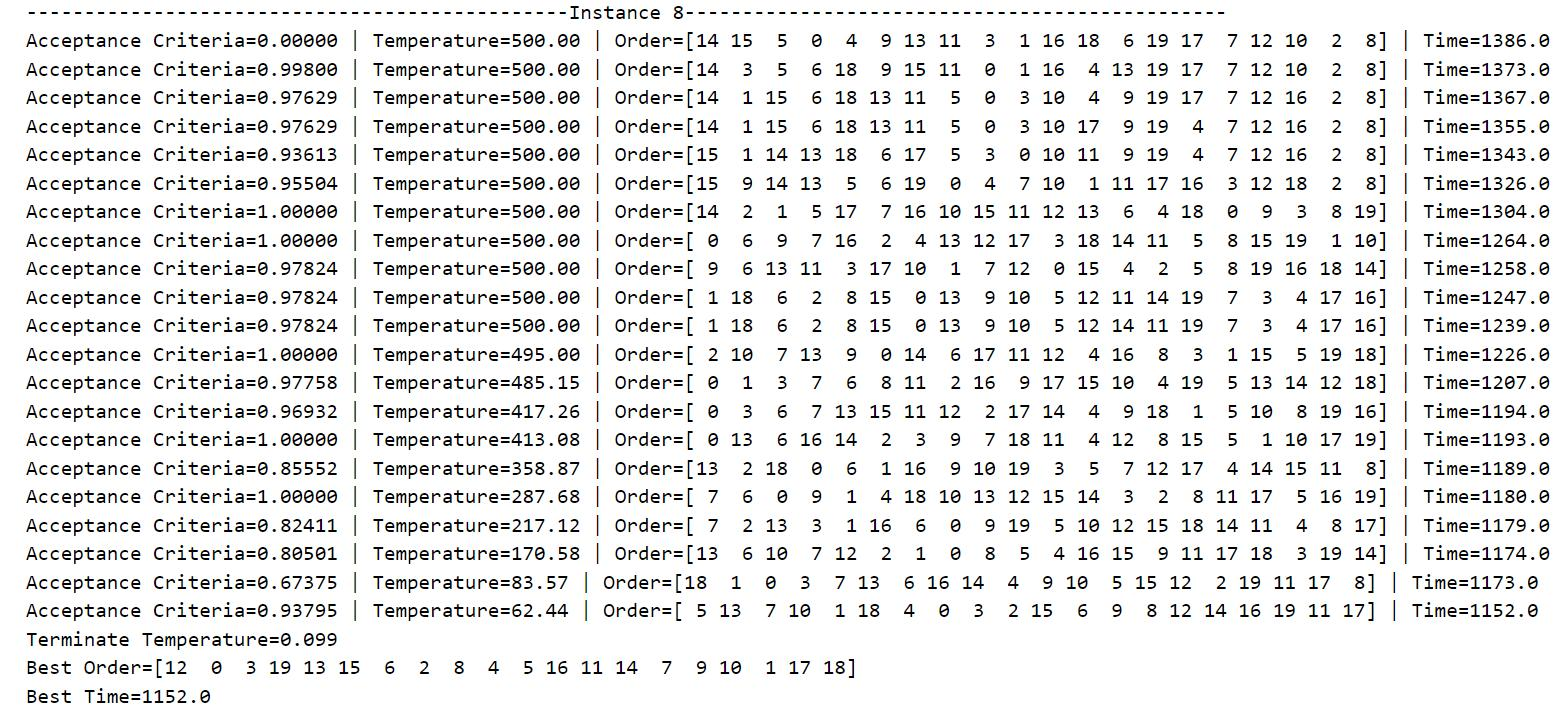

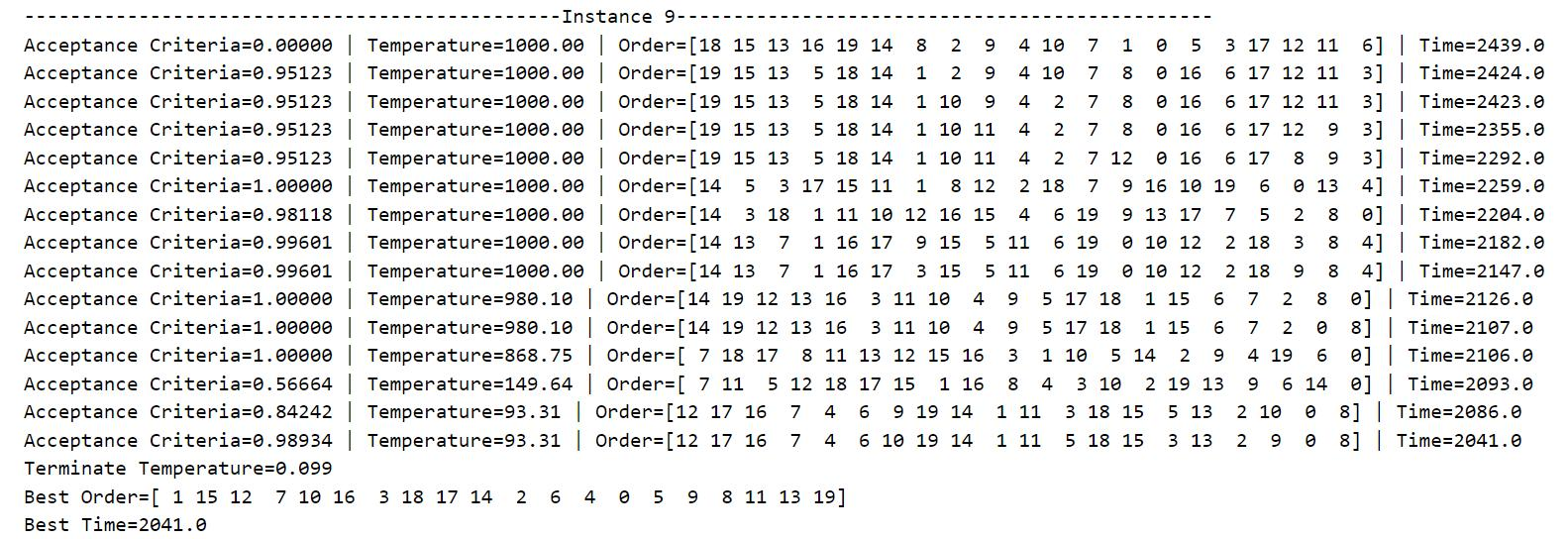

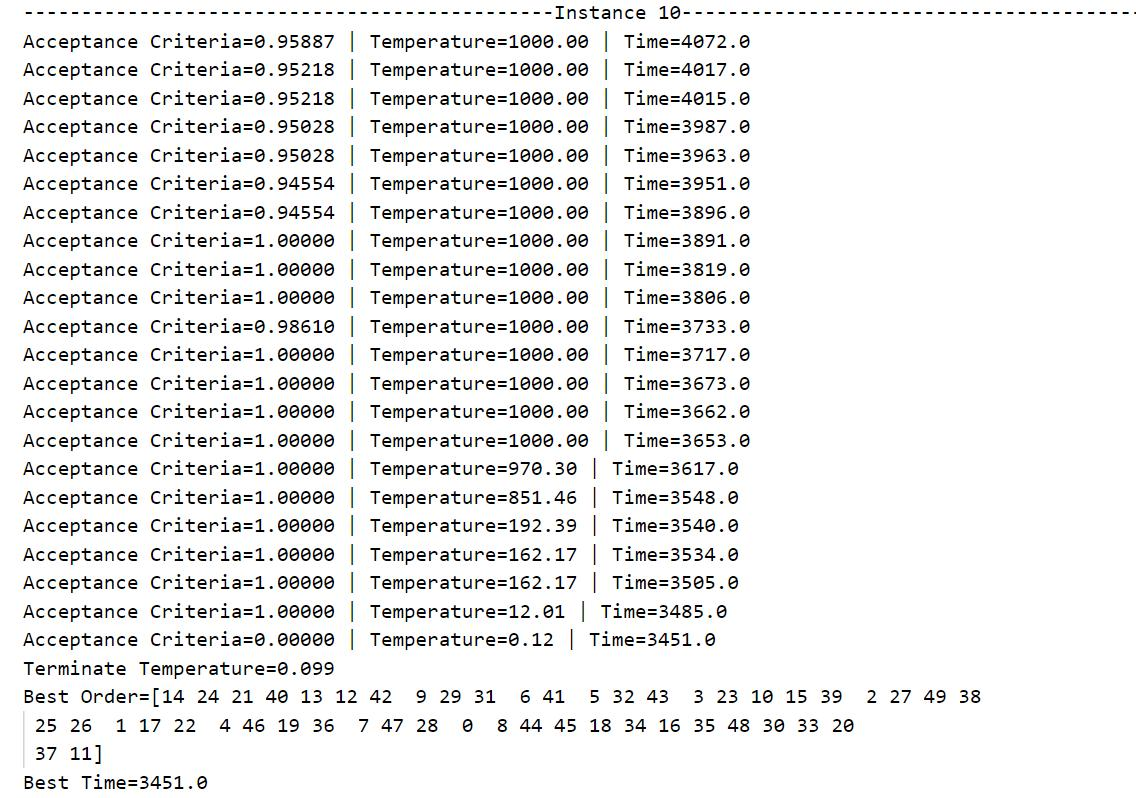

Table 3: Simulated annealing algorithm solution process and result display


Table 3 shows the running process of the program and the final result. During operation, each case starts from its predetermined initial temperature and cools slowly according to the decrement rate until the temperature falls below the minimum temperature of 0.1 defined in the code. For convenience of presentation, the program outputs the candidate processing sequence, total processing time and Metropolis criterion probability only when a new lowest processing time is found; the data of every iteration is not displayed. The data shows that as the temperature decreases, the total processing time gradually decreases, that is, the algorithm converges to a local optimal solution or the global optimal solution.

The output "Acceptance Criteria" is the current Metropolis criterion probability (between 0 and 1), and "Terminate Temperature" is the temperature at which the current run terminates (less than or equal to 0.1). The optimal machining sequence and the lowest total machining time are output after the annealing process has finished. Running the same case several times may produce different optimal processing sequences and minimum total processing times: the simulated annealing algorithm has a high probability of finding the global optimal solution in the search space, but it cannot guarantee that every execution of the program finds it, and a run may end in a local optimal solution with a certain probability. Therefore, to make sure that the obtained solution approximates the global optimal solution, each case needs to be run many times, keeping the lowest total processing time found.
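Repeating a case and keeping the best result can be sketched with a small wrapper. This is an illustrative helper, not part of the original program: `best_of_runs` and the `solver` callable (which takes a seed and returns a machining order with its total processing time) are hypothetical names.

```python
from typing import Callable, List, Tuple

# A solver takes a random seed and returns (machining order, total processing time).
Solver = Callable[[int], Tuple[List[int], float]]


def best_of_runs(solver: Solver, runs: int, base_seed: int = 0) -> Tuple[List[int], float]:
    """Run the solver `runs` times with different seeds and keep the best result."""
    best_order, best_time = solver(base_seed)
    for k in range(1, runs):
        order, total_time = solver(base_seed + k)
        if total_time < best_time:
            best_order, best_time = order, total_time
    return best_order, best_time


if __name__ == "__main__":
    # Stand-in solver with made-up results, used only to show the call pattern.
    def fake_solver(seed: int) -> Tuple[List[int], float]:
        return [seed], float((seed * 7) % 5)

    print(best_of_runs(fake_solver, runs=4, base_seed=1))  # ([3], 1.0)
```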

Table 4: Comparison of parameters of each flow shop scheduling case

| Instance | Num of workpieces, $n$ | Num of machines, $m$ | Initial temperature, $t_{0}$ | Decay coefficient, $C$ | Running time (s) |
|---|---|---|---|---|---|
| 0 | 11 | 5 | 100 | 0.9 | 7.25 |
| | | | 500 | 0.99 | 105.53 |
| | | | 1000 | 0.99 | 221.29 |
| 1 | 6 | 8 | 100 | 0.9 | 6.37 |
| | | | 500 | 0.99 | 80.67 |
| | | | 1000 | 0.99 | 191.34 |
| 2 | 11 | 4 | 100 | 0.9 | 6.09 |
| | | | 500 | 0.99 | 140.88 |
| | | | 1000 | 0.99 | 173.82 |
| 3 | 14 | 5 | 100 | 0.9 | 16.31 |
| | | | 500 | 0.99 | 113.28 |
| | | | 1000 | 0.99 | 192.84 |
| 4 | 16 | 4 | 100 | 0.9 | 10.34 |
| | | | 500 | 0.99 | 109.78 |
| | | | 1000 | 0.99 | 238.90 |
| 5 | 10 | 6 | 100 | 0.9 | 8.09 |
| | | | 500 | 0.99 | 105.15 |
| | | | 1000 | 0.99 | 165.66 |
| 6 | 20 | 10 | 100 | 0.9 | 30.81 |
| | | | 500 | 0.99 | 333.28 |
| | | | 1000 | 0.99 | 342.16 |
| 7 | 20 | 15 | 100 | 0.9 | 45.09 |
| | | | 500 | 0.99 | 508.24 |
| | | | 1000 | 0.99 | 522.76 |
| 8 | 20 | 5 | 100 | 0.9 | 22.25 |
| | | | 500 | 0.99 | 167.72 |
| | | | 1000 | 0.99 | 251.45 |
| 9 | 20 | 15 | 100 | 0.9 | 43.36 |
| | | | 500 | 0.99 | 484.15 |
| | | | 1000 | 0.99 | 504.17 |
| 10 | 50 | 10 | 100 | 0.9 | 129.44 |
| | | | 500 | 0.99 | 851.30 |
| | | | 1000 | 0.99 | 894.92 |

We have the total number of iterations of the final outer loop

- According to Case 0 in Table 4, if the initial temperature $t_{0}=100$ and the temperature decrement rate $C = 0.9$, then $k_{finish} = \dfrac{\ln(0.096/100)}{\ln 0.9} + 1 = 67$, $n=11$, $m=5$, so the time complexity is $67 \times 1000 \times (2 \times 11 \times 5) = 7.37 \times 10^6$, and the running time is 7.25 seconds.
- According to Case 1 in Table 4, if the initial temperature $t_{0}=100$ and the temperature decrement rate $C = 0.9$, then $k_{finish} = \dfrac{\ln(0.096/100)}{\ln 0.9} + 1 = 67$, $n=6$, $m=8$, so the time complexity is $67 \times 1000 \times (2 \times 6 \times 8) = 6.43 \times 10^6$, and the running time is 6.37 seconds.
- According to Case 8 in Table 4, if the initial temperature $t_{0}=500$ and the temperature decrement rate $C = 0.99$, then $k_{finish} = \dfrac{\ln(0.099/500)}{\ln 0.99} + 1 = 849$, $n=20$, $m=5$, so the time complexity is $849 \times 1000 \times (2 \times 20 \times 5) = 169.80 \times 10^6$, and the running time is 167.72 seconds.
- According to Case 10 in Table 4, if the initial temperature $t_{0}=1000$ and the temperature decrement rate $C = 0.99$, then $k_{finish} = \dfrac{\ln(0.099/1000)}{\ln 0.99} + 1 = 918$, $n=50$, $m=10$, so the time complexity is $918 \times 1000 \times (2 \times 50 \times 10) = 918.00 \times 10^6$, and the running time is 894.92 seconds.
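The arithmetic in these four examples can be reproduced with a few lines of Python. This is only a check of the numbers quoted above: the function names are hypothetical, the inner-loop length of 1000 is read off the products in the examples, and rounding $k_{finish}$ to the nearest integer is an assumption that happens to match the quoted values.

```python
import math


def outer_iterations(t0: float, c: float, t_final: float) -> int:
    """k_finish = ln(t_final / t0) / ln(c) + 1, rounded to the nearest integer."""
    return round(math.log(t_final / t0) / math.log(c) + 1)


def estimated_operations(t0: float, c: float, t_final: float,
                         n: int, m: int, inner_iterations: int = 1000) -> int:
    """Outer iterations x inner iterations x (2 x n x m), as in the examples."""
    return outer_iterations(t0, c, t_final) * inner_iterations * (2 * n * m)


if __name__ == "__main__":
    print(outer_iterations(100, 0.9, 0.096))                # 67
    print(estimated_operations(100, 0.9, 0.096, 11, 5))     # 7370000
    print(estimated_operations(500, 0.99, 0.099, 20, 5))    # 169800000
    print(estimated_operations(1000, 0.99, 0.099, 50, 10))  # 918000000
```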

It can be seen from the calculation results of the above time complexity that, corresponding to different cases and different parameters, the time complexity calculation results are still proportional to the running time in Table 4. Therefore, it can be intuitively seen that the main factors affecting the running time of the program are the number of workpieces, the number of machines, the number of iterations of the outer loop and the number of iterations of the inner loop, of which the initial temperature

Personal Summary

From this experiment, I learned how to use the simulated annealing algorithm to schedule workpieces and machines in a flow shop. The larger the number of workpieces and machines, that is, the larger the search space, the higher the initial temperature and the closer to 1 the temperature decrement rate should be set, so that the current candidate processing sequence has enough time and a high enough probability to escape local minima and converge slowly toward the global optimal solution.

Since the simulated annealing algorithm is a metaheuristic, its parameters need to be tuned for each problem. For cases with a small search space, a higher temperature can give good solution quality, but convergence may be unnecessarily slow, and a lower temperature may reach the same global optimal solution. For cases with a large search space, a lower temperature makes convergence too fast, and the global optimal solution may not be found. Therefore, when initializing the parameters in the code, I set the initial temperature and temperature decrement rate for each case according to its number of workpieces and machines, so the program can be run without modifying the parameters by hand for each case.

The Metropolis criterion in the simulated annealing algorithm also improves on traditional optimization algorithms. It adopts a multiple search strategy that lets the current candidate solution accept, with a certain probability, a neighborhood solution that is no better than it, which keeps the algorithm from getting stuck in a local optimal solution. In addition, a reheating function can be added to the algorithm to raise the temperature again under certain conditions and adjust the search strategy. The simulated annealing algorithm can also be combined with other methods, such as genetic algorithms, chaotic search, neural networks and gradient methods, for further optimization.

Reference:

[1] Milos S. Mathematical Models of Flow Shop and Job Shop Scheduling Problems[J]. International Journal of Physical and Mathematical Sciences, 2007, 1(7): 308.

[2] Mohammed G, Vassili V, Amir H. A Review on Traditional and Modern Structural Optimization: Problems and Techniques[M]. Amsterdam: Elsevier, 2013, 5-10.

[3] Ozan E. Optimization in Renewable Energy Systems[M]. Oxford: Butterworth-Heinemann, 2017, 27-74.

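[4] Li Yuanxiang, Xiang Zhenglong, Zhang Weiyan. Relaxation model and time complexity analysis of the simulated annealing algorithm (in Chinese)[J]. Chinese Journal of Computers, 2020, 43(5): 802-803.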