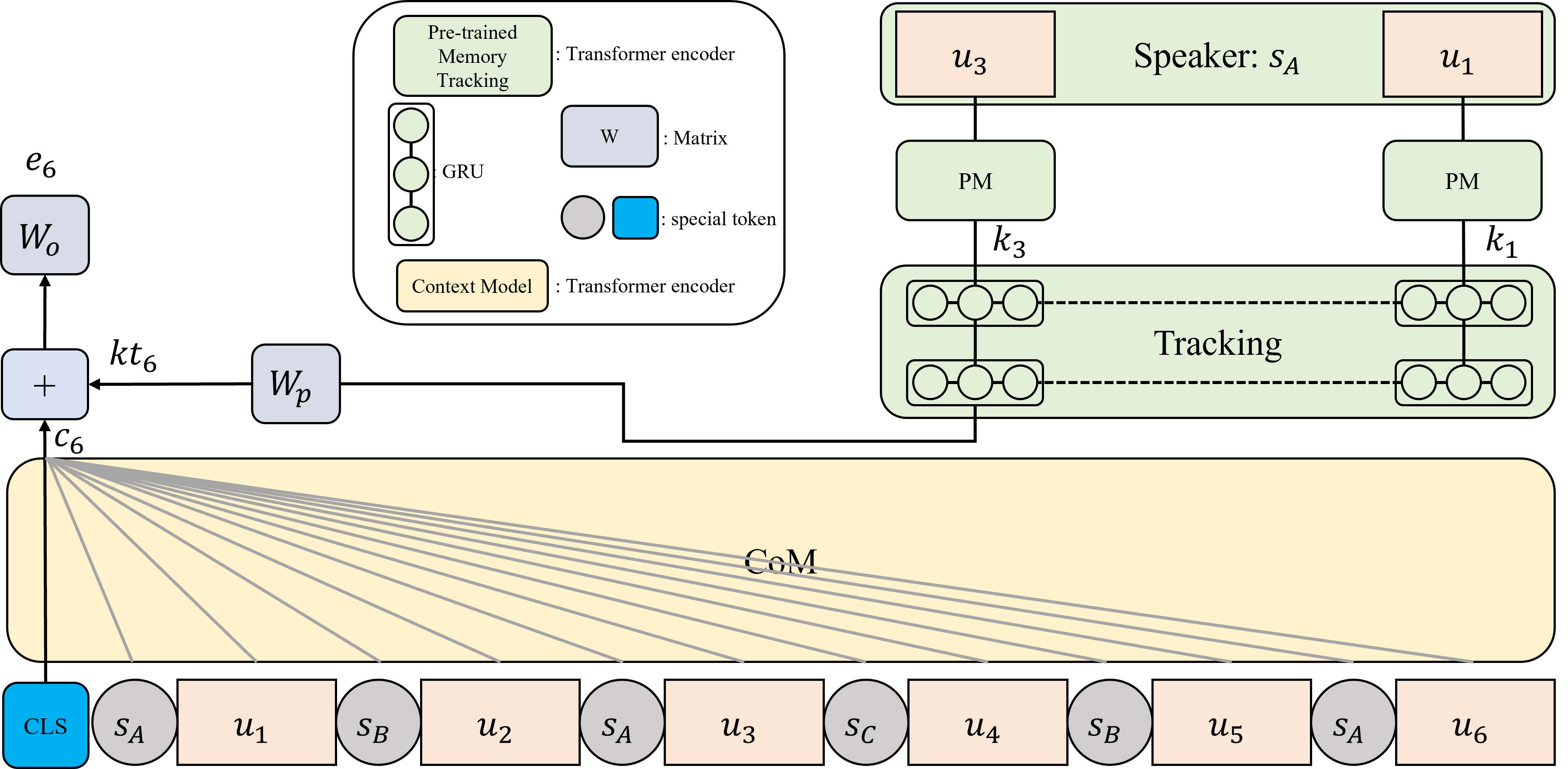

CoMPM: Context Modeling with Speaker's Pre-trained Memory Tracking for Emotion Recognition in Conversation (NAACL 2022)

- Pytorch 1.8

- Python 3.6

- Transformer 4.4.0

- sklearn

Each data is split into train/dev/test in the dataset folder.

For CoMPM, CoMPM(s), CoMPM(f)

Argument

- pretrained: type of model (CoM and PM) (default: roberta-large)

- initial: initial weights of the model (pretrained or scratch) (default: pretrained)

- cls: label class (emotion or sentiment) (default: emotion)

- dataset: one of 4 dataset (dailydialog, EMORY, iemocap, MELD)

- sample: ratio of the number of the train dataset (default: 1.0)

- freeze: Whether to learn the PM or not

python3 train.py --initial {pretrained or scratch} --cls {emotion or sentiment} --dataset {dataset} {--freeze}For a combination of CoM and PM (based on different model)

Argument

- context_type: type of CoM

- speaker_type: type of PM

cd CoMPM_diff

python3 train.py {--argument}For CoM or PM

cd CoM or PM

python3 train.py {--argument}- Google drive

- Unpack model.tar.gz and replace it in {dataset}_models/roberta-large/pretrained/no_freeze/{class}/{sampling}/model.bin

- dataset: dailydialog, EMORY, iemocap, MELD

- class: "emotion" or "sentiment"

- sampling: 0.0 ~ 1.0, default: 1.0

python3 test.pyTest result for one seed. In the paper, the performance of CoMPM was reported as an average of three seeds.

| Model | Dataset (emotion) | Performace: one seed (paper) |

|---|---|---|

| CoMPM | IEMOCAP | 66.33 (66.33) |

| CoMPM | DailyDialog | 52.46/60.41 (53.15/60.34) |

| CoMPM | MELD | 65.53 (66.52) |

| CoMPM | EmoryNLP | 38.56 (37.37) |

@article{lee2021compm,

title={CoMPM: Context Modeling with Speaker's Pre-trained Memory Tracking for Emotion Recognition in Conversation},

author={Lee, Joosung and Lee, Wooin},

journal={arXiv preprint arXiv:2108.11626},

year={2021}

}