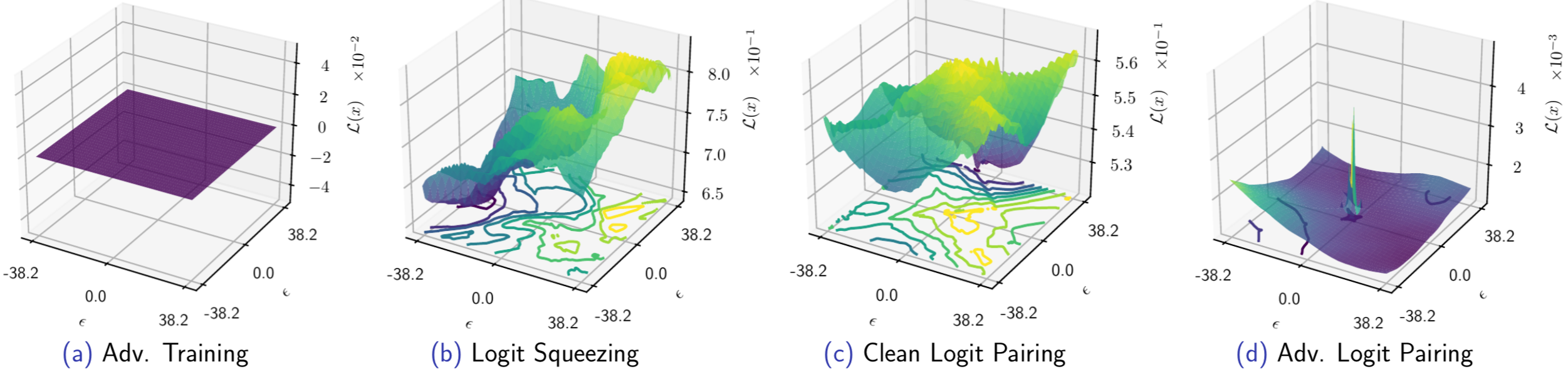

The logit regularization methods proposed in "Adversarial Logit Pairing" distort the input loss surface, which makes gradient-based attacks difficult.

We highlight that properly evaluating adversarial robustness is still an unresolved task.

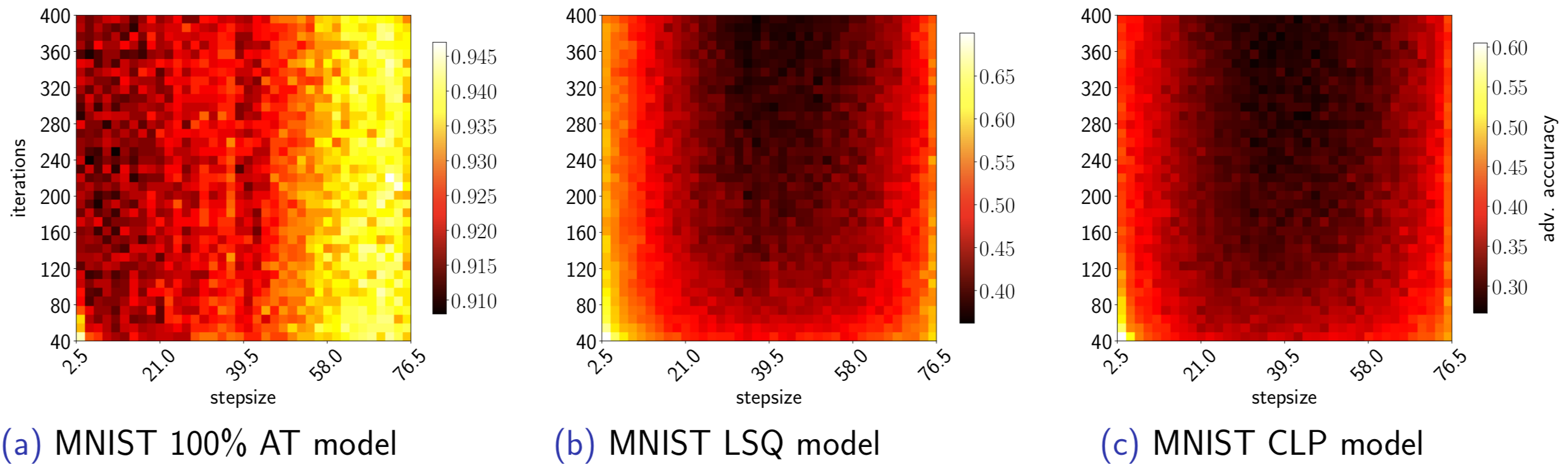

The PGD attack with the default settings is not necessarily a strong attack. Testing different attack hyperparameters (the number of iterations, the step size) is crucial when evaluating the robustness of a new defense.
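As a rough illustration of which knobs are meant here, the following is a minimal NumPy sketch of an L∞ PGD attack on a toy linear softmax classifier. It is not the attack code from this repository: the model, the helper names (`ce_loss_and_grad`, `pgd_linf`), the random data, and the hyperparameter values are all assumptions chosen only to keep the example self-contained.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # stabilize the exponentials
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def ce_loss_and_grad(W, b, x, y):
    """Per-example cross-entropy of a linear softmax model and its gradient w.r.t. x."""
    p = softmax(x @ W + b)
    idx = np.arange(len(y))
    loss = -np.log(p[idx, y] + 1e-12)
    p[idx, y] -= 1.0                      # dL/dlogits = softmax - onehot(y)
    return loss, p @ W.T                  # chain rule through logits = x @ W + b

def pgd_linf(W, b, x, y, eps, n_iter, step_size, rng):
    """One PGD run from a random start inside the L-inf ball of radius eps."""
    x_adv = np.clip(x + rng.uniform(-eps, eps, size=x.shape), 0.0, 1.0)
    for _ in range(n_iter):
        _, grad = ce_loss_and_grad(W, b, x_adv, y)
        x_adv = x_adv + step_size * np.sign(grad)   # ascent step on the loss
        x_adv = np.clip(x_adv, x - eps, x + eps)    # project back onto the eps-ball
        x_adv = np.clip(x_adv, 0.0, 1.0)            # stay in the valid input range
    loss, _ = ce_loss_and_grad(W, b, x_adv, y)
    return x_adv, loss

# Toy data and model (hypothetical, for illustration only).
rng = np.random.default_rng(0)
W, b = rng.normal(size=(20, 10)), np.zeros(10)
x, y = rng.uniform(size=(64, 20)), rng.integers(0, 10, size=64)
eps = 0.1
clean_loss, _ = ce_loss_and_grad(W, b, x, y)

# Compare several (iterations, step size) settings instead of trusting one default.
for n_iter, step_size in [(10, 0.01), (40, 0.01), (100, 0.005)]:
    x_adv, adv_loss = pgd_linf(W, b, x, y, eps, n_iter, step_size, rng)
    print(n_iter, step_size, float(adv_loss.mean()))
```

On a real defended network the loss surface is far less benign than in this toy model, which is exactly why a sweep over the number of iterations and the step size is worth the extra compute.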

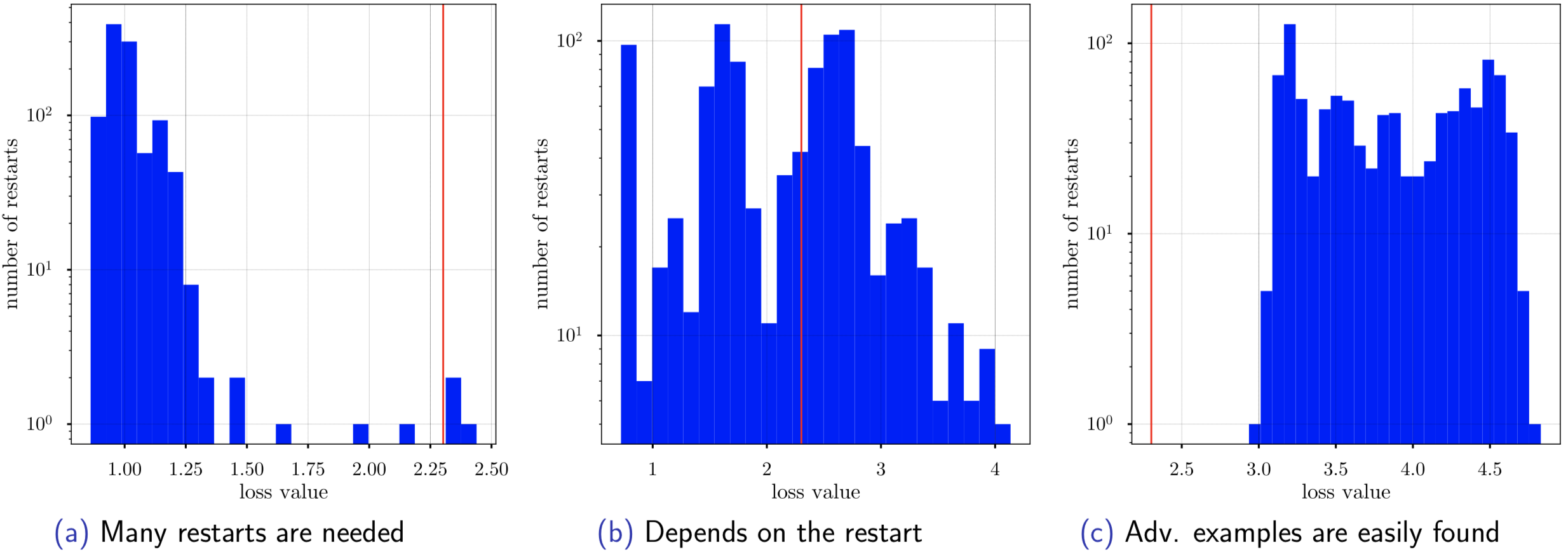

Performing many random restarts is very important when the loss surface is distorted. Below is an example of the loss values achieved by the PGD attack on different test points (a CLP model, MNIST). Using only 1 random restart (a standard practice) may not be sufficient to find an adversarial example.

The vertical red line denotes the loss value −ln(0.1), which guarantees that for this and any higher value of the loss we find an adversarial example.
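The threshold follows from the number of classes: with 10 classes, a cross-entropy loss above −ln(0.1) means the true class has probability below 0.1, so some other class must have a higher probability. The sketch below combines this check with a worst-case-over-restarts wrapper. The function names and the `toy_attack` stand-in (which imitates an attack that only occasionally reaches a high loss) are hypothetical and are not taken from this repository.

```python
import math
import numpy as np

def worst_case_over_restarts(attack_fn, n_restarts, rng):
    """Call attack_fn(rng) n_restarts times and keep the per-example maximum loss."""
    best = None
    for _ in range(n_restarts):
        loss = np.asarray(attack_fn(rng), dtype=float)
        best = loss if best is None else np.maximum(best, loss)
    return best

def misclassification_guaranteed(loss, n_classes=10):
    """True where the cross-entropy loss reaches -ln(1/n_classes).

    Above the threshold the true-class probability is below 1/n_classes,
    so another class is more probable. Exactly at the threshold a tie is
    possible in principle; the comparison follows the text and includes it.
    """
    return loss >= -math.log(1.0 / n_classes)

def toy_attack(rng, n_points=5, p_success=0.1):
    # Hypothetical stand-in for one PGD run from a random start:
    # most runs get stuck at a low loss, a few reach a high loss.
    success = rng.random(n_points) < p_success
    return np.where(success, 5.0, 0.5)

rng = np.random.default_rng(0)
for n_restarts in (1, 10, 100):
    best = worst_case_over_restarts(toy_attack, n_restarts, rng)
    n_broken = int(misclassification_guaranteed(best).sum())
    print(n_restarts, "restarts:", n_broken, "of", best.size, "points broken")
```

Taking the per-example maximum means a point counts as robust only if every restart fails on it, which is the conservative way to report adversarial accuracy.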


In the table below, we provide our independently trained models on MNIST, CIFAR-10, and Tiny ImageNet together with the Fine-tuned Plain + ALP LL model released here.

Note that we could break CLP, LSQ, and some ALP models only by using the PGD attack with many iterations and many random restarts. This is computationally expensive, so an interesting direction is to come up with new attacks that can break such models with less computational effort. For this purpose, we encourage researchers to use the models given below to evaluate new attacks.

MNIST:

| Model | Accuracy | Adversarial accuracy with the baseline attack | Adversarial accuracy with our strongest attack |
|---|---|---|---|
| Plain | 99.2% | 0.0% | 0.0% |
| CLP | 98.8% | 62.4% | 4.1% |
| LSQ | 98.8% | 70.6% | 5.0% |
| Plain + ALP | 98.5% | 96.0% | 88.9% |
| 50% AT + ALP | 98.3% | 97.2% | 89.9% |
| 50% AT | 99.1% | 92.7% | 88.2% |
| 100% AT + ALP | 98.4% | 96.6% | 85.7% |
| 100% AT | 98.9% | 95.2% | 88.0% |

CIFAR-10:

| Model | Accuracy | Adversarial accuracy with the baseline attack | Adversarial accuracy with our strongest attack |
|---|---|---|---|
| Plain | 83.0% | 0.0% | 0.0% |
| CLP | 73.9% | 2.8% | 0.0% |
| LSQ | 81.7% | 27.0% | 1.7% |
| Plain + ALP | 71.5% | 23.6% | 10.7% |
| 50% AT + ALP | 70.4% | 21.8% | 10.5% |
| 50% AT | 73.8% | 18.6% | 7.3% |
| 100% AT + ALP | 65.7% | 19.0% | 6.4% |
| 100% AT | 65.7% | 16.0% | 6.7% |

Tiny ImageNet:

| Model | Accuracy | Adversarial accuracy with the baseline attack | Adversarial accuracy with our strongest attack |
|---|---|---|---|
| Plain | 53.0% | 3.9% | 0.4% |
| CLP | 48.5% | 12.2% | 0.7% |
| LSQ | 49.4% | 12.8% | 0.8% |
| Plain + ALP LL | 53.5% | 17.2% | 0.8% |
| Fine-tuned Plain + ALP LL | 72.0% | 31.8% | 3.6% |
| 50% AT LL | 46.3% | 25.1% | 9.4% |
| 50% AT LL + ALP LL | 45.2% | 26.3% | 13.5% |
| 100% AT LL | 41.2% | 25.5% | 16.3% |
| 100% AT LL + ALP LL | 37.0% | 25.4% | 16.5% |

- For Tiny ImageNet code: TensorFlow 1.8 and Python 2.7

- For MNIST and CIFAR-10 code: TensorFlow 1.8, PyTorch 0.4, CleverHans 3.0.1, and Python 3.5

- To set up the Tiny ImageNet dataset in the correct format (tf.records), run `tiny_imagenet.sh`.

You can find examples on how to run the code for Tiny ImageNet in the following scripts:

- `train.sh`: Trains a model from scratch.
- `eval.sh`: Evaluates an existing model (see the table for the particular Tiny ImageNet models). Note that with this script you might not be able to reproduce the results from the paper exactly, since the random seed was not fixed for those evaluations. However, the results are expected to be very close to the reported ones.

More details are available via `python <script> --helpfull`.

Note that the code for Tiny ImageNet is based on the official code release of the "Adversarial Logit Pairing" paper from July 2018.

You can find examples on how to run the code for MNIST and CIFAR-10 in the following scripts:

- `train.sh`: Trains a model from scratch.
- `eval.sh`: Evaluates an existing model.

More details are available via `python train.py --help` and `python eval.py --help`.

Please contact Marius Mosbach or Maksym Andriushchenko regarding this code.

@article{mosbach2018logit,
  title={Logit Pairing Methods Can Fool Gradient-Based Attacks},
  author={Mosbach, Marius and Andriushchenko, Maksym and Trost, Thomas and Hein, Matthias and Klakow, Dietrich},
  conference={NeurIPS 2018 Workshop on Security in Machine Learning},
  year={2018}
}