Go to portal.expanse.sdsc.edu and log in with your "ucsd.edu" credentials. If you are instructed otherwise, you may instead use your "access-ci.org" credentials (if you have them).

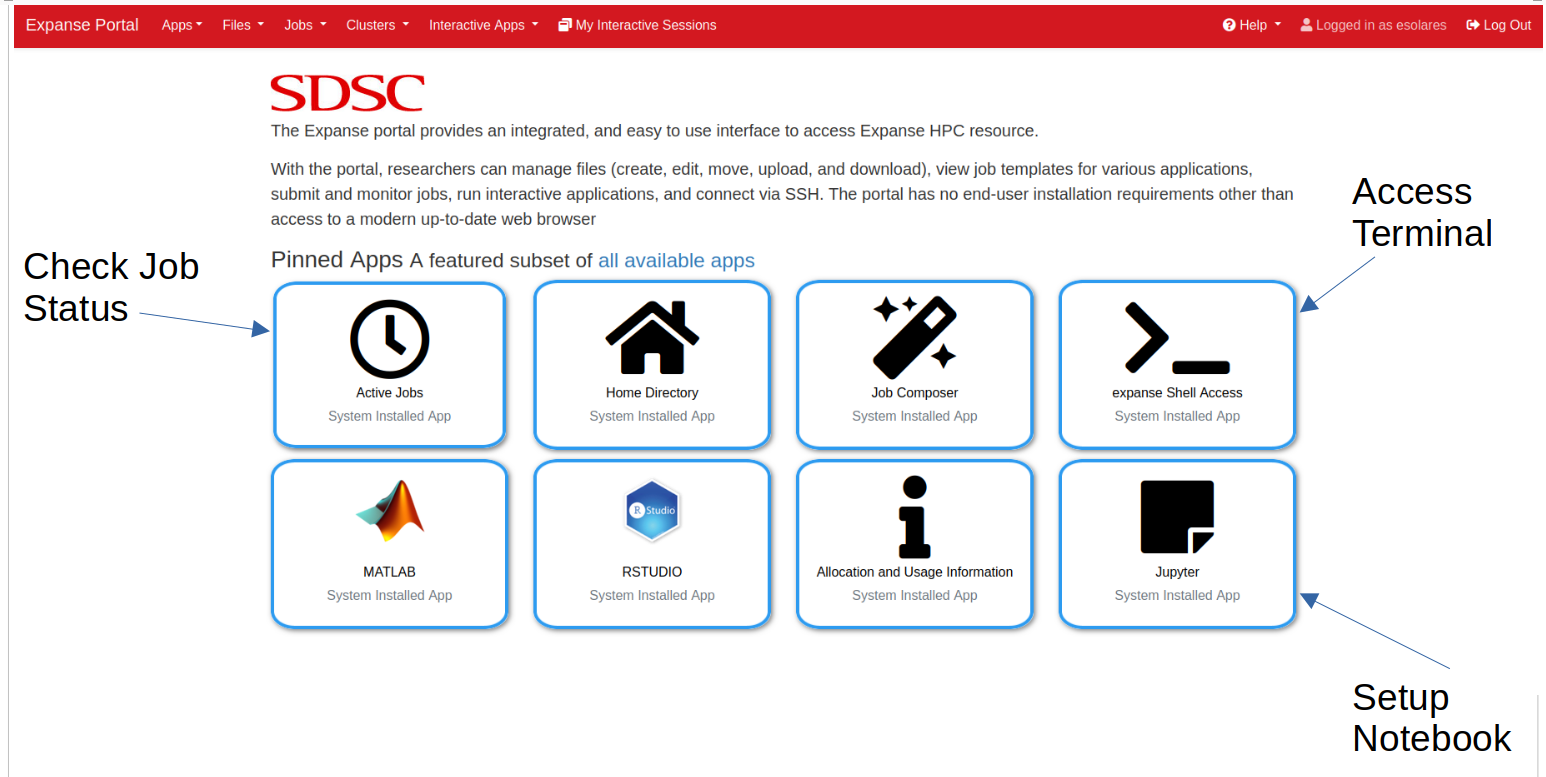

Once you are logged in, you will see the SDSC Expanse Portal along with several Pinned Apps.

We will be working with the following apps:

- expanse Shell Access: to access a terminal.

- Jupyter: to set up a Jupyter notebook session and run JupyterLab.

- Active Jobs: to check the status of your jobs.

Click on the "expanse Shell Access" app in the SDSC Expanse Portal. Once the terminal opens, run the following commands from your home directory (the Jupyter settings later in this guide expect the links to be there):

```bash
# Add a symbolic link (named after your username) to your project folder
ln -sf /expanse/lustre/projects/uci150/$USER

# Add a symbolic link to the `esolares` folder, where the Singularity
# images are stored
ln -sf /expanse/lustre/projects/uci150/esolares

# (Optional) To see your group members' folders, run the following
# for each group member's username
ln -sf /expanse/lustre/projects/uci150/GROUPMEMBERUSERNAME
```

Note that you need to run the above commands only once, when accessing the Portal for the first time.
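Before requesting a Jupyter session, you may want to confirm that the links resolve where the Jupyter form expects them. The sketch below is a minimal, hypothetical check (`missing_setup_paths` is our own name, not part of the Portal); it assumes the links were created in your home directory and that the image file name matches the one given in the Jupyter settings below. If `python3` is available in the Shell Access terminal, you could save it to a file and run it there.

```python
import getpass
from pathlib import Path
from typing import List

# Image file name taken from the "Singularity Image File Location" setting.
IMAGE_NAME = "spark_py_latest_jupyter_dsc232r.sif"


def missing_setup_paths(home: Path, user: str) -> List[str]:
    """Return the expected setup paths under `home` that do not resolve.

    Hypothetical helper: checks the two symbolic links created above and
    the Singularity image reached through the `esolares` link.
    """
    expected = [
        home / user,                     # link to your project folder
        home / "esolares",               # link to the shared images folder
        home / "esolares" / IMAGE_NAME,  # image used by the Jupyter app
    ]
    # Path.exists() follows symlinks, so a dangling link counts as missing.
    return [str(p) for p in expected if not p.exists()]


if __name__ == "__main__":
    missing = missing_setup_paths(Path.home(), getpass.getuser())
    if missing:
        print("Missing or broken:", *missing, sep="\n  ")
    else:
        print("Setup looks complete.")
```

An empty result means both links exist and the image is reachable; a listed path usually means the corresponding `ln -sf` command was run from a different directory.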

Click on the "Jupyter" app in the SDSC Expanse Portal.

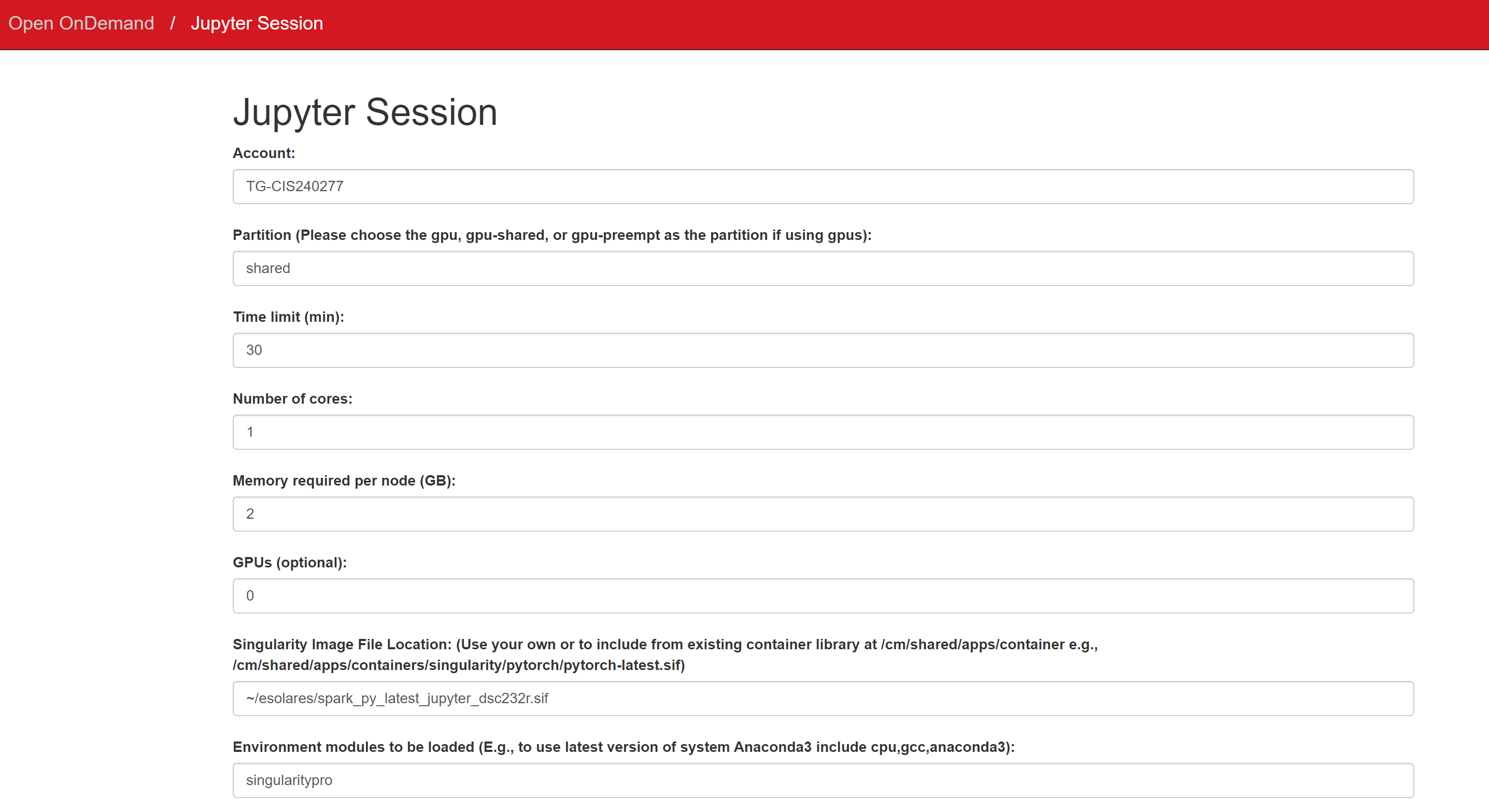

It will allow you to request a new Jupyter Session as shown below:

You will need to fill out the following fields:

-

Account:

TG-CIS240277 -

Partition:

shared -

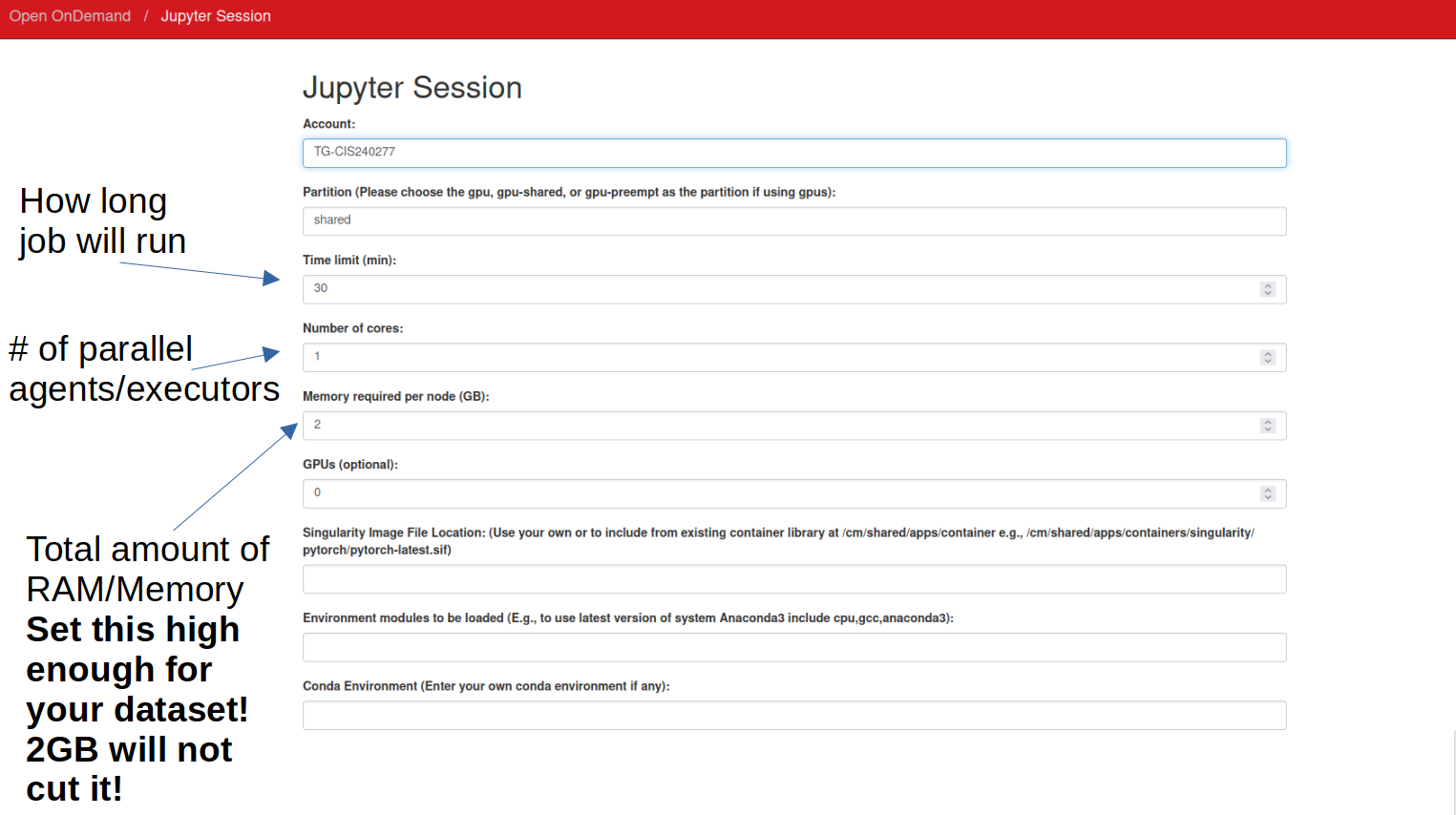

Time limit (min): Enter an integer value denoting the number of minutes you want to work on your notebook environment.

-

Number of cores: Enter an integer value denoting the number of cores your pyspark session with need. Enter a value between

2and128. -

Memory required per node (GB): Enter an integer value denoting the total memory required. Initially, start with a value of

2, i.e., 2GB. You may increase it if you get issues where you need more than 2GB per executor in your Spark Session (Spark will let you know about the amount of RAM being too low when loading your datasets). The maximum value allowed is250, i.e., 250GB.For example, if you have 128GB of total memory and 8 cores, each core gets 128/8 = 16GB of memory.

-

Singularity Image File Location:

~/esolares/spark_py_latest_jupyter_dsc232r.sif -

Environment Modules to be loaded:

singularitypro -

Working Directory:

home -

Type:

JupyterLab
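The cores and memory fields, and the per-core arithmetic from the memory example, can be summarized in a small sanity check. This is a minimal sketch that encodes only the ranges stated in this guide (2 to 128 cores, 2 to 250 GB); `memory_per_core_gb` is a hypothetical name, and the Portal performs its own validation of the form.

```python
def memory_per_core_gb(total_memory_gb: int, cores: int) -> float:
    """Return the memory (GB) each core gets, after checking the values
    against the ranges given in this guide (hypothetical helper)."""
    if not (isinstance(total_memory_gb, int) and isinstance(cores, int)):
        raise TypeError("Both fields take integer values")
    if not 2 <= cores <= 128:
        raise ValueError("Number of cores must be between 2 and 128")
    if not 2 <= total_memory_gb <= 250:
        raise ValueError("Memory required per node must be between 2 and 250 GB")
    # The node's memory is split evenly across cores: 128 GB / 8 cores = 16 GB.
    return total_memory_gb / cores


print(memory_per_core_gb(128, 8))  # 16.0
```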

Once you have filled out the above fields, go ahead and click "Submit".

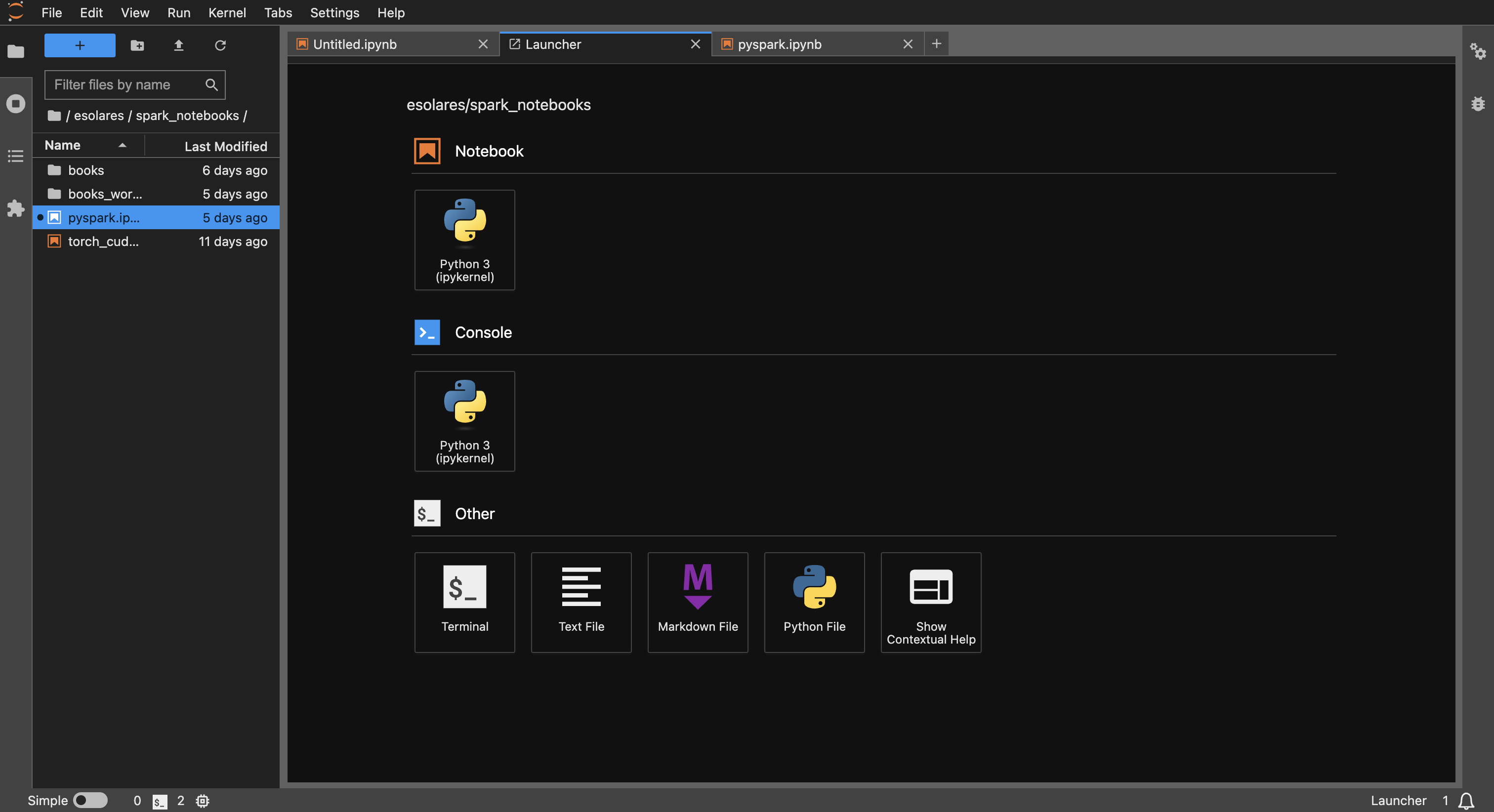

After you click "Submit", the SDSC Expanse Portal places your job request in a queue. Depending on resource availability, this may take some time. Once the request is processed, a JupyterLab session opens, where you can navigate your files and create your own Python 3 Jupyter notebooks.

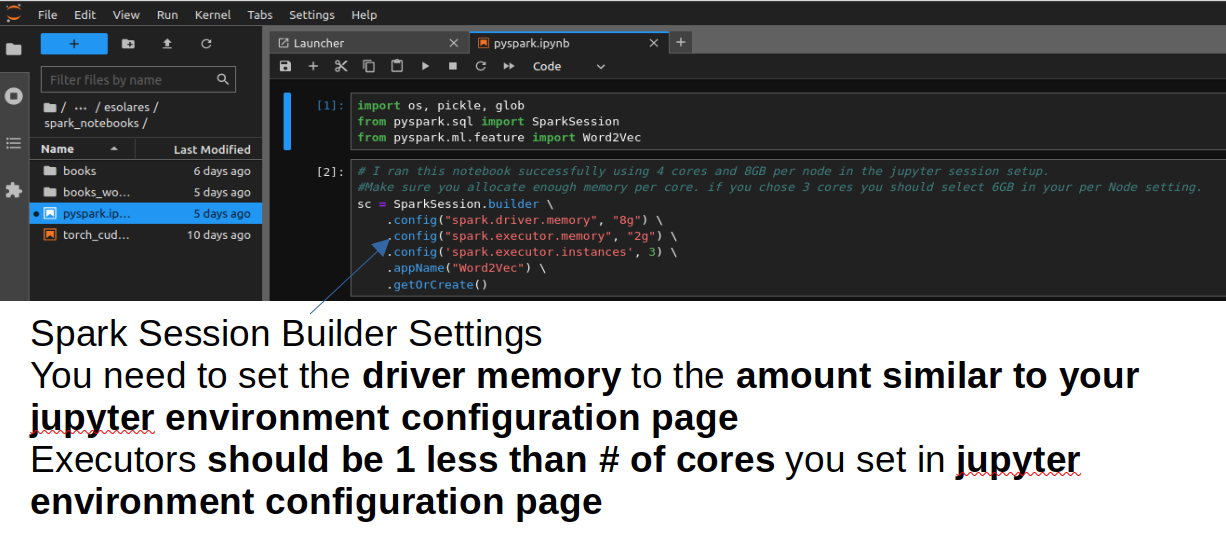

Based on the resources you requested in the Jupyter form above, update the following code to build your SparkSession.

For example, if you have 128 GB of total memory and 8 cores, each core gets 128/8 = 16 GB of memory. The driver can take 1 or more cores, and the executors can take the remaining cores (7 or fewer).

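```python
from pyspark.sql import SparkSession

# Example for a session with 128 GB of memory and 8 cores:
# 128 / 8 = 16 GB per core; 1 core for the driver, 7 for executors.
sc = SparkSession.builder \
    .config("spark.driver.memory", "16g") \
    .config("spark.executor.memory", "16g") \
    .config("spark.executor.instances", 7) \
    .getOrCreate()
```

Driver memory is the memory allocated to the driver (master) process. It can be set to the same value as the executor memory as long as you are not sending a lot of data back to the driver (e.g., by calling `collect()` on a large DataFrame or otherwise pulling large results into the notebook).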
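The arithmetic in the example above can be wrapped in a helper so that the SparkSession settings follow whatever you requested in the Jupyter form. This is a sketch under the same assumptions as the example (memory split evenly per core, the remaining cores used as executors); `spark_settings` is a hypothetical name, and giving the driver one per-core share of memory for each of its cores is our own assumption. The code only builds the settings, so it runs without PySpark installed.

```python
def spark_settings(total_memory_gb: int, cores: int, driver_cores: int = 1) -> dict:
    """Derive SparkSession settings from the Jupyter form values (hypothetical helper).

    Assumes each core gets total_memory_gb // cores GB, the driver uses
    `driver_cores` cores (and that many per-core shares of memory), and
    every remaining core runs one executor.
    """
    if not 1 <= driver_cores < cores:
        raise ValueError("driver_cores must leave at least one core for executors")
    per_core_gb = total_memory_gb // cores  # whole GB, e.g. 128 // 8 = 16
    if per_core_gb < 1:
        raise ValueError("Request at least 1 GB of memory per core")
    return {
        "spark.driver.memory": f"{per_core_gb * driver_cores}g",
        "spark.executor.memory": f"{per_core_gb}g",
        "spark.executor.instances": cores - driver_cores,
    }


print(spark_settings(128, 8))
# {'spark.driver.memory': '16g', 'spark.executor.memory': '16g', 'spark.executor.instances': 7}
```

In the notebook, you could then apply each key/value pair with `SparkSession.builder.config(key, value)` in a loop before calling `getOrCreate()`.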

Example Spark notebooks are available at `~/esolares/spark_notebooks`.

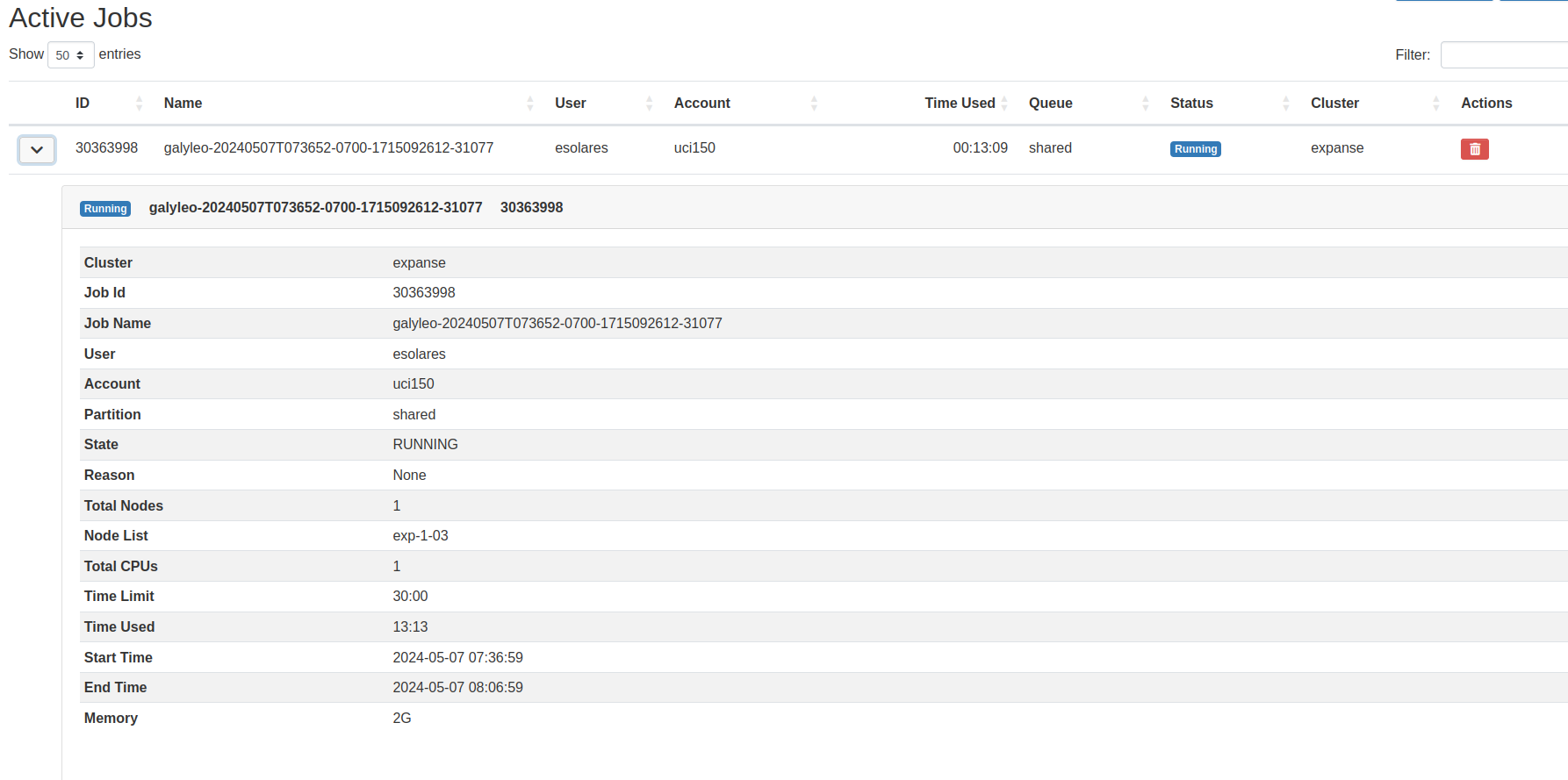

Click on the "Active Jobs" app in the SDSC Expanse Portal and use it while debugging Spark jobs. Note each job's Status and Reason fields: if a recently run job has died, the Reason field shows why.

If you are having trouble, please submit a ticket to https://support.access-ci.org/.