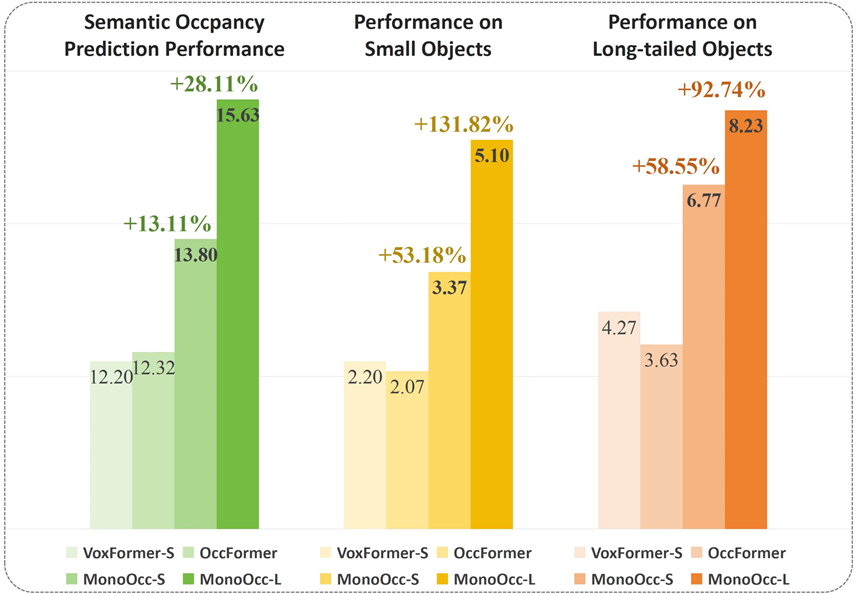

Our method outperforms the state-of-the-art MonoOcc and OccFormer in the SSC mIoU, while also achieving a significant boost on both small objects (bicycle, motorcycle, traffic-sign) and long-tailed objects (truck, other-vehicle, other-ground).

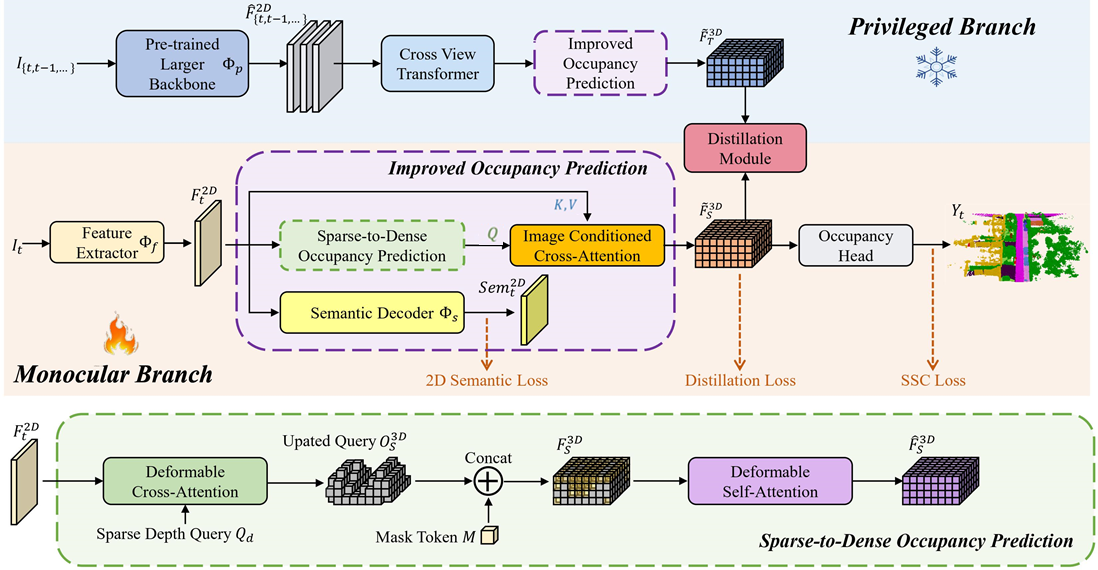

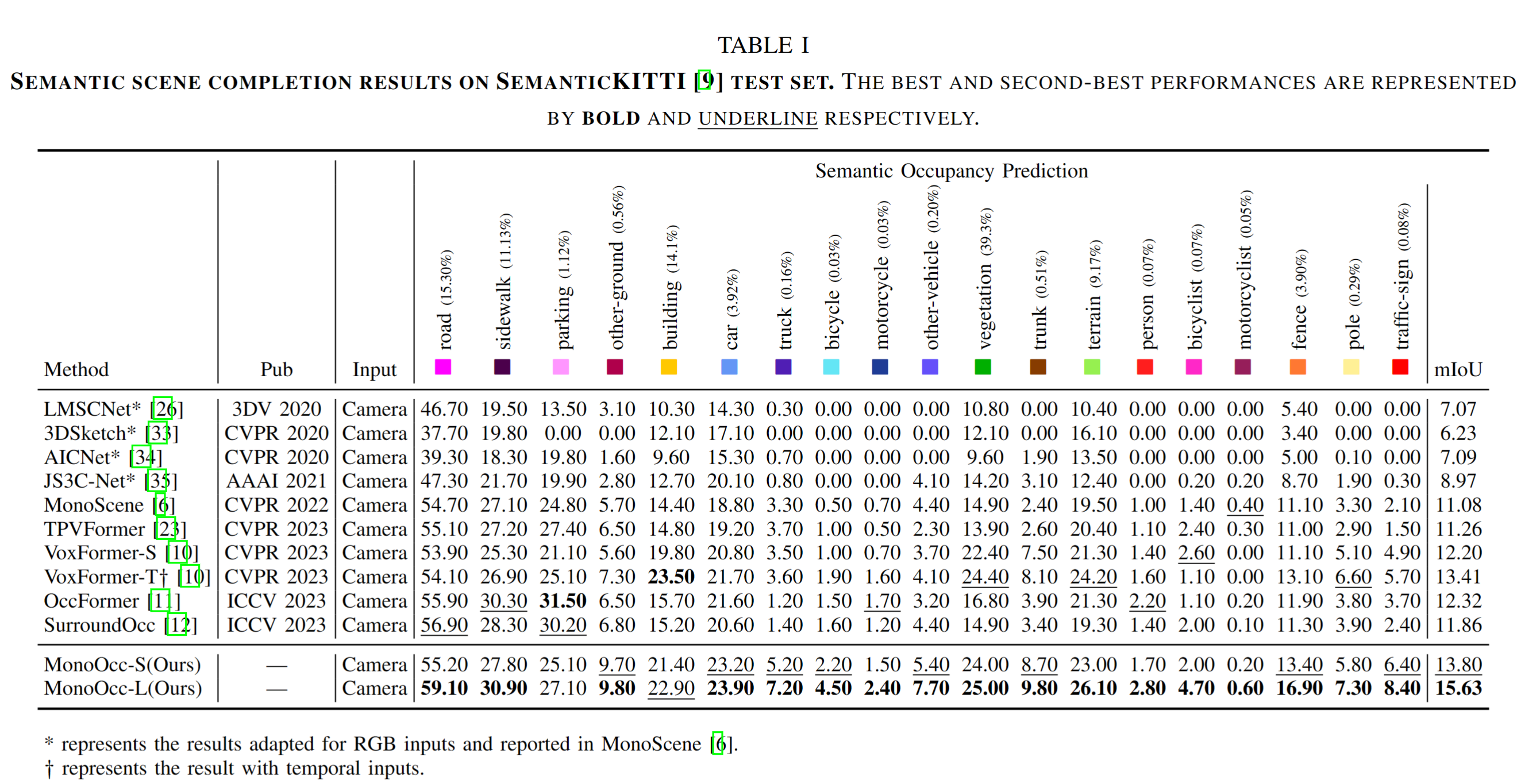

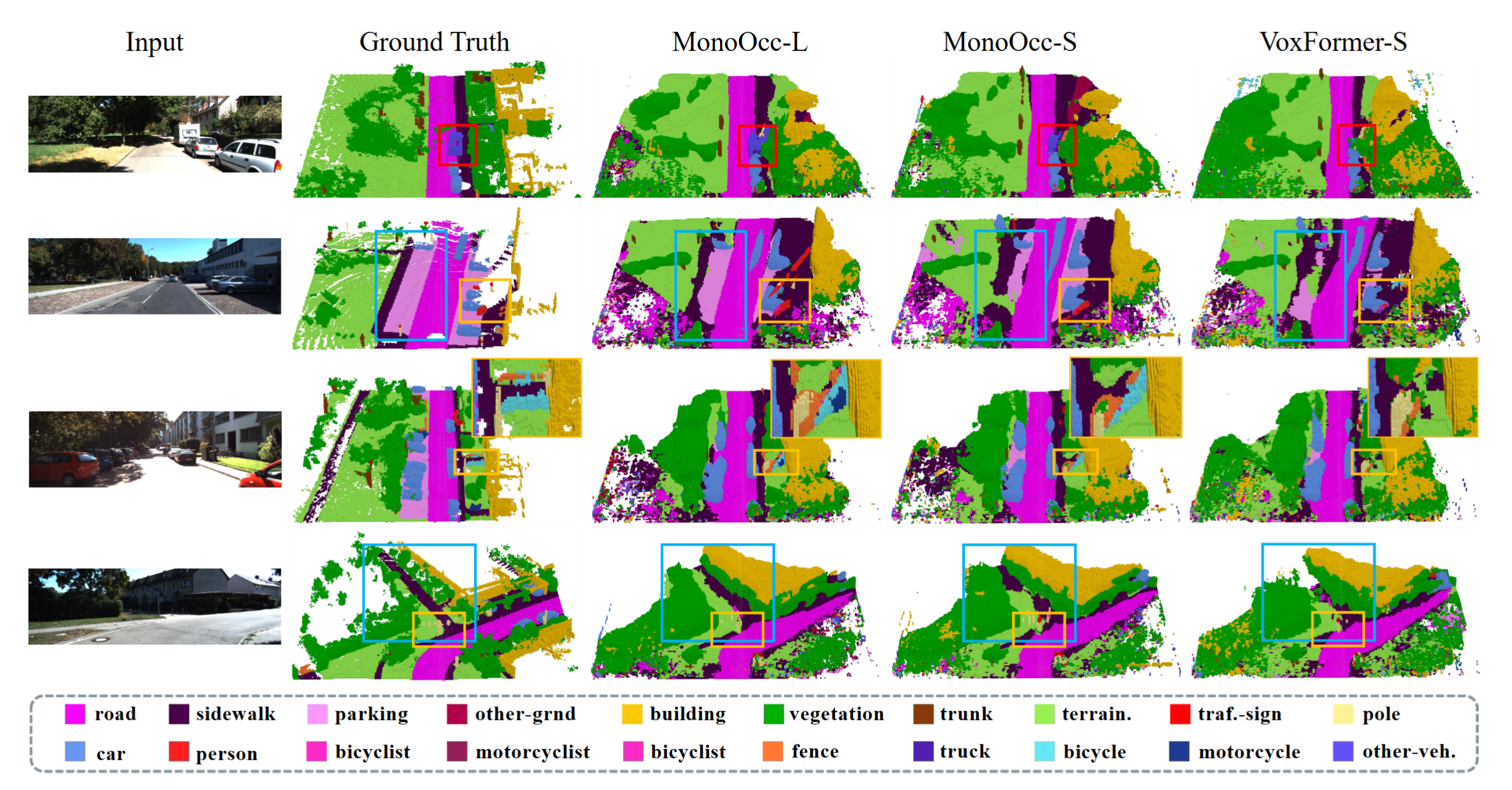

Monocular Semantic Occupancy Prediction aims to infer the complete 3D geometry and semantic information of a scene from 2D images alone. It has garnered significant attention, particularly for its potential to enhance the 3D perception of autonomous vehicles. However, existing methods rely on a complex cascaded framework that restores 3D scenes from relatively limited information: supervision is applied only to the output of the whole network, the input is a single frame, and the image backbone is small. These limitations hinder the optimization of the framework and yield inferior predictions, particularly for small and long-tailed objects. To address these issues, we propose MonoOcc. In particular, we (i) improve the monocular occupancy prediction framework by proposing an auxiliary semantic loss that supervises the shallow layers of the framework and an image-conditioned cross-attention module that refines voxel features with visual clues, and (ii) employ a distillation module that transfers temporal information and richer knowledge from a larger image backbone to the monocular semantic occupancy prediction framework at low hardware cost. With these advantages, our method achieves state-of-the-art performance on the camera-based SemanticKITTI Scene Completion benchmark. Code and models are available at https://github.com/ucaszyp/MonoOcc.
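The ideas in (i) and (ii) can be illustrated with a small, dependency-free sketch. It is not the MonoOcc implementation: the function names are hypothetical, the loss weights `aux_weight` and `distill_weight` are made-up defaults, mean-squared error is only an assumed choice of distillation loss, and `cross_attend` omits the learned query/key/value projections that a real attention module would have.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(voxel_queries, image_keys, image_values):
    """Refine voxel features with image features (hypothetical sketch).

    Single-head scaled dot-product attention plus a residual connection.
    Learned projections are omitted, so each value vector must have the
    same length as the voxel query it is added to.
    """
    d = len(voxel_queries[0])
    refined = []
    for q in voxel_queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in image_keys]
        weights = softmax(scores)
        attended = [
            sum(w * v[c] for w, v in zip(weights, image_values))
            for c in range(len(image_values[0]))
        ]
        # Residual: keep the original voxel feature and add the visual clue.
        refined.append([qi + ai for qi, ai in zip(q, attended)])
    return refined


def cross_entropy(logits, label):
    """Softmax cross-entropy for a single voxel."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def mean_cross_entropy(batch_logits, labels):
    """Average cross-entropy over a batch of voxels."""
    return sum(cross_entropy(z, y) for z, y in zip(batch_logits, labels)) / len(labels)


def mse(student, teacher):
    """Mean-squared error between flattened features (assumed distillation loss)."""
    return sum((s - t) ** 2 for s, t in zip(student, teacher)) / len(student)


def total_loss(final_logits, shallow_logits, labels, student_feat, teacher_feat,
               aux_weight=0.5, distill_weight=1.0):
    """Combine the three training signals; the weights are hypothetical."""
    # Main semantic scene completion loss on the network's final output.
    l_main = mean_cross_entropy(final_logits, labels)
    # Auxiliary semantic loss that supervises a shallow layer directly.
    l_aux = mean_cross_entropy(shallow_logits, labels)
    # Distillation: pull the small student's features toward a frozen teacher's.
    l_distill = mse(student_feat, teacher_feat)
    return l_main + aux_weight * l_aux + distill_weight * l_distill
```

For example, `cross_attend([[1.0, 0.0]], [[0.0, 1.0]], [[2.0, 3.0]])` returns `[[3.0, 3.0]]`: with a single image token the attention weight is 1, so the residual simply adds that token's value to the voxel feature. The auxiliary term in `total_loss` gives the shallow layers a direct gradient instead of one that must pass through the whole cascade.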

- SemanticKITTI

- Occ3D-nuScenes

- KITTI-360

- Occ3D-Waymo

| Backbone | Dataset | mIoU (Val) | mIoU (Test) | Download |
|---|---|---|---|---|
| R50 | SemanticKITTI | 14.01 | 13.80 | model |
| InternImage-XL | SemanticKITTI | 15.53 | 15.63 | model |
| R50 | nuScenes | 41.86 | -- | model |
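The mIoU figures in the table average the per-class intersection-over-union, IoU = TP / (TP + FP + FN), and report it as a percentage. A minimal sketch of that metric over flattened voxel labels is given below, assuming predictions are class indices in `[0, num_classes)` and that label 255 marks voxels excluded from evaluation. The function name and the `ignore_index` default are hypothetical, and the official benchmark scripts may differ in details such as which classes enter the average.

```python
def mean_iou(preds, labels, num_classes, ignore_index=255):
    """Mean IoU in percent over classes that appear in preds or labels."""
    tp = [0] * num_classes
    fp = [0] * num_classes
    fn = [0] * num_classes
    for p, y in zip(preds, labels):
        if y == ignore_index:
            continue  # voxel excluded from evaluation
        if p == y:
            tp[y] += 1
        else:
            fp[p] += 1  # predicted class p where it is not present
            fn[y] += 1  # missed the true class y
    ious = []
    for c in range(num_classes):
        denom = tp[c] + fp[c] + fn[c]
        if denom > 0:  # skip classes absent from both prediction and ground truth
            ious.append(tp[c] / denom)
    if not ious:
        return 0.0
    return 100.0 * sum(ious) / len(ious)
```

For example, `mean_iou([0, 0, 1, 1], [0, 1, 1, 255], 2)` gives `50.0`: the last voxel is ignored, and each class then has one true positive and one error, so both IoUs are 0.5.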

Many thanks to these excellent open-source projects: