This is an example project intended to showcase how:

- OpenTelemetry can be used to instrument existing frontend libraries or applications with metrics and tracing

- OpenTelemetry can be used to instrument distributed tracing and metrics across frontend requests and backend services

- Tracing, conceptually, can be used (abused?) in video players to observe end-to-end adaptive bitrate behavior (e.g., segment fetch) over the lifecycle of playback

- OpenTelemetry can be practically used with frontend JavaScript, backend NGINX, and Elastic APM to instrument a real-world use case of moderate complexity

- Use of a unified Tracing and Metrics observability solution (Elastic APM) enables a wealth of rich visualization, machine learning, and alerting tools for observing user video playback

flowchart TB

subgraph Backend

NGINX == OTLP ==> OTC[OpenTelemetry Collector]

OTC == OTLP ==> EA[Elastic APM]

end

subgraph Frontend

Video.js == HLS+Context ==> NGINX

Video.js == OTLP ==> OTC[OpenTelemetry Collector]

end

- Install Docker

- Set up a (free) Elastic Cloud trial or, if you prefer, download our Docker images to run an Elastic cluster locally

- If using Elastic Cloud, there are no specific requirements on the deployment (all deployments include the required Integration Server)

- Clone this repository

- Set up the Elastic APM integration

- Launch Kibana on your new deployment

- Navigate to `/app/apm` on your Kibana instance

- Click `Add data`

- Copy `serverUrl` from `APM Agents` > `Node.js` > `Configure the agent`

- Copy `secretToken` from `APM Agents` > `Node.js` > `Configure the agent`

- Create an `apm.env` file in the root of this repository with your APM `serverUrl` and `secretToken`:

      ELASTIC_APM_SERVER_URL="(serverUrl)"
      ELASTIC_APM_SECRET_TOKEN="(secretToken)"

- Run `docker compose build` in the root of this repository

- Run `docker compose up` in the root of this repository

- Start video playback by navigating to http://127.0.0.1:8090/

- Launch Kibana on your Elastic stack

- Navigate to `/app/discover` on your Kibana instance

- Ensure `traces-apm*,...` is selected as your data view

- Ensure new trace and metric records are being received from both the frontend player and the NGINX backend

Given the dynamic nature of OpenTelemetry's path to a 1.0 release, I struggled to find working and practical examples of using OpenTelemetry for distributed tracing and metrics. The latter proved particularly challenging: what (working) metric examples existed tended to assume greenfield application development and were trivial in nature.

As a former digital video engineer, I was always frustrated by the lack of good open-source solutions for capturing ABR playback metrics and behaviors. While good/great proprietary options exist, I wanted a solution I could easily plug into a metrics backend of my choosing, one that I could customize with additional metadata, and one that I could integrate with tracing and other sources of observability.

With the recent availability of native OpenTelemetry support in Elastic's APM, NGINX's OpenTelemetry module, and OpenTelemetry for JavaScript, I wanted to see how far I could get toward building an open observability solution for Video.js video playback.

As an aside, I am also a big proponent of relegating the ubiquitous "log file" (arguably back) to a debugging role rather than having it carry production metrics. Logging is a fragile and inefficient way to record data. Given the historical lack of good cross-language, cross-platform open standards for transporting metrics and events, logging has generally carried the torch for production metrics. Fortunately, with the advent of OpenTelemetry, we are starting to see industry adoption of formal, standardized mechanisms to convey observability (see NGINX's support for OpenTelemetry).

Finally, I found myself wondering if it would make sense to model a user's video playback session as an overarching parent span with individual ABR segment retrievals as linked child spans (I have not seen this done elsewhere). Modeling a playback session as an overall Trace provides a nice means of associating all of the subsequent HTTPS GETs (both from a frontend and a backend perspective) with a user's overall playback session. Moreover, it allows you to avail yourself of the rich feature set offered by a distributed APM tracing solution (e.g., tail-based sampling, events, correlation with metrics, correlation between frontend and backend requests) without resorting to bespoke means of association.

As illustrated below, a parent span is created for the overall playback session. Each XHR request within Video.js VHS for an ABR segment becomes a child of that overarching playback span. Each of those Video.js segment request spans in turn creates a corresponding child span within NGINX.

flowchart TB

subgraph Player

TVP[Trace:Playback]-->TVS[Trace:GetSegment.N]

subgraph VideoJS

TVS-->TNS[Trace:GetSegment.N]

subgraph NGINX

TNS

end

end

end
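The hand-off from the player's segment span to the NGINX span shown above can be sketched with the W3C Trace Context `traceparent` header, which is the default propagation format in the OpenTelemetry JavaScript SDK. This is a minimal, dependency-free illustration rather than the code this demo runs: the helper names `formatTraceparent`, `parseTraceparent`, and `childOf` are hypothetical, and it assumes W3C propagation is enabled on both the player and NGINX.

```javascript
// Illustrative helpers for the W3C Trace Context `traceparent` header.
// Layout: version-traceId-parentSpanId-flags, all lowercase hex.
const TRACEPARENT_RE = /^([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$/;

function formatTraceparent({ traceId, spanId, sampled }) {
  return `00-${traceId}-${spanId}-${sampled ? "01" : "00"}`;
}

function parseTraceparent(header) {
  const match = TRACEPARENT_RE.exec(header.trim().toLowerCase());
  if (!match) return null;
  const [, version, traceId, spanId, flags] = match;
  // All-zero IDs are invalid per the specification.
  if (/^0+$/.test(traceId) || /^0+$/.test(spanId)) return null;
  return { version, traceId, spanId, sampled: (parseInt(flags, 16) & 1) === 1 };
}

// A child span keeps the trace ID and records the incoming span ID as its parent.
function childOf(parent, newSpanId) {
  return {
    traceId: parent.traceId,
    parentSpanId: parent.spanId,
    spanId: newSpanId,
    sampled: parent.sampled,
  };
}

// The player's segment-fetch span (IDs borrowed from the W3C spec example).
const segmentFetch = {
  traceId: "4bf92f3577b34da6a3ce929d0e0e4736",
  spanId: "00f067aa0ba902b7",
  sampled: true,
};
const header = formatTraceparent(segmentFetch);

// What a server such as NGINX would derive from the incoming header.
const serverSpan = childOf(parseTraceparent(header), "b7ad6b7169203331");

console.log(header);
console.log(serverSpan);
```

Because the trace ID is carried unchanged, every segment fetch and its server-side counterpart end up in the same trace as the playback span.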

- Start video playback by navigating to http://127.0.0.1:8090/

- Launch Kibana on your Elastic stack

- Navigate to `/app/apm` on your Kibana instance

- Select `APM` > `Service Map` to see the linkage between the frontend (videojs-player) and the backend (nginx-proxy)

In our trivial example, the services mapped out as expected. You can imagine chaining other operations onto the NGINX backend side of the ABR segment fetch (e.g., authorization) which could also be traced and linked to APM.

- Start video playback by navigating to http://127.0.0.1:8090/

- Launch Kibana on your Elastic stack

- Navigate to `/app/apm` on your Kibana instance

- Select `APM` > `Traces` > `play` to see the linkage between the frontend (videojs-player) and the backend (nginx-proxy)

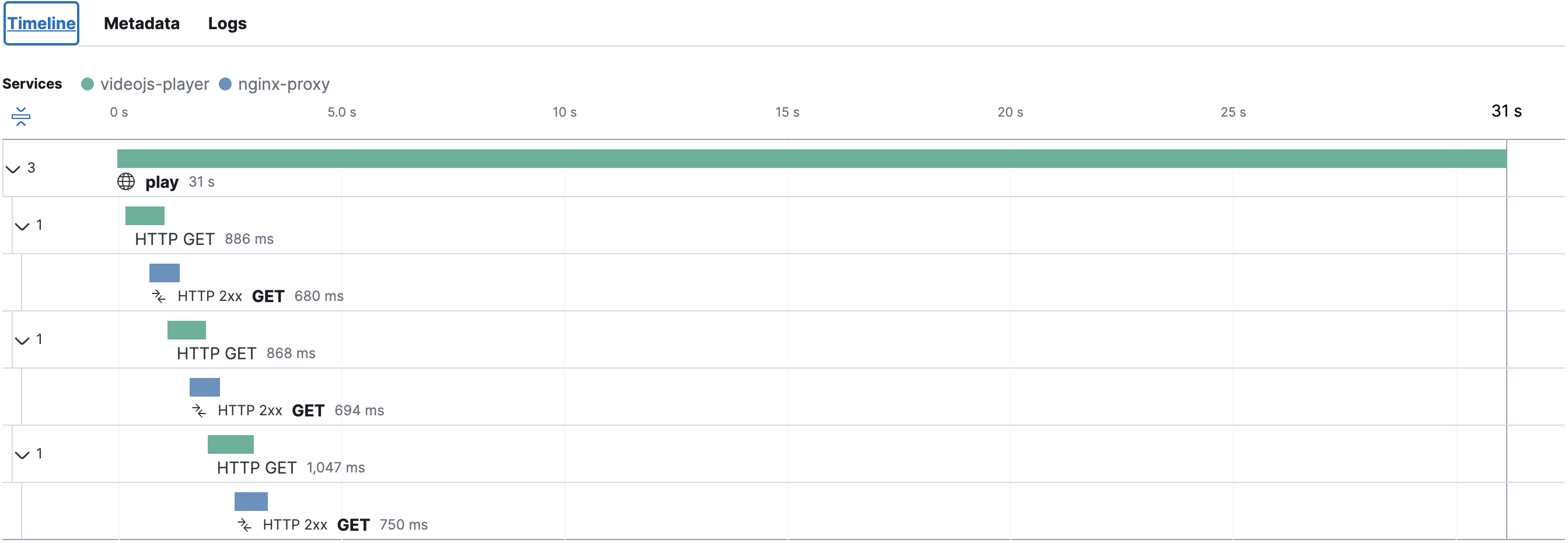

Our sample video is truncated to 30 s and consists of three ~10 s segments. Note that we can easily visualize the timing of the individual Video.js ABR segment fetch operations (HTTP GET) as part of the overall play trace. Further, we see the ensuing NGINX proxy operation for each segment as a child of each Video.js ABR segment fetch operation. We can immediately see that, as expected, the vast majority of the latency in delivering ABR segments to the frontend comes from our proxy operation. We can also see that, for a 30 s playout, Video.js front-loaded all three segments. For a longer playout, we would expect staggered groupings of ABR segment fetch operations.

If you can put your backend on the public Internet for testing, client.ip in your traces will be populated with a valid public IP, which in turn can be reverse geocoded.

- Start video playback by navigating to http://127.0.0.1:8090/

- Launch Kibana on your Elastic stack

- Navigate to `/app/maps` on your Kibana instance

- Select `Add layer`

- Select `Documents`

- Set `Data view` to `traces-apm*,...`

- Set `client.geo.location` as the `Geospatial field`

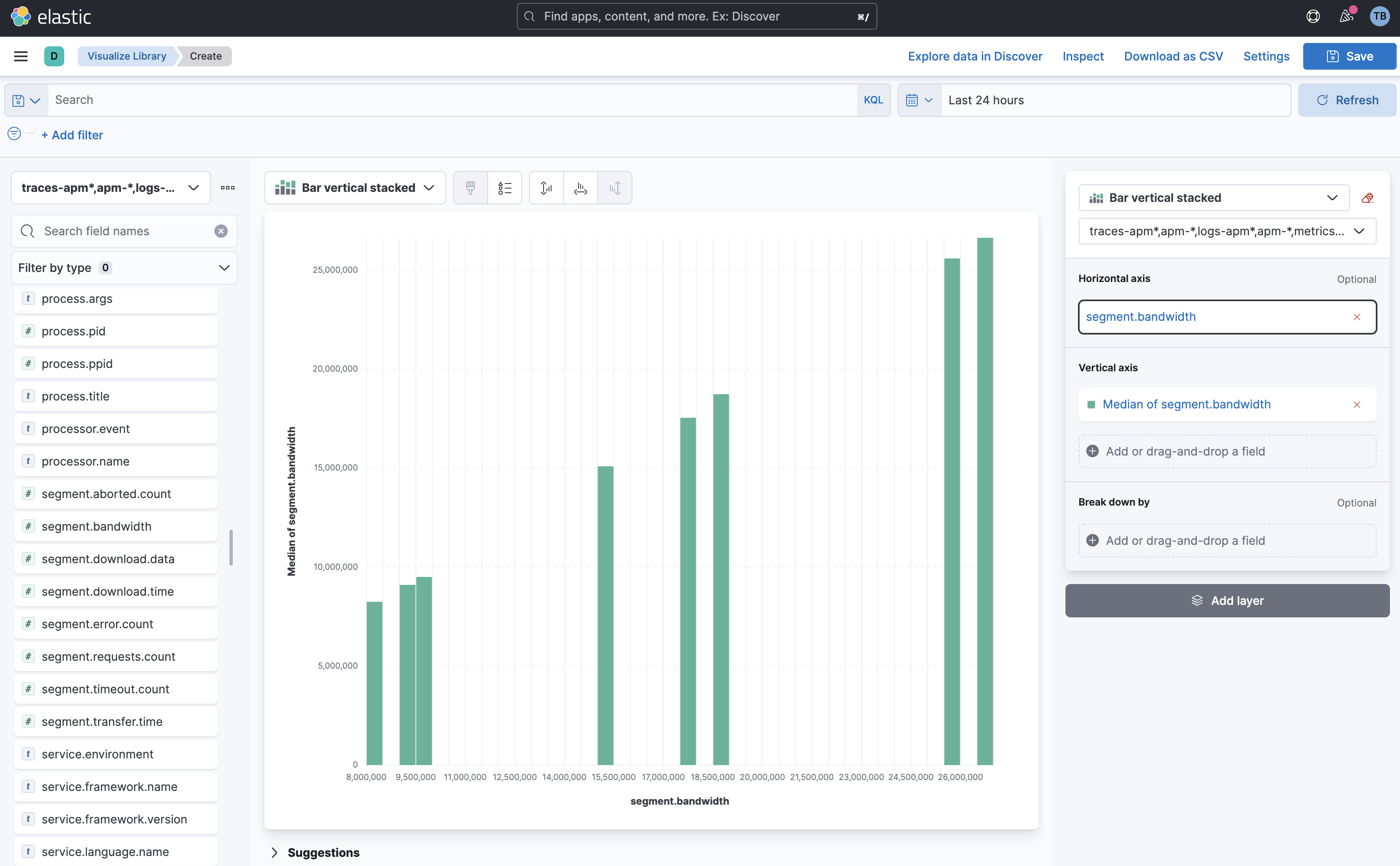
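When the demo runs entirely on a local machine, `client.ip` is likely to be a loopback or private address, which cannot be placed on a map. As a rough sketch of that distinction, a check might look like the following. The helper name `isPublicIPv4` is hypothetical, it handles IPv4 only, and it covers only the most common non-routable ranges.

```javascript
// Hypothetical helper: true when an IPv4 address falls outside the common
// private, loopback, and link-local ranges, making it a geolocation candidate.
function isPublicIPv4(address) {
  const parts = address.split(".");
  if (parts.length !== 4) return false;

  // Each part must be 1-3 digits and no larger than 255.
  const octets = parts.map((p) => (/^\d{1,3}$/.test(p) ? Number(p) : NaN));
  if (octets.some((o) => Number.isNaN(o) || o > 255)) return false;

  const [a, b] = octets;
  if (a === 0) return false;                          // 0.0.0.0/8 ("this network")
  if (a === 10) return false;                         // 10.0.0.0/8 (private)
  if (a === 127) return false;                        // 127.0.0.0/8 (loopback)
  if (a === 169 && b === 254) return false;           // 169.254.0.0/16 (link-local)
  if (a === 172 && b >= 16 && b <= 31) return false;  // 172.16.0.0/12 (private)
  if (a === 192 && b === 168) return false;           // 192.168.0.0/16 (private)
  return true;
}

for (const ip of ["127.0.0.1", "172.17.0.2", "192.168.1.20", "8.8.8.8"]) {
  console.log(ip, isPublicIPv4(ip) ? "public" : "not geolocatable");
}
```

Addresses in the private ranges are what you would typically see when the player and NGINX share a Docker network, which is why the map stays empty until the backend is reachable from the public Internet.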

Elastic makes it easy to build out rich visualizations and dashboards custom designed for your market and application. All of the metrics we are capturing (including metrics inherently created by tracing) can be easily visualized in Kibana using Lens.

As an example, say we wanted a histogram of the bandwidth (in bits/sec) clients were achieving as they downloaded ABR segments. To graph this, we can do the following:

- Launch Kibana on your Elastic stack

- Navigate to `/app/visualize` on your Kibana instance

- Select `Create new visualization`

- Select `Lens`

- Click the calendar icon in the upper-right and select a larger time frame (e.g., `Last 24 hours`)

- Find `segment.bandwidth` in the list of fields on the left

- Drag `segment.bandwidth` to the `Horizontal Axis`

- Drag `segment.bandwidth` to the `Vertical Axis`

The resulting graph should look something like the following:

sequenceDiagram

actor U as User

participant P as Player (Video.js)

participant S as Content Server (NGINX)

participant C as OpenTelemetry Collector

participant A as Elastic APM

U->>P: Play

P->>P: [Start Trace] Playback

activate P

P->>C: Metrics(Start) / OTLP

C->>A: Metrics(Start) / OTLP

loop Get ABR Segments

P->>P: [Start Trace] GET

activate P

P->>S: GET Segment + Context/ HTTPS

S->>S: [Start Trace] GET

activate S

S->>P: ABR Segment / HTTPS

S->>C: Traces(GET) / OTLP

deactivate S

C->>A: Traces(GET) / OTLP

P->>C: Traces(GET) / OTLP

deactivate P

C->>A: Traces(GET) / OTLP

P->>C: Metrics(Tracking) / OTLP

C->>A: Metrics(Tracking) / OTLP

end

P->>C: Metrics(Performance) / OTLP

C->>A: Metrics(Performance) / OTLP

P->>C: Traces(Playback) / OTLP

deactivate P

C->>A: Traces(Playback) / OTLP

I used videojs-event-tracking as an "out of the box" means of obtaining periodic playback events and statistics from Video.js. This presented an interesting challenge: the plugin pushes statistics (a combination of counters and gauges) to a callback at the 25%, 50%, 75%, and 100% playout positions, whereas the OpenTelemetry Metrics API, at the time of writing, only supports Observable (async) Gauges. An Observable gauge is generally used to sample a data point at the moment the SDK collects metrics, rather than to push a data point whenever the application has one. That is a problem if you are interfacing with an existing library that itself pushes out metrics and events at specific intervals.

To work around this interface conflict, I use a global variable to store the latest set of metrics pushed by videojs-event-tracking, which I then observe (ideally immediately) using OpenTelemetry. That presented the next challenge: the OpenTelemetry Metrics SDK comes with a `PeriodicExportingMetricReader` designed to observe a gauge at regular, periodic intervals. In my case, however, I wanted to command OpenTelemetry to observe a gauge immediately and, further, to not observe that gauge again until videojs-event-tracking pushed a new set of values. To that end, I forked the existing `PeriodicExportingMetricReader` to create a new `OneShotExportingMetricReader` which, as the name implies, can be commanded (via a `forceFlush`) to observe a set of counters and gauges exactly once per flush.

Is there a better way to do this? Obviously, we could write our own Video.js plugin better suited for asynchronous observation. If there is a better way to use OpenTelemetry to observe gauges that are "pushed" to an application, I'm all ears!
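The "store the latest values, then observe them exactly once" workaround can be sketched without any SDK. Nothing below is OpenTelemetry API or the code in this repository: `OneShotBridge`, the metric names, and the `bufferCount` / `secondsWatched` fields are all hypothetical stand-ins chosen for illustration.

```javascript
// Stand-in for bridging a push-style plugin callback to pull-style observation.
class OneShotBridge {
  constructor(exportFn) {
    this.exportFn = exportFn;   // receives one batch of observed values
    this.observers = new Map(); // metric name -> function reading the stored stats
    this.latest = null;         // most recent stats pushed by the plugin
  }

  // Pull side: register a gauge-like callback that reads from the stored stats.
  observe(name, readFn) {
    this.observers.set(name, readFn);
  }

  // Push side: store the stats, then trigger exactly one collection.
  push(stats) {
    this.latest = stats;
    this.flush();
  }

  // Observe every registered metric once, then clear the stored stats so a
  // later flush cannot re-export stale values.
  flush() {
    if (this.latest === null) return;
    const batch = {};
    for (const [name, readFn] of this.observers) {
      batch[name] = readFn(this.latest);
    }
    this.latest = null;
    this.exportFn(batch);
  }
}

const exported = [];
const bridge = new OneShotBridge((batch) => exported.push(batch));
bridge.observe("player.buffer_count", (stats) => stats.bufferCount);
bridge.observe("player.watched_seconds", (stats) => stats.secondsWatched);

// Simulate the plugin reporting at the 25% and 50% playout positions.
bridge.push({ bufferCount: 0, secondsWatched: 7.5 });
bridge.push({ bufferCount: 1, secondsWatched: 15 });
bridge.flush(); // nothing new was pushed, so nothing is exported

console.log(exported);
```

The key design choice is clearing the stored stats after each collection, which is what keeps a value from being reported again before the plugin pushes a fresh one.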

I also needed to coax Video.js into emitting OpenTelemetry traces during ABR segment fetches. I was able to use instrumentation-xml-http-request to auto-instrument these requests, though I immediately ran into an issue: you need to set the Context for tracing so that OpenTelemetry knows whether a given request is a new parent span or a nested child span (e.g., part of my overall playback span). This presents a challenge since the Video.js ABR segment fetch happens asynchronously deep within the Video.js library. Since I knew instrumentation-xml-http-request already hooks XHR requests, I decided to hook `_createSpan` within instrumentation-xml-http-request so that I could set the appropriate context before a span is created.

src/index.js:

    // `context` and `trace` come from @opentelemetry/api; `xhrInstrumentation`
    // and `playerSpan` are defined elsewhere in this file.

    // Keep a reference to the original span factory so the hook can delegate to it.
    xhrInstrumentation.__createSpan = xhrInstrumentation._createSpan;

    xhrInstrumentation._createSpan = (xhr, url, method) => {
      if (playerSpan) {
        // Create the XHR span with the playback span as its parent.
        return context.with(trace.setSpan(context.active(), playerSpan), () =>
          xhrInstrumentation.__createSpan(xhr, url, method)
        );
      }
      // No playback span yet: fall back to the default behavior.
      return xhrInstrumentation.__createSpan(xhr, url, method);
    };
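Why wrapping the span factory in a context call changes the resulting parent can be seen in a stripped-down model. The sketch below is not OpenTelemetry's implementation: `withSpan`, `activeSpan`, and the `instrumentation` object are hypothetical stand-ins for a synchronous context manager and an auto-instrumentation library.

```javascript
// Minimal stand-in for a synchronous context manager.
const contextStack = [];
const activeSpan = () => contextStack[contextStack.length - 1] ?? null;

function withSpan(span, fn) {
  contextStack.push(span);
  try {
    return fn();
  } finally {
    contextStack.pop(); // always restore the previous context
  }
}

// Hypothetical instrumentation whose span factory reads the active span to
// decide the new span's parent.
const instrumentation = {
  createSpan(name) {
    const parent = activeSpan();
    return { name, parent: parent ? parent.name : null };
  },
};

// Hook: keep the original, then replace it with a wrapper that runs the
// original inside the playback span's context whenever one exists.
let playbackSpan = null;
const originalCreateSpan = instrumentation.createSpan.bind(instrumentation);
instrumentation.createSpan = (name) =>
  playbackSpan
    ? withSpan(playbackSpan, () => originalCreateSpan(name))
    : originalCreateSpan(name);

const beforePlay = instrumentation.createSpan("GET playlist.m3u8");
playbackSpan = { name: "play" };
const duringPlay = instrumentation.createSpan("GET segment-1.ts");

console.log(beforePlay.parent, duringPlay.parent); // null play
```

The library being hooked never needs to know about the playback span; it only sees a different active context at the moment it creates its own span.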

Finally, I wanted to propagate a few attributes across the distributed tracing between the frontend and the backend. Ideally, this is accomplished using Baggage. At present, however, it appears the NGINX OpenTelemetry module does not yet support Baggage. As a workaround, I used the player.tech().vhs.xhr.beforeRequest hook available within Video.js VHS to explicitly add several contextual headers to each ABR segment fetch which I could in turn retrieve and set as OpenTelemetry attributes from within NGINX:

src/index.js:

    player.tech().vhs.xhr.beforeRequest = function (options) {
      // Guard against requests that arrive without a headers object.
      options.headers = options.headers || {};
      options.headers["x-playback-instance-id"] = INSTANCE_ID;
      options.headers["x-playback-source-url"] = player.currentSrc();
      return options;
    };

nginx/nginx.conf:

    opentelemetry_attribute "player.instance.id" $http_x_playback_instance_id;
    opentelemetry_attribute "player.source.url" $http_x_playback_source_url;
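The link between the header names set in the player and the variables read in `nginx.conf` follows NGINX's `$http_<name>` convention: the header name is lowercased and its dashes become underscores. A small sketch of that mapping (the function name `nginxHeaderVariable` is hypothetical) may help when adding further headers:

```javascript
// Derive the NGINX variable that exposes a given request header.
function nginxHeaderVariable(headerName) {
  return "$http_" + headerName.toLowerCase().replace(/-/g, "_");
}

const playbackHeaders = ["x-playback-instance-id", "x-playback-source-url"];
for (const name of playbackHeaders) {
  console.log(`${name} -> ${nginxHeaderVariable(name)}`);
}
```

Any additional header added in `beforeRequest` can be surfaced as a span attribute the same way, by referencing the derived variable in another `opentelemetry_attribute` line.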

Here are some ideas for further improving this demo:

- Author a Video.js plugin that instruments more of the player functionality with a better asynchronous observability model

- Use Elastic Machine Learning to detect anomalies in the captured metrics

- Build out a dashboard of KPIs in Kibana

- Instrument and integrate tracing and metrics from additional backend microservices that comprise video playback sessions (e.g., authorization)

If the HLS playlist or segments are already cached locally in your browser, they will not be retrieved by Video.js VHS. Ideally, this too would be reported as a span (with a distinguishing status code), but instrumenting this would require changes to Video.js VHS.

Additionally, if your browser doesn't support MSE but rather uses native HLS playback (e.g., iOS on iPhones), I suspect (but have not confirmed) instrumentation-xml-http-request will not be triggered.

Yes! This is part of the magic of OpenTelemetry. You can easily duplicate or redirect traces and metrics from the OpenTelemetry Collector to any supported backend via its configuration file.

Data to Elastic APM is most easily secured using APM Secret Tokens carried in a gRPC header (along with the OTLP payload). Support for Bearer Tokens is only available (today) in the contributor build.

This demo is, of course, a toy meant to showcase the art of the possible using bleeding edge observability tools. As such, it would require considerable testing and tweaking to be appropriate for production use cases.

Can OpenTelemetry Agents send traces and metrics directly to Elastic APM without using an OpenTelemetry Collector?

Yes, this should be possible, though it is complicated by authentication. You obviously don't want to embed a bearer token in a web-based frontend application. Elastic APM does support anonymous agents (for RUM), though I'm not certain that will work with off-the-shelf OpenTelemetry OTLP/HTTP agents. Additionally, I don't believe the current version of the NGINX OpenTelemetry module supports bearer token authentication.

Apache License 2.0

Fork this, make it real, and build something awesome! If it's OSS, a credit/link back to this repository would be appreciated!