Unsupervised Hierarchical Semantic Segmentation with Multiview Cosegmentation and Clustering Transformers

By Tsung-Wei Ke, Jyh-Jing Hwang, Yunhui Guo, Xudong Wang and Stella X. Yu

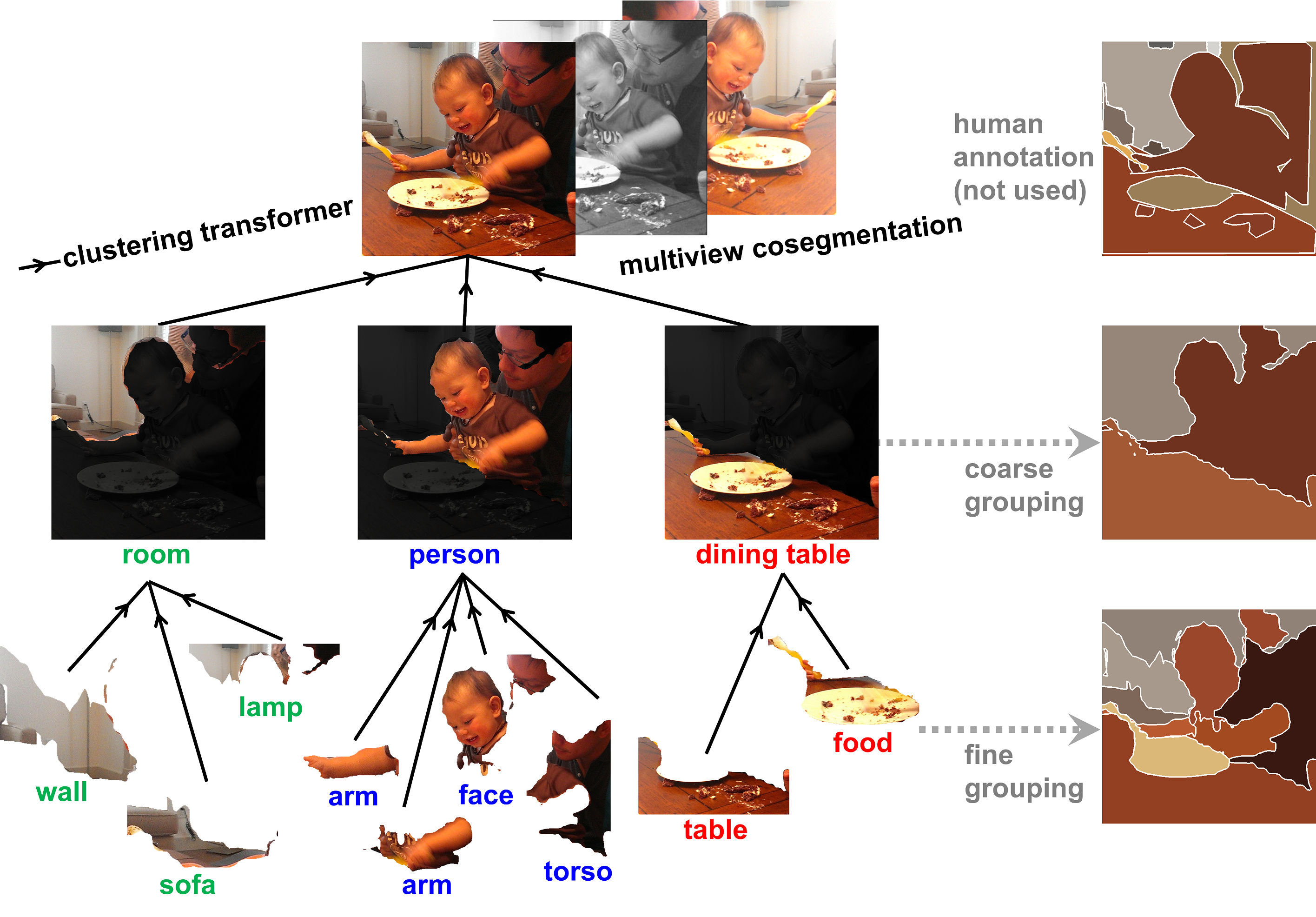

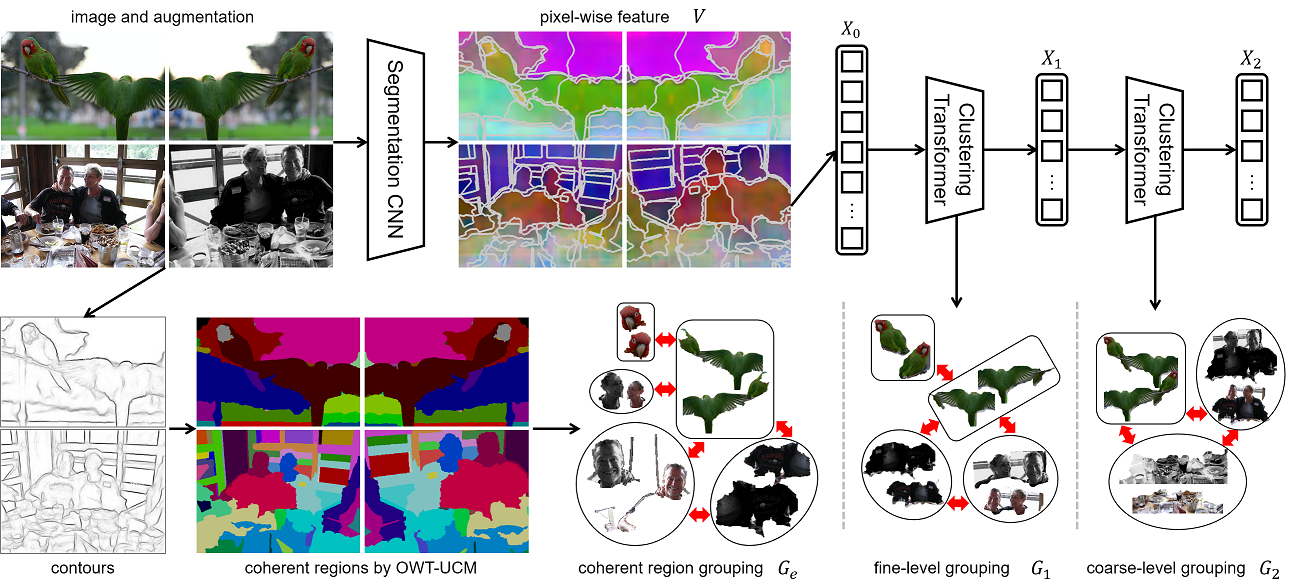

Unsupervised semantic segmentation aims to discover groupings within and across images that capture object- and view-invariance of a category without external supervision. Grouping naturally has levels of granularity, creating ambiguity in unsupervised segmentation. Existing methods avoid this ambiguity and treat it as a factor outside modeling, whereas we embrace it and desire hierarchical grouping consistency for unsupervised segmentation.

We approach unsupervised segmentation as a pixel-wise feature learning problem. Our idea is that a good representation shall reveal not just a particular level of grouping, but any level of grouping in a consistent and predictable manner. We enforce spatial consistency of grouping and bootstrap feature learning with co-segmentation among multiple views of the same image, and enforce semantic consistency across the grouping hierarchy with clustering transformers between coarse- and fine-grained features.
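To make "consistent grouping across levels" concrete, here is a toy NumPy sketch. It is not HSG's clustering transformer. It soft-assigns pixel features to fine centroids, soft-assigns those fine centroids to coarse centroids, and composes the two assignments, so each pixel's coarse grouping follows from its fine grouping by construction. The function names, the squared-distance softmax, and the `temperature` parameter are illustrative assumptions.

```python
import numpy as np


def soft_assign(x, centroids, temperature=0.1):
    """Row i is a probability distribution of x[i] over the centroids."""
    # Squared Euclidean distance between every point and every centroid: (N, K).
    d2 = ((x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
    logits = -d2 / temperature
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    p = np.exp(logits)
    return p / p.sum(axis=1, keepdims=True)


def hierarchical_assign(pixels, fine_centroids, coarse_centroids, temperature=0.1):
    """Toy two-level grouping: pixels -> fine groups -> coarse groups."""
    p_fine = soft_assign(pixels, fine_centroids, temperature)  # (N, K_fine)
    p_fine_to_coarse = soft_assign(fine_centroids, coarse_centroids, temperature)  # (K_fine, K_coarse)
    # A product of row-stochastic matrices is row-stochastic, so every pixel
    # still has a valid distribution over the coarse groups.
    p_coarse = p_fine @ p_fine_to_coarse  # (N, K_coarse)
    return p_fine, p_coarse


pixels = np.array([[0.0, 0.0], [0.2, 0.0], [5.0, 5.0], [5.2, 5.0]])
fine = np.array([[0.0, 0.0], [0.2, 0.0], [5.0, 5.0], [5.2, 5.0]])
coarse = np.array([[0.1, 0.0], [5.1, 5.0]])
p_fine, p_coarse = hierarchical_assign(pixels, fine, coarse)
print(p_fine.argmax(axis=1), p_coarse.argmax(axis=1))  # [0 1 2 3] [0 0 1 1]
```

Four fine groups collapse into two coarse groups, and the coarse labels never contradict the fine ones because they are derived from them rather than predicted independently.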

We deliver the first data-driven unsupervised hierarchical semantic segmentation method called Hierarchical Segment Grouping (HSG). Capturing visual similarity and statistical co-occurrences, HSG also outperforms existing unsupervised segmentation methods by a large margin on five major object- and scene-centric benchmarks.

This code release is based on SPML (ICLR 2021).

- Linux

- Python3 (>=3.5)

- CUDA >= 9.2 and cuDNN >= 7.6

- PyTorch >= 1.6

- numpy

- scipy

- tqdm

- easydict == 1.9

- PyYAML

- PIL

- OpenCV
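A quick way to check an environment against the minimum versions above is sketched below. The helper names are hypothetical. The sketch compares numeric version tuples rather than raw strings, because string comparison ranks "1.10" below "1.6", and it avoids f-strings so that it also runs on Python 3.5.

```python
import re


def parse_version(v):
    """Extract the leading numeric components, e.g. '1.10.0+cu102' -> (1, 10, 0)."""
    m = re.match(r"\d+(?:\.\d+)*", v)
    if m is None:
        raise ValueError("unparseable version: %r" % (v,))
    return tuple(int(p) for p in m.group(0).split("."))


def meets_minimum(installed, minimum):
    """True if `installed` >= `minimum`, comparing numerically."""
    a, b = parse_version(installed), parse_version(minimum)
    # Pad the shorter tuple with zeros so '1.6' compares equal to '1.6.0'.
    n = max(len(a), len(b))
    a += (0,) * (n - len(a))
    b += (0,) * (n - len(b))
    return a >= b


if __name__ == "__main__":
    import sys
    print("Python >= 3.5:", sys.version_info[:2] >= (3, 5))
    try:
        import torch
        print("PyTorch >= 1.6:", meets_minimum(torch.__version__, "1.6"))
        # Both values are None on CPU-only builds.
        print("CUDA:", torch.version.cuda, "cuDNN:", torch.backends.cudnn.version())
    except ImportError:
        print("PyTorch is not installed")
```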

- Augmented PASCAL VOC training set from SBD. Download link provided by SegSort.

- Ground-truth semantic segmentation masks are reformatted as grayscale images. Download link provided by SegSort.

- (Optional) The over-segmentation masks are generated by combining contour detectors with gPb-owt-ucm. We use Structured Edge (SE) as the edge detector. You can download the SE-owt-ucm masks here and put them under the VOC2012/ folder.

- Dataset layout:

  ```
  $DATAROOT/VOCdevkit/
      |-------- sbd/
      |          |-------- dataset/
      |                       |-------- clsimg/
      |
      |-------- VOC2012/
                 |-------- JPEGImages/
                 |-------- segcls/
                 |-------- rf_0.25_48/
  ```
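Before training, it can help to check that `$DATAROOT` matches the PASCAL VOC layout above. A minimal standard-library sketch follows. `VOC_LAYOUT` is transcribed from the tree above (assuming `clsimg/` sits inside `sbd/dataset/`), and `missing_dirs` is a hypothetical helper, not part of this repository. The same helper works for the MSCOCO and Cityscapes layouts with a different list of paths.

```python
import os

# Directories expected under $DATAROOT, transcribed from the PASCAL VOC tree above.
VOC_LAYOUT = [
    "VOCdevkit/sbd/dataset/clsimg",
    "VOCdevkit/VOC2012/JPEGImages",
    "VOCdevkit/VOC2012/segcls",
    "VOCdevkit/VOC2012/rf_0.25_48",  # optional SE-owt-ucm masks
]


def missing_dirs(dataroot, layout):
    """Return the entries of `layout` that are not directories under `dataroot`."""
    return [d for d in layout if not os.path.isdir(os.path.join(dataroot, d))]


if __name__ == "__main__":
    root = os.environ.get("DATAROOT", ".")
    missing = missing_dirs(root, VOC_LAYOUT)
    print("missing:", ", ".join(missing) if missing else "none")
```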

- Images from MSCOCO. Download 2017 Train Images (here).

- The over-segmentation masks are generated by combining contour detectors with gPb-owt-ucm. We use Structured Edge (SE) as the edge detector. You can download the SE-owt-ucm masks here and put them under the seginst/rf_0.25_48 folder.

- Dataset layout:

  ```
  $DATAROOT/MSCOCO/
      |-------- images/
      |          |-------- train2017/
      |
      |-------- seginst/
                 |-------- rf_0.25_48/
                            |-------- train2017/
  ```
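The over-segmentation masks partition each image into small regions. One common way to use such regions is to average per-pixel features inside each segment. The NumPy sketch below assumes the mask has already been loaded as a 2D integer array of segment IDs (the on-disk format is not described here); it is a generic illustration, not necessarily how HSG consumes the masks, and `segment_mean_features` is a hypothetical name.

```python
import numpy as np


def segment_mean_features(features, segments):
    """Average per-pixel features inside each over-segmentation region.

    features: (H, W, C) float array of per-pixel embeddings.
    segments: (H, W) integer array of segment IDs.
    Returns the sorted segment IDs and a (num_segments, C) array of mean features.
    """
    channels = features.shape[-1]
    flat_features = features.reshape(-1, channels)
    # inverse[i] is the row index (into ids) of pixel i's segment.
    ids, inverse = np.unique(segments.reshape(-1), return_inverse=True)
    sums = np.zeros((len(ids), channels), dtype=np.float64)
    np.add.at(sums, inverse, flat_features)  # unbuffered scatter-add per segment
    counts = np.bincount(inverse, minlength=len(ids))
    return ids, sums / counts[:, None]


features = np.arange(8, dtype=np.float64).reshape(2, 2, 2)
segments = np.array([[3, 3], [7, 7]])
ids, means = segment_mean_features(features, segments)
print(ids, means)  # ids [3 7]; means rows [1, 2] and [5, 6]
```

`np.add.at` is used instead of `sums[inverse] += ...` because fancy-index assignment would keep only one contribution per repeated segment ID.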

- Images from Cityscapes. Download leftImg8bit_trainvaltest.zip.

- The over-segmentation masks are generated by combining contour detectors with gPb-owt-ucm. We use the Pointwise Mutual Information (PMI) edge detector. You can download the PMI-owt-ucm masks here and put them under the seginst/ folder.

- Ground-truth semantic segmentation masks are reformatted as grayscale images. Download here.

- Dataset layout:

  ```
  $DATAROOT/Cityscapes/
      |-------- leftImg8bit/
      |          |-------- train/
      |          |-------- val/
      |
      |-------- gtFineId/
      |          |-------- train/
      |          |-------- val/
      |
      |-------- seginst/
                 |-------- pmi_0.05/
  ```
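The owt-ucm pipeline produces an ultrametric contour map (UCM) whose boundary strengths form a hierarchy: cutting it at a lower threshold keeps more boundaries and yields finer regions. The sketch below illustrates that cut with `scipy.ndimage.label` on a same-resolution boundary map. Reading the `0.05` in `pmi_0.05` (or the `0.25` in `rf_0.25_48`) as such a threshold is an assumption, and real UCMs are often stored at a different resolution, so treat this as a conceptual illustration rather than the script that produced the released masks.

```python
import numpy as np
from scipy import ndimage


def ucm_to_segments(ucm, threshold):
    """Cut a boundary-strength map at `threshold` and label the resulting regions.

    Pixels with strength below the threshold are grouped into 4-connected
    regions labeled 1..num_regions; pixels on stronger boundaries get label 0.
    """
    interior = ucm < threshold
    # The default structuring element in 2D is 4-connectivity (a cross).
    labels, num_regions = ndimage.label(interior)
    return labels, num_regions


# A 3x3 map with one strong vertical boundary down the middle column.
ucm = np.array([[0.0, 0.9, 0.0],
                [0.0, 0.9, 0.0],
                [0.0, 0.9, 0.0]])
print(ucm_to_segments(ucm, 0.5)[1])   # boundary kept: 2 regions
print(ucm_to_segments(ucm, 0.95)[1])  # boundary removed: 1 region
```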

See tools/README.md for details.

We provide the download links for our HSG models trained on MSCOCO and tested on PASCAL VOC. All models are trained in two stages: the first stage uses a larger batch size (128 or 192) with a smaller image size (224x224), and the second stage uses a smaller batch size (48) with a larger image size (448x448). We summarize the performance as follows.

| Batch size (first stage) | PASCAL VOC val mIoU (%) |
|---|---|
| 128 | 42.2 |
| 192 | 43.7 |
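The two-stage recipe trades batch size for image size. The sketch below records it as plain Python data and compares the number of pixels per batch. The `Stage` tuple and the function names are hypothetical and do not mirror the configuration keys used in `bashscripts/`.

```python
from collections import namedtuple

# Hypothetical record of one training stage (not the repository's config format).
Stage = namedtuple("Stage", ["name", "batch_size", "crop_size"])


def two_stage_schedule(first_stage_batch_size=128):
    """Two stages as described in this README: small crops first, large crops second."""
    if first_stage_batch_size not in (128, 192):
        raise ValueError("the released MSCOCO models use 128 or 192 in the first stage")
    return [
        Stage("stage1", first_stage_batch_size, (224, 224)),
        Stage("stage2", 48, (448, 448)),
    ]


def pixels_per_batch(stage):
    height, width = stage.crop_size
    return stage.batch_size * height * width


for stage in two_stage_schedule(128):
    print(stage.name, stage.batch_size, stage.crop_size, pixels_per_batch(stage))
```

With a first-stage batch size of 128, the first stage sees 6,422,528 pixels per batch versus 9,633,792 in the second; with 192, both stages see the same 9,633,792.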

We provide the download link for our HSG model trained and tested on Cityscapes. We use only the finely annotated images for training and testing (2975 and 500 images, respectively). You can download our trained model here, which achieves 32.4% mIoU on Cityscapes.
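The 32.4% figure above is a mean intersection-over-union (mIoU). A minimal NumPy sketch of the standard computation follows. It assumes predictions have already been mapped to ground-truth class IDs in `[0, num_classes)` (how HSG maps its unsupervised segments to classes is not described in this README) and that label 255 marks ignored pixels, a common convention that may not match the reformatted masks.

```python
import numpy as np


def confusion_matrix(pred, gt, num_classes, ignore_index=255):
    """Rows index ground-truth classes, columns index predicted classes."""
    valid = gt != ignore_index
    idx = num_classes * gt[valid].astype(np.int64) + pred[valid].astype(np.int64)
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)


def mean_iou(conf):
    """IoU_c = TP / (TP + FP + FN); classes absent from both pred and gt are skipped."""
    tp = np.diag(conf).astype(np.float64)
    fp = conf.sum(axis=0) - tp  # predicted as c, labeled otherwise
    fn = conf.sum(axis=1) - tp  # labeled c, predicted otherwise
    denom = tp + fp + fn
    iou = np.where(denom > 0, tp / np.maximum(denom, 1), np.nan)
    return np.nanmean(iou), iou


gt = np.array([[0, 0], [1, 255]])
pred = np.array([[0, 1], [1, 1]])
miou, per_class = mean_iou(confusion_matrix(pred, gt, num_classes=2))
print(miou, per_class)  # 0.5 [0.5 0.5]
```

For a whole validation set, sum the per-image confusion matrices first and call `mean_iou` once, rather than averaging per-image mIoU values.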

- HSG on MSCOCO:

  ```bash
  source bashscripts/coco/train.sh
  ```

- HSG on Cityscapes:

  ```bash
  source bashscripts/cityscapes/train.sh
  ```

If you find this code useful for your research, please consider citing our paper Unsupervised Hierarchical Semantic Segmentation with Multiview Cosegmentation and Clustering Transformers.

```
@inproceedings{ke2022hsg,
  title={Unsupervised Hierarchical Semantic Segmentation with Multiview Cosegmentation and Clustering Transformers},
  author={Ke, Tsung-Wei and Hwang, Jyh-Jing and Guo, Yunhui and Wang, Xudong and Yu, Stella X.},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={},
  year={2022}
}
```

HSG is released under the MIT License (refer to the LICENSE file for details).