A PyTorch implementation of "FC4: Fully Convolutional Color Constancy with Confidence-weighted Pooling".

The original code for the FC4 method is quite outdated (it is based on Python 2 and an outdated version of TensorFlow). This is an attempt to provide a clean and modern Python 3-based re-implementation of that method using the PyTorch library.

Original resources:

This implementation of the FC4 method uses SqueezeNet. The SqueezeNet implementation is the one offered by PyTorch and features:

- SqueezeNet 1.0. Introduced in 'SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5MB model size'

- SqueezeNet 1.1 (2.4x less computation and slightly fewer parameters than 1.0, without sacrificing accuracy). Introduced in the official SqueezeNet repository.
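To see where SqueezeNet's small parameter count comes from, the arithmetic below counts the weights of one Fire module (a 1x1 "squeeze" convolution feeding parallel 1x1 and 3x3 "expand" convolutions whose outputs are concatenated) and compares it with a plain 3x3 convolution producing the same 128 output channels. The layer sizes follow the first Fire module of torchvision's SqueezeNet 1.0 (96 input channels, 16 squeeze, 64 + 64 expand); `conv_params` and `fire_params` are illustrative helpers, not part of this repository.

```python
def conv_params(in_ch: int, out_ch: int, k: int) -> int:
    """Weights plus biases of a k x k convolution."""
    return in_ch * out_ch * k * k + out_ch


def fire_params(in_ch: int, squeeze: int, expand1x1: int, expand3x3: int) -> int:
    """Parameters of a Fire module: a 1x1 squeeze conv followed by parallel
    1x1 and 3x3 expand convs (their outputs are concatenated)."""
    return (conv_params(in_ch, squeeze, 1)
            + conv_params(squeeze, expand1x1, 1)
            + conv_params(squeeze, expand3x3, 3))


# First Fire module of SqueezeNet 1.0 vs. a plain 3x3 conv with 128 outputs.
fire = fire_params(96, 16, 64, 64)
plain = conv_params(96, 128, 3)
print(fire, plain, round(plain / fire, 1))  # 11920 110720 9.3
```

The roughly 9x saving for this layer comes from squeezing to 16 channels before the expensive 3x3 filters and from replacing half of the expand filters with 1x1 ones.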

This project has been developed and tested using Python 3.8 and Torch > 1.7. Please install the required packages using `pip3 install -r requirements.txt`.

The device on which to run the method (either `cpu` or `cuda:x`) and the random seed for reproducibility can be set as global variables in `auxiliary/settings.py`.
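A minimal sketch of what these two settings might look like. The README does not name the variables, so `DEVICE_TYPE`, `RANDOM_SEED`, `is_valid_device`, and `seed_everything` are hypothetical names used only for illustration; check `auxiliary/settings.py` for the real ones.

```python
import random
import re

# Hypothetical names: the README only says a device and a seed are set here.
DEVICE_TYPE = "cuda:0"  # either "cpu" or "cuda:x", where x is a GPU index
RANDOM_SEED = 0


def is_valid_device(name: str) -> bool:
    """Accept only the two forms the README describes: 'cpu' or 'cuda:<index>'."""
    return re.fullmatch(r"cpu|cuda:\d+", name) is not None


def seed_everything(seed: int) -> None:
    """Seed Python's RNG. A PyTorch project would typically also call
    torch.manual_seed(seed) (and numpy.random.seed(seed) if NumPy is used)."""
    random.seed(seed)


assert is_valid_device(DEVICE_TYPE)
seed_everything(RANDOM_SEED)
```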

Note that this implementation allows for deactivating the confidence-weighted pooling, in which case a simpler summation pooling will be used. The usage of the confidence-weighted pooling can be configured by toggling the `USE_CONFIDENCE_WEIGHTED_POOLING` global variable in `auxiliary/settings.py`.
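The difference between the two pooling modes can be sketched in plain Python. As described in the FC4 paper, each output location provides a local RGB illuminant estimate plus a confidence value; confidence-weighted pooling normalizes each local estimate, weights it by its confidence, sums, and normalizes the result. The summation variant below assumes the three-channel outputs are simply summed and then normalized. This is a framework-free sketch with hypothetical function names, not the repository's PyTorch code, which operates on tensors.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def l2_normalize(v: Sequence[float]) -> Vec3:
    """Scale a 3-vector to unit length (a zero vector is returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return (v[0] / norm, v[1] / norm, v[2] / norm)


def confidence_weighted_pooling(patches: List[Tuple[float, float, float, float]]) -> Vec3:
    """Each item is (r, g, b, c) for one output location: the local RGB
    estimate is normalized, weighted by its confidence c, summed over all
    locations, and the sum is normalized again."""
    total = [0.0, 0.0, 0.0]
    for r, g, b, c in patches:
        unit = l2_normalize((r, g, b))
        for i in range(3):
            total[i] += c * unit[i]
    return l2_normalize(total)


def sum_pooling(patches: List[Tuple[float, float, float]]) -> Vec3:
    """Each item is a raw (r, g, b) output: plain sum, then normalize."""
    total = [sum(p[i] for p in patches) for i in range(3)]
    return l2_normalize(total)


# A confident reddish location and an unconfident greenish one.
print(confidence_weighted_pooling([(0.9, 0.1, 0.0, 1.0), (0.0, 1.0, 0.0, 0.0)]))
print(sum_pooling([(0.9, 0.1, 0.0), (0.0, 1.0, 0.0)]))
```

With confidence weighting, the unconfident location contributes nothing and the estimate stays reddish; with plain summation, both locations count equally.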

This implementation of FC4 has been tested against Shi's Re-processing of Gehler's Raw Color Checker Dataset. After downloading the data, please extract it and move the `images` and `coordinates` folders and the `folds.mat` file to the `dataset` folder.

To preprocess the dataset, run the following commands:

```bash
cd dataset
python3 img2npy.py
```

This will mask the ground truth in the images and save the preprocessed items in `.npy` format into a new folder called `preprocessed`. The script also saves a linearized version of the original and ground-truth-corrected images for better visualization.
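A ground-truth-corrected image is one where the measured illuminant has been removed. We have not checked exactly how `img2npy.py` computes it; a common approach, sketched here with a hypothetical helper `correct_with_illuminant`, is a diagonal (von Kries) correction that divides each channel by the matching component of the ground-truth illuminant, followed by a rescale to [0, 1] for display.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]


def correct_with_illuminant(pixels: List[Pixel], illuminant: Pixel) -> List[Pixel]:
    """Diagonal (von Kries) correction: divide each channel by the matching
    illuminant component, then rescale so the largest value becomes 1."""
    ir, ig, ib = illuminant
    corrected = [(r / ir, g / ig, b / ib) for r, g, b in pixels]
    peak = max(max(p) for p in corrected) or 1.0  # avoid dividing by zero
    return [(r / peak, g / peak, b / peak) for r, g, b in corrected]


# Two gray surfaces under a non-neutral illuminant become neutral.
print(correct_with_illuminant([(0.2, 0.4, 0.1), (0.4, 0.8, 0.2)], (0.5, 1.0, 0.25)))
# [(0.5, 0.5, 0.5), (1.0, 1.0, 1.0)]
```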

Pretrained models on the 3 benchmark folds of this dataset are available inside `trained_models.zip`. Those under `trained_models/fc4_cwp` are meant to be used with confidence-weighted pooling activated, while those under `trained_models/fc4_sum` are meant to be used with confidence-weighted pooling deactivated. All models come with a log of the training metrics and a dump of the network architecture.

To train the FC4 model, run `python3 train/train.py`. The training procedure can be configured by editing the values of the global variables at the beginning of the `train.py` file.
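The standard error measure in color constancy, which the FC4 paper also uses as its training loss, is the angular error between the estimated and ground-truth illuminant RGB vectors. A framework-free sketch follows; `angular_error_deg` is a hypothetical name, and we have not verified which metrics `train.py` logs.

```python
import math
from typing import Sequence


def angular_error_deg(pred: Sequence[float], target: Sequence[float]) -> float:
    """Angle in degrees between two RGB illuminant vectors. Vector length is
    ignored, so only the illuminant's color matters, not its intensity."""
    dot = sum(p * t for p, t in zip(pred, target))
    norms = math.sqrt(sum(p * p for p in pred)) * math.sqrt(sum(t * t for t in target))
    cos = max(-1.0, min(1.0, dot / norms))  # clamp rounding noise outside [-1, 1]
    return math.degrees(math.acos(cos))


print(angular_error_deg((1.0, 0.0, 0.0), (0.0, 1.0, 0.0)))
print(angular_error_deg((1.0, 1.0, 1.0), (2.0, 2.0, 2.0)))
```

The second call shows the scale invariance: two vectors pointing the same way have (near) zero error regardless of magnitude.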

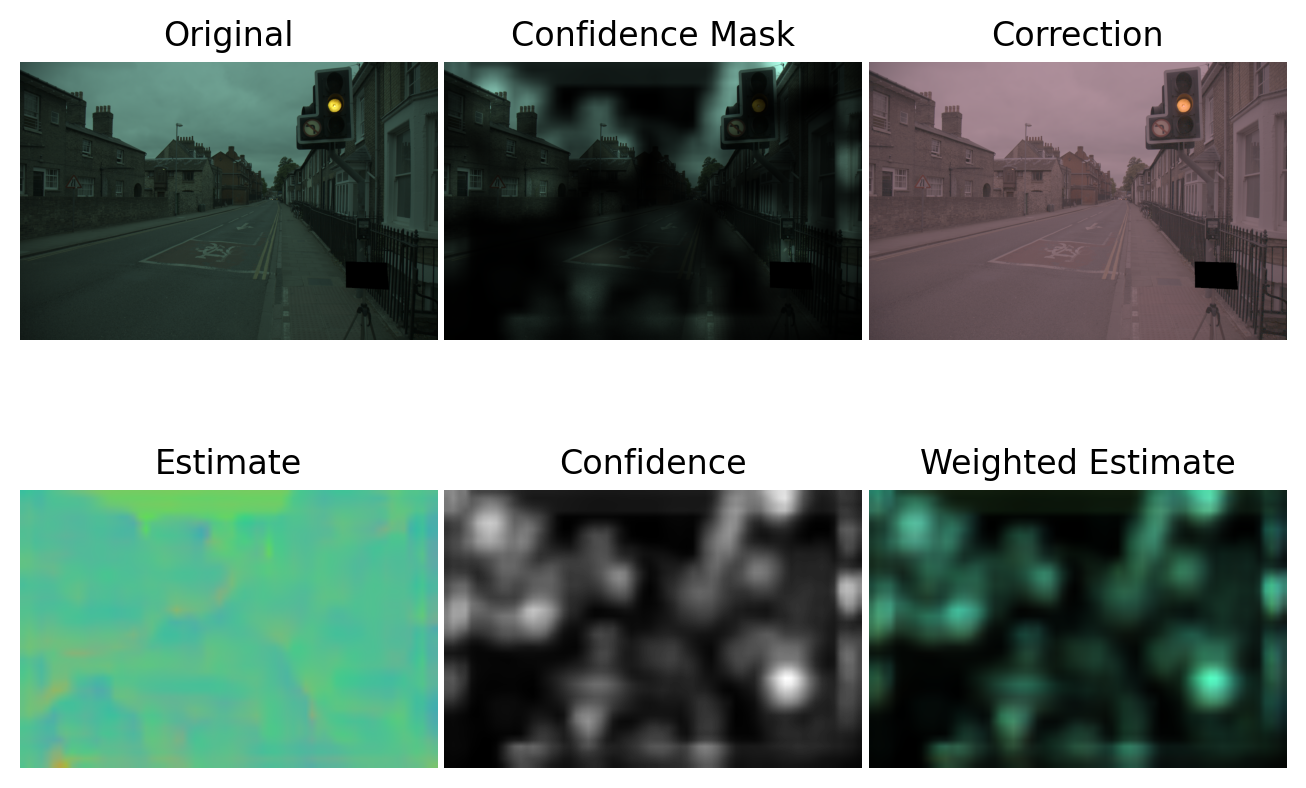

A subset of the images in the test set can be monitored at training time. A plot of the confidence for these images will be saved at each epoch, which can be used to generate GIF visualizations using `vis/make_gif.py`. Here is an example:

Note that monitoring images has an impact on training time. If you are not interested in monitoring images, just set `TEST_VIS_IMG = []` in `train.py`.

To test the FC4 model, run `python3 test/test.py`. The test procedure can be configured by editing the values of the global variables at the beginning of the `test.py` file.
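Color constancy papers typically summarize per-image angular errors over a test set with the mean, median, trimean, and the means of the best 25% and worst 25% of errors. The sketch below computes these under stated assumptions: quartiles use linear interpolation between ranks, and the best/worst subsets contain `len(errors) // 4` images (at least one). `percentile` and `summarize` are hypothetical helpers; `test.py` may use different conventions.

```python
import statistics
from typing import Dict, List, Sequence


def percentile(sorted_vals: Sequence[float], q: float) -> float:
    """Percentile with linear interpolation between ranks (q in [0, 1]);
    expects a non-empty, already sorted sequence."""
    k = (len(sorted_vals) - 1) * q
    lo = int(k)  # floor, since k is non-negative
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (sorted_vals[hi] - sorted_vals[lo]) * (k - lo)


def summarize(errors: List[float]) -> Dict[str, float]:
    """Common summary statistics over per-image angular errors (degrees)."""
    s = sorted(errors)
    q1, q2, q3 = (percentile(s, q) for q in (0.25, 0.5, 0.75))
    k = max(1, len(s) // 4)  # size of the best / worst 25% subsets
    return {
        "mean": statistics.fmean(s),
        "median": q2,
        "trimean": (q1 + 2 * q2 + q3) / 4,
        "best25": statistics.fmean(s[:k]),
        "worst25": statistics.fmean(s[-k:]),
    }


print(summarize([0.5, 1.0, 2.0, 8.0]))
```

The trimean weights the median twice and the two quartiles once each, so it is less sensitive than the mean to a few very large errors.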

To visualize the confidence weights learned by the FC4 model, run `python3 vis/visualize.py`. The procedure can be configured by editing the values of the global variables at the beginning of the `visualize.py` file.
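Raw confidence values have no fixed range, so before rendering them as a grayscale or heat-map image they are usually rescaled. A minimal sketch of one simple option, min-max scaling a 2-D map to [0, 1]; `normalize_confidence` is a hypothetical helper, and we have not verified that `visualize.py` does exactly this.

```python
from typing import List

Grid = List[List[float]]


def normalize_confidence(conf_map: Grid) -> Grid:
    """Min-max scale a 2-D confidence map (list of rows) to [0, 1].
    A constant map is returned as all zeros to avoid dividing by zero."""
    flat = [v for row in conf_map for v in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    if span == 0:
        return [[0.0 for _ in row] for row in conf_map]
    return [[(v - lo) / span for v in row] for row in conf_map]


print(normalize_confidence([[0.0, 2.0], [4.0, 8.0]]))
```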

This is the type of visualization produced by the script: