
OpenNext takes the Next.js build output and converts it into a package that can be deployed to any functions-as-a-service platform.

Features

OpenNext aims to support all Next.js 13 features. Some features are still a work in progress. If something does not work as expected, please open a new issue to let us know!

- API routes

- Dynamic routes

- Static site generation (SSG)

- Server-side rendering (SSR)

- Incremental static regeneration (ISR)

- Middleware

- Image optimization

- NextAuth.js

- Running at edge

How does OpenNext work?

When calling open-next build, OpenNext runs next build to build the Next.js app, and then transforms the build output to a format that can be deployed to AWS.

Building the Next.js app

OpenNext runs the build script in your package.json file. The package manager is chosen based on the lock file found in the app, so either npm run build, yarn build, or pnpm build will be run.
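As a rough illustration of the lock-file detection described above, the sketch below maps the files found in the app directory to a build command. The function name pickBuildCommand, the precedence between lock files, and the npm fallback are assumptions made for this example, not OpenNext's actual implementation.

```typescript
// Hypothetical helper: choose the build command from the lock file present.
// The precedence (pnpm, then yarn, then npm) is an assumption of this sketch.
type BuildCommand = "npm run build" | "yarn build" | "pnpm build";

function pickBuildCommand(filesInAppDir: string[]): BuildCommand {
  const has = (name: string): boolean => filesInAppDir.indexOf(name) !== -1;
  if (has("pnpm-lock.yaml")) return "pnpm build";
  if (has("yarn.lock")) return "yarn build";
  // package-lock.json, or no lock file at all: fall back to npm (assumed default).
  return "npm run build";
}

const chosenCommand = pickBuildCommand(["package.json", "yarn.lock", "next.config.js"]);
// chosenCommand === "yarn build"
```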

Transforming the build output

The build output is then transformed into a format that can be deployed to AWS. The transformed output is generated inside the .open-next folder within your Next.js app. Files in assets/ are ready to be uploaded to AWS S3, and the function code is wrapped inside Lambda handlers, ready to be deployed to AWS Lambda or Lambda@Edge.

my-next-app/
  .open-next/
    assets/ -> Static files to upload to an S3 Bucket
    server-function/ -> Handler code for server Lambda Function
    image-optimization-function/ -> Handler code for image optimization Lambda Function

Deployment

OpenNext allows you to deploy your Next.js apps using a growing list of frameworks.

SST

The easiest way to deploy OpenNext to AWS is with SST. This is maintained by the OpenNext team and only requires three simple steps:

- Run npx create-sst@latest in your Next.js app

- Run npm install

- Deploy to AWS with npx sst deploy

For more information, check out the SST docs: https://docs.sst.dev/start/nextjs

Other Frameworks

The OpenNext community has contributed deployment options for a few other frameworks.

- CDK: https://github.com/jetbridge/cdk-nextjs

- CloudFormation: sst#32

- Serverless Framework: sst#32

To use these, you'll need to run the following inside your Next.js app.

$ npx open-next@latest build

If you are using OpenNext to deploy using a framework that is not listed here, please let us know so we can add it to the list.

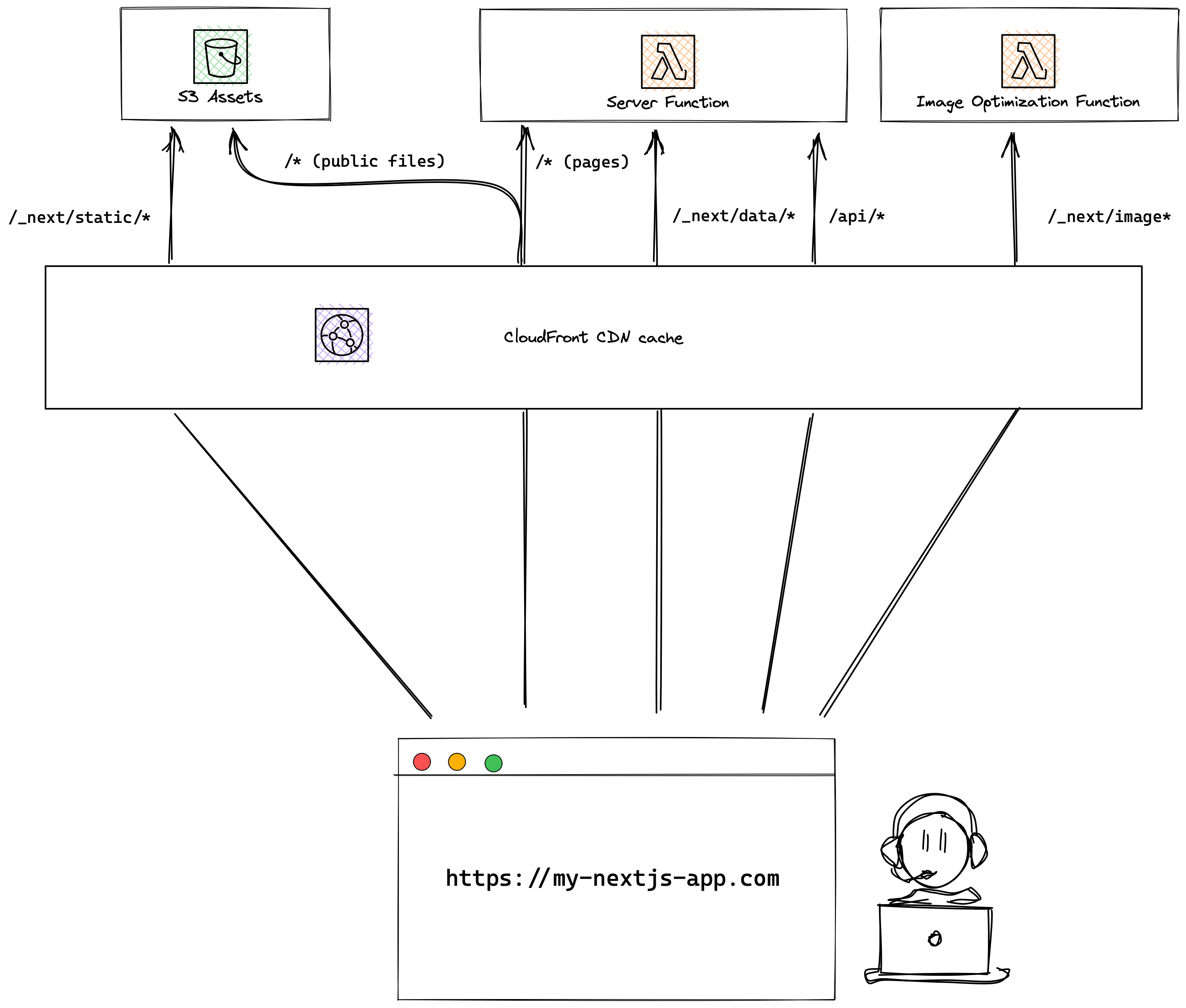

Recommended infrastructure on AWS

OpenNext does not create the underlying infrastructure. You can create the infrastructure for your app with your preferred tool — SST, AWS CDK, Terraform, Serverless Framework, etc.

This is the recommended setup.

Here are the recommended configurations for each AWS resource.

S3 bucket

Create an S3 bucket and upload the content in the .open-next/assets folder to the root of the bucket. For example, the file .open-next/assets/favicon.ico should be uploaded to /favicon.ico at the root of the bucket.

There are two types of files in the .open-next/assets folder:

Hashed files

These are files with a hash component in the file name. Hashed files can be found in the .open-next/assets/_next folder, such as .open-next/assets/_next/static/css/0275f6d90e7ad339.css. The hash values in the filenames are guaranteed to change when the content of the files is modified. Therefore, hashed files should be cached both at the CDN level and at the browser level. When uploading the hashed files to S3, the recommended cache control setting is

public,max-age=31536000,immutable

Un-hashed files

Other files inside the .open-next/assets folder are copied from your app's public/ folder, such as .open-next/assets/favicon.ico. The filename for un-hashed files may remain unchanged when the content is modified. Un-hashed files should be cached at the CDN level, but not at the browser level. When the content of un-hashed files is modified, the CDN cache should be invalidated on deploy. When uploading the un-hashed files to S3, the recommended cache control setting is

public,max-age=0,s-maxage=31536000,must-revalidate
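The two cache settings above can be applied mechanically at upload time. The sketch below is one possible way to pick the value from the object key; the helper name cacheControlForAsset is hypothetical, and it assumes keys are relative to the .open-next/assets folder.

```typescript
// Cache-Control values taken from the recommendations above.
const HASHED_CACHE_CONTROL = "public,max-age=31536000,immutable";
const UNHASHED_CACHE_CONTROL = "public,max-age=0,s-maxage=31536000,must-revalidate";

// Hypothetical helper: key is relative to .open-next/assets, for example
// "_next/static/css/0275f6d90e7ad339.css" or "favicon.ico".
function cacheControlForAsset(key: string): string {
  // Hashed files live under _next/; everything else was copied from public/.
  const normalized = key.replace(/^\/+/, "");
  return normalized.indexOf("_next/") === 0 ? HASHED_CACHE_CONTROL : UNHASHED_CACHE_CONTROL;
}

const cssHeader = cacheControlForAsset("_next/static/css/0275f6d90e7ad339.css");
const faviconHeader = cacheControlForAsset("favicon.ico");
```

Here cssHeader receives the immutable setting and faviconHeader the must-revalidate setting, so only the un-hashed files depend on a CDN invalidation at deploy time.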

Image optimization function

Create a Lambda function using the code in the .open-next/image-optimization-function folder, with the handler index.mjs. Also, ensure that the function is configured as follows:

- Set the architecture to arm64.

- Set the BUCKET_NAME environment variable with the value being the name of the S3 bucket where the original images are stored.

- Grant s3:GetObject permission.

This function handles image optimization requests when the Next.js <Image> component is used. The sharp library, which is bundled with the function, is used to convert the image. The library is compiled against the arm64 architecture and is intended to run on AWS Lambda Arm/Graviton2 architecture. Learn about the better cost-performance offered by AWS Graviton2 processors.

Note that the image optimization function responds with the Cache-Control header, so the image will be cached both at the CDN level and at the browser level.

Server Lambda function

Create a Lambda function using the code in the .open-next/server-function folder, with the handler index.mjs.

This function handles all other types of requests from the Next.js app, including Server-side Rendering (SSR) requests and API requests. OpenNext builds the Next.js app in standalone mode. The standalone mode generates a .next folder containing the NextServer class that handles requests and a node_modules folder with all the dependencies needed to run the NextServer. The structure looks like this:

.next/ -> NextServer

node_modules/ -> dependencies

The server function adapter wraps around NextServer and exports a handler function that supports the Lambda request and response. The server-function bundle looks like this:

  .next/ -> NextServer
+ .open-next/
+   public-files.json -> `/public` file listing
  node_modules/ -> dependencies
+ index.mjs -> server function adapter

The file public-files.json contains the top-level file and directory names in your app's public/ folder. At runtime, the server function will forward any requests made to these files and directories to S3. And S3 will serve them directly. See why below.

Monorepo

In the case of a monorepo, the build output looks slightly different. For example, if the app is located in packages/web, the build output looks like this:

packages/
  web/
    .next/ -> NextServer
    node_modules/ -> dependencies from root node_modules (optional)
node_modules/ -> dependencies from package node_modules

In this case, the server function adapter needs to be created inside packages/web next to .next/. This is to ensure that the adapter can import dependencies from both node_modules folders. It is not a good practice to have the Lambda configuration coupled with the project structure, so instead of setting the Lambda handler to packages/web/index.mjs, we will add a wrapper index.mjs at the server-function bundle root that re-exports the adapter. The resulting structure looks like this:

  packages/
    web/
      .next/ -> NextServer
+     .open-next/
+       public-files.json -> `/public` file listing
      node_modules/ -> dependencies from root node_modules (optional)
+     index.mjs -> server function adapter
  node_modules/ -> dependencies from package node_modules
+ index.mjs -> adapter wrapper

This ensures that the Lambda handler remains at index.mjs.

CloudFront distribution

Create a CloudFront distribution, and dispatch requests to their corresponding handlers (behaviors). The following behaviors are configured:

| Behavior | Requests | CloudFront Function | Origin |
|---|---|---|---|
| /_next/static/* | Hashed static files | - | S3 bucket |
| /_next/image | Image optimization | - | image optimization function |
| /_next/data/* | data requests | set x-forwarded-host (see why below) | server function |
| /api/* | API | set x-forwarded-host (see why below) | server function |
| /* | catch all | set x-forwarded-host (see why below) | server function, fallback to S3 bucket on 503 (see why below) |
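To make the dispatching concrete, the sketch below models how a request path would be matched against the behaviors listed above, with the first matching pattern winning. It is a simplified model for illustration only: the matcher handles just an exact path or a trailing wildcard, and the target names are labels invented for this example, not AWS resource identifiers.

```typescript
type Target = "s3" | "image-optimization-function" | "server-function";

// Behaviors in the order they would be evaluated; the catch-all comes last.
const behaviors: Array<{ pattern: string; target: Target }> = [
  { pattern: "/_next/static/*", target: "s3" },
  { pattern: "/_next/image", target: "image-optimization-function" },
  { pattern: "/_next/data/*", target: "server-function" },
  { pattern: "/api/*", target: "server-function" },
  { pattern: "/*", target: "server-function" }, // falls back to S3 on 503
];

// Simplified matcher: only an exact path or a trailing "*" wildcard is supported.
function matchesPattern(pattern: string, path: string): boolean {
  if (pattern.charAt(pattern.length - 1) === "*") {
    return path.indexOf(pattern.slice(0, -1)) === 0;
  }
  return path === pattern;
}

function targetFor(path: string): Target {
  for (const behavior of behaviors) {
    if (matchesPattern(behavior.pattern, path)) return behavior.target;
  }
  // Not reached for paths that start with "/", since "/*" matches them all.
  return "server-function";
}
```

For example, targetFor("/_next/static/css/0275f6d90e7ad339.css") yields the S3 bucket, while targetFor("/favicon.ico") falls through to the catch-all behavior and reaches the server function first.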

Running at edge

The server function can also run at edge locations by configuring it as Lambda@Edge on Origin Request. The server function can accept both regional request events (API payload version 2.0) and edge request events (CloudFront Origin Request payload). Depending on the shape of the Lambda event object, the function will process the request accordingly.

To configure the CloudFront distribution:

| Behavior | Requests | CloudFront Function | Lambda@Edge | Origin |
|---|---|---|---|---|
| /_next/static/* | Hashed static files | - | - | S3 bucket |
| /_next/image | Image optimization | - | - | image optimization function |
| /_next/data/* | data requests | set x-forwarded-host (see why below) | server function | - |
| /api/* | API | set x-forwarded-host (see why below) | server function | - |
| /* | catch all | set x-forwarded-host (see why below) | server function | S3 bucket (see why below) |
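The event-shape check mentioned in this section could look roughly like the sketch below. The interfaces keep only the fields needed here (real events carry many more), and the detection rule itself is an assumption for illustration rather than OpenNext's actual code.

```typescript
// Regional event: payload version 2.0 exposes the path as rawPath.
interface RegionalEvent {
  version: "2.0";
  rawPath: string;
}

// Edge event: CloudFront Origin Request wraps the request in Records[0].cf.
interface EdgeEvent {
  Records: [{ cf: { request: { uri: string } } }];
}

type ServerEvent = RegionalEvent | EdgeEvent;

// Type guard based on the presence of the Records property.
function isEdgeEvent(incoming: ServerEvent): incoming is EdgeEvent {
  return "Records" in incoming;
}

// Extract the request path regardless of where the function is running.
function requestPath(incoming: ServerEvent): string {
  return isEdgeEvent(incoming) ? incoming.Records[0].cf.request.uri : incoming.rawPath;
}
```

Branching once at the top of the handler keeps the rest of the request handling independent of where the function is deployed.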

Limitations and workarounds

WORKAROUND: public/ static files served by the server function (AWS specific)

As mentioned in the S3 bucket section, files in your app's public/ folder are static and are uploaded to the S3 bucket. Ideally, requests for these files should be handled by the S3 bucket, like so:

https://my-nextjs-app.com/favicon.ico

This requires the CloudFront distribution to have the behavior /favicon.ico and set the S3 bucket as the origin. However, CloudFront has a default limit of 25 behaviors per distribution, so it is not a scalable solution to create one behavior per file.

To work around the issue, requests for public/ files are handled by the catch all behavior /*. This behavior sends the request to the server function first, and if the server fails to handle the request, it will fall back to the S3 bucket.

During the build process, the top-level file and directory names in the public/ folder are saved to the .open-next/public-files.json file within the server function bundle. At runtime, the server function checks the request URL path against the file. If the request is made to a file in the public/ folder:

- When deployed to a single region (Lambda), the server function returns a 503 response right away, and S3, which is configured as the failover origin on 503 status code, will serve the file. Refer to the CloudFront setup.

- When deployed to the edge (Lambda@Edge), the server function returns the request object. And the request will be handled by S3, which is configured as the origin. Refer to the CloudFront setup.

This means that on cache miss, the request may take slightly longer to process.
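A minimal sketch of the runtime check described above follows. It assumes the file listing has already been parsed into an array of top-level names; the actual format of public-files.json may differ, and the function names are hypothetical.

```typescript
// Assumed shape: top-level names found in public/ (sample values for this sketch).
const publicTopLevelNames: string[] = ["favicon.ico", "images", "robots.txt"];

// True when the first path segment matches a top-level entry in public/.
function isPublicFileRequest(path: string, topLevelNames: string[]): boolean {
  const firstSegment = path.replace(/^\/+/, "").split("/")[0] || "";
  return firstSegment !== "" && topLevelNames.indexOf(firstSegment) !== -1;
}

// Regional (Lambda) mode: answer 503 so that CloudFront fails over to S3.
// Returns undefined when the request should be handled by NextServer instead.
function regionalStatusFor(path: string): number | undefined {
  return isPublicFileRequest(path, publicTopLevelNames) ? 503 : undefined;
}
```

Checking only the first path segment is what allows the listing to stay small: a request for /images/logo.png matches the images entry without every nested file being listed.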

WORKAROUND: Set x-forwarded-host header (AWS specific)

When the server function receives a request, the host value in the Lambda request header is set to the hostname of the AWS Lambda service instead of the actual frontend hostname. This creates an issue for the server function (middleware, SSR routes, or API routes) when it needs to know the frontend host.

To work around the issue, a CloudFront function is run on Viewer Request, which sets the frontend hostname as the x-forwarded-host header. The function code looks like this:

function handler(event) {
  var request = event.request;
  // Copy the viewer-facing host into x-forwarded-host before forwarding.
  request.headers["x-forwarded-host"] = request.headers.host;
  return request;
}

The server function then sets the host header of the request to the value of the x-forwarded-host header when sending the request to the NextServer.
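On the server function side, restoring the host could be sketched as follows. This assumes headers arrive as a flat map with lowercase names; the helper name restoreHostHeader is hypothetical and not taken from the OpenNext code base.

```typescript
type HeaderMap = Record<string, string>;

// Returns a copy of the headers in which "host" carries the frontend hostname
// forwarded by the CloudFront function, when that header is present.
function restoreHostHeader(headers: HeaderMap): HeaderMap {
  const forwardedHost = headers["x-forwarded-host"];
  if (!forwardedHost) return { ...headers };
  return { ...headers, host: forwardedHost };
}

const restored = restoreHostHeader({
  host: "example.lambda-url.us-east-1.on.aws", // placeholder Lambda hostname
  "x-forwarded-host": "my-nextjs-app.com",
});
// restored.host === "my-nextjs-app.com"
```

Returning a copy rather than mutating the incoming map keeps the original Lambda event intact for logging.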

WORKAROUND: NextServer does not set cache response headers for HTML pages

As mentioned in the Server function section, the server function uses the NextServer class from Next.js' build output to handle requests. However, NextServer does not seem to set the correct Cache-Control headers.

To work around the issue, the server function checks if the request is for an HTML page, and sets the Cache-Control header to:

public, max-age=0, s-maxage=31536000, must-revalidate
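One way to express this workaround is sketched below. How the server function recognizes an HTML page is not stated here, so the check on the response content-type is an assumption, as is the helper name withHtmlCacheControl.

```typescript
type HeaderMap = Record<string, string>;

const HTML_CACHE_CONTROL = "public, max-age=0, s-maxage=31536000, must-revalidate";

// Assumption: an HTML page is recognized by a text/html response content-type.
function withHtmlCacheControl(responseHeaders: HeaderMap): HeaderMap {
  const contentType = responseHeaders["content-type"] || "";
  if (contentType.indexOf("text/html") !== 0) return { ...responseHeaders };
  return { ...responseHeaders, "cache-control": HTML_CACHE_CONTROL };
}

const htmlHeaders = withHtmlCacheControl({ "content-type": "text/html; charset=utf-8" });
// htmlHeaders["cache-control"] is now the value shown above.
```

With this value, browsers revalidate on every visit while the CDN may keep the page until it is invalidated.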

WORKAROUND: Set NextServer working directory (AWS specific)

Next.js recommends using process.cwd() instead of __dirname to get the app directory. For example, consider a posts folder in your app with markdown files:

pages/
posts/
  my-post.md
public/
next.config.js
package.json

You can build the file path like this:

path.join(process.cwd(), "posts", "my-post.md");

As mentioned in the Server function section, in a non-monorepo setup, the server-function bundle looks like:

.next/
node_modules/
posts/
  my-post.md <- path is "posts/my-post.md"
index.mjs

In this case, path.join(process.cwd(), "posts", "my-post.md") resolves to the correct path.

However, when the user's app is inside a monorepo (i.e., at packages/web), the server-function bundle looks like:

packages/
  web/
    .next/
    node_modules/
    posts/
      my-post.md <- path is "packages/web/posts/my-post.md"
    index.mjs
node_modules/
index.mjs

In this case, path.join(process.cwd(), "posts", "my-post.md") cannot be resolved, because the working directory is the bundle root rather than packages/web.

To work around the issue, we change the working directory for the server function to where .next/ is located, i.e., packages/web.
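The directory to switch to can be derived from the bundle root and the app's location inside the monorepo. The sketch below only computes that path; the helper name serverWorkingDir is hypothetical, and /var/task is used as an example bundle root because that is where Lambda unpacks function code.

```typescript
// Hypothetical helper: directory that contains .next/ inside the bundle.
// appPath is "" for a non-monorepo app and e.g. "packages/web" in a monorepo.
function serverWorkingDir(bundleRoot: string, appPath: string): string {
  const root = bundleRoot.replace(/\/+$/, "");
  const relative = appPath.replace(/^\/+|\/+$/g, "");
  return relative === "" ? root || "/" : root + "/" + relative;
}

// In a Node.js handler this value would be passed to process.chdir() before
// NextServer starts, so that process.cwd() points at the app directory.
const monorepoCwd = serverWorkingDir("/var/task", "packages/web");
const singleAppCwd = serverWorkingDir("/var/task", "");
```

With the working directory set to monorepoCwd, joining process.cwd() with "posts" and "my-post.md" resolves inside packages/web again.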

Example

In the example folder, you can find a Next.js benchmark app. It contains a variety of pages that each test a single Next.js feature. The app is deployed to both Vercel and AWS using SST.

AWS link: https://d1gwt3w78t4dm3.cloudfront.net

Vercel link: https://open-next.vercel.app

Advanced usage

OPEN_NEXT_MINIFY

Enabling this option will minify all .js and .json files in the server function bundle using the node-minify library. This can reduce the size of the server function bundle by about 40%, depending on the size of your app. To enable it, simply run:

OPEN_NEXT_MINIFY=true open-next build

Enabling this option can significantly help to reduce the cold start time of the server function. However, it's an experimental feature, and you need to opt-in to use it. Once this option is thoroughly tested and found to be stable, it will be enabled by default.

Debugging

To find the logs for the server and image optimization functions, go to the AWS CloudWatch console in the region you deployed to.

If the server function is deployed to Lambda@Edge, the logs will appear in the region you are physically close to. For example, if you deployed your app to us-east-1 and you are visiting the app from London, the logs are likely to be in eu-west-2.

Debug mode

You can run OpenNext in debug mode by setting the OPEN_NEXT_DEBUG environment variable:

OPEN_NEXT_DEBUG=true npx open-next@latest build

This does a few things:

- Lambda handler functions in the build output will not be minified.

- Lambda handler functions in the build output have inline source maps enabled.

- Lambda handler functions will automatically console.log the request event object along with other debugging information.

It is recommended to turn off debug mode when building for production because:

- Un-minified function code is 2-3X larger than minified code. This will result in longer Lambda cold start times.

- Logging the event object on each request can result in a lot of logs being written to AWS CloudWatch. This will result in increased AWS costs.

Opening an issue

To help diagnose issues, it's always helpful to provide a reproducible setup when opening an issue. One easy way to do this is to create a pull request (PR) and add a new page to the benchmark app located in the example folder, which reproduces the issue. The PR will automatically deploy the app to AWS.

Contribute

To run OpenNext locally:

- Clone this repository.

- Build open-next:

  cd open-next
  pnpm build

- Run open-next in watch mode:

  pnpm dev

- Now, you can make changes in open-next and build your Next.js app to test the changes:

  cd path/to/my/nextjs/app
  path/to/open-next/packages/open-next/dist/index.js build

FAQ

Will my Next.js app behave the same as it does on Vercel?

OpenNext aims to deploy your Next.js app to AWS using services like CloudFront, S3, and Lambda. While Vercel uses some AWS services, it also has proprietary infrastructure, which naturally leaves a gap in feature parity. OpenNext aims to fill that gap.

One architectural difference is how middleware is run, but this should not affect the behavior of most apps.

On Vercel, the Next.js app is built in an undocumented way using the "minimalMode". The middleware code is separated from the server code and deployed to edge locations, while the server code is deployed to a single region. When a user makes a request, the middleware code runs first. Then the request reaches the CDN. If the request is cached, the cached response is returned; otherwise, the request hits the server function. This means that the middleware is called even for cached requests.

On the other hand, OpenNext uses the standard next build command, which generates a server function that includes the middleware code. This means that for cached requests, the CDN (CloudFront) will send back the cached response, and the middleware code is not run.

We previously built the app using the "minimalMode" and used the same architecture as Vercel, where the middleware code would run in Lambda@Edge on Viewer Request. See the vercel-mode branch. However, we decided that this architecture was not a good fit on AWS for a few reasons:

- Cold start - Running middleware and server in two separate Lambda functions results in double the latency.

- Maintenance - Because the "minimalMode" is not documented, there will likely be unhandled edge cases, and triaging would require constant reverse engineering of Vercel's code base.

- Feature parity - Lambda@Edge functions triggered on Viewer Request do not have access to geolocation headers, which affects i18n support.

How does OpenNext compare to AWS Amplify?

OpenNext is an open source initiative, and there are a couple of advantages when compared to Amplify:

- The community contributions to OpenNext allow it to have better feature support.

- Amplify's Next.js hosting is a black box. Resources are not deployed to your AWS account. All Amplify users share the same CloudFront CDN owned by the Amplify team. This prevents you from customizing the setup, and customization is important if you are looking for Vercel-like features.

- Amplify's implementation is closed-source. Bugs often take much longer to get fixed as you have to go through AWS support. And you are likely to encounter more quirks when hosting Next.js anywhere but Vercel.

Acknowledgements

We are grateful for the projects that inspired OpenNext and the amazing tools and libraries developed by the community:

- nextjs-lambda by Jan for serving as inspiration for packaging Next.js's standalone output to Lambda.

- CDK NextJS by JetBridge for its contribution to the deployment architecture of a Next.js application on AWS.

- serverless-http by Doug Moscrop for developing an excellent library for transforming AWS Lambda events and responses.

- serverless-nextjs by Serverless Framework for paving the way for serverless Next.js applications on AWS.

Special shoutout to @khuezy for his outstanding contributions to the project.

Maintained by SST. Join our community: Discord | YouTube | Twitter