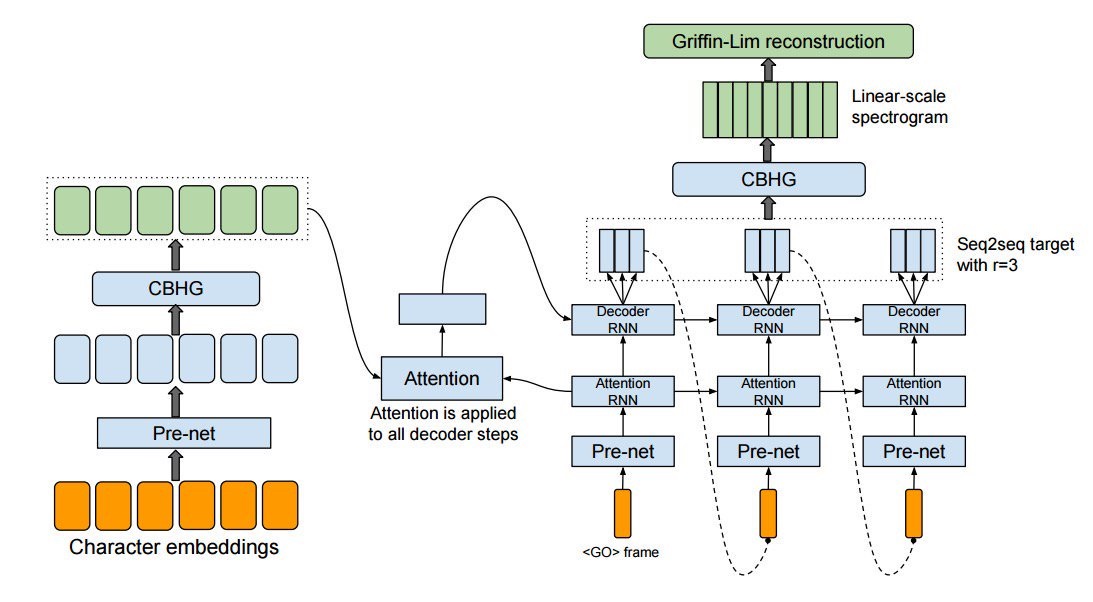

A PyTorch implementation of Google's Tacotron TTS system.

2018/09/15 => Fix RNN feeding bug.

2018/11/04 => Add attention mask and loss mask.

2019/05/17 => 2nd version updated.

2019/05/28 => Fix attention plot bug.

- Add vocoder

- Multispeaker version

See `used_packages.txt` for the packages used.

- Data

Download LJSpeech provided by keithito. It contains 13,100 short audio clips of a single speaker, with a total length of approximately 24 hours.

- Preprocessing

      # Generate a directory 'training/' containing extracted features and a new meta file 'ljspeech_meta.txt'
      $ python data/preprocess.py --output-dir training \
          --data-dir <WHERE_YOU_PUT_YOUR_DATASET>/LJSpeech-1.1/wavs \
          --old-meta <WHERE_YOU_PUT_YOUR_DATASET>/LJSpeech-1.1/metadata.csv \
          --config config/config.yaml

- Split dataset

      # Generate 'meta_train.txt' and 'meta_test.txt' in 'training/'
      $ python data/train_test_split.py --meta-all training/ljspeech_meta.txt \
          --ratio-test 0.1

- Train

      # Start training
      $ python main.py --config config/config.yaml \
          --checkpoint-dir <WHERE_TO_PUT_YOUR_CHECKPOINTS>

      # Continue training
      $ python main.py --config config/config.yaml \
          --checkpoint-dir <WHERE_TO_PUT_YOUR_CHECKPOINTS> \
          --checkpoint-path <LAST_CHECKPOINT_PATH>

- Examine the training process

      # Scalars : loss curve
      # Audio   : validation wavs
      # Images  : validation spectrograms & attentions
      $ tensorboard --logdir log

- Inference

      # Generate synthesized speech
      $ python generate_speech.py --text "For example, Taiwan is a great place." \
          --output <DESIRED_OUTPUT_PATH> \
          --checkpoint-path <CHECKPOINT_PATH> \
          --config config/config.yaml

All the samples can be found here. These samples were generated after 102k updates.

The pretrained model can be downloaded from this link.

A proper alignment emerges after 10k update steps.

- Gradient clipping

- Noam-style learning rate decay (the schedule used in "Attention Is All You Need").
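The two techniques listed above can be sketched in plain Python. This is only an illustration, not the code used in this repository: the function names `noam_lr` and `clip_by_global_norm`, the base learning rate `2e-3`, and the `4000` warm-up steps are all assumptions chosen for the example.

```python
import math
from typing import List


def noam_lr(step: int, base_lr: float = 2e-3, warmup_steps: int = 4000) -> float:
    """Linear warm-up to base_lr at warmup_steps, then decay as step ** -0.5.

    base_lr and warmup_steps are illustrative defaults (assumptions).
    """
    step = max(step, 1)  # avoid 0 ** -0.5 on the very first call
    scale = min(step * warmup_steps ** -1.5, step ** -0.5)
    return base_lr * warmup_steps ** 0.5 * scale


def clip_by_global_norm(grads: List[float], max_norm: float) -> List[float]:
    """Rescale gradients so that their joint L2 norm does not exceed max_norm."""
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm:
        return list(grads)  # already within the limit, leave unchanged
    return [g * max_norm / total_norm for g in grads]


if __name__ == "__main__":
    # The rate rises during warm-up, peaks at warmup_steps, then decays.
    for step in (1, 2000, 4000, 16000):
        print(step, noam_lr(step))
    # A gradient with norm 5 is scaled down to norm 1.
    print(clip_by_global_norm([3.0, 4.0], max_norm=1.0))
```

In a PyTorch training loop, the same ideas would typically map to assigning the scheduled value to `param_group["lr"]` for each entry in `optimizer.param_groups`, and calling `torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm)` between `loss.backward()` and `optimizer.step()`.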

This work is based on r9y9's implementation of Tacotron.

- Tacotron: Towards End-to-End Speech Synthesis [link]