First, download and install the Ollama client from the official website, ollama.com.

Open a terminal and run `ollama pull <model name>` to download a large language model. A complete list of available models can be found on the Ollama website.

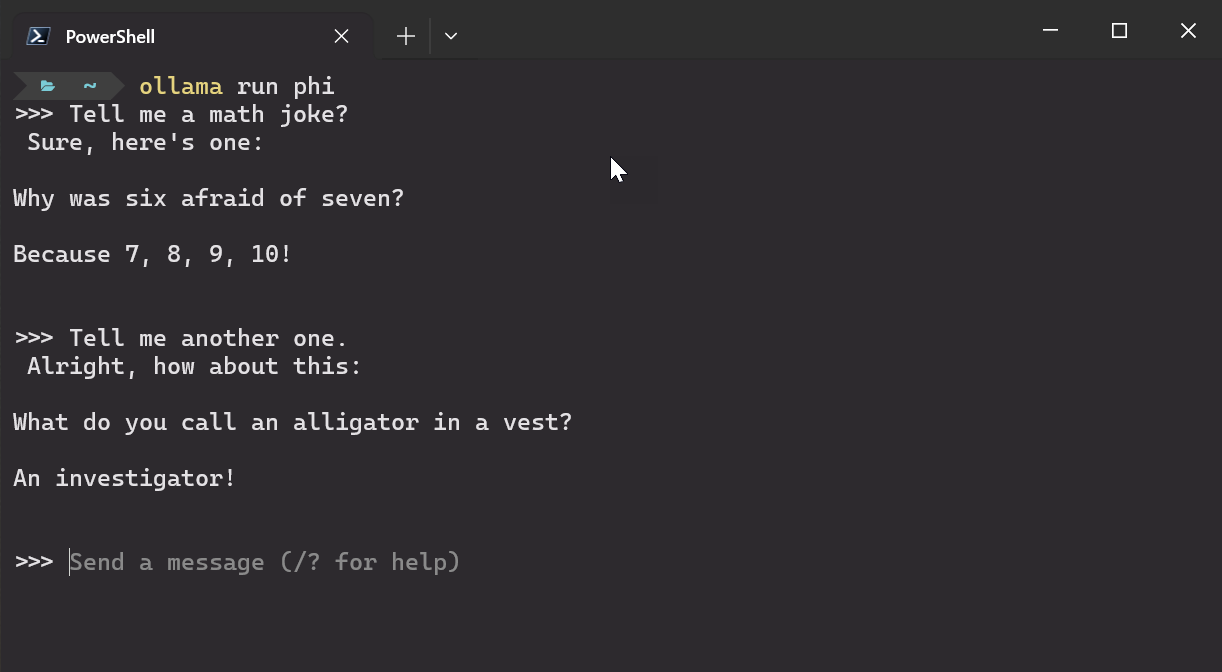

In the terminal, run `ollama run <model name>` to use the model in the console. You can also add `--verbose` to the command to get more statistics about the model's usage.

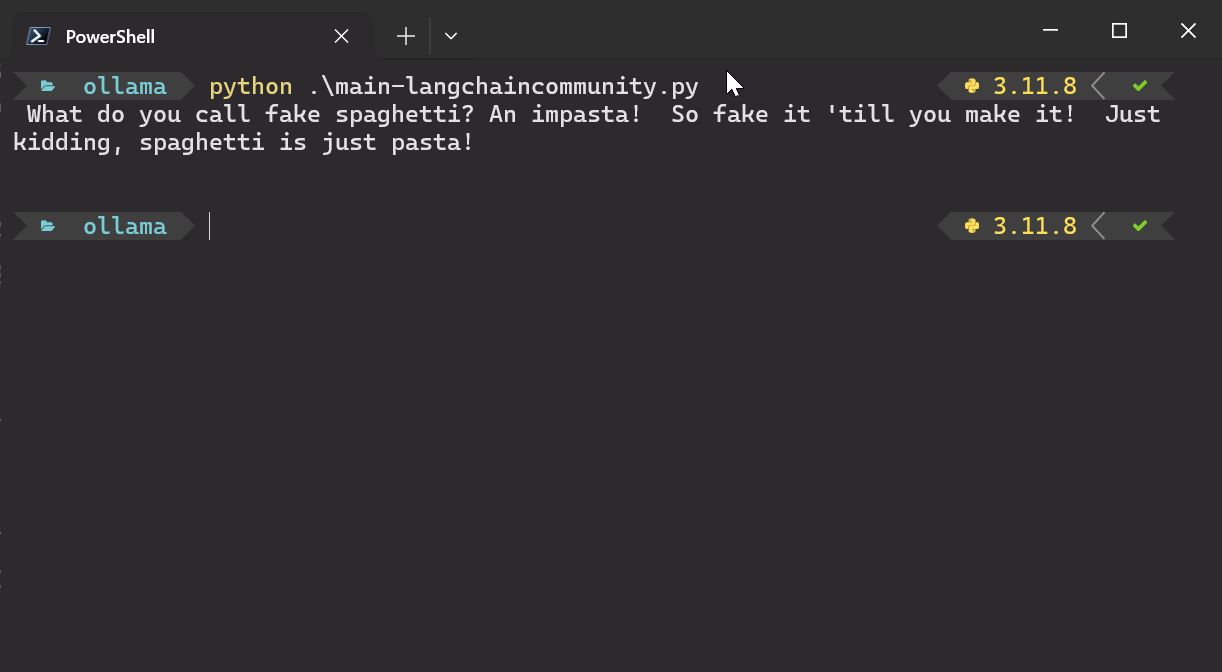

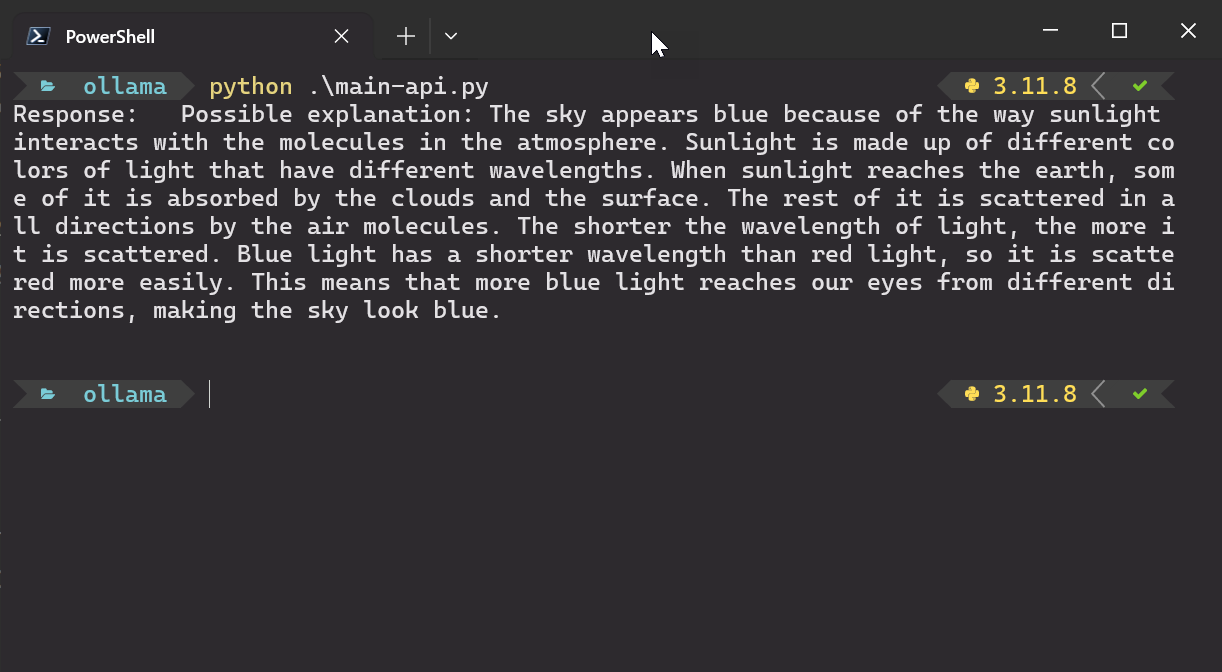

I've created two Python scripts to demonstrate the usage of the Ollama Server.

The script `main-langchaincommunity.py` uses the `langchain_community` package.
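
As a rough illustration of the `langchain_community` approach, here is a minimal sketch. It is not the content of `main-langchaincommunity.py`; the model name `llama3`, the helper `ask_model`, and the base URL `http://localhost:11434` (Ollama's usual local address) are assumptions chosen for the example.

```python
# Minimal sketch, assuming the langchain-community package is installed
# and an Ollama server is running locally with the model already pulled.
try:
    from langchain_community.llms import Ollama
except ImportError:  # package missing: fail later with a clear message
    Ollama = None

DEFAULT_MODEL = "llama3"  # hypothetical model name, replace with one you pulled


def ask_model(prompt, model=DEFAULT_MODEL, base_url="http://localhost:11434"):
    """Send a single prompt to the local Ollama server and return the reply text."""
    if Ollama is None:
        raise RuntimeError(
            "langchain_community is not installed; "
            "run: pip install langchain-community"
        )
    llm = Ollama(model=model, base_url=base_url)
    return llm.invoke(prompt)


def main():
    # Requires a running Ollama server; call main() to try it out.
    print(ask_model("Why is the sky blue?"))
```

With the server running, calling `main()` should print the model's answer. Newer LangChain releases may recommend a different package for Ollama, so treat the import path as something to check against your installed version.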

The script `main-api.py` uses the REST API approach, calling the `api/generate` endpoint on the Ollama server.
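
The REST approach can be sketched with nothing but the standard library. This is not the content of `main-api.py`; the helper names, the model name `llama3`, and the address `http://localhost:11434` (Ollama's usual local default) are assumptions for illustration. The sketch sends a non-streaming request to `api/generate` and derives a tokens-per-second figure from the `eval_count` and `eval_duration` fields of the response, roughly the kind of number `--verbose` prints in the console.

```python
import json
import urllib.request

# Assumed default address of a locally running Ollama server.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model, prompt, url=OLLAMA_URL):
    """Build the POST request for api/generate without sending it."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model, prompt, url=OLLAMA_URL, timeout=120):
    """Send the request and return the decoded JSON response as a dict."""
    request = build_request(model, prompt, url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def tokens_per_second(result):
    """Compute the generation rate; eval_duration is reported in nanoseconds."""
    duration_ns = result.get("eval_duration", 0)
    if not duration_ns:
        return None
    return result.get("eval_count", 0) * 1e9 / duration_ns


def main():
    # Requires a running Ollama server with the model already pulled.
    result = generate("llama3", "Why is the sky blue?")  # hypothetical model name
    print(result["response"])
    rate = tokens_per_second(result)
    if rate is not None:
        print(f"eval rate: {rate:.2f} tokens/s")
```

Setting `"stream": False` makes the server return one JSON object instead of a stream of partial responses, which keeps the parsing to a single `json.loads` call. Call `main()` once the server is running.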

Before running the Python scripts, make sure Python is installed on your PC, then install the required packages by running `pip install -r requirements.txt`.

If you are interested in more details, please see the following medium.com post:

You can also watch the following video on my YouTube channel: