Dear Technical Team, thank you for the opportunity and for giving me time to finish the Ansible & Kubernetes Challenge Deployment. On this page, I describe how I approached the challenge according to the requirements in the guidelines. Let's begin.

- Create an inventory file with the groups loadbalancer and backend, using 3 nodes.

- Create a playbook to install nginx on all servers.

- Create a playbook to change the nginx index page on the backend hosts so that it contains "served from: hostname".

- Create a playbook to set up nginx in the loadbalancer group with the "least connection" method between the nodes in the backend group.


First, I created the inventory as an `.ini` file and used SSH private-key authentication, so no host passwords are needed when running the playbooks.
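Ansible also accepts inventories written in YAML. The sketch below shows the same group layout (node1 in loadbalancer, node2 and node3 in backend) in YAML so it matches the other examples; the file name inventory.yml, the IP addresses, the remote user, and the key path are placeholders, not values from this project.

```yaml
# Hypothetical inventory.yml: same groups as inventory.ini, written in YAML.
all:
  vars:
    ansible_user: ubuntu                          # assumption: remote SSH user
    ansible_ssh_private_key_file: ~/.ssh/id_rsa   # assumption: private key path
  children:
    loadbalancer:
      hosts:
        node1:
          ansible_host: 203.0.113.10              # placeholder IP
    backend:
      hosts:
        node2:
          ansible_host: 203.0.113.11              # placeholder IP
        node3:
          ansible_host: 203.0.113.12              # placeholder IP
```

It would be used the same way as the INI file, for example `ansible-playbook -i inventory.yml nginx-install.yml`.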


Install nginx. To run this playbook, execute the following command:

```shell
ansible-playbook -i inventory.ini nginx-install.yml
```
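The contents of nginx-install.yml are not reproduced in this write-up. A minimal sketch of such a playbook, assuming Debian/Ubuntu hosts (hence the apt module) and sudo rights for the SSH user, could look like this:

```yaml
- name: Install nginx on all servers
  hosts: all
  become: true               # assumption: the SSH user may use sudo
  tasks:
    - name: Install the nginx package (Debian/Ubuntu assumed)
      ansible.builtin.apt:
        name: nginx
        state: present
        update_cache: true   # refresh the package index first

    - name: Ensure nginx is running and starts at boot
      ansible.builtin.service:
        name: nginx
        state: started
        enabled: true
```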

Change the default nginx page on the backend hosts:

```shell
ansible-playbook -i inventory.ini nginx-page.yml
```
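A possible sketch of nginx-page.yml, assuming the Debian/Ubuntu default web root /var/www/html, where index.html takes precedence over the stock nginx page. The hostname comes from the gathered fact `ansible_facts['hostname']`, so fact gathering (on by default) must stay enabled:

```yaml
- name: Replace the default nginx page on the backend hosts
  hosts: backend
  become: true
  tasks:
    - name: Write an index page that names the serving host
      ansible.builtin.copy:
        dest: /var/www/html/index.html   # assumption: Debian/Ubuntu default web root
        content: "served from: {{ ansible_facts['hostname'] }}\n"
        owner: root
        group: root
        mode: "0644"
```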

Set up the nginx load balancer:

```shell
ansible-playbook -i inventory.ini nginx-page.yml
```
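A sketch of a load-balancer playbook built on nginx's `least_conn` upstream directive. It assumes the Debian/Ubuntu layout (files in /etc/nginx/conf.d are included, and the stock site in /etc/nginx/sites-enabled/default is removed so it does not capture port 80 as the default server). The file name loadbalancer.conf and the upstream name backend_pool are hypothetical, and each backend is addressed by its `ansible_host` value when one is set, otherwise by its inventory name.

```yaml
- name: Configure nginx as a least-connection load balancer
  hosts: loadbalancer
  become: true
  tasks:
    # Debian/Ubuntu ship a default site marked default_server on port 80;
    # removing it lets the proxy server block below answer requests.
    - name: Remove the stock default site
      ansible.builtin.file:
        path: /etc/nginx/sites-enabled/default
        state: absent
      notify: Reload nginx

    - name: Write the upstream and proxy configuration
      ansible.builtin.copy:
        dest: /etc/nginx/conf.d/loadbalancer.conf   # hypothetical file name
        mode: "0644"
        # Ansible renders the Jinja2 below: one server line per backend host.
        content: |
          upstream backend_pool {
              least_conn;
          {% for host in groups['backend'] %}
              server {{ hostvars[host]['ansible_host'] | default(host) }}:80;
          {% endfor %}
          }

          server {
              listen 80;
              location / {
                  proxy_pass http://backend_pool;
              }
          }
      notify: Reload nginx

  handlers:
    - name: Reload nginx
      ansible.builtin.service:
        name: nginx
        state: reloaded
```

With `least_conn`, each new request goes to the backend with the fewest active connections, so under light, sequential browser traffic the distribution looks much like round robin.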

Alternatively, all of the steps above can be run with a single playbook:

```shell
ansible-playbook -i inventory.ini nginx-configlb.yml
```

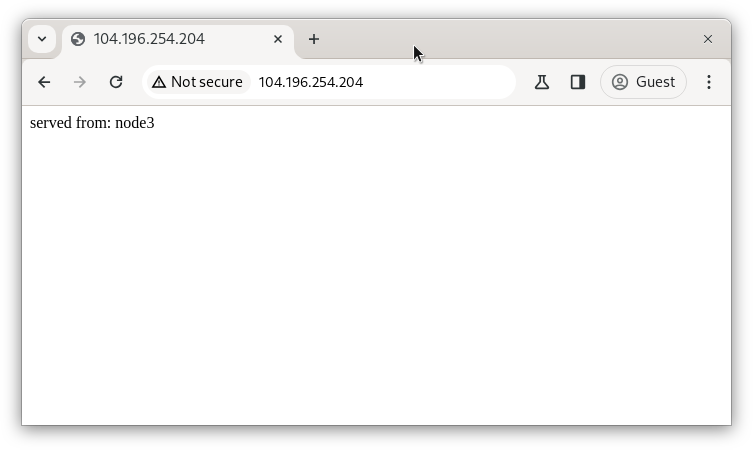

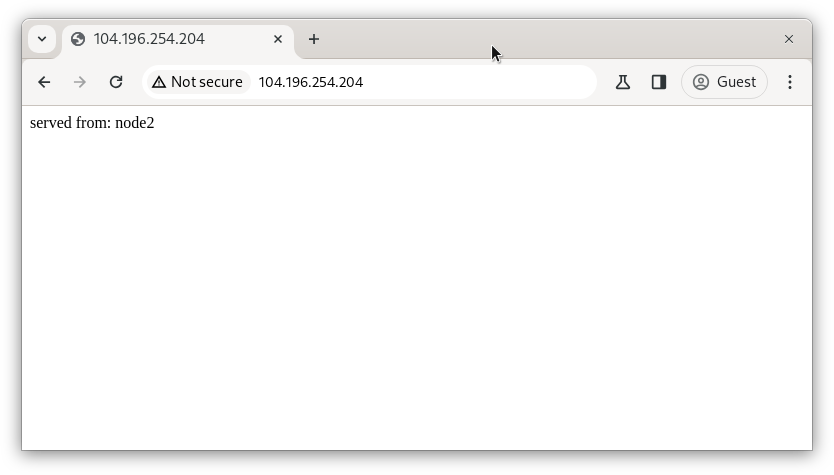

I accessed the public address of node1 (loadbalancer) from several incognito browser windows, and the load balancer directed the requests to the node2 and node3 (backend) web servers.

- Make the worker node join the cluster.

- Create a deployment named nginx-app using the container image nginx:1.11.10-alpine with 3 replicas.

- Deploy the new application version 1.11.13-alpine by performing a rolling update.

- Roll back that update to the previous version 1.11.10-alpine.

- Set a node named k8s-node-1 as unavailable and reschedule all the pods running on it.

- Create a Persistent Volume with name app-data, of capacity 2Gi and access mode ReadWriteMany. The type of volume is hostPath and its location is /srv/app-data.

- Create a Persistent Volume Claim that requests the Persistent Volume you had created above. The claim should request 2Gi. Ensure that the Persistent Volume Claim has the same storageClassName as the Persistent Volume you had previously created.


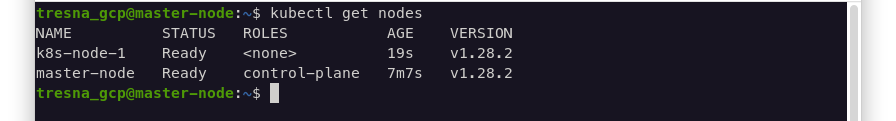

To make the worker node join the cluster, first run this command on the master node:

```shell
sudo kubeadm token create --print-join-command
```

The output is a `kubeadm join` command containing the credentials for the worker node. Copy it and run it on the worker node. After that, check on the master node whether the node has joined.


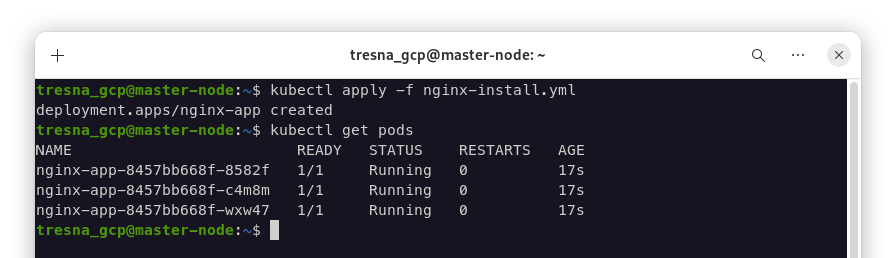
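As an alternative to pasting the printed command, `kubeadm join --config <file>` can read the same values from a JoinConfiguration file. The sketch below uses placeholder values only: the endpoint, token, and hash must be replaced with the ones printed by `kubeadm token create --print-join-command`, and the apiVersion `kubeadm.k8s.io/v1beta3` is an assumption that depends on the installed kubeadm release.

```yaml
apiVersion: kubeadm.k8s.io/v1beta3   # assumption: depends on the kubeadm release
kind: JoinConfiguration
discovery:
  bootstrapToken:
    apiServerEndpoint: "10.0.0.10:6443"   # placeholder: control-plane address and port
    token: "abcdef.0123456789abcdef"      # placeholder: value after --token
    caCertHashes:
      # placeholder: value after --discovery-token-ca-cert-hash
      - "sha256:<hash printed by the join command>"
```

On the worker node this would be run as `sudo kubeadm join --config join.yaml`, where join.yaml is a hypothetical file name.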

Create the Deployment nginx-app by running:

```shell
kubectl apply -f nginx-install.yml
```

After that, to make sure the app is running, I checked it with the command shown below, together with its result.


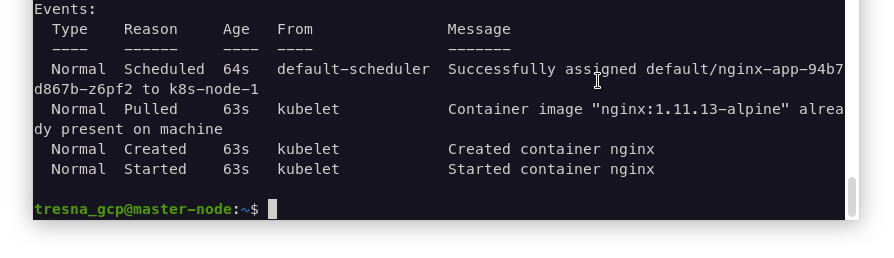
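The Kubernetes nginx-install.yml is not shown in this write-up. A minimal Deployment that meets the requirement might look like the sketch below; the container name `nginx` matches the `nginx=` part of the `kubectl set image` command used for the upgrade, while the label `app: nginx-app` is a hypothetical choice.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-app        # hypothetical label; must match the pod template
  template:
    metadata:
      labels:
        app: nginx-app
    spec:
      containers:
        - name: nginx       # container name referenced by `kubectl set image`
          image: nginx:1.11.10-alpine
          ports:
            - containerPort: 80
```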

Deploy the application with the new version 1.11.13-alpine. For this, I used the same YAML as nginx-install.yml with only the image version changed, saved as nginx-upgrade.yml.
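A minimal sketch of what nginx-upgrade.yml could contain, assuming the hypothetical label `app: nginx-app` matches the one used when the Deployment was first created (a Deployment's selector cannot be changed afterwards). Besides the new image tag, it spells out the RollingUpdate strategy; Kubernetes already uses a rolling update by default (maxSurge and maxUnavailable both default to 25%), so this block only makes the behavior explicit. The `kubernetes.io/change-cause` annotation fills the CHANGE-CAUSE column of `kubectl rollout history`, the same purpose served by the `--record` flag, which kubectl marks as deprecated.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-app
  annotations:
    # Shown as CHANGE-CAUSE in `kubectl rollout history`
    kubernetes.io/change-cause: "update image to nginx:1.11.13-alpine"
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1         # at most one pod above the 3 replicas during the update
      maxUnavailable: 0   # keep all 3 replicas serving while pods are replaced
  selector:
    matchLabels:
      app: nginx-app      # hypothetical label; must equal the original selector
  template:
    metadata:
      labels:
        app: nginx-app
    spec:
      containers:
        - name: nginx
          image: nginx:1.11.13-alpine   # the only functional change from the original
```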

```shell
kubectl apply -f nginx-upgrade.yml --record
```

Alternatively, without creating or editing a YAML file, the same update can be done with a single command.

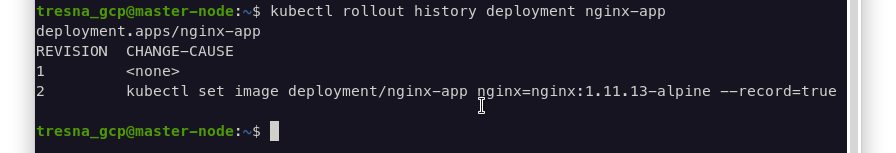

```shell
kubectl set image deployment/nginx-app nginx=nginx:1.11.13-alpine --record
```

To see the rollout history, use this command:

```shell
kubectl rollout history deployment nginx-app
```

Or, to verify that the version has changed, use the describe command:

```shell
kubectl describe deployment/nginx-app
```

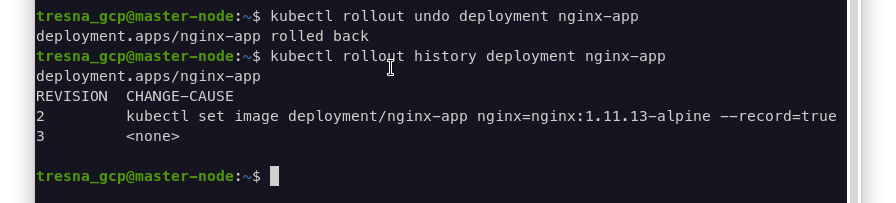

Roll back that update to the previous version 1.11.10-alpine:

```shell
kubectl rollout undo deployment nginx-app
```

To see the effect, use the `rollout history` command as before; revision 3 is the undo of the update.


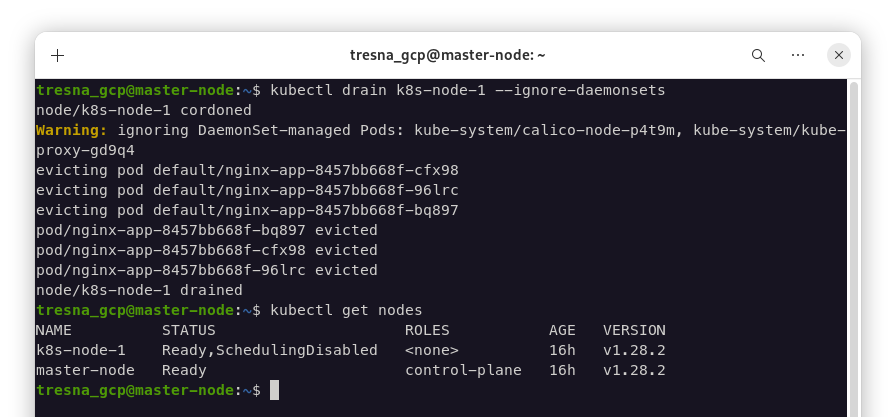

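Set the node named k8s-node-1 as unavailable and reschedule all the pods running on it:

```shell
kubectl drain k8s-node-1 --ignore-daemonsets
```

`kubectl drain` first cordons the node (marks it unschedulable) and then evicts its pods, so pods owned by controllers such as the nginx-app Deployment are recreated on the remaining nodes; `--ignore-daemonsets` lets the drain proceed even though DaemonSet pods cannot be moved. After running the command, the node shows the status SchedulingDisabled, as below.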


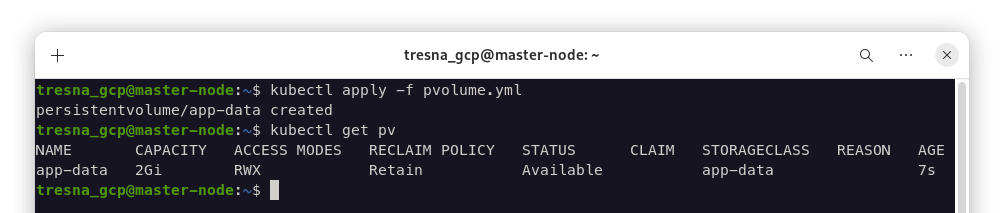

Create a Persistent Volume named app-data:

```shell
kubectl apply -f pvolume.yml
```

To see the created volume, I used the command shown below.


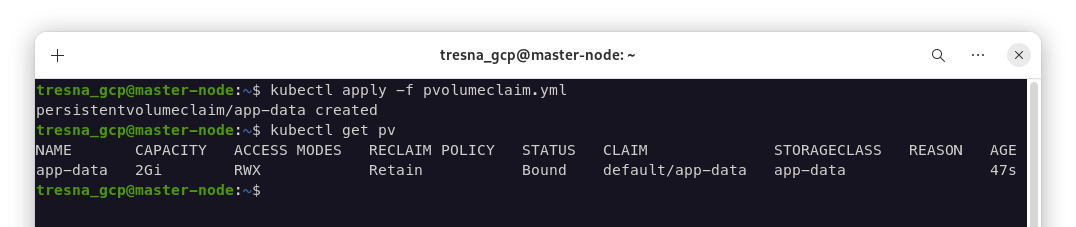
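A sketch of a pvolume.yml that matches the requirement. The storageClassName `manual` is an assumption; any name works as long as the claim uses the same one, and with no StorageClass object of that name the volume is simply bound statically to a matching claim.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: app-data
spec:
  capacity:
    storage: 2Gi
  accessModes:
    - ReadWriteMany
  storageClassName: manual   # assumption: the claim must use the same class name
  hostPath:
    path: /srv/app-data      # directory on the node that runs the pod
```

Keep in mind that a hostPath volume lives on the filesystem of whichever node runs the pod, so ReadWriteMany here does not provide storage shared across nodes.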

Create a Persistent Volume Claim that requests the Persistent Volume created above:

```shell
kubectl apply -f pvolumeclaim.yml
```

To see the result, I used the same command as before.
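A matching pvolumeclaim.yml could look like the sketch below. The claim name app-data-claim is hypothetical, and the storageClassName `manual` is assumed to be the one set on the app-data volume; the class and access mode must be compatible with the volume for binding, and the optional `volumeName` pins the claim to the volume named app-data.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: app-data-claim       # hypothetical claim name
spec:
  storageClassName: manual   # assumption: must equal the PV's storageClassName
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 2Gi
  volumeName: app-data       # optional: bind explicitly to the PV named app-data
```

Once bound, `kubectl get pvc` should report the claim with the status Bound.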

I built this project on GCP VM instances. My solution may not be perfect, but I hope it meets the expectations for the challenge. Thank you in advance.

Regards, Tresna Widiyaman