- The main exploration is in `notebooks/Give Me Some Credit.ipynb`.

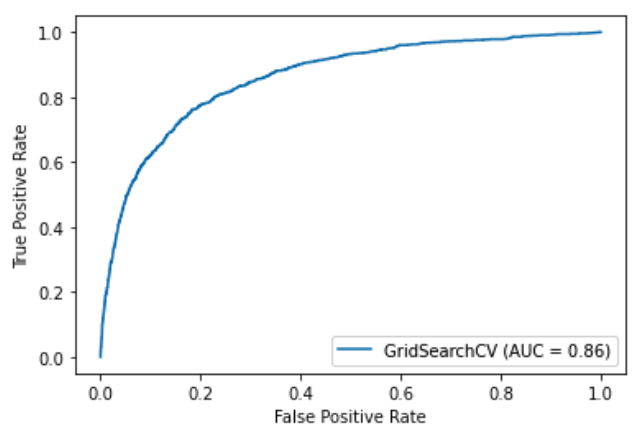

For model validation, we select the model with the highest AUC. Quite simply, AUC is chosen because it is the competition's evaluation metric. Another possible evaluation metric is the F1 score.

AUC is the area under the ROC (Receiver Operating Characteristic) curve. The ROC curve plots the true positive rate against the false positive rate as the classification threshold varies, so it shows the performance of the classification model across all classification thresholds.

ROC AUC is the probability that the model ranks a random positive example more highly than a random negative example.
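To make the two descriptions of AUC concrete, here is a minimal pure-Python sketch. It is not the notebook's code, and in practice a library routine such as scikit-learn's `roc_auc_score` would be used. It builds the ROC points by sweeping the threshold, integrates them with the trapezoidal rule, and checks that the result matches the pairwise ranking probability. Ties are counted as one half, and both classes are assumed to be present.

```python
from itertools import product


def roc_points(scores, labels):
    """(FPR, TPR) points from sweeping the threshold down through every distinct score.

    A sample is predicted positive when its score >= threshold.
    Assumes labels contain at least one positive (1) and one negative (0).
    """
    pos = sum(labels)
    neg = len(labels) - pos
    points = [(0.0, 0.0)]
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 0)
        points.append((fp / neg, tp / pos))
    return points


def auc_trapezoid(points):
    """Area under the ROC curve via the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))


def auc_rank_probability(scores, labels):
    """P(random positive scores higher than random negative), ties counted as 1/2."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p, n in product(pos, neg))
    return wins / (len(pos) * len(neg))


# Toy scores (made up for illustration), 1 = default.
scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4, 0.3, 0.1]
labels = [1, 1, 0, 1, 0, 0, 1, 0]
print(auc_trapezoid(roc_points(scores, labels)), auc_rank_probability(scores, labels))  # 0.75 0.75
```

Both routes give the same number, which is why AUC can be read either as an area or as a ranking probability.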

AUC might not be desirable when the classes are heavily imbalanced.

The model’s business goal is to separate borrowers who are likely to default from those who are not. The business wants to minimize the false positive rate (i.e., rejecting good borrowers) and maximize the true positive rate (i.e., rejecting bad borrowers). The ROC curve shows exactly this trade-off, and reading it over the acceptable region of FPR tells us how many bad borrowers we can catch for a given rate of rejected good borrowers.
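One way to use the ROC curve in these business terms is to fix a budget for the false positive rate (the share of good borrowers we are willing to reject) and pick the threshold that catches the most defaulters within it. This sketch assumes that higher scores mean higher default risk and that borrowers scoring at or above the threshold are rejected; `max_fpr` is a hypothetical business parameter, not something from the notebook.

```python
def best_threshold_under_fpr(scores, labels, max_fpr):
    """Lowest threshold (most defaulters caught) whose FPR stays within max_fpr.

    Borrowers with score >= threshold are rejected (predicted to default, label 1).
    Returns (threshold, tpr, fpr), or None if even the strictest threshold exceeds the budget.
    """
    pos = sum(labels)
    neg = len(labels) - pos
    best = None
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 0)
        fpr, tpr = fp / neg, tp / pos
        if fpr > max_fpr:
            break  # FPR can only grow as the threshold is lowered further
        best = (t, tpr, fpr)
    return best
```

For example, on the toy scores `[0.9, 0.8, 0.7, 0.6, 0.55, 0.4, 0.3, 0.1]` with labels `[1, 1, 0, 1, 0, 0, 1, 0]` and `max_fpr=0.25`, it returns `(0.6, 0.75, 0.25)`: rejecting a quarter of good borrowers catches three quarters of defaulters.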

Alternatives are the F1 score, since we are dealing with imbalanced classes, and the precision-recall curve, which is well suited to imbalanced classes.
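Precision, recall and F1 focus on the positive (default) class and ignore true negatives, which is why they are often preferred when positives are rare. A minimal pure-Python sketch, again assuming that scores at or above the threshold are predicted as defaults (label 1):

```python
def precision_recall_f1(scores, labels, threshold):
    """Precision, recall and F1 for the positive (default) class at one threshold."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def pr_curve(scores, labels):
    """(recall, precision) points, one per distinct threshold, highest threshold first."""
    points = []
    for t in sorted(set(scores), reverse=True):
        precision, recall, _ = precision_recall_f1(scores, labels, t)
        points.append((recall, precision))
    return points
```

F1 summarizes a single threshold, while the precision-recall curve shows every threshold at once, much as the ROC curve does for TPR and FPR.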

What insight(s) do you have from your model? What is your preliminary analysis of the given dataset?

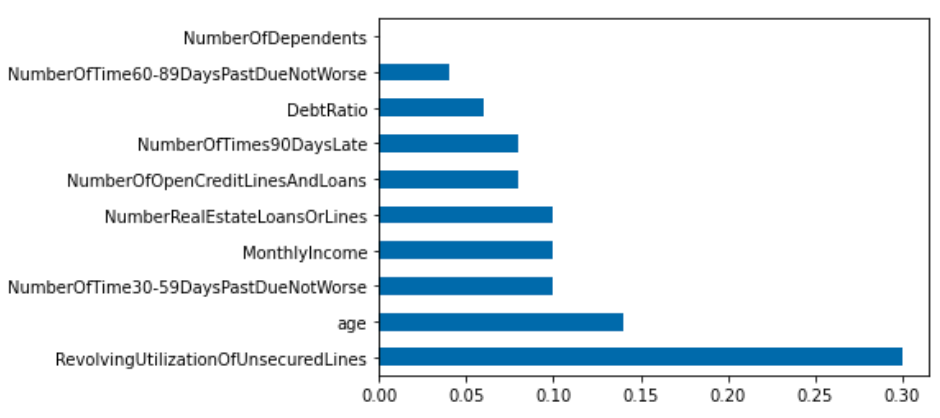

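The feature importance plot shows that revolving utilization of unsecured lines (`RevolvingUtilizationOfUnsecuredLines`) has the highest importance. A plausible reading is that the closer a person is to hitting their credit limit, the more likely they are to be delinquent, although importance alone does not show the direction of the effect.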
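Feature importance says how much the model relies on a feature, not which way it pushes the prediction. A quick check of the directional reading is to bin the feature and compare the observed default rate per bin. This sketch is hypothetical (not taken from the notebook): the bin edges are illustrative, and labels are assumed to be 1 for default and 0 otherwise.

```python
from bisect import bisect_right


def default_rate_by_bin(values, labels, edges):
    """Observed default rate per bin of one feature.

    edges: sorted inner bin edges. Bin 0 holds values below edges[0],
    bin i holds [edges[i-1], edges[i]), and the last bin holds values >= edges[-1].
    Returns a list of (count, default_rate) with default_rate None for empty bins.
    """
    counts = [0] * (len(edges) + 1)
    defaults = [0] * (len(edges) + 1)
    for v, y in zip(values, labels):
        i = bisect_right(edges, v)  # number of edges <= v, i.e. the bin index
        counts[i] += 1
        defaults[i] += y
    return [(c, d / c if c else None) for c, d in zip(counts, defaults)]
```

If the default rate rises from bin to bin as utilization increases, that supports the reading that borrowers near their credit limit are riskier.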

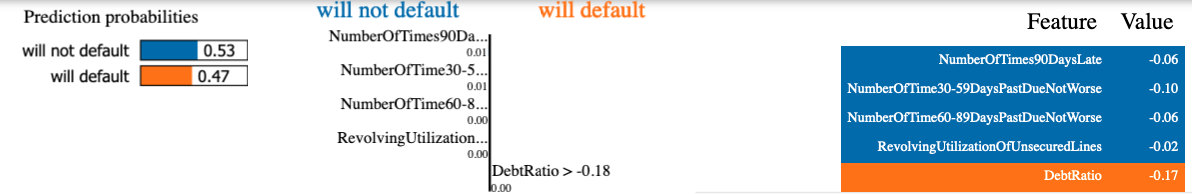

We can also use LIME to explain the prediction for a single data point. For instance, the model predicted a 53% chance that this person will not default, partly because their `NumberOfTimes90DaysLate` value is lower than average, among other features.
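The idea behind LIME can be sketched in a few lines: perturb the point being explained, query the model on the perturbations, weight them by proximity, and fit a weighted linear model whose slopes act as local feature effects. This is a simplified NumPy illustration, not the `lime` package's algorithm (which, for example, discretizes continuous features by default); `predict_fn`, `feature_scale` and `kernel_width` are assumptions of this sketch.

```python
import numpy as np


def local_surrogate(predict_fn, x, feature_scale, num_samples=5000, kernel_width=0.75, seed=0):
    """LIME-style local linear explanation of predict_fn around the single point x.

    predict_fn maps an (n, d) array to n scores (e.g. probability of not defaulting).
    Returns (intercept, slopes): the surrogate's value at x and its per-feature slopes.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    scale = np.asarray(feature_scale, dtype=float)
    # Perturb every feature with Gaussian noise proportional to its scale.
    samples = x + rng.normal(size=(num_samples, x.size)) * scale
    preds = predict_fn(samples)
    # Exponential kernel on the scaled distance to x: nearby samples get more weight.
    dist = np.linalg.norm((samples - x) / scale, axis=1)
    weights = np.exp(-(dist ** 2) / kernel_width ** 2)
    # Weighted least squares on features centred at x, with an intercept column.
    design = np.column_stack([np.ones(num_samples), samples - x])
    sw = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(design * sw[:, None], preds * sw, rcond=None)
    return coef[0], coef[1:]
```

For the credit model, `predict_fn` would return each row's predicted probability of not defaulting, and `feature_scale` could be each feature's training standard deviation. A negative slope for `NumberOfTimes90DaysLate` would then mean that fewer late payments push the prediction toward "will not default".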

Unfortunately, no. We could try AutoML tools for hyperparameter tuning, along with more feature engineering.

I organized this folder as if it were a proof of concept (POC) for a production ML project that runs in batches. Admittedly, the code in the .py files is dummy code, but it illustrates how the files can be organized to allow model retraining and prediction without using a notebook.
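A batch layout like this can be sketched as a single command-line entry point with `train` and `predict` subcommands, so a scheduler can retrain and score without a notebook. Everything here is hypothetical and not the repository's actual code: the script name `pipeline.py`, the argument layout, and the placeholder "model" (the training default rate) that stands in for a real classifier. It assumes the competition's target column is `SeriousDlqin2yrs`.

```python
import argparse
import csv
import json


def fit_base_rate(rows, target):
    """Placeholder 'model': the overall default rate in the training rows."""
    labels = [int(row[target]) for row in rows]
    return {"target": target, "base_rate": sum(labels) / len(labels)}


def score(rows, model):
    """Placeholder scoring: every row gets the training default rate."""
    return [model["base_rate"] for _ in rows]


def read_rows(path):
    """Read a CSV file into a list of dicts keyed by column name."""
    with open(path, newline="") as f:
        return list(csv.DictReader(f))


def build_parser():
    parser = argparse.ArgumentParser(description="Batch retraining and scoring (sketch).")
    sub = parser.add_subparsers(dest="command", required=True)
    p_train = sub.add_parser("train", help="fit a model and save it")
    p_train.add_argument("train_csv")
    p_train.add_argument("model_path")
    p_train.add_argument("--target", default="SeriousDlqin2yrs")
    p_pred = sub.add_parser("predict", help="score a batch with a saved model")
    p_pred.add_argument("input_csv")
    p_pred.add_argument("model_path")
    p_pred.add_argument("output_csv")
    return parser


def main(argv=None):
    args = build_parser().parse_args(argv)
    if args.command == "train":
        model = fit_base_rate(read_rows(args.train_csv), args.target)
        with open(args.model_path, "w") as f:
            json.dump(model, f)
    else:
        with open(args.model_path) as f:
            model = json.load(f)
        rows = read_rows(args.input_csv)
        with open(args.output_csv, "w", newline="") as f:
            writer = csv.writer(f)
            writer.writerow(["row", "probability"])
            writer.writerows(enumerate(score(rows, model)))


# In pipeline.py, finish with:  if __name__ == "__main__": main()
```

A scheduler would then run, for example, `python pipeline.py train train.csv model.json` and later `python pipeline.py predict batch.csv model.json predictions.csv`. Keeping `fit_base_rate` and `score` free of file I/O makes them easy to replace with real training and inference code and to unit-test.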