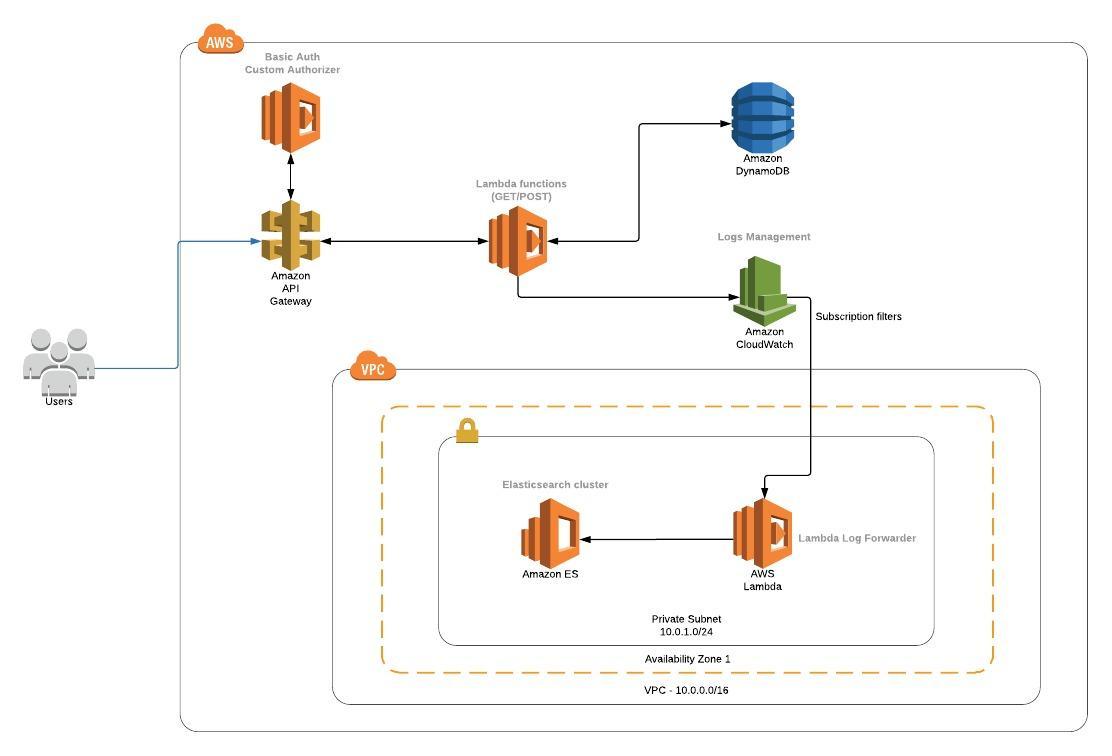

Deploying this solution sets up:

- An API gateway to expose four Lambda functions

- Four Lambda functions that implement the following functionality:

  - Retrieve a task by ID (GET /task/{id})

  - Retrieve all tasks (GET /task)

  - Save a new task (POST /task)

  - Basic authentication (/auth)

- A Lambda function to forward the logs to an AWS Elasticsearch domain

- AWS Elasticsearch domain (1 node)

- An Internet gateway

- A VPC, a subnet and a security group for the Elasticsearch instance and the log forwarder
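Once the stack is deployed, the task endpoints listed above can be called from a shell. The following is only a sketch: `API_URL`, `TODO_USER`, `TODO_PASS` and the `task_get` helper are hypothetical names, and it assumes the authorizer accepts a standard HTTP Basic `Authorization` header, which is what `curl -u` sends.

```shell
# Hypothetical values: replace with your API Gateway base URL and credentials.
API_URL="${API_URL:-https://example.invalid/dev}"
TODO_USER="${TODO_USER:-user}"
TODO_PASS="${TODO_PASS:-secret}"

# Call a GET endpoint of the task API with HTTP Basic credentials.
# $1 is the request path, e.g. /task or /task/<id>.
task_get() {
  curl -s -u "${TODO_USER}:${TODO_PASS}" "${API_URL}$1"
}
```

With these definitions, `task_get /task` would request all tasks and `task_get /task/<id>` a single task.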

Below is a brief explanation of what you can find inside the project:

    .
    ├── go                                  <-- Go source files
    │   └── src
    │       └── go-todo-app                 <-- A simple task management app in Go
    │           ├── Makefile                <-- Make to automate build
    │           ├── functions               <-- Source code for the Lambda functions
    │           │   ├── auth.go             <-- Basic authentication Lambda function code (API Gateway Custom Authorizer)
    │           │   ├── get.go              <-- Get task by ID Lambda function code
    │           │   ├── list.go             <-- List all tasks Lambda function code
    │           │   └── post.go             <-- Create new task Lambda function code
    │           ├── dao
    │           │   └── taskdao.go          <-- DynamoDB DAO (get, list, save operations)
    │           └── serverless.yaml         <-- Serverless yaml file to deploy Lambda functions
    ├── nodejs                              <-- NodeJS source files
    │   └── log-forwarder                   <-- Function which forwards CloudWatch log events to ES
    │       ├── aws-data-message.json       <-- An example of JSON event payload generated by CloudWatch
    │       ├── handler.js                  <-- Lambda function to forward logs to ES
    │       ├── package.json                <-- NPM package file
    │       ├── test-data.json              <-- An example of JSON log event
    │       └── serverless.yaml             <-- Serverless yaml file to deploy Lambda functions
    ├── terraform                           <-- Terraform source files
    │   └── elasticsearch                   <-- Elasticsearch provisioning Terraform files
    │       ├── main.tf                     <-- Terraform main module
    │       ├── outputs.tf                  <-- Terraform output variables definition
    │       └── variables.tf                <-- Terraform input variables definition
    └── README.md                           <-- This instructions file

To build and deploy the solution, you need:

- AWS CLI already configured with at least PowerUser permission

- Golang

- Python 3

- pip

- NodeJS

- Terraform

- Serverless Framework

These instructions will get you a copy of the solution ready to be deployed. See deployment for notes on how to deploy the project on AWS.

First of all, you need to specify the location of your workspace. Run the following command to do it (from the project root):

$ export GOPATH=$GOPATH:`pwd`/go

Then, run the commands below (from the project root) to install all the Go dependencies:

$ cd go/

$ go get ./...

Run the following commands (from the project root) to install all the dependencies of the log forwarder:

$ cd nodejs/log-forwarder

$ npm install

In order to run and/or deploy the Lambda functions, you have to build the executable targets. Preparing a binary to deploy to AWS Lambda requires that it is compiled for Linux.
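In Go, compiling for Linux comes down to setting the `GOOS` and `GOARCH` environment variables for `go build`. The Makefile is not reproduced here, so the following is only a sketch of what such a build might look like; the `bin/` output directory, the `amd64` architecture, the one-binary-per-source-file layout and the `build_linux` helper name are all assumptions.

```shell
# Sketch: cross-compile each function for Linux (assumed layout and output paths).
# Intended to be run from go/src/go-todo-app.
build_linux() {
  for fn in auth get list post; do
    # GOOS/GOARCH select the target platform for this single go invocation.
    GOOS=linux GOARCH=amd64 go build -o "bin/${fn}" "functions/${fn}.go" || return 1
  done
}
```

In practice the provided `make build` target described next should be preferred, since it encodes the flags the project actually uses.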

Go to the go/src/go-todo-app directory:

$ cd go/src/go-todo-app

and then issue the following command in a shell to build it:

$ make build

NOTE: The previous command builds the Lambda functions for a Linux environment (by specifying the GOOS and GOARCH environment variables). If you need to run them locally on macOS, you can use the following command:

$ make build-local

$ virtualenv -p python3 .

$ source bin/activate

$ pip3 install -r requirements.txt

$ serverless invoke -f list -l

The terraform/elasticsearch directory contains a Terraform module that provisions an AWS Elasticsearch domain. With this module you can create an Elasticsearch domain and attach it to a VPC, with an access policy based on a security group applied to the Elasticsearch domain.

To provision a new domain, go to the terraform/elasticsearch directory (starting from the root of the project) and run the following command:

$ terraform apply

Then enter the logical environment where you want to deploy the domain (e.g. dev) and the AWS account ID where you want to deploy it.

This command also populates all the output values defined in the outputs.tf file.

These values are needed in the next steps of the deployment process. For instance, you will need the security group ID and the subnet ID newly created to deploy the log forwarder lambda function.
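The outputs can be read back at any time with `terraform output`. A minimal sketch, assuming the output names `es_endpoint`, `security_group` and `subnet_id` that the log forwarder deploy command refers to, and a local state file under terraform/elasticsearch (the `TF_STATE` variable and the `tf_out` helper are hypothetical names):

```shell
# Path to the Terraform state, relative to the project root (assumed location).
TF_STATE="${TF_STATE:-terraform/elasticsearch/terraform.tfstate}"

# Print a single Terraform output value by name.
tf_out() {
  terraform output -state="${TF_STATE}" "$1"
}
```

For example, `tf_out security_group` would print the security group ID. Newer Terraform releases may print string values in quotes; where available, the `-raw` flag prints the bare value instead.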

You can use Serverless framework to deploy all the Lambda functions.

Serverless uses SSM parameters as the source for the values below:

- Basic authentication username (basicAuthUsername)

- Basic authentication password (basicAuthPassword)

- AWS account ID (accountId)

So, you need to create these parameters before running the deploy command. See here for more information.
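One way to create the parameters is the AWS CLI `ssm put-parameter` command. The sketch below assumes the SSM parameter names match the names in the list above exactly; check serverless.yaml for the names and types the project actually reads. The `put_param` helper and any sample values are hypothetical.

```shell
# Create or update one SSM parameter.
# $1 = parameter name, $2 = value, $3 = type (String or SecureString).
put_param() {
  aws ssm put-parameter --name "$1" --value "$2" --type "$3" --overwrite
}
```

For example, `put_param basicAuthUsername <username> String` would store the username, and the same call with `basicAuthPassword` and `accountId` covers the other two values.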

Requirements: You need to compile the Lambda functions first; see the [Building] section.

To package the Lambda functions, upload the package to S3, deploy the functions and expose the endpoints through an API Gateway, run the following command from the go/src/go-todo-app directory:

$ serverless deploy

You can also specify the logical environment where you plan to deploy the functions by using the --stage parameter. If not specified, "dev" is used as the default.

$ serverless deploy --stage dev

Requirements: The log forwarder function needs a valid subnet, security group and Elasticsearch endpoint as parameters. You have to use the same values that Terraform returned as outputs after provisioning the Elasticsearch domain. See [Elasticsearch cluster].

Run the following command from the nodejs/log-forwarder directory to package the Lambda function, upload it to S3 and deploy it:

$ serverless deploy --endpoint `terraform output -state=./../../terraform/elasticsearch/terraform.tfstate es_endpoint` --securityGroup `terraform output -state=./../../terraform/elasticsearch/terraform.tfstate security_group` --subnet `terraform output -state=./../../terraform/elasticsearch/terraform.tfstate subnet_id` --stage dev