TokenUnify: Scalable Autoregressive Visual Pre-training with Mixture Token Prediction (Under review)

This repository contains the official implementation of the paper TokenUnify: Scalable Autoregressive Visual Pre-training with Mixture Token Prediction, including all experimental settings and source code used in our research. Theoretical proofs and further details are given in the paper.

To streamline the setup process, we provide a Docker image that can be used to set up the environment with a single command. The Docker image is available at:

docker pull registry.cn-hangzhou.aliyuncs.com/cyd_dl/monai-vit:v26

The datasets required for pre-training and segmentation are as follows:

| Dataset Type | Dataset Name | Description | URL |

|---|---|---|---|

| Pre-training Dataset | Large EM Datasets of Various Brain Regions | Fly brain dataset for pre-training | EM Pretrain Dataset |

| Segmentation Dataset | CREMI Dataset | Challenge on circuit reconstruction datasets | CREMI Dataset |

| Segmentation Dataset | AC3/AC4 | AC3/AC4 Dataset | Mouse Brain GoogleDrive |

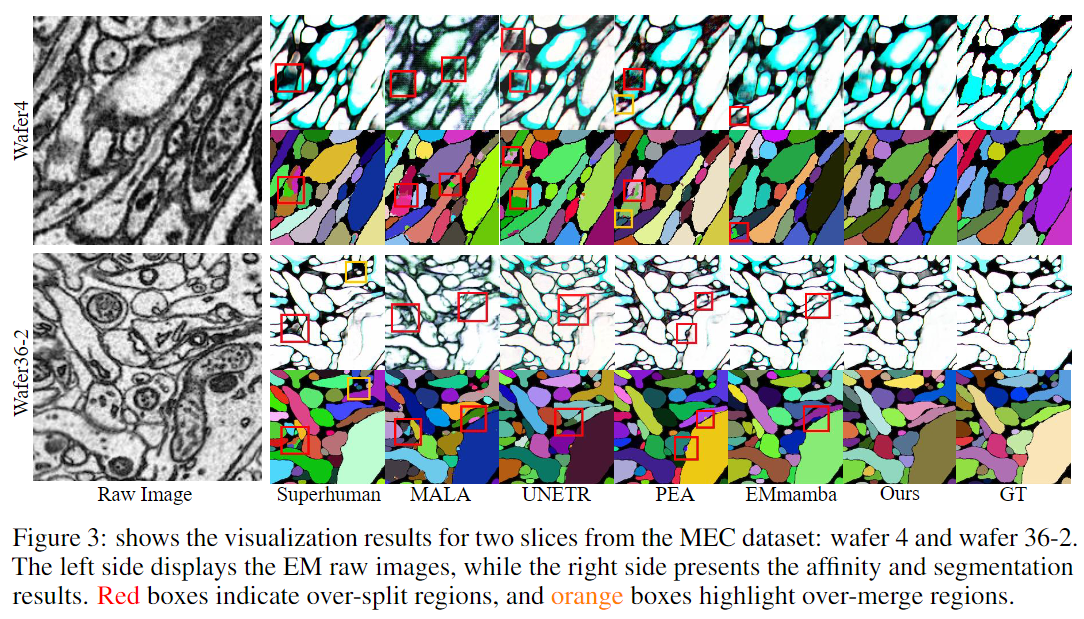

| Segmentation Dataset | MEC | A new neuron segmentation dataset | Rat Brain (Published after paper acceptance) |

To use the MEC dataset, please refer to the license provided here.

Usage Notes

- Non-commercial Use: Users do not have the rights to copy, distribute, publish, or use the data for commercial purposes or develop and produce products. Any format or copy of the data is considered the same as the original data. Users may modify the content and convert the data format as needed but are not allowed to publish or provide services using the modified or converted data without permission.

- Research Purposes Only: Users guarantee that the authorized data will only be used for their own research and will not share the data with third parties in any form.

- Citation Requirements: Research results based on the authorized data, including books, articles, conference papers, theses, policy reports, and other publications, must cite the data source according to citation norms, including the authors and the publisher of the data.

- Prohibition of Profit-making Activities: Users are not allowed to use the authorized data for any profit-making activities.

- Termination of Data Use: Users must terminate all use of the data and destroy the data (e.g., completely delete from computer hard drives and storage devices/spaces) upon leaving their team or organization or when the authorization is revoked by the copyright holder.

- Sample Source: Mouse MEC MultiBeam-SEM, Intelligent Institute Brain Imaging Platform (Wafer 4 at layer VI, wafer 25, wafer 26, and wafer 36 at layer II/III)

- Resolution: 8nm x 8nm x 35nm

- Volume Size: 1250 x 1250 x 125

- Annotation Completion Dates: 2023.12.11 (w4), 2024.04.12 (w36)

- Authors: Anonymous authors

- Copyright Holder: Anonymous Agency

- Chinese Name: 匿名机构

- English Name: Anonymous Agency
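
As a quick sanity check on the figures listed above, the physical extent of one MEC volume can be worked out from the stated resolution and volume size. The sketch below is illustrative only and is not part of the repository. It assumes the volume size is given in voxels and that its axes follow the same order as the resolution (x, y, z); the variable names are hypothetical.

```python
# Figures quoted from the dataset description above.
# Assumption: both tuples are ordered (x, y, z) and the volume size is in voxels.
voxel_size_nm = (8, 8, 35)
volume_shape_vox = (1250, 1250, 125)

# Physical extent along each axis, converted from nanometres to micrometres.
extent_um = tuple(n * s / 1000 for n, s in zip(volume_shape_vox, voxel_size_nm))

# Total number of voxels in one volume.
n_voxels = 1
for n in volume_shape_vox:
    n_voxels *= n

# Ratio of the z step to the in-plane step (how anisotropic the voxels are).
anisotropy = voxel_size_nm[2] / voxel_size_nm[0]

print("extent (um):", extent_um)
print("voxels:", n_voxels)
print("z/xy anisotropy:", anisotropy)
```

Under these assumptions each volume covers about 10 µm x 10 µm x 4.375 µm, and the z step is roughly 4.4 times coarser than the in-plane step, which is worth keeping in mind when choosing patch shapes or augmentations.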

bash src/run_mamba_mae_AR.sh

bash src/launch_huge.sh

bash src/run_mamba_seg.sh

- Open-sourced the core code

- Wrote the README for code usage

- Open-sourced the pre-training dataset

- Upload the pre-trained weights

- Release the private dataset MEC

If you find this code or dataset useful in your research, please consider citing our paper: