This is an extension for the Stable Diffusion web UI by AUTOMATIC1111 that generates prompts from simple text.

Currently, it can only generate prompts made up of Danbooru tags.

Copy https://github.com/toshiaki1729/stable-diffusion-webui-text2prompt.git into the "Install from URL" tab and click "Install".

Alternatively, to install manually, clone the repository into the extensions directory and restart the web UI.

From the web UI directory, run the following command to install:

git clone https://github.com/toshiaki1729/stable-diffusion-webui-text2prompt.git extensions/text2prompt

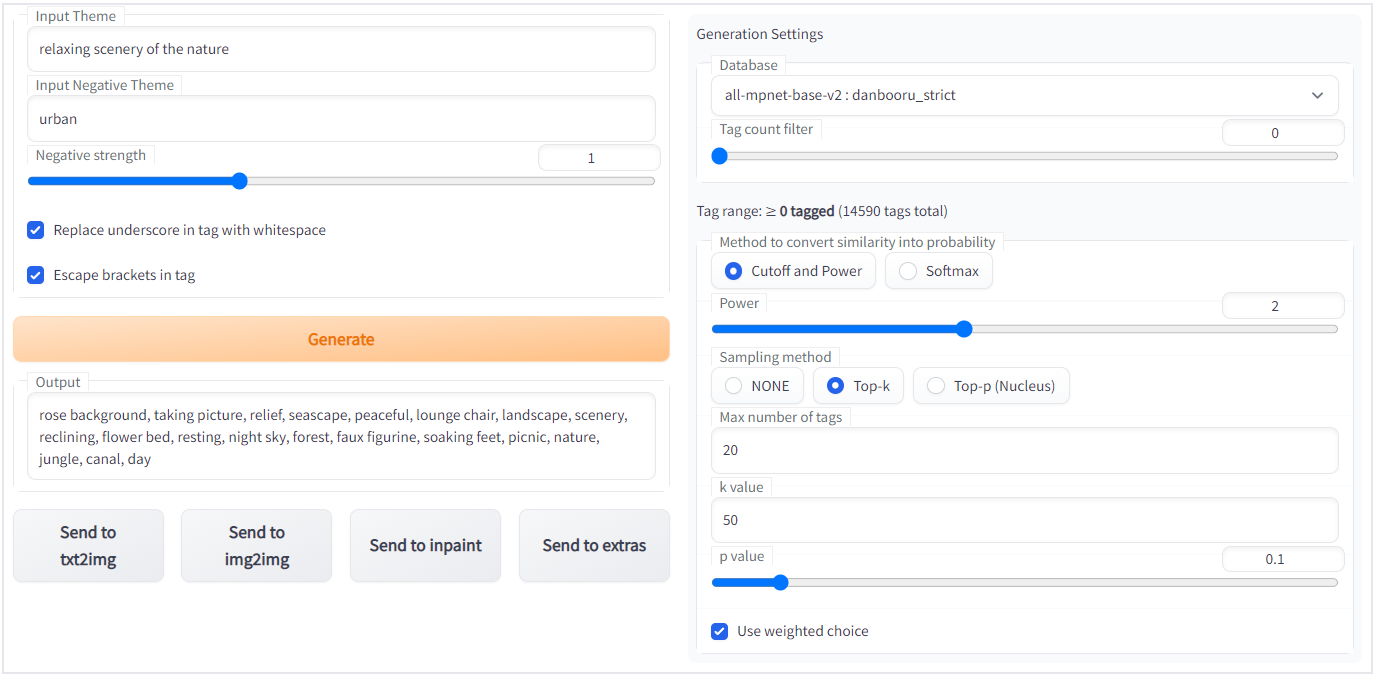

- Type some words into "Input Theme"

- Type some unwanted words into "Input Negative Theme"

- Push the "Generate" button

- For more creative results

  - increase "k value" or "p value"

  - disable "Use weighted choice"

  - use "Cutoff and Power" and decrease "Power"

  - or use "Softmax" (may generate unwanted tags more often)

- For stricter results

  - decrease "k value" or "p value"

  - use "Cutoff and Power" and increase "Power"

- You can enter very long sentences, but the more specific they are, the fewer results you will get.

It does nothing special:

- Danbooru tags and their descriptions are in the `data` folder

  - embeddings of the descriptions are generated from the wiki

  - all-mpnet-base-v2 and all-MiniLM-L6-v2 models are used to make embeddings from the text

- Tokenize your input text and calculate cosine similarity with all tag descriptions

- Choose some tags depending on their similarities
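The similarity step above can be sketched in a few lines. This is only a minimal illustration, not the extension's actual code: the function names `cosine_similarity` and `rank_tags` are hypothetical, and the 3-dimensional vectors are made-up stand-ins for the real embeddings that the sentence-embedding models would produce.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as "not similar to anything"
    return dot / (norm_a * norm_b)

def rank_tags(text_embedding, tag_embeddings):
    """Return (tag, similarity) pairs sorted from most to least similar."""
    scores = {
        tag: cosine_similarity(text_embedding, emb)
        for tag, emb in tag_embeddings.items()
    }
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

# Toy embeddings, invented for illustration only.
tag_embeddings = {
    "sky": [0.9, 0.1, 0.0],
    "ocean": [0.6, 0.6, 0.1],
    "sword": [0.0, 0.1, 0.9],
}
text_embedding = [1.0, 0.2, 0.0]

ranked = rank_tags(text_embedding, tag_embeddings)
for tag, score in ranked:
    print(f"{tag}: {score:.3f}")
```

Tags whose description embedding points in nearly the same direction as the input text end up at the top of the ranking and are the most likely candidates for the generated prompt.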

You can choose one of the following datasets if needed.

Download one of the following, unzip it, and put its contents into `text2prompt-root-dir/data/danbooru/`.

| Tag description | all-mpnet-base-v2 | all-MiniLM-L6-v2 |
|---|---|---|
| well filtered (recommended) | download (preinstalled) | download |
| normal (same as previous one) | download | download |
| full (noisy) | download | download |

well filtered: Tags are removed if their description includes the title of some work. These tags are heavily related to a specific work, meaning they are not "general" tags.

normal: Tags containing the title of a work, like tag_name(work_name), are removed.

full: All tags are included.

$$p_i = \sigma(\{s_n \mid n \in N\})_i = \dfrac{e^{s_i}}{\sum_{j \in N} e^{s_j}}$$
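As a rough illustration of the softmax formula, the sketch below turns a list of similarities $s_i$ into probabilities $p_i$. The function name `softmax` and the sample values are assumptions for this example. Subtracting the maximum before exponentiating is a common numerical-stability trick that leaves the result mathematically unchanged; it is not something the formula itself requires.

```python
import math

def softmax(similarities):
    """Convert similarity scores into probabilities that sum to 1."""
    m = max(similarities)  # shift for numerical stability (result is unchanged)
    exps = [math.exp(s - m) for s in similarities]
    total = sum(exps)
    return [e / total for e in exps]

# Made-up cosine similarities for three tags.
probs = softmax([0.8, 0.5, -0.1])
print(probs)
```

Because cosine similarities lie in a narrow range, the resulting probabilities stay fairly close to each other, which is consistent with the note that "Softmax" may generate unwanted tags more often.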

Yes, it doesn't sample like other "true" language models do, so "Filtering method" might be better.

- Find the smallest $N_p \subset N$ such that $\sum_{i \in N_p} p_i \geq p$

  - set $N_p = \emptyset$ at first, and add the index of $p_{(k)}$ to $N_p$, where $p_{(k)}$ is the $k$-th largest in $\{p_n \mid n \in N\}$, for $k = 1, 2, \ldots, n$, until the inequality holds.

- set
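The search for the smallest set $N_p$ described above can be sketched as a greedy loop over the probabilities in descending order. This is a minimal sketch under stated assumptions: the function name `top_p_indices` is hypothetical, and it only returns the selected indices; what happens to the probabilities afterwards is not covered here.

```python
def top_p_indices(probs, p):
    """Return indices of the smallest set whose probabilities sum to at least p.

    Indices are added from the largest probability downward until the
    cumulative sum reaches p (or all indices have been added).
    """
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    nucleus = []       # N_p starts as the empty set
    cumulative = 0.0
    for i in order:
        if cumulative >= p:
            break      # the inequality already holds, stop adding
        nucleus.append(i)
        cumulative += probs[i]
    return nucleus

# Made-up probabilities for three tags.
print(top_p_indices([0.1, 0.6, 0.3], 0.8))
print(top_p_indices([0.1, 0.6, 0.3], 0.5))
```

A larger "p value" lets more low-probability tags into the set, which matches the advice to increase it for more creative results.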

Finally, the tags will be chosen randomly while the number