TensorFlow implementation of Image Matching with Triplet Loss on the Tiny ImageNet dataset.

We want to create a model for the task of image matching on data from the Tiny ImageNet Visual Recognition Challenge dataset.

Image matching is a machine learning task that consists of finding similar images in a dataset. It can be used for a variety of applications, such as image retrieval, object recognition, and duplicate detection.

We will implement a distance-based matching model based on *FaceNet: A Unified Embedding for Face Recognition and Clustering*, Schroff et al., 2015, which introduced a state-of-the-art technique for face matching. Note that we make the additional assumption that this technique generalizes to generic image matching.

This README is a short version of the PDF report located in the `report` directory, written as part of the coursework for the Computer Vision course of the MLMI at Cambridge. Note that I coded this whole project entirely from scratch.

- `hpt.py` is used to perform Hyperparameter Tuning (HPT)
- `train.py` is used to train a Feature Model (cf. Section 3.5, *Feature Model*)
- `evaluate_e2e_model.ipynb` is used to create and evaluate an `ImageMatcher` model

Create an environment with Python 3.10.8 and run the following command to install the required dependencies:

```bash
pip install -r requirements.txt
```

The Tiny ImageNet Visual Recognition Challenge dataset contains images from 200 different classes. Each image has a size of 64 × 64 pixels.

For the rest of the project, we will use a 70/20/10 ratio for the train/validation/test split.
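A 70/20/10 split can be sketched as a random permutation of sample indices cut at 70% and 90%. This is a minimal sketch, assuming the split is drawn over the 100,000 labelled Tiny ImageNet training images (200 classes × 500 images); `split_indices` is a hypothetical helper, and the project's actual split procedure (for instance a per-class stratified split) may differ.

```python
import numpy as np


def split_indices(n_samples: int, ratios=(0.7, 0.2, 0.1), seed: int = 0):
    """Shuffle sample indices and cut them into train/validation/test subsets."""
    if not np.isclose(sum(ratios), 1.0):
        raise ValueError("ratios must sum to 1")
    rng = np.random.default_rng(seed)  # seeded for reproducible splits
    indices = rng.permutation(n_samples)
    n_train = int(round(ratios[0] * n_samples))
    n_val = int(round(ratios[1] * n_samples))
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]  # the remainder (~10%) becomes the test set
    return train, val, test


# Assumption: the split is applied to the 100,000 labelled training images.
train_idx, val_idx, test_idx = split_indices(100_000)
print(len(train_idx), len(val_idx), len(test_idx))  # 70000 20000 10000
```

Splitting indices rather than images keeps the split cheap and lets the same seed reproduce it across training and evaluation runs.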

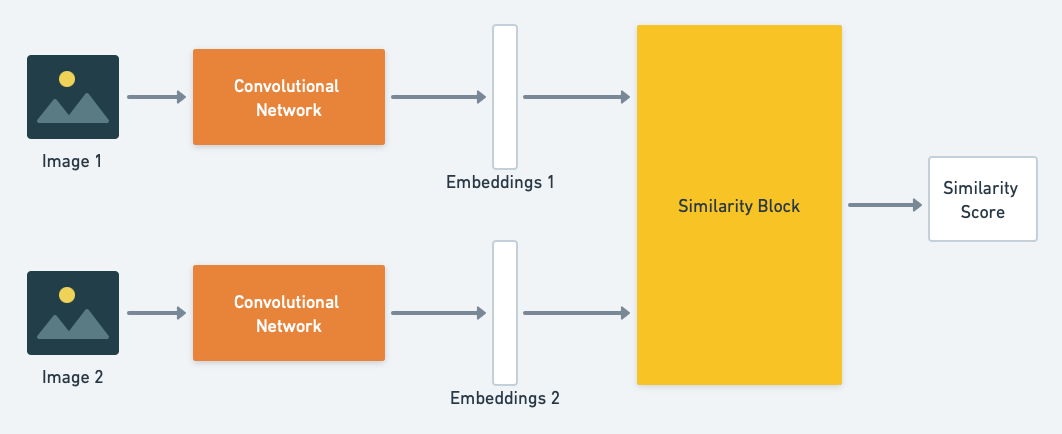

The Siamese Network, applied to one-shot learning in *Siamese Neural Networks for One-shot Image Recognition*, Koch et al., 2015, computes a similarity between two inputs. Image matching can therefore be implemented by outputting 1 (similar images) if and only if the output of the Siamese Network is greater than a given threshold.

As the name suggests, a Siamese network consists of two branches that share the same weights, which makes our distance measure symmetric. The branches are usually made of convolutional layers. We chose a convolutional architecture for two reasons:

- they have an inductive bias suited to the spatial structure of images (i.e. neighboring pixels are likely to be correlated)

- they have far fewer weights than an equivalent dense feed-forward layer, because each kernel is shared across all spatial positions.
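To make the parameter-count argument concrete, the arithmetic below compares a 3 × 3 convolution with 32 filters against a dense layer producing an output of the same size, for a 64 × 64 RGB image. It is an illustrative calculation, assuming "same" padding so that the convolution also outputs a 64 × 64 × 32 tensor; the helper names are hypothetical.

```python
def conv2d_params(kernel_size: int, in_channels: int, filters: int) -> int:
    # One (k x k x in_channels) kernel per filter, plus one bias per filter.
    return kernel_size * kernel_size * in_channels * filters + filters


def dense_params(n_inputs: int, n_outputs: int) -> int:
    # One weight per input/output pair, plus one bias per output unit.
    return n_inputs * n_outputs + n_outputs


# A 64x64 RGB image mapped to a 64x64x32 feature map ("same" padding assumed).
h, w, c_in, c_out = 64, 64, 3, 32
conv = conv2d_params(3, c_in, c_out)
dense = dense_params(h * w * c_in, h * w * c_out)
print(conv)   # 896
print(dense)  # 1610743808
```

The convolution needs fewer than a thousand parameters where the dense layer needs about 1.6 billion, which is why dense layers only appear after the convolutional backbone has reduced the image to a compact feature vector.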

For our model, we can use a standard convolutional architecture such as VGG-16, ResNet50 or Inception. This also lets us use transfer learning (starting from pretrained weights) to speed up training.
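A pretrained backbone can be obtained from Keras Applications with the classification head removed. This is a minimal sketch: `BACKBONES` and `build_backbone` are hypothetical names, and mapping the config value `"efficientnet"` to `EfficientNetB0` is an assumption. InceptionV3 is left out because Keras requires inputs of at least 75 × 75 for it, larger than Tiny ImageNet's 64 × 64. Each backbone also expects inputs prepared with its own `preprocess_input` function (e.g. `tf.keras.applications.resnet50.preprocess_input`).

```python
import tensorflow as tf

# Hypothetical mapping from a config name to a Keras Applications constructor.
BACKBONES = {
    "vgg16": tf.keras.applications.VGG16,
    "resnet50": tf.keras.applications.ResNet50,
    "efficientnet": tf.keras.applications.EfficientNetB0,  # assumed variant
}


def build_backbone(name: str, input_shape=(64, 64, 3), weights="imagenet",
                   trainable: bool = False) -> tf.keras.Model:
    """Return a convolutional backbone mapping an image to a pooled feature vector."""
    backbone = BACKBONES[name](
        include_top=False,      # drop the 1000-class ImageNet classifier head
        weights=weights,        # "imagenet" enables transfer learning, None = random init
        input_shape=input_shape,
        pooling="avg",          # global average pooling -> one vector per image
    )
    backbone.trainable = trainable  # freeze pretrained weights for a first training phase
    return backbone
```

Freezing the backbone at first and only training the new layers on top is a common transfer-learning recipe; the backbone can be unfrozen later for fine-tuning with a small learning rate.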

Diagram of a generic Siamese Network

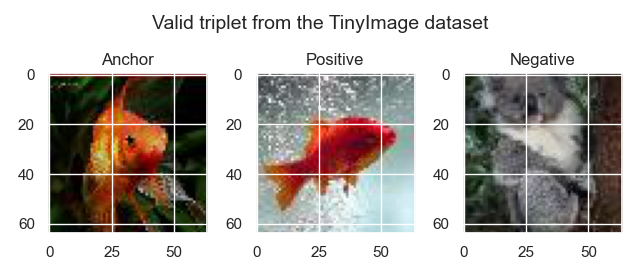

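To learn the embedding, we implement the Triplet Loss introduced in *FaceNet: A Unified Embedding for Face Recognition and Clustering*, Schroff et al., 2015.

Goal: We want to ensure that an image $x_i^a$ (*anchor*) of a specific class is closer to all other images $x_i^p$ (*positive*) of the same class than it is to any image $x_i^n$ (*negative*) of any other class.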

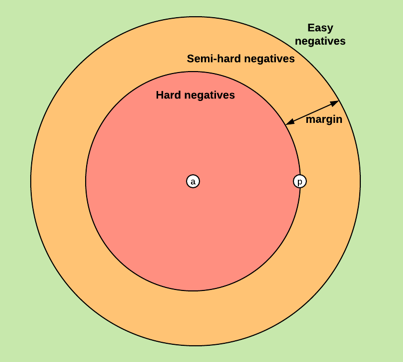

Mathematically speaking, we want:

$$\|f(x_i^a) - f(x_i^p)\|_2^2 + \alpha < \|f(x_i^a) - f(x_i^n)\|_2^2, \quad \forall \, (x_i^a, x_i^p, x_i^n) \in \mathcal T$$

with:

- $f$ the embedding function
- $\mathcal T$ the set of all valid triplets in the training set (note that whether a given example $x_i$ belongs to a triplet depends on its embedding, hence a priori on $f$)
- $\alpha$ the margin

Location of negatives with respect to a given anchor / positive pair in the 2D-space
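Following the FaceNet terminology, a negative can be located in three regions relative to an anchor/positive pair: *hard* (closer to the anchor than the positive), *semi-hard* (farther than the positive but within the margin $\alpha$), or *easy* (beyond the margin, contributing zero loss). The sketch below classifies a negative using squared L2 distances; `classify_negative` is a hypothetical helper, and how this project defines its "valid" triplets may differ.

```python
import numpy as np


def classify_negative(anchor, positive, negative, alpha: float = 1.0) -> str:
    """Categorize a negative w.r.t. an anchor/positive pair using squared L2 distances."""
    d_ap = np.sum((anchor - positive) ** 2)
    d_an = np.sum((anchor - negative) ** 2)
    if d_an < d_ap:
        return "hard"        # negative is closer to the anchor than the positive
    if d_an < d_ap + alpha:
        return "semi-hard"   # farther than the positive, but inside the margin
    return "easy"            # already satisfies the margin: zero loss


a, p = np.array([0.0, 0.0]), np.array([1.0, 0.0])  # d_ap = 1
print(classify_negative(a, p, np.array([0.5, 0.0])))  # hard
print(classify_negative(a, p, np.array([1.2, 0.0])))  # semi-hard
print(classify_negative(a, p, np.array([2.0, 0.0])))  # easy
```

Since easy negatives produce no gradient, training on hard or semi-hard triplets is what actually moves the embedding.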

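Hence, the triplet loss to minimize over the $N$ training triplets is:

$$\mathcal L = \sum_{i=1}^{N} \left[ \|f(x_i^a) - f(x_i^p)\|_2^2 - \|f(x_i^a) - f(x_i^n)\|_2^2 + \alpha \right]_+$$

where $[z]_+ = \max(z, 0)$.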

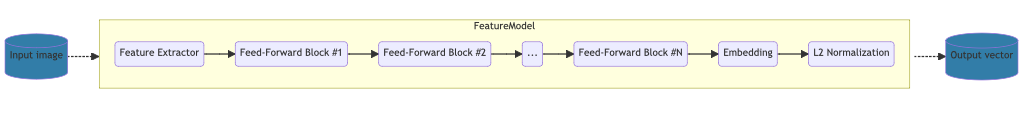
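The triplet loss can be written in a few TensorFlow operations on batches of anchor, positive and negative embeddings. This is a minimal sketch, not the project's implementation: `triplet_loss` is a hypothetical name, it sums over the batch as in the FaceNet formula (many implementations take the mean instead), and the default margin of 1 is an assumption matching the margin used later in this README.

```python
import tensorflow as tf


def triplet_loss(anchor, positive, negative, alpha: float = 1.0):
    """FaceNet-style triplet loss on embedding batches of shape (batch, dim)."""
    d_ap = tf.reduce_sum(tf.square(anchor - positive), axis=-1)  # ||f(a) - f(p)||^2
    d_an = tf.reduce_sum(tf.square(anchor - negative), axis=-1)  # ||f(a) - f(n)||^2
    # [z]_+ = max(z, 0): triplets already separated by the margin contribute 0.
    return tf.reduce_sum(tf.maximum(d_ap - d_an + alpha, 0.0))


a = tf.zeros((2, 2))
p = tf.constant([[1.0, 0.0], [1.0, 0.0]])
n = tf.constant([[2.0, 0.0], [0.5, 0.0]])  # one easy and one hard negative
print(float(triplet_loss(a, p, n)))  # 1.75
```

Only the second triplet contributes: its loss is 1 - 0.25 + 1 = 1.75, while the first one is already separated by more than the margin.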

The Feature Model takes an image as input and outputs its embedding representation.

The architecture of the model is the following:

Architecture of the full Feature Model
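Since the architecture figure is not reproduced here, the sketch below shows one plausible way to assemble a Feature Model from the fields of the YAML config (`embedding_dim`, `intermediate_ff_block_units`, `dropout`). It assumes a feed-forward block of Dense + ReLU + Dropout layers on top of a pooled backbone, followed by a linear projection normalized to unit L2 norm as in FaceNet; `build_feature_model` is a hypothetical function, and the actual model may be organized differently.

```python
import tensorflow as tf


def build_feature_model(backbone: tf.keras.Model, input_shape=(64, 64, 3),
                        embedding_dim: int = 128,
                        intermediate_ff_block_units=(512, 256),
                        dropout: float = 0.5) -> tf.keras.Model:
    """Stack a feed-forward block and an L2-normalized embedding layer on a backbone."""
    inputs = tf.keras.Input(shape=input_shape)
    x = backbone(inputs)  # pooled feature vector, shape (batch, channels)
    for units in intermediate_ff_block_units:  # assumed Dense + ReLU + Dropout block
        x = tf.keras.layers.Dense(units, activation="relu")(x)
        x = tf.keras.layers.Dropout(dropout)(x)
    x = tf.keras.layers.Dense(embedding_dim)(x)  # linear projection to the embedding
    # FaceNet constrains embeddings to the unit hypersphere: ||f(x)||_2 = 1.
    outputs = tf.keras.layers.UnitNormalization(axis=-1)(x)
    return tf.keras.Model(inputs, outputs, name="feature_model")
```

Normalizing the embeddings bounds squared distances to $[0, 4]$, so the margin $\alpha$ has a fixed scale regardless of the backbone.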

The Image Matcher has a Siamese Network architecture, since the model takes two images as input.

```mermaid
graph LR
    subgraph E2E Model
        input1[(Image 1)]
        input2[(Image 2)]
        FeatureModel1(Feature Model)
        FeatureModel2(Feature Model)
        output1[(Vector 1)]
        output2[(Vector 2)]
        Diff{"-"}
        Norm{"||.|| in L2"}

        input1 -.-> FeatureModel1 -.-> output1
        input2 -.-> FeatureModel2 -.-> output2
        output1 & output2 -.-> Diff -.-> Norm
        Norm -- < threshold --> res1(Images match)
        Norm -- > threshold --> res2(Images don't match)

        classDef data fill:#327da8;
        class input1,input2,output1,output2 data;
        classDef model fill:#e89b35;
        class FeatureModel1,FeatureModel2,Diff,Norm model;
    end
```

Architecture of the Image Matcher
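The diagram translates into a thin wrapper that applies one shared feature model to both images and thresholds the L2 distance between the two embeddings. This is a sketch only: `SimpleImageMatcher` is a hypothetical class, not the project's `ImageMatcher`, and `feature_model` can be any callable returning embeddings (a Keras model works, since its output converts to a NumPy array).

```python
import numpy as np


class SimpleImageMatcher:
    """Hypothetical Siamese wrapper: one shared feature model applied to both images."""

    def __init__(self, feature_model, threshold: float):
        self.feature_model = feature_model  # any callable: images -> embeddings
        self.threshold = threshold          # maximum distance for a match

    def distance(self, images_1, images_2):
        # Both branches reuse the same feature model, i.e. the same weights.
        emb_1 = np.asarray(self.feature_model(images_1))
        emb_2 = np.asarray(self.feature_model(images_2))
        return np.linalg.norm(emb_1 - emb_2, axis=-1)  # L2 norm of the difference

    def match(self, images_1, images_2):
        # Small distance means similar images.
        return self.distance(images_1, images_2) < self.threshold


# Toy usage with a flattening "feature model" standing in for a trained network.
flatten = lambda x: np.asarray(x).reshape(len(x), -1)
matcher = SimpleImageMatcher(flatten, threshold=1.0)
a, b = np.zeros((1, 2, 2)), np.ones((1, 2, 2))
print(matcher.distance(a, b), matcher.match(a, a), matcher.match(a, b))
```

Sharing a single model object between the two branches is what guarantees the weight sharing, and therefore the symmetry, of the Siamese architecture.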

For clarity, every architecture or hyperparameter change is made through a YAML configuration file. Here is an example of such a config:

```yaml
experiment_name: "efficientnet_with_ff_block_test"
seed: 0
image_augmentation: False
feature_extractor: "efficientnet"
embedding_dim: 128
intermediate_ff_block_units: [512, 256]
dropout: 0.5
epochs: 50
early_stopping_patience: 10
```

First, modify the `hpt_config.yaml` file and define the hyperparameters you would like to try. Note that all fields in the third section define grids, while the other parameters are fixed for all trials.

Run the following command to create an Optuna HPT study:

```bash
python hpt.py --hpt-config-filepath hpt_config.yaml
```

The script generates a `.db` file in `exp/hpt_studies`. This file stores the results of our hyperparameter tuning.

To visualize the results of our HPT study, open the `hpt_visualizer.ipynb` notebook, fill in the first cells accordingly and run all cells.

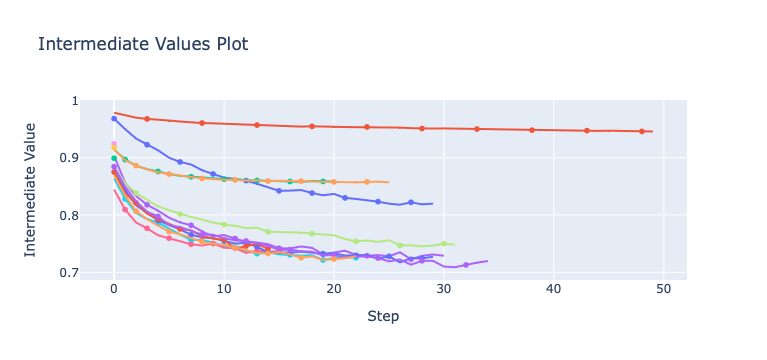

Example of a possible visualization for an HPT study

First, let's predict the similarity scores for images picked from a valid triplet of the training set. As the triplet has likely already been seen by the model, we expect the anchor-positive score to exceed the anchor-negative one by at least the default margin value of 1:

```python
output_1 = image_matcher.predict(anchor, positive)
output_1
# <tf.Tensor: shape=(1,), dtype=float32, numpy=array([-0.03183275], dtype=float32)>

output_2 = image_matcher.predict(anchor, negative)
output_2
# <tf.Tensor: shape=(1,), dtype=float32, numpy=array([-1.5196313], dtype=float32)>

margin = 1
assert output_1 > output_2 + margin
print("The model is a priori well trained.")
```

To conclude, the model is a priori well trained and captures the similarity signal at least for instances of our training set. Let's now evaluate the model's generalization power on the test set.

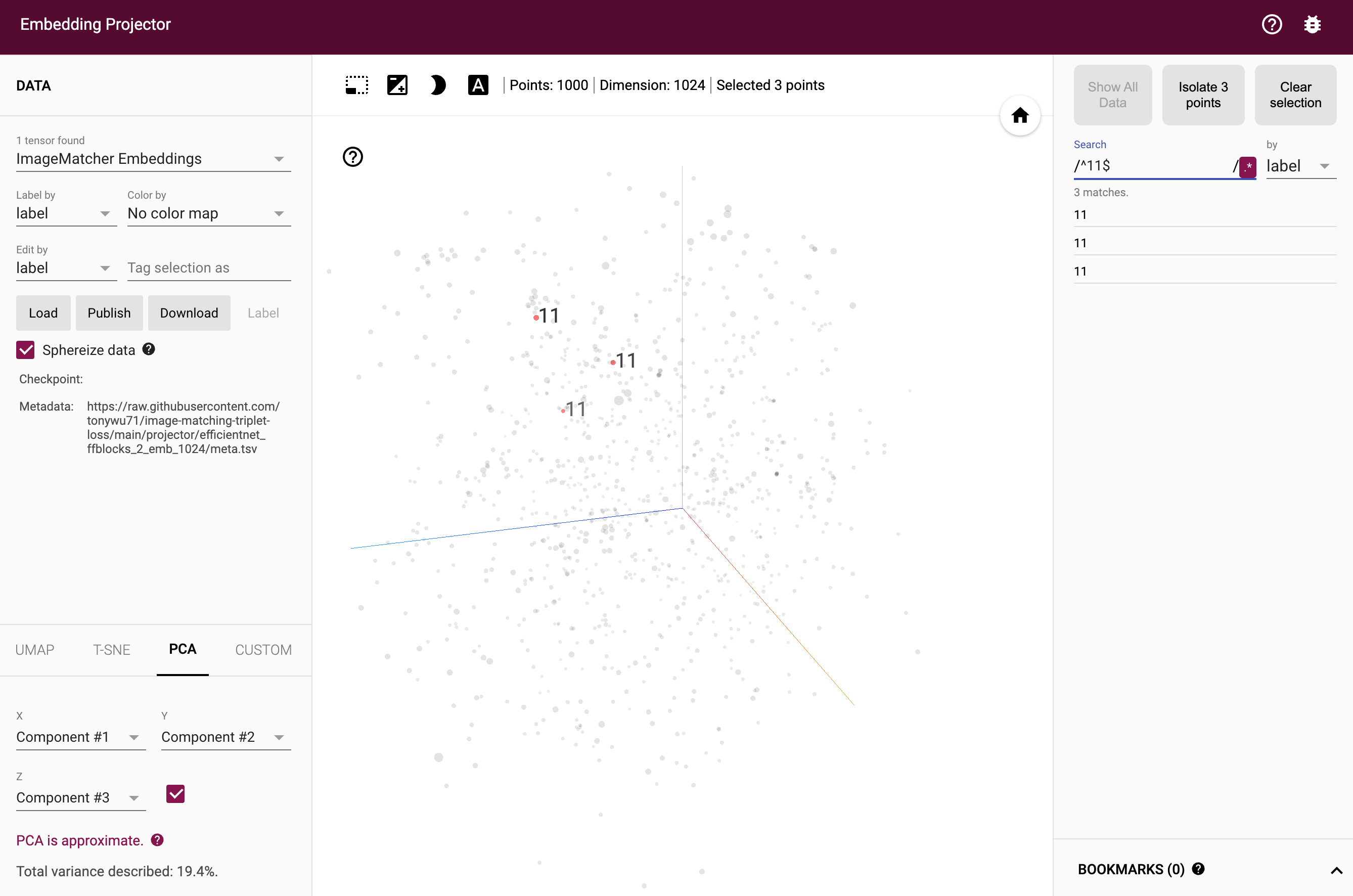
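A common way to evaluate a distance-based matcher is to choose the decision threshold on labelled validation pairs and then report accuracy on test pairs with that threshold fixed. The sketch below picks the threshold that maximizes pair accuracy; `best_threshold` is a hypothetical helper, the toy distances are made up, and it is not necessarily the metric shown in the figure above.

```python
import numpy as np


def best_threshold(distances, labels):
    """Pick the distance threshold that maximizes pair accuracy.

    distances: L2 distances between the two embeddings of each pair.
    labels: 1 if both images of the pair belong to the same class, else 0.
    """
    distances = np.asarray(distances, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    best_t, best_acc = None, -1.0
    for t in np.unique(distances):  # every observed distance is a candidate
        # Predict "match" for every pair whose distance is <= t.
        acc = np.mean((distances <= t) == labels)
        if acc > best_acc:
            best_t, best_acc = t, acc
    return best_t, best_acc


# Toy validation pairs: two matching pairs, then two non-matching ones.
t, acc = best_threshold([0.1, 0.3, 0.8, 1.2], [1, 1, 0, 0])
print(t, acc)  # 0.3 1.0
```

The selected threshold is then applied unchanged to the test pairs, e.g. `np.mean((test_distances <= t) == test_labels)`, so that the reported test accuracy is not tuned on the test set.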

TensorFlow Projector is a useful tool for exploring and visualizing high-dimensional data: it projects embeddings to 2D or 3D (e.g. with PCA, t-SNE or UMAP) and helps identify clusters, trends and patterns in the data. We therefore use TensorFlow Projector to visualize a 3D representation of our embeddings. If the model is trained correctly, similar images should be close to each other.

Screenshot of the TensorFlow Projector UI with the embeddings obtained with our approach
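The standalone Embedding Projector can load embeddings from tab-separated files: one file with one vector per row, and an optional metadata file with one label per row (a single-column metadata file has no header line). The sketch below writes both files from NumPy; `export_for_projector` and the file names are hypothetical choices.

```python
import numpy as np


def export_for_projector(embeddings, labels, vectors_path="vectors.tsv",
                         metadata_path="metadata.tsv"):
    """Write embeddings and labels as TSV files for the Embedding Projector's Load dialog."""
    np.savetxt(vectors_path, np.asarray(embeddings), delimiter="\t")  # one vector per row
    with open(metadata_path, "w", encoding="utf-8") as f:
        for label in labels:
            f.write(f"{label}\n")  # single-column metadata: no header line


# Toy usage: three 4-dimensional embeddings with their class labels.
emb = np.arange(12, dtype=float).reshape(3, 4)
export_for_projector(emb, ["cat", "dog", "cat"])
```

Coloring points by the label column in the Projector then makes it easy to check whether images of the same class form clusters.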

To use it yourself, open the following link in your internet browser.

We have implemented an end-to-end machine learning model that is able to tell whether two images are similar. This model has various applications, such as image retrieval, object recognition, and duplicate detection. The project is all the more interesting because the whole training process is data-agnostic, i.e. the Image Matcher can easily be retrained on another dataset.