A Toolkit for Generating Query Refinement Gold Standards

Online Video Tutorial

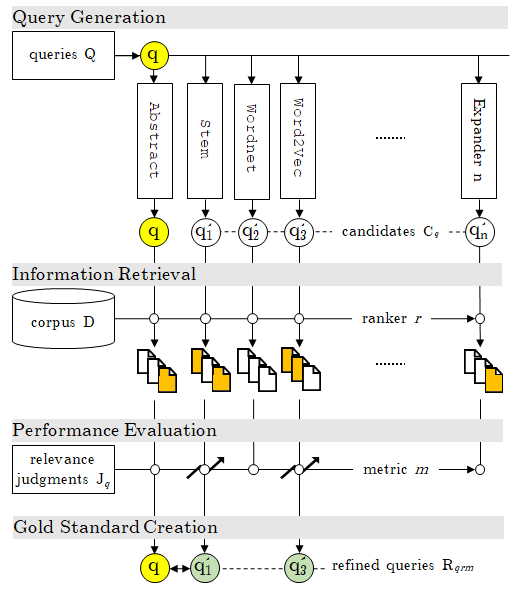

Workflow Diagram

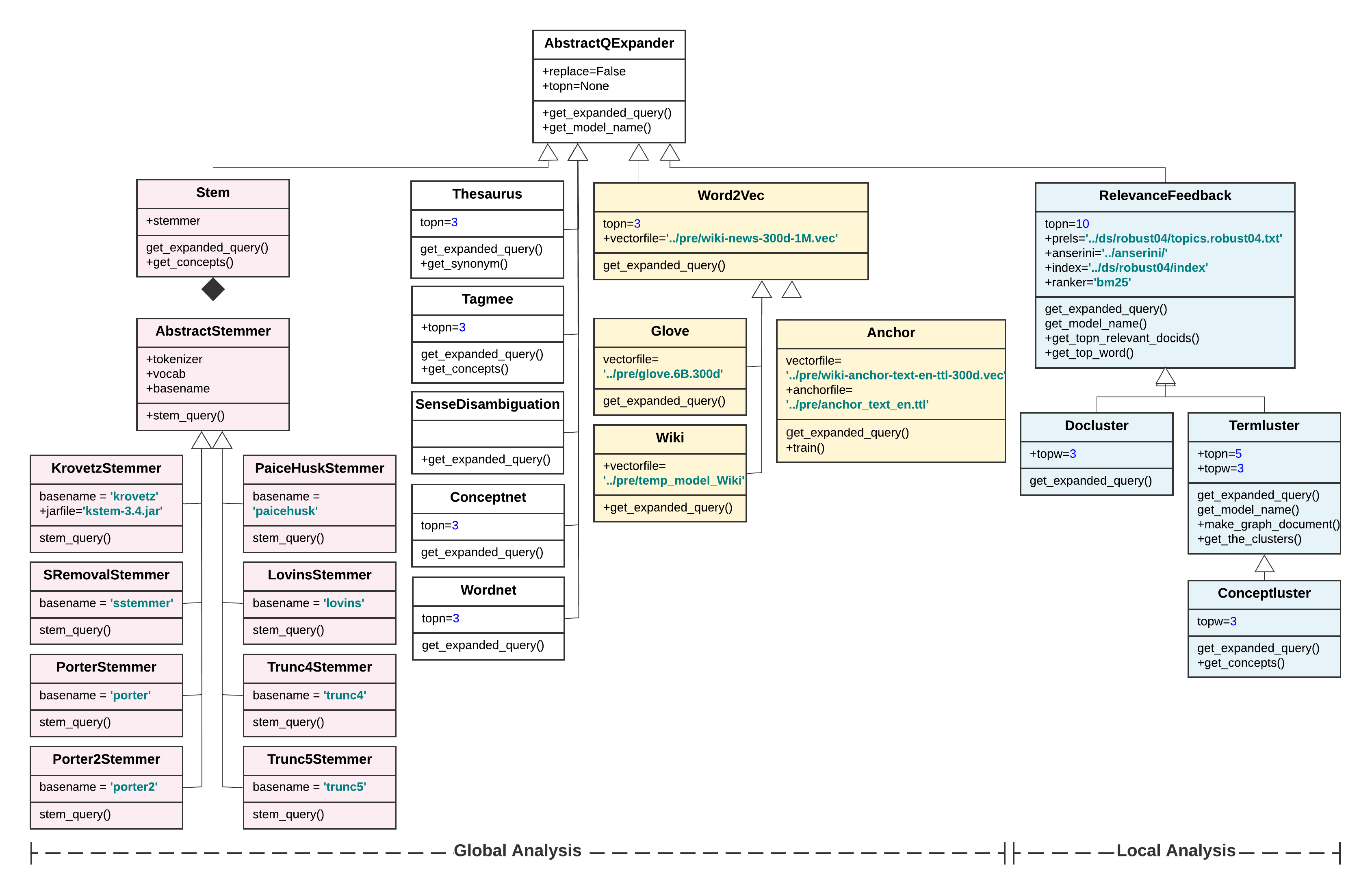

Class Diagram for Query Expanders in qe/.

The expanders are initialized by the Expander Factory in qe/cmn/expander_factory.py
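The factory idea can be pictured with a minimal sketch. Every name below (`BaseExpander`, `get_expanded_query`, `get_expanders`, and the two toy expanders) is hypothetical and only illustrates the pattern; the actual classes are in qe/expanders/ and qe/cmn/expander_factory.py and may be organized differently.

```python
from abc import ABC, abstractmethod


class BaseExpander(ABC):
    """Hypothetical base class: an expander maps a query q to a refined query q'."""

    def get_model_name(self):
        # Use the lowercased class name as the expander's identifier.
        return self.__class__.__name__.lower()

    @abstractmethod
    def get_expanded_query(self, q):
        ...


class Identity(BaseExpander):
    """Toy expander that returns the query unchanged."""

    def get_expanded_query(self, q):
        return q


class Lowercase(BaseExpander):
    """Toy expander that lowercases the query."""

    def get_expanded_query(self, q):
        return q.lower()


def get_expanders(names):
    """Hypothetical factory: instantiate the expanders requested by name."""
    registry = {cls().get_model_name(): cls for cls in (Identity, Lowercase)}
    return [registry[name]() for name in names]


expanders = get_expanders(["identity", "lowercase"])
refined = [e.get_expanded_query("Industrial Espionage") for e in expanders]
```

Keeping instantiation in one place means the main loop can iterate over a list of expanders without knowing how each one is configured.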

Overview

Codebases

qe/: (query expander) source code for the expanders E={q}.

+---qe

| | main.py

| +---cmn

| +---eval

| +---expanders

| | abstractqexpander.py

| | ...

| \---stemmers

| abstractstemmer.py

| ...

qs/: (query suggester) source code from cair for the supervised query refinement methods (suggesters), including seq2seq, acg(seq2seq + attn.), and hred-qs.

+---qs

| main.py

\---cair

Source Folders [empty]

The following source folders are to be populated with the original query dataset Q, the relevance judgments Jq, and the pre-trained models/embeddings.

pre/: (pre-trained models) source folder for pre-trained models and/or embeddings, including FastText and GloVe.

+---pre

| # anchor_text_en.ttl

| # gitkeep

| # glove.6B.300d.txt

| # temp_model_Wiki

| # temp_model_Wiki.vectors.npy

| # wiki-anchor-text-en-ttl-300d.vec

| # wiki-anchor-text-en-ttl-300d.vec.vectors.npy

| # wiki-news-300d-1M.vec

anserini/: source folder for anserini, indexes for the information corpora, and trec_eval.

+---anserini

| +---eval

| | \---trec_eval.9.0.4

| \---target

| +---appassembler

| | +---bin

ds/: (dataset) source folder for original query datasets, including Robust04, Gov2, ClueWeb09-B, and ClueWeb12-B13.

+---ds

| +---robust04

| | \---lucene-index.robust04.pos+docvectors+rawdocs

| +---clueweb09b

| | \---lucene-index.clueweb09b.pos+docvectors+rawdocs

| +---clueweb12b13

| | \---lucene-index.clueweb12b13.pos+docvectors+rawdocs

| +---gov2

| | \---lucene-index.gov2.pos+docvectors+rawdocs

Target Folders

The target folders are the output repo for the expanders, gold standard datasets, and benchmarks.

ds/qe/: output folder for expanders and the gold standard datasets.

+---ds

| +---qe

| | +---clueweb09b

| | | topics.clueweb09b.1-200.bm25.map.dataset.csv

| | | topics.clueweb09b.1-200.bm25.rm3.map.dataset.csv

| | | topics.clueweb09b.1-200.qld.map.dataset.csv

| | | topics.clueweb09b.1-200.qld.rm3.map.dataset.csv

| | +---clueweb12b13

| | | topics.clueweb12b13.201-300.bm25.map.dataset.csv

| | | topics.clueweb12b13.201-300.bm25.rm3.map.dataset.csv

| | | topics.clueweb12b13.201-300.qld.map.dataset.csv

| | | topics.clueweb12b13.201-300.qld.rm3.map.dataset.csv

| | +---gov2

| | | topics.gov2.701-850.bm25.map.dataset.csv

| | | topics.gov2.701-850.bm25.rm3.map.dataset.csv

| | | topics.gov2.701-850.qld.map.dataset.csv

| | | topics.gov2.701-850.qld.rm3.map.dataset.csv

| | \---robust04

| | | topics.robust04.bm25.map.dataset.csv

| | | topics.robust04.bm25.rm3.map.dataset.csv

| | | topics.robust04.qld.map.dataset.csv

| | | topics.robust04.qld.rm3.map.dataset.csv

ds/qe/eval/: output folder for the reports on performance of expanders and statistics about the gold standard datasets.

+---ds

| +---qe

| | \---eval

| | overall.stat.csv

ds/qs/: output folder for suggesters. This folder contains the benchmark results only and the trained models are ignored due to their sizes.

+---ds

| +---qs

| | +---all.topn5

| | +---clueweb09b.topn5

| | +---clueweb12b13.topn5

| | +---gov2.topn5

| | \---robust04.topn5

Prerequisites

anserini

cair (optional, needed for benchmark on suggesters)

python 3.7 and the following packages:

pandas, scipy, numpy, requests, urllib

networkx, community, python-louvain

gensim, tagme, bs4, pywsd, nltk [stem, tokenize, corpus]

For the full list, refer to environment.yml. A conda environment, namely ReQue, can be created and activated by the following commands:

$> conda env create -f environment.yml

$> conda activate ReQue

Pre-trained Models/Embeddings

- FastText

- GloVe

- Joint Embedding of Hierarchical Categories and Entities for Concept Categorization and Dataless Classification

Original Query Datasets

- Robust04 [corpus, topics, qrels]

- Gov2 [corpus, topics, qrels]

- ClueWeb09-B [corpus, topics, qrels]

- ClueWeb12-B13 [corpus, topics, qrels]

- Wikipedia Anchor Text

Installing

It is suggested to clone the repo and create a new conda environment along with the required packages using the YAML configuration file by the following commands:

$> git clone https://github.com/hosseinfani/ReQue.git

$> cd ReQue

$> conda env create -f environment.yml

$> conda activate ReQue

Anserini must be installed in anserini/ for indexing, information retrieval and ranking, and evaluation on the original query datasets. The documents in the corpus must be indexed, e.g., by the following commands for Robust04 (already available here), Gov2, ClueWeb09-B, and ClueWeb12-B13:

$> anserini/target/appassembler/bin/IndexCollection -collection TrecCollection -input Robust04-Corpus -index lucene-index.robust04.pos+docvectors+rawdocs -generator JsoupGenerator -threads 44 -storePositions -storeDocvectors -storeRawDocs 2>&1 | tee log.robust04.pos+docvectors+rawdocs &

$> anserini/target/appassembler/bin/IndexCollection -collection TrecwebCollection -input Gov2-Corpus -index lucene-index.gov2.pos+docvectors+rawdocs -generator JsoupGenerator -threads 44 -storePositions -storeDocvectors -storeRawDocs 2>&1 | tee log.gov2.pos+docvectors+rawdocs &

$> anserini/target/appassembler/bin/IndexCollection -collection ClueWeb09Collection -input ClueWeb09-B-Corpus -index lucene-index.cw09b.pos+docvectors+rawdocs -generator JsoupGenerator -threads 44 -storePositions -storeDocvectors -storeRawDocs 2>&1 | tee log.cw09b.pos+docvectors+rawdocs &

$> anserini/target/appassembler/bin/IndexCollection -collection ClueWeb12Collection -input ClueWeb12-B-Corpus -index lucene-index.cw12b13.pos+docvectors+rawdocs -generator JsoupGenerator -threads 44 -storePositions -storeDocvectors -storeRawDocs 2>&1 | tee log.cw12b13.pos+docvectors+rawdocs &

ReQue: Refining Queries: qe/

Queries are refined by all the expanders through qe/main.py, which accepts the following arguments:

--anserini: The path to the anserini library (default: ../anserini/);

--corpus: The name of the original query dataset whose queries are to be expanded and paired with the refined queries, if any, which could be one of {robust04, gov2, clueweb09b, and clueweb12b13}. Required;

--index: The corpus index, e.g. ../ds/robust04/lucene-index.robust04.pos+docvectors+rawdocs. Required;

--output: The output path for the gold standard dataset, e.g., ../ds/qe/robust04/. Required;

--ranker: The ranker name which could be any of the available ranker models in anserini(SearchCollection). ReQue has been tested for {bm25,qld} (default: bm25);

--metric: The evaluation metric name, which could be any metric from trec_eval. Currently, ReQue has been tested for mean average precision (default: map).
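The argument list above can be mirrored with `argparse`. This is a sketch of how such an interface could be declared, not the code in qe/main.py; the `build_parser` helper and the help strings are assumptions, while the flag names, defaults, and required flags follow the list above.

```python
import argparse


def build_parser():
    """Hypothetical parser reproducing the documented flags of qe/main.py."""
    parser = argparse.ArgumentParser(description="Sketch of the qe/main.py arguments")
    parser.add_argument("--anserini", default="../anserini/",
                        help="path to the anserini library")
    parser.add_argument("--corpus", required=True,
                        choices=["robust04", "gov2", "clueweb09b", "clueweb12b13"],
                        help="name of the original query dataset")
    parser.add_argument("--index", required=True, help="path to the corpus index")
    parser.add_argument("--output", required=True,
                        help="output path for the gold standard dataset")
    parser.add_argument("--ranker", default="bm25", help="ranker name, e.g. bm25 or qld")
    parser.add_argument("--metric", default="map", help="trec_eval metric name")
    return parser


# Parse an explicit argument list so the sketch runs without a command line.
args = build_parser().parse_args([
    "--corpus", "robust04",
    "--index", "../ds/robust04/lucene-index.robust04.pos+docvectors+rawdocs",
    "--output", "../ds/qe/robust04/",
])
```

Omitted optional flags fall back to the documented defaults, so `args.ranker` is `bm25` and `args.metric` is `map` here.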

Sample commands (the relative paths assume the working directory is qe/):

$> python -u main.py --anserini ../anserini/ --corpus robust04 --index ../ds/robust04/lucene-index.robust04.pos+docvectors+rawdocs --output ../ds/qe/robust04/ --ranker bm25 --metric map 2>&1 | tee robust04.log &

$> python -u main.py --anserini ../anserini/ --corpus robust04 --index ../ds/robust04/lucene-index.robust04.pos+docvectors+rawdocs --output ../ds/qe/robust04/ --ranker qld --metric map 2>&1 | tee robust04.log &

$> python -u main.py --anserini ../anserini/ --corpus gov2 --index ../ds/gov2/lucene-index.gov2.pos+docvectors+rawdocs --output ../ds/qe/gov2/ --ranker bm25 --metric map 2>&1 | tee gov2.log &

$> python -u main.py --anserini ../anserini/ --corpus gov2 --index ../ds/gov2/lucene-index.gov2.pos+docvectors+rawdocs --output ../ds/qe/gov2/ --ranker qld --metric map 2>&1 | tee gov2.log &

$> python -u main.py --anserini ../anserini/ --corpus clueweb09b --index ../ds/clueweb09b/lucene-index.cw09b.pos+docvectors+rawdocs --output ../ds/qe/clueweb09b/ --ranker bm25 --metric map 2>&1 | tee clueweb09b.log &

$> python -u main.py --anserini ../anserini/ --corpus clueweb09b --index ../ds/clueweb09b/lucene-index.cw09b.pos+docvectors+rawdocs --output ../ds/qe/clueweb09b/ --ranker qld --metric map 2>&1 | tee clueweb09b.log &

$> python -u main.py --anserini ../anserini/ --corpus clueweb12b13 --index ../ds/clueweb12b13/lucene-index.cw12b13.pos+docvectors+rawdocs --output ../ds/qe/clueweb12b13/ --ranker bm25 --metric map 2>&1 | tee clueweb12b13.log &

$> python -u main.py --anserini ../anserini/ --corpus clueweb12b13 --index ../ds/clueweb12b13/lucene-index.cw12b13.pos+docvectors+rawdocs --output ../ds/qe/clueweb12b13/ --ranker qld --metric map 2>&1 | tee clueweb12b13.log &

Gold Standard Dataset: ds/qe/

Path

The gold standard dataset for each original query dataset is generated in ds/qe/{original query dataset}/*.{ranker}.{metric}.dataset.csv.

File Structure

The columns in the gold standard dataset are:

- qid: the original query id in the input query dataset;

- abstractqueryexpansion: the original query q;

- abstractqueryexpansion.{ranker}.{metric}: the original evaluation value r_m(q, Jq) for a ranker r in terms of an evaluation metric m;

- star_model_count: number of refined queries 0 <= |R_qrm| <= |E| for the original query q that improve the original evaluation value;

- expander.{i}: the name of the expander e_i that revised the original query and improved the original evaluation value;

- metric.{i}: the evaluation value r_m(q'_i, Jq) of the refined query q'_i;

- query.{i}: the refined query q'_i;

where 0 <= i <= star_model_count. The refined queries are listed in descending order of their evaluation values.

Example

The gold standard dataset for Robust04 using the ranker bm25 and based on the evaluation metric map (mean average precision) is ds/qe/robust04/topics.robust04.bm25.map.dataset.csv and, for instance, includes:

311,Industrial Espionage,0.4382,1,relevancefeedback.topn10.bm25,0.489,industrial espionage compani bnd mr foreign intellig samsung vw mossad

where there is only 1 refined query for query #311. The original query Industrial Espionage is revised to industrial espionage compani bnd mr foreign intellig samsung vw mossad by relevancefeedback, and the map is improved from 0.4382 (original map) to 0.489.

Another instance is:

306,African Civilian Deaths,0.1196,0

that is, no available expander was able to revise query #306 such that the revised query improves the performance of the ranker bm25 in terms of map.
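Because each row carries a variable number of (expander, metric, query) triples, reading the file with the standard `csv` module is one straightforward option. The sketch below parses the two example rows above; the `parse_row` helper and the dictionary layout are assumptions made for illustration, and a real file may start with a header row that would need to be skipped.

```python
import csv
import io

# The two example rows quoted above, as they would appear in the CSV file.
SAMPLE = (
    "311,Industrial Espionage,0.4382,1,relevancefeedback.topn10.bm25,0.489,"
    "industrial espionage compani bnd mr foreign intellig samsung vw mossad\n"
    "306,African Civilian Deaths,0.1196,0\n"
)


def parse_row(fields):
    """Split one row into the original query part and its refined-query triples."""
    count = int(fields[3])  # star_model_count
    rest = fields[4:4 + 3 * count]
    refined = [
        {"expander": rest[k], "metric": float(rest[k + 1]), "query": rest[k + 2]}
        for k in range(0, len(rest), 3)
    ]
    return {
        "qid": fields[0],
        "query": fields[1],
        "original": float(fields[2]),
        "refined": refined,
    }


rows = [parse_row(fields) for fields in csv.reader(io.StringIO(SAMPLE)) if fields]
```

With this layout, a query that no expander improved simply has an empty `refined` list.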

Benchmark Supervised Query Refinement Method: qs/

Cair by Ahmad et al. sigir2019 has been used to benchmark the gold standard datasets for seq2seq, acg(seq2seq + attn.), and hred-qs.

qs/main.py accepts a positive integer n, the number of top refined queries (topn) to consider, and the name of the original query dataset. n may be set to a large number, e.g., n=100, to consider all the refined queries.

Sample commands for top-5 are:

$> python -u qs/main.py 5 robust04 2>&1 | tee robust04.topn5.log &

$> python -u qs/main.py 5 gov2 2>&1 | tee gov2.topn5.log &

$> python -u qs/main.py 5 clueweb09b 2>&1 | tee clueweb09b.topn5.log &

$> python -u qs/main.py 5 clueweb12b13 2>&1 | tee clueweb12b13.topn5.log &

By passing all as the name of the original query dataset, it is also possible to merge all the gold standard datasets and do the benchmark:

$> python -u qs/main.py 5 all 2>&1 | tee all.topn5.log &
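The role of n can be illustrated by how training pairs (q, q') might be selected for the suggesters. The `top_n_pairs` helper and the input layout are hypothetical; the sketch only assumes that the refined queries of each original query are already sorted by descending metric value, as in the gold standard files.

```python
def top_n_pairs(records, n):
    """Return (q, q') pairs using at most n refined queries per original query."""
    pairs = []
    for original, refined_queries in records:
        # refined_queries is assumed to be sorted by descending metric value,
        # so slicing keeps the n best refinements.
        for refined in refined_queries[:n]:
            pairs.append((original, refined))
    return pairs


records = [
    ("Industrial Espionage",
     ["industrial espionage compani bnd mr foreign intellig samsung vw mossad"]),
    ("African Civilian Deaths", []),  # no expander improved this query
]
pairs = top_n_pairs(records, n=5)
```

Original queries without any refined query contribute no pairs, and choosing n larger than the number of available refinements keeps all of them.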

Performance Evaluation on Generated Gold Standard Datasets for the TREC collections

Statistics: ds/qe/eval/

The statistics show that, for all the rankers, at least 1.44 refined queries exist on average for an original query, while the best performance is for Robust04 over bm25 with 4.25.

| dataset | avg \|Rqrm\| (bm25) | avg \|Rqrm\| (qld) |
|---|---|---|
| robust04 | 4.25 | 4.06 |
| gov2 | 2.49 | 2.15 |
| clueweb09b | 1.44 | 1.67 |
| clueweb12b13 | 1.81 | 1.57 |

The average map improvement rate is also reported, given the best refined query for each original query. As shown, the minimum improvement rate is greater than 100% for all the gold standard datasets, which means that even in the worst case the best refined query for an original query, on average, roughly doubled the performance of the ranker in terms of map. The gold standard datasets for clueweb09b have improvement rates close to or greater than 1000%, meaning that, on average, the best refined query improved each original query by roughly a factor of 10 in those datasets.

| dataset | average map improvement rate (%) (bm25) | average map improvement rate (%) (qld) |
|---|---|---|
| robust04 | 411.83 | 301.26 |
| gov2 | 104.31 | 101.77 |
| clueweb09b | 945.22 | 1,751.58 |
| clueweb12b13 | 196.77 | 159.38 |
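The two statistics can be sketched as follows. The exact computation behind ds/qe/eval/overall.stat.csv is not shown in this document, so the formulas are assumptions: the improvement rate is taken as the relative gain of the best refined query over the original value, and original queries with no refined query or with an original value of zero are left out of that average. The function names and the input layout are hypothetical.

```python
def average_refined_count(rows):
    """Average number of refined queries per original query (avg |Rqrm|)."""
    return sum(len(refined) for _, refined in rows) / len(rows)


def average_improvement_rate(rows):
    """Average relative gain (%) of the best refined query over the original value."""
    rates = []
    for original, refined in rows:
        # Assumption: skip queries without refinements and avoid dividing by zero.
        if refined and original > 0:
            best = max(refined)
            rates.append(100.0 * (best - original) / original)
    return sum(rates) / len(rates) if rates else 0.0


# (original map, [map of each refined query]) for the two Robust04 example rows.
rows = [
    (0.4382, [0.489]),  # query #311
    (0.1196, []),       # query #306
]
avg_count = average_refined_count(rows)
avg_rate = average_improvement_rate(rows)
```

Under these assumptions, query #311 alone contributes an improvement of about 11.6%, since (0.489 - 0.4382) / 0.4382 is roughly 0.116.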

Benchmarks: ds/qs/

For each gold standard dataset in ReQue, given the pairs {(q, q') | q ∈ Q, q' ∈ Rqrm}, the performance of three state-of-the-art supervised query refinement methods, namely seq2seq, acg(seq2seq + attn.), and hred-qs, is reported in terms of rouge-l and bleu after running the models for 100 epochs. As shown, in all cases acg(seq2seq + attn.) and hred-qs outperform seq2seq on both evaluation metrics, and in most cases acg(seq2seq + attn.), which uses the attention mechanism, outperforms hred-qs. Similar observations are reported by Ahmad et al., Context Attentive Document Ranking and Query Suggestion, and Dehghani et al., Learning to Attend, Copy, and Generate for Session-Based Query Suggestion.

Given a gold standard dataset built for an original query dataset, a ranker, and a metric, the full report can be found in ds/qs/{original query dataset}.topn5/topics.{original query dataset}.{ranker}.{metric}.results.csv. For instance, for ds/qe/robust04/topics.robust04.bm25.map.dataset.csv the benchmarks are in ds/qs/robust04.topn5/topics.robust04.bm25.map.results.csv.

| dataset | ranker | seq2seq rouge-l | seq2seq bleu | acg rouge-l | acg bleu | hred-qs rouge-l | hred-qs bleu |
|---|---|---|---|---|---|---|---|
| robust04 | bm25 | 25.678 | 17.842 | 37.464 | 23.006 | 43.251 | 29.509 |
| robust04 | qld | 25.186 | 16.367 | 37.254 | 23.519 | 36.584 | 25.241 |
| gov2 | bm25 | 16.837 | 12.007 | 42.461 | 28.243 | 37.68 | 25.595 |
| gov2 | qld | 9.615 | 6.802 | 40.205 | 23.002 | 19.528 | 11.994 |
| clueweb09b | bm25 | 20.311 | 11.323 | 43.945 | 22.574 | 38.674 | 27.707 |
| clueweb09b | qld | 28.04 | 17.394 | 43.17 | 23.727 | 34.543 | 21.472 |
| clueweb12b13 | bm25 | 17.102 | 13.562 | 56.734 | 28.639 | 30.13 | 21.504 |
| clueweb12b13 | qld | 10.439 | 6.986 | 59.534 | 29.982 | 13.057 | 8.159 |
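For readers unfamiliar with rouge-l, the metric is based on the longest common subsequence (LCS) between a generated query and a reference refined query. The sketch below is a generic textbook variant (whitespace tokens, balanced F-measure) and is not claimed to match the implementation used by cair; the function names and the sample strings are chosen for illustration only.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            # Extend the diagonal on a match, otherwise carry the best neighbor.
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate, reference):
    """LCS-based F1 between a candidate and a reference, in the range [0, 1]."""
    cand, ref = candidate.split(), reference.split()
    lcs = lcs_length(cand, ref)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(cand), lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


score = rouge_l_f1("industrial espionage compani",
                   "industrial espionage compani bnd")
```

Here the LCS has 3 tokens, giving precision 1.0 and recall 0.75. The table values look like percentages, so a score on this scale would be multiplied by 100 before comparison, which is also an assumption.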

Authors

Hossein Fani (1, 2), Mahtab Tamannaee (1), Fattane Zarrinkalam (1), Jamil Samouh (1), Samad Paydar (1) and Ebrahim Bagheri (1)

(1) Laboratory for Systems, Software and Semantics (LS3), Ryerson University, ON, Canada.

(2) School of Computer Science, Faculty of Science, University of Windsor, ON, Canada.

License

©2020. This work is licensed under a CC BY-NC-SA 4.0 license.

Acknowledgments

We benefited from the codebases of cair and anserini. We would like to express our gratitude to the authors of these repositories.