This repo provides the 1st-place solution (code and checkpoints) for the CVPR'23 LOVEU-AQTC challenge.

[LOVEU@CVPR'23 Challenge] [Technical Report]

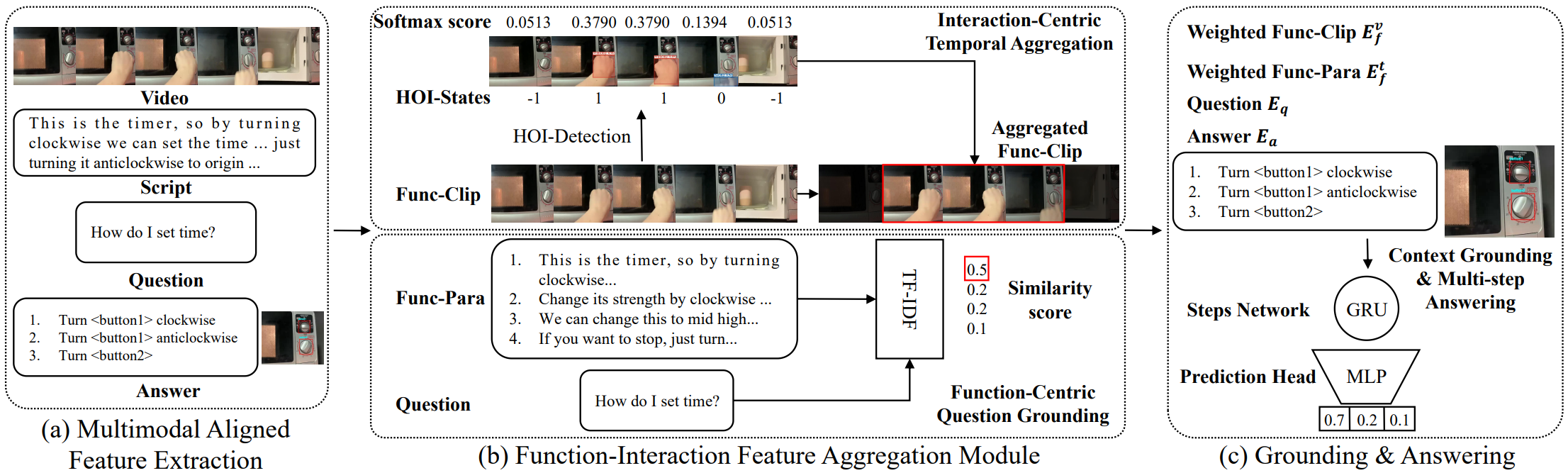

Model architecture. Please refer to our [Tech Report] for more details ;-)

(1) PyTorch. See https://pytorch.org/ for installation instructions. For example:

```bash
conda install pytorch torchvision torchtext cudatoolkit=11.3 -c pytorch
```

(2) PyTorch Lightning. See https://www.pytorchlightning.ai/ for installation instructions. For example:

```bash
pip install pytorch-lightning
```
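To confirm that both installs landed in the active environment, a stdlib-only check like the one below can help. `missing_packages` is a hypothetical helper, not part of this repo, and it looks up import names (`pytorch_lightning`), not pip package names (`pytorch-lightning`).

```python
import importlib
import importlib.util


def missing_packages(names):
    """Return the subset of top-level module `names` that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Import names, not pip/conda names: pytorch-lightning imports as `pytorch_lightning`.
    required = ["torch", "torchvision", "torchtext", "pytorch_lightning"]
    missing = missing_packages(required)
    if missing:
        print("missing:", ", ".join(missing))
    else:
        torch = importlib.import_module("torch")
        print("torch", torch.__version__, "| CUDA available:", torch.cuda.is_available())
```

`find_spec` only locates a module without importing it, so the check stays fast; `torch` is imported only once everything has been found.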

Download the training set (without ground-truth labels) by filling in the [AssistQ Downloading Agreement]. Prepare the testing set via [AssistQ test@23].

Before starting, encode the instructional videos, scripts, function-paras, and QAs; see `encoder/README.md` for instructions.

Select a config file and train, e.g.:

```bash
python train.py --cfg configs/model_egovlp_local.yaml
```

To run inference with a model, e.g.:

```bash
python inference.py --cfg configs/model_egovlp_local.yaml
```

Evaluation is performed after each training epoch. You can track the results with TensorBoard or simply read them from the terminal output.

To evaluate a trained checkpoint on a specific GPU, pass its path via `CKPT`, e.g.:

```bash
CUDA_VISIBLE_DEVICES=0 python inference.py --cfg configs/model_egovlp_local.yaml CKPT "model_egovlp_local.ckpt"
```
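The trailing `CKPT "model_egovlp_local.ckpt"` pair looks like a command-line override of a key in the YAML config, in the style of yacs' `CfgNode.merge_from_list`. This README does not document the mechanism, so treat the following as an illustrative sketch only: it uses plain dicts instead of yacs, and `merge_overrides` and the `TOY.LR` key are hypothetical.

```python
import ast
from copy import deepcopy


def merge_overrides(cfg, opts):
    """Return a copy of `cfg` with command-line KEY VALUE pairs applied.

    Hypothetical helper in the spirit of yacs' `merge_from_list`; dotted keys
    such as "TOY.LR" address nested dicts. Unknown keys raise KeyError.
    """
    if len(opts) % 2 != 0:
        raise ValueError("overrides must come in KEY VALUE pairs")
    merged = deepcopy(cfg)  # leave the caller's config untouched
    for key, raw in zip(opts[0::2], opts[1::2]):
        *parents, leaf = key.split(".")
        node = merged
        for name in parents:
            node = node[name]
        if leaf not in node:
            raise KeyError(f"unknown config key: {key}")
        try:
            # Numbers, booleans, lists, etc. are parsed as Python literals.
            value = ast.literal_eval(raw)
        except (ValueError, SyntaxError):
            # Anything else (e.g. a checkpoint file name) is kept as a string.
            value = raw
        node[leaf] = value
    return merged


# Toy config: only CKPT comes from the README; TOY.LR is made up.
cfg = {"CKPT": "", "TOY": {"LR": 1e-4}}
# The shell strips the quotes, so the script receives: CKPT model_egovlp_local.ckpt
merged = merge_overrides(cfg, ["CKPT", "model_egovlp_local.ckpt", "TOY.LR", "0.001"])
print(merged["CKPT"], merged["TOY"]["LR"])  # model_egovlp_local.ckpt 0.001
```

Parsing values with `ast.literal_eval` lets numeric overrides such as `0.001` keep their type while file names fall back to strings, and rejecting unknown keys catches typos that would otherwise be silently ignored.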

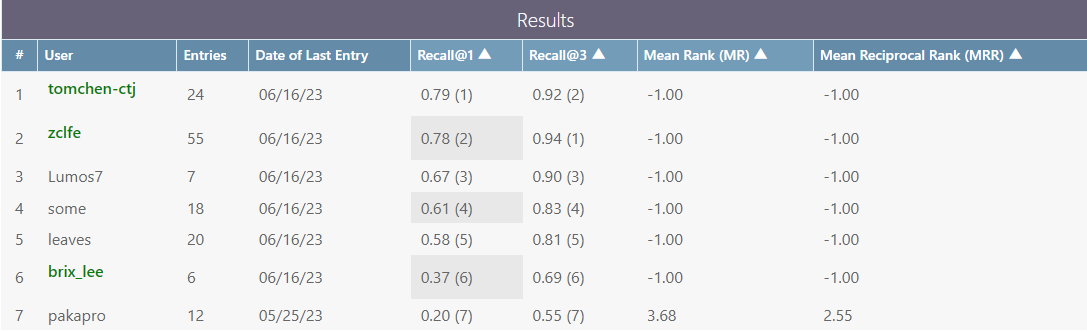

| Model | Video Encoder | Image Encoder | Text Encoder | HOI | R@1 | R@3 | CKPT |
|---|---|---|---|---|---|---|---|
| Function-centric (baseline) (`configs/baseline.yaml`) | ViT-L | ViT-L | XL-Net | N | 63.9 | 89.5 | |
| BLIP-Local (`configs/model_blip.yaml`) | BLIP-ViT | BLIP-ViT | BLIP-T | N | 67.5 | 88.2 | link |
| CLIP-Local (`configs/model_clip.yaml`) | CLIP-ViT | CLIP-ViT | XL-Net | Y | 67.9 | 88.9 | link |
| EgoVLP-Local (`configs/model_egovlp_local.yaml`) | EgoVLP-V* | EgoVLP-V* | EgoVLP-T | Y | 74.1 | 90.2 | link |
| EgoVLP-Global (`configs/model_egovlp_global.yaml`) | EgoVLP-V | CLIP-ViT | EgoVLP-T | Y | 74.8 | 91.5 | link |
| Linear Ensemble | | | | | 78.7 | 93.4 | |
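R@1 and R@3 are Recall@k: the percentage of ranking problems for which the ground-truth answer is among the model's top-k scored candidates. The README does not state which models the Linear Ensemble combines, with what weights, or whether scores are normalized first. The pure-Python sketch below assumes each row of scores is one ranking problem and that the ensemble is a plain weighted sum of raw scores; `recall_at_k`, `linear_ensemble`, and the toy scores are all hypothetical.

```python
from typing import List, Sequence


def recall_at_k(scores: Sequence[Sequence[float]], gt: Sequence[int], k: int) -> float:
    """Percentage of ranking problems whose ground-truth candidate is in the top k.

    scores[i][j] is the score of candidate j for problem i; gt[i] is the index
    of the correct candidate. Ties keep the lower candidate index first.
    """
    hits = 0
    for cand_scores, answer in zip(scores, gt):
        ranked = sorted(range(len(cand_scores)), key=lambda j: cand_scores[j], reverse=True)
        hits += int(answer in ranked[:k])
    return 100.0 * hits / len(gt)


def linear_ensemble(
    model_scores: Sequence[Sequence[Sequence[float]]], weights: Sequence[float]
) -> List[List[float]]:
    """Weighted sum of raw per-candidate scores across models (no normalization)."""
    fused = []
    for per_problem in zip(*model_scores):  # one score list per model for this problem
        # zip(*per_problem) groups the models' scores for each candidate.
        fused.append([sum(w * s for w, s in zip(weights, cand)) for cand in zip(*per_problem)])
    return fused


# Toy scores for two hypothetical models on two problems with three candidates each.
model_a = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2]]
model_b = [[0.6, 0.3, 0.1], [0.2, 0.7, 0.1]]
gt = [1, 1]
fused = linear_ensemble([model_a, model_b], weights=[0.5, 0.5])
for name, s in [("A", model_a), ("B", model_b), ("ensemble", fused)]:
    print(name, recall_at_k(s, gt, 1), recall_at_k(s, gt, 3))
# A 50.0 100.0
# B 50.0 100.0
# ensemble 100.0 100.0
```

In this toy case neither model alone ranks both correct answers first, but their averaged scores do, which is the kind of complementarity a linear ensemble exploits.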

Our code is based on Function-Centric, EgoVLP and Afformer. We sincerely thank the authors for their solid work and code release. Special thanks to Ming for his valuable contributions and unwavering support throughout this competition.