My Kaggle projects.

This is the Titanic - Machine Learning from Disaster competition.

After data cleaning (titanic_data_cleaning.ipynb), I approach the problem using three techniques:

- logistic regression using polynomial features (of order $2$) combined with PCA (logistic.ipynb),

- a single decision tree (tree.ipynb),

- a random forest (random_forest.ipynb).
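As a rough illustration of the first technique, the sketch below chains order-2 polynomial features, PCA and logistic regression in a scikit-learn pipeline. It is not the code from logistic.ipynb: the synthetic data, the standardization step, the number of PCA components and the `max_iter` value are all assumptions made for the example.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

# Synthetic stand-in for the cleaned Titanic features (hypothetical data).
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
y = (X[:, 0] * X[:, 1] + X[:, 2] > 0).astype(int)

model = make_pipeline(
    StandardScaler(),                                  # assumed preprocessing step
    PolynomialFeatures(degree=2, include_bias=False),  # 5 inputs -> 20 features
    PCA(n_components=10),                              # assumed number of components
    LogisticRegression(max_iter=1000),
)
model.fit(X, y)

# Accuracy on the training data itself, only to show the pipeline runs end to end.
train_accuracy = model.score(X, y)
```

The decision tree and random forest variants would presumably swap the last step for `DecisionTreeClassifier` or `RandomForestClassifier`, usually without the polynomial and PCA steps.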

This is the Natural Language Processing with Disaster Tweets competition.

The file tweets_preprocessing.ipynb contains data cleaning and processing, in particular:

- inferring location country from location data and from the text of the tweet,

- tweet text cleaning and tokenization,

- applying word embeddings using the GloVe dataset glove.twitter.27B.
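The cleaning, tokenization and embedding steps listed above can be sketched with the standard library alone. This is a minimal illustration, not the code from tweets_preprocessing.ipynb: the regular expressions, the helper names (`clean_and_tokenize`, `parse_glove_lines`, `embed`) and the three-dimensional toy vectors are assumptions. The only detail taken from GloVe is the text layout of one word followed by its space-separated vector components per line.

```python
import re

# Assumed cleaning rules: drop URLs and @mentions, keep lowercase word characters.
URL_RE = re.compile(r"https?://\S+")
MENTION_RE = re.compile(r"@\w+")
TOKEN_RE = re.compile(r"[a-z0-9']+")


def clean_and_tokenize(text):
    """Lowercase a tweet, remove URLs and @mentions, and split into tokens."""
    text = URL_RE.sub(" ", text.lower())
    text = MENTION_RE.sub(" ", text)
    return TOKEN_RE.findall(text)


def parse_glove_lines(lines):
    """Parse 'word v1 v2 ...' lines (the GloVe text layout) into a dict."""
    vectors = {}
    for line in lines:
        parts = line.rstrip().split(" ")
        vectors[parts[0]] = [float(v) for v in parts[1:]]
    return vectors


def embed(tokens, vectors, dim):
    """Map each token to its vector; unknown tokens get a zero vector."""
    zero = [0.0] * dim
    return [vectors.get(tok, zero) for tok in tokens]


# Toy vectors standing in for a real GloVe file (hypothetical values).
toy_lines = ["fire 0.1 0.2 0.3", "forest 0.4 0.5 0.6"]
vectors = parse_glove_lines(toy_lines)

tokens = clean_and_tokenize("Forest FIRE near @LaRonge! http://t.co/abc #wildfire")
embedded = embed(tokens, vectors, dim=3)
```

With a real embedding file, `parse_glove_lines` could be given the open file object instead of `toy_lines`. Mapping out-of-vocabulary tokens to zeros is one simple choice among several.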

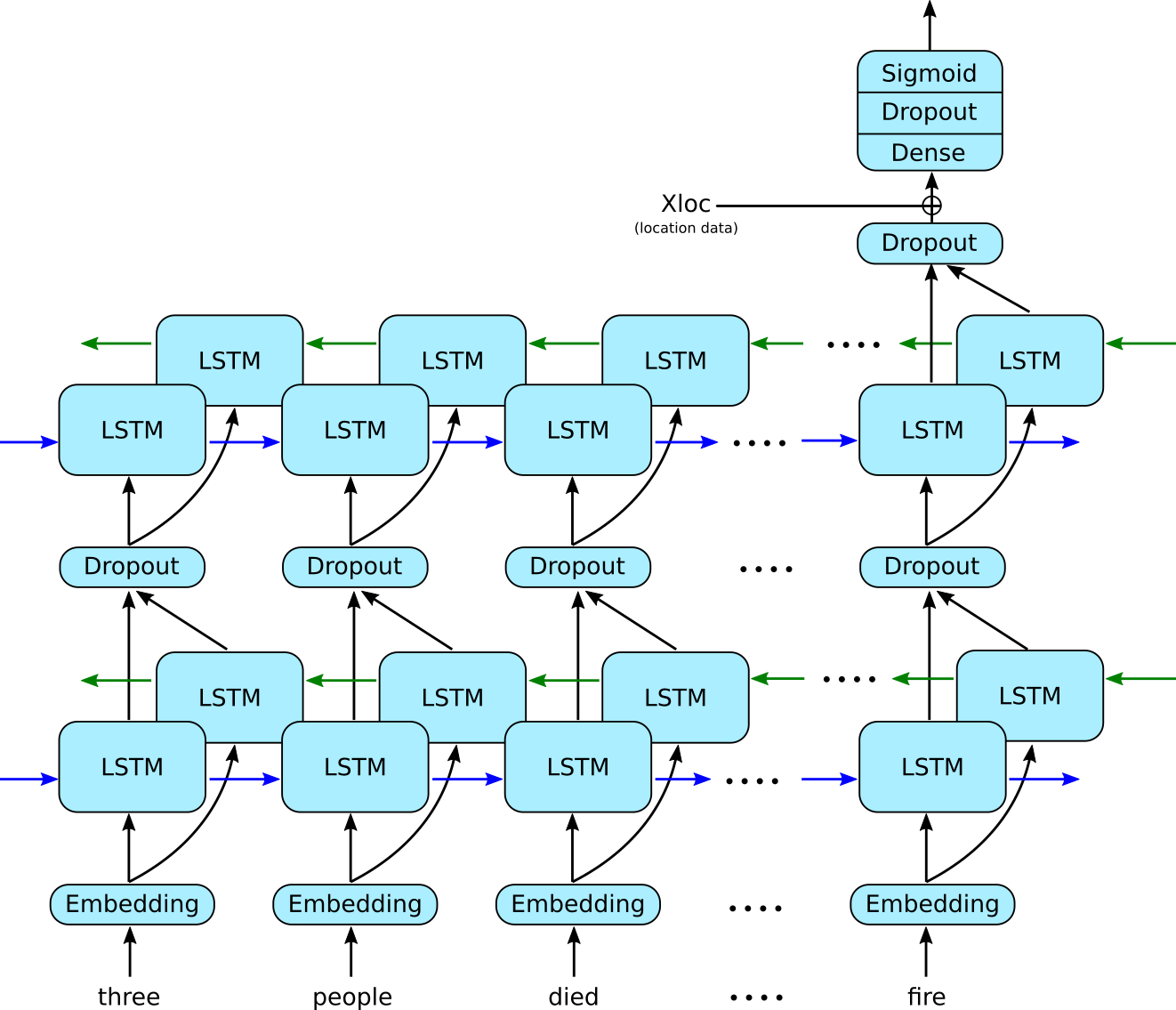

The cleaned data is subsequently fed into a recurrent neural net with two bidirectional LSTM layers shown in the schamtic picture below.

This can be found in the notebook lstm.ipynb.
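For orientation, a network with two stacked bidirectional LSTM layers might look like the Keras sketch below. The framework, sequence length, embedding dimension, layer widths and training settings are all assumptions, and the actual model in lstm.ipynb may differ. The input is assumed to be a sequence of pre-computed word vectors.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

MAX_LEN = 30    # assumed number of tokens per tweet after padding
EMBED_DIM = 25  # assumed word-vector dimension

model = tf.keras.Sequential([
    layers.Input(shape=(MAX_LEN, EMBED_DIM)),
    # First bidirectional layer returns the full sequence for the next LSTM.
    layers.Bidirectional(layers.LSTM(64, return_sequences=True)),
    # Second bidirectional layer returns only its final state.
    layers.Bidirectional(layers.LSTM(32)),
    # Single sigmoid unit: probability that the tweet describes a real disaster.
    layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# Forward pass on a dummy batch of two zero-padded tweets.
dummy_batch = np.zeros((2, MAX_LEN, EMBED_DIM), dtype="float32")
probabilities = model(dummy_batch)
```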