This repo holds the project presented in the paper Face Reconstruction with Variational Autoencoder and Face Masks at the ENIAC 2021 conference.

For more details on how the ablation study was done and how to use the code, see the Colab notebook.

The final trained model for each hypothesis is available here.

For environment compatibility, check out `environment.yml`.

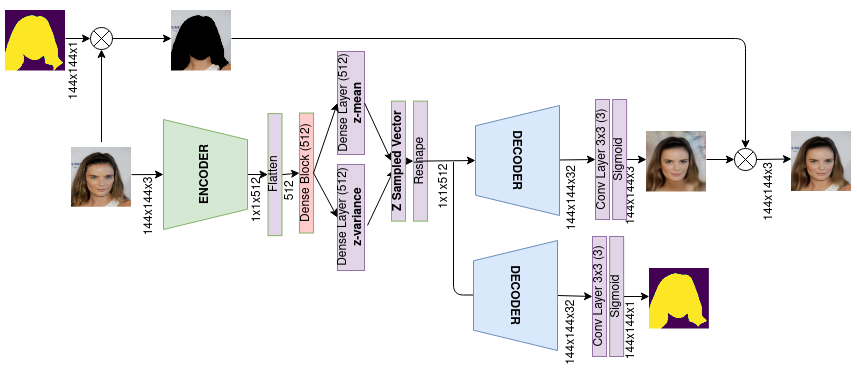

The proposed architecture has a common VAE structure plus an additional decoder branch that predicts face masks. During training, face masks are also used as labels: they replace the background of the reconstructed image so that the loss function is applied only to the face pixels. In prediction mode, on the other hand, the background replacement is done directly with the predicted mask, so no extra input besides the image is required.
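The background replacement described above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the code from this repo: the function names `replace_background` and `masked_l1_loss` are hypothetical, the replacement background is assumed to come from the input image, and the L1 loss stands in for whichever loss a given hypothesis uses.

```python
import numpy as np


def replace_background(reconstruction, original, mask):
    """Keep reconstructed pixels where mask is 1 and original pixels where it is 0.

    reconstruction, original: float arrays of shape (H, W, 3).
    mask: float array of shape (H, W, 1) with values in [0, 1]
          (a label mask during training, a predicted mask at prediction time).
    """
    return mask * reconstruction + (1.0 - mask) * original


def masked_l1_loss(reconstruction, original, mask):
    """L1 loss after background replacement (assumed loss, for illustration).

    Background pixels of the composited image equal the original ones,
    so only the face pixels contribute to the error.
    """
    composited = replace_background(reconstruction, original, mask)
    return np.abs(composited - original).mean()
```

With this formulation, training would pass the label mask to `masked_l1_loss`, while prediction would call `replace_background` with the mask produced by the extra decoder branch.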

This project used the CelebA dataset.

The solution uses face masks during training, although they are not required during prediction. To extract the masks, I used the face-parsing.PyTorch project. The mask data is available for download here.

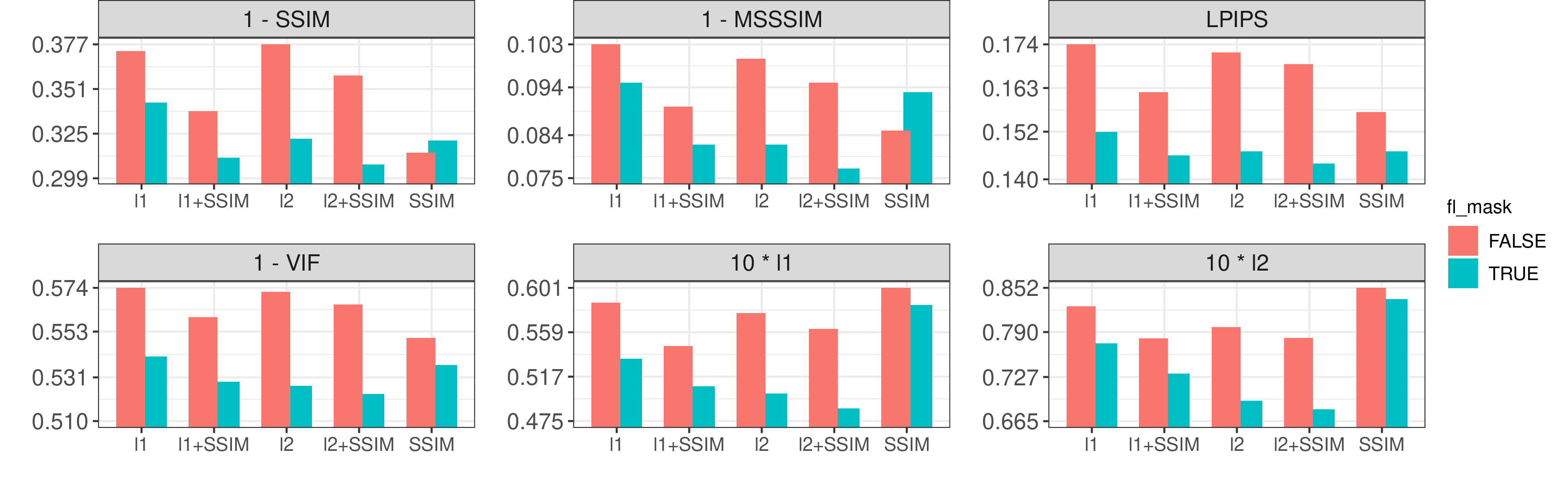

The figure below shows that the mask-based architecture played a meaningful role for all metric and loss combinations except the SSIM standalone cases (H5/H6), where "without face masks" performed better on the SSIM and MS-SSIM metrics, which is counter-intuitive. However, if we regard LPIPS as a better perceptual metric than the SSIM ones, the hypothesis with face masks also worked better on H5/H6.
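For readers unfamiliar with the SSIM family of metrics, the sketch below computes a simplified, single-window SSIM between two grayscale images. It is an illustration only: the standard metric averages this quantity over local windows, MS-SSIM repeats it at several scales, and the function names `to_grayscale` and `global_ssim` are hypothetical and not taken from this repo. Images are assumed to be floats in [0, 1].

```python
import numpy as np


def to_grayscale(image):
    """Convert an RGB image of shape (H, W, 3) to luma with ITU-R BT.601 weights."""
    weights = np.array([0.299, 0.587, 0.114])
    return image @ weights


def global_ssim(x, y, data_range=1.0):
    """Single-window SSIM over the whole image (simplified, not the windowed metric)."""
    # Stabilizing constants from the original SSIM definition.
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator
```

Because the score depends only on means, variances, and covariance of intensities, it compares structure rather than per-pixel color, which is one way to read why SSIM and LPIPS can rank the same hypotheses differently.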

SSIM standalone cases appeared less sensitive to the background information, while the addition of face masks was much more effective when

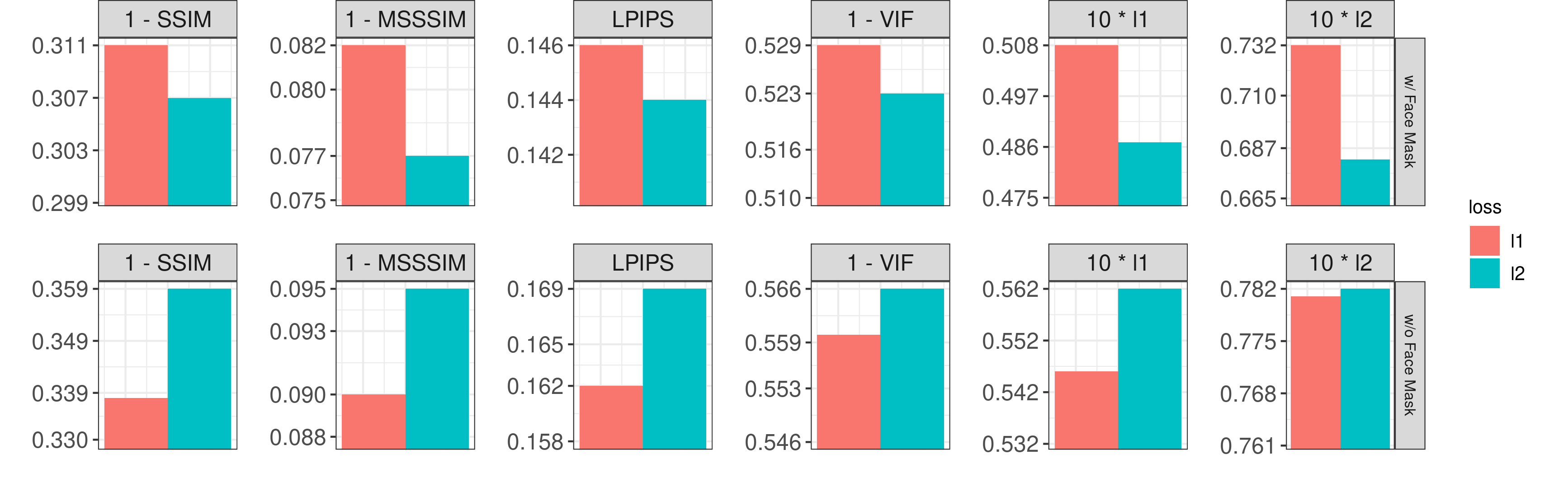

Looking at the scenario with face masks, we were surprised how well

An interesting observation is that

SSIM is known to have been designed for grayscale images, and it may present problems with color images. To improve the training process with the SSIM perceptual loss, we add a

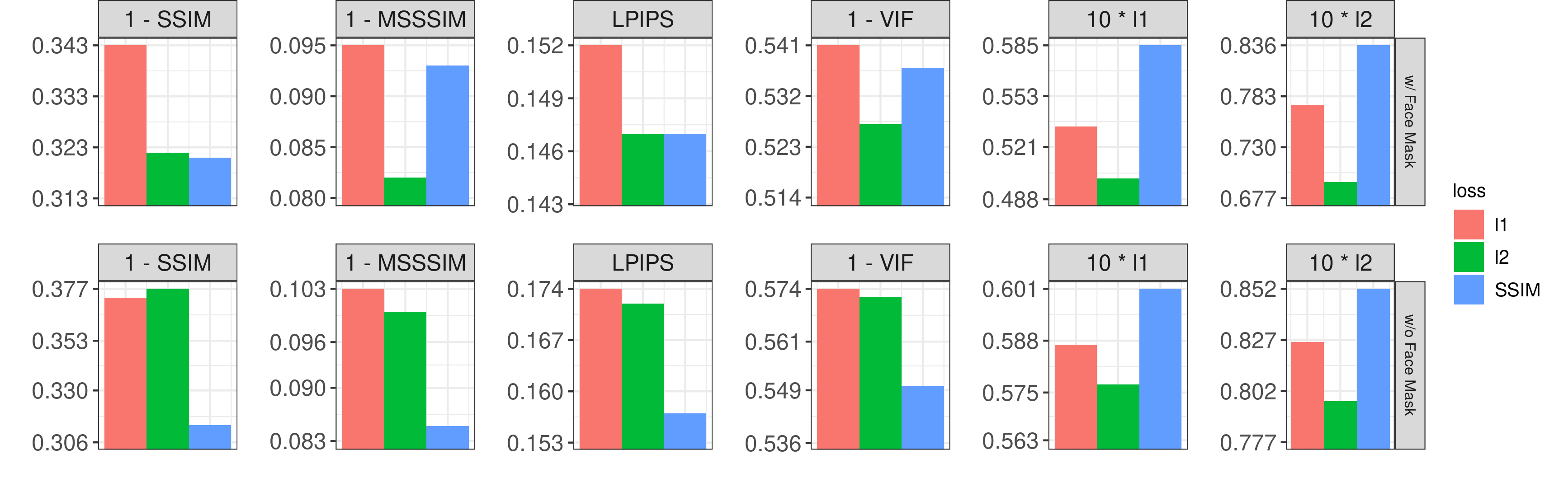

The figure below shows two very distinct scenarios. Using the mask-based approach,

This is probably a case where the higher sensitivity of

We had the dominant hypothesis

Without masks, we didn't have a dominant hypothesis, for perceptual metrics,

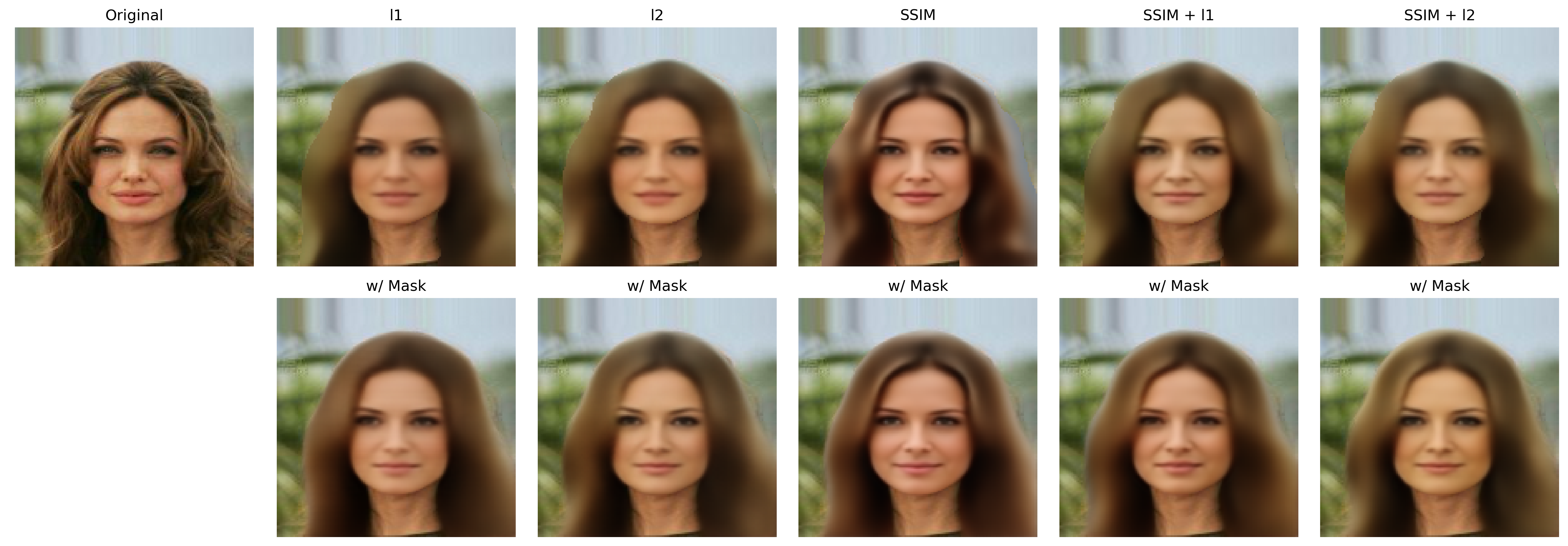

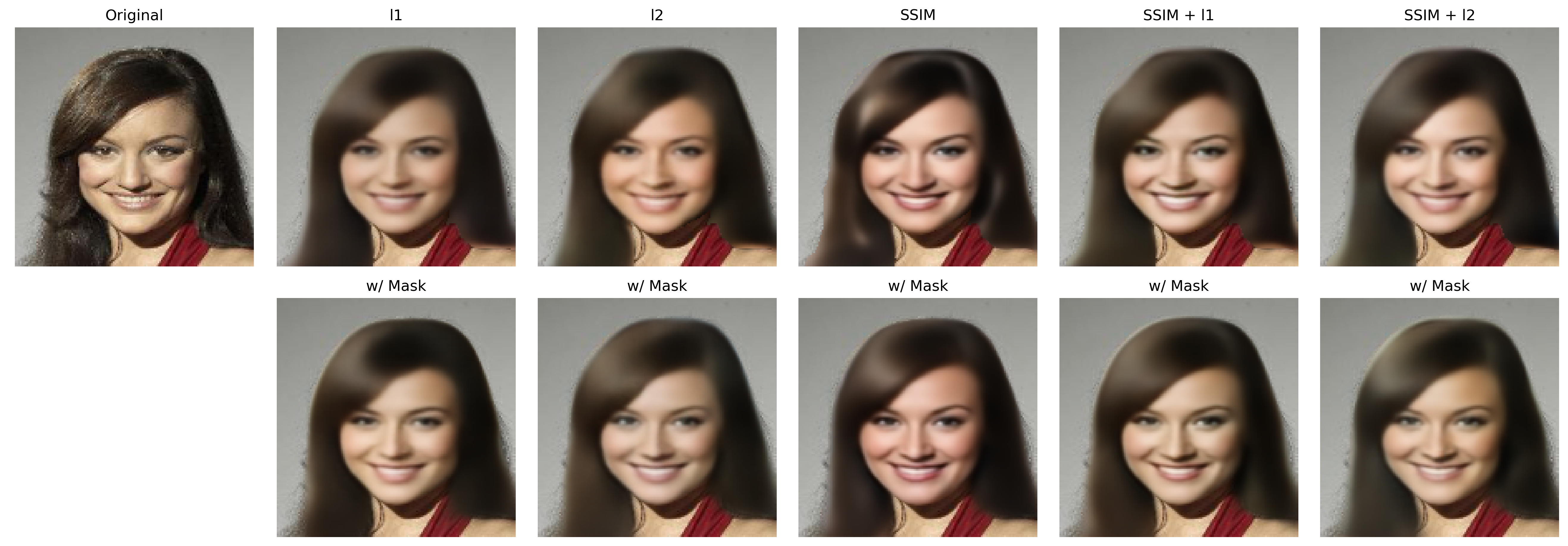

All images presented here are unseen samples from the testing set. Checking the hypothesis outcomes, we noticed some patterns. In the first figure below, we see blurred reconstructed hair, which is a recurrent issue with VAEs. Still, the hypotheses with face masks produced slightly sharper lines in the hair, especially in the SSIM standalone column.

The SSIM standalone clearly had the crispest reconstructed samples; however, its face colors are the most distant from the original image. Although they appear brighter, they moved away from the original yellowish color. The hypothesis of SSIM +

In the next figure, we noticed crisper samples in the SSIM hypothesis, but its color is shifted again, moving away from the greenish tint of the original picture. The hypotheses with face masks better preserved the greenish tint and also the teeth, as seen in the columns with

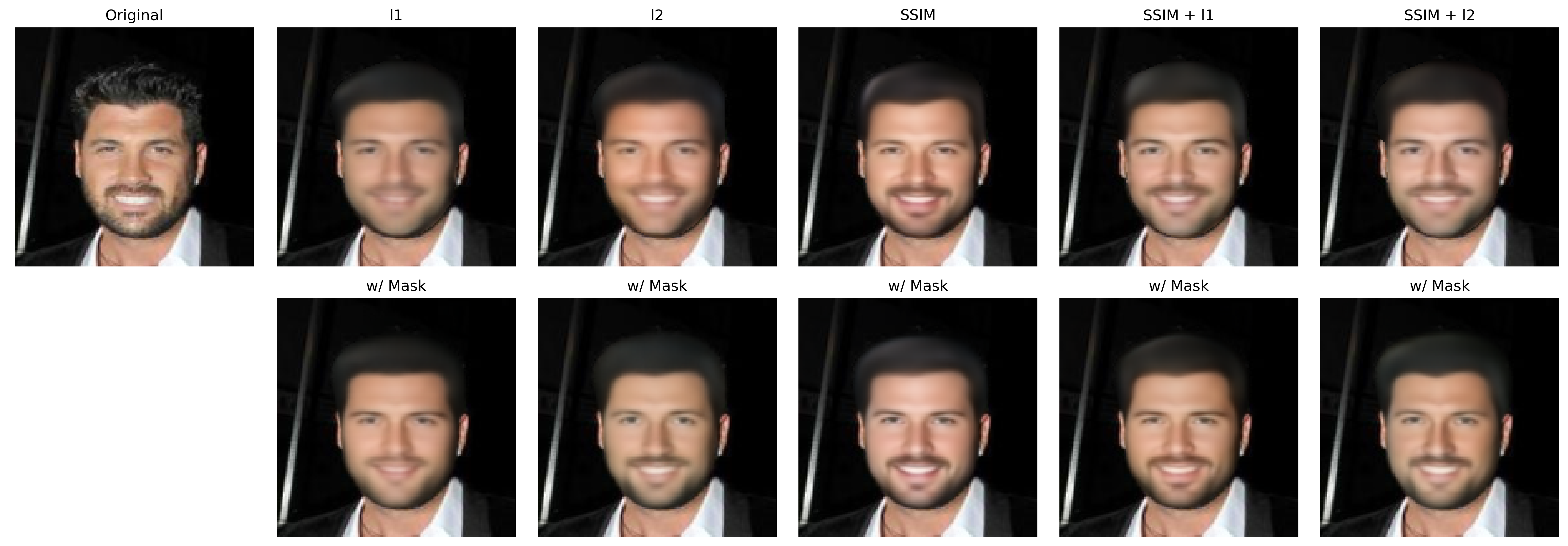

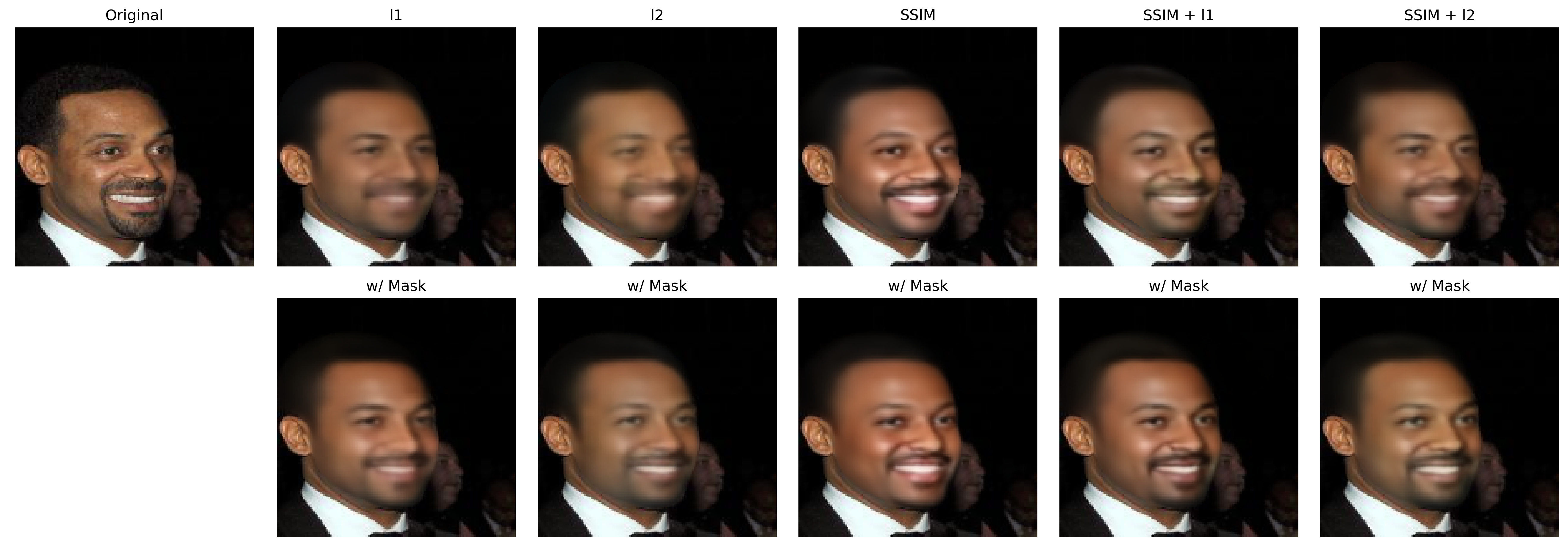

For the following figures, the hypotheses without face masks were blurrier, especially when

The figure below presents a challenging pose; clearly, using face masks or SSIM was important for good face reconstruction. Again, SSIM alone made the skin quite reddish, SSIM +