Neural Actor: Neural Free-view Synthesis of Human Actors with Pose Control

Project Page | Video | Paper | Data

Abstract: We propose Neural Actor (NA), a new method for high-quality synthesis of humans from arbitrary viewpoints and under arbitrary controllable poses. Our method is built upon recent neural scene representation and rendering works which learn representations of geometry and appearance from only 2D images. While existing works demonstrated compelling rendering of static scenes and playback of dynamic scenes, photo-realistic reconstruction and rendering of humans with neural implicit methods, in particular under user-controlled novel poses, is still difficult. To address this problem, we utilize a coarse body model as the proxy to unwarp the surrounding 3D space into a canonical pose. A neural radiance field learns pose-dependent geometric deformations and pose- and view-dependent appearance effects in the canonical space from multi-view video input. To synthesize novel views of high fidelity dynamic geometry and appearance, we leverage 2D texture maps defined on the body model as latent variables for predicting residual deformations and the dynamic appearance. Experiments demonstrate that our method achieves better quality than the state of the art on playback as well as novel pose synthesis, and can even generalize well to new poses that starkly differ from the training poses. Furthermore, our method also supports body shape control of the synthesized results.
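The unwarping of posed space into a canonical pose can be illustrated with inverse linear blend skinning (LBS). The sketch below is a minimal, pure-Python illustration, assuming that a query point borrows the skinning weights of its nearest body-model surface point and that each joint's rigid transform (canonical to posed) is already known. `blend_transforms`, `skin_to_posed` and `unwarp_to_canonical` are hypothetical names, not the repository's API, and the learned residual deformation is omitted.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]
# Rigid joint transform (R, t) mapping canonical to posed space: x_posed = R x + t
Transform = Tuple[Mat3, Vec3]


def blend_transforms(weights: Sequence[float], transforms: Sequence[Transform]) -> Tuple[Mat3, Vec3]:
    """Blend per-joint transforms with skinning weights (assumed to sum to 1)."""
    A = [[0.0] * 3 for _ in range(3)]
    b = [0.0, 0.0, 0.0]
    for w, (R, t) in zip(weights, transforms):
        for i in range(3):
            b[i] += w * t[i]
            for j in range(3):
                A[i][j] += w * R[i][j]
    return A, (b[0], b[1], b[2])


def invert_3x3(m: Mat3) -> Mat3:
    """Invert a 3x3 matrix via its adjugate; raises if it is (near) singular."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("blended transform is singular")
    s = 1.0 / det
    return [
        [(e * i - f * h) * s, (c * h - b * i) * s, (b * f - c * e) * s],
        [(f * g - d * i) * s, (a * i - c * g) * s, (c * d - a * f) * s],
        [(d * h - e * g) * s, (b * g - a * h) * s, (a * e - b * d) * s],
    ]


def skin_to_posed(x: Vec3, weights: Sequence[float], transforms: Sequence[Transform]) -> Vec3:
    """Forward LBS: canonical point -> posed point, x_p = A x + b."""
    A, b = blend_transforms(weights, transforms)
    return tuple(sum(A[i][j] * x[j] for j in range(3)) + b[i] for i in range(3))


def unwarp_to_canonical(x_posed: Vec3, weights: Sequence[float], transforms: Sequence[Transform]) -> Vec3:
    """Inverse LBS: posed point -> canonical point, x_c = A^{-1} (x_p - b)."""
    A, b = blend_transforms(weights, transforms)
    A_inv = invert_3x3(A)
    d = [x_posed[i] - b[i] for i in range(3)]
    return tuple(sum(A_inv[i][j] * d[j] for j in range(3)) for i in range(3))


if __name__ == "__main__":
    identity = ([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]], (0.0, 0.0, 0.0))
    rot_z90 = ([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]], (0.0, 0.5, 0.0))
    weights = (0.7, 0.3)
    x_p = skin_to_posed((0.3, -0.2, 1.0), weights, (identity, rot_z90))
    print(unwarp_to_canonical(x_p, weights, (identity, rot_z90)))  # recovers the canonical point
```

Inverting the blended transform, rather than blending inverted joint transforms, keeps the round trip exact for a given set of weights; in practice the weights themselves depend on the query point, which is why an extra learned correction is useful.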

This is the official repository of "Neural Actor: Neural Free-view Synthesis of Human Actors with Pose Control" (SIGGRAPH Asia 2021)


System requirements

This code is built on Neural Sparse Voxel Fields (NSVF) and has been tested on the following system:

- Python 3.8

- PyTorch 1.6.0, torchvision 0.7.0

- Nvidia apex library (optional)

- NVIDIA GPU (Tesla V100 32GB), CUDA 10.2

(Please note that our codebase should in theory also work with PyTorch >= 1.7. However, due to unexpected behavior changes in Conv2d introduced in PyTorch 1.7, our pre-trained models would produce bad results with those versions.)

Training and rendering are supported on GPUs only.
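Because the pre-trained models are sensitive to the PyTorch version and only GPU execution is supported, a quick environment check can catch problems before a long run. A minimal sketch, relying only on the standard `torch.__version__`, `torch.version.cuda` and `torch.cuda` calls; `parse_major_minor` and `is_tested_torch` are hypothetical helpers, not part of the repository.

```python
from typing import Tuple

TESTED_TORCH = (1, 6)  # the README reports testing with PyTorch 1.6.0


def parse_major_minor(version: str) -> Tuple[int, int]:
    """Extract (major, minor) from strings such as '1.6.0' or '1.6.0+cu102'."""
    major, minor = version.split("+")[0].split(".")[:2]
    return int(major), int(minor)


def is_tested_torch(version: str) -> bool:
    """True if the version matches the tested 1.6.x release line."""
    return parse_major_minor(version) == TESTED_TORCH


def main() -> None:
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed")
        return
    if not is_tested_torch(torch.__version__):
        print(f"warning: PyTorch {torch.__version__} is not the tested 1.6.x; "
              "pre-trained models may give bad results (Conv2d changes in 1.7)")
    if not torch.cuda.is_available():
        print("warning: no CUDA device found; training and rendering require a GPU")
    else:
        print(f"CUDA {torch.version.cuda}, device: {torch.cuda.get_device_name(0)}")


if __name__ == "__main__":
    main()
```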

Installation

To install, first clone this repo and install all dependencies. We suggest installing in a virtual environment.

conda create -n neuralactor python=3.8

conda activate neuralactor

pip install -r requirements.txt

This code also depends on opendr, which can be installed from source:

mkdir -p tools

git clone https://github.com/MultiPath/opendr.git tools/opendr

pushd tools/opendr

python setup.py install

popd

Then, run

pip install --editable .

Or, if you want to install the code locally, run:

python setup.py build_ext --inplace

Training and generating texture maps also require compiling imaginaire by following its installation instructions.

You can also run:

# please make sure CUDA_HOME is set correctly before compiling.

export CUDA_HOME=/usr/local/cuda-10.2/ # or the path you installed CUDA

git submodule update --init

pushd imaginaire

bash scripts/install.sh

popd

Generation with imaginaire also requires a pretrained FlowNet2 checkpoint. We suggest downloading it manually before running:

mkdir -p imaginaire/checkpoints

gdown 1hF8vS6YeHkx3j2pfCeQqqZGwA_PJq_Da -O imaginaire/checkpoints/flownet2.pth.tar
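Before compiling imaginaire or generating texture maps, a small pre-flight check can confirm that `CUDA_HOME` points at a CUDA toolkit and that the FlowNet2 checkpoint sits where the `gdown` command above saves it. A minimal sketch, assuming a Linux toolkit layout with `nvcc` under `$CUDA_HOME/bin` and that the script is run from the repository root; `preflight` is a hypothetical helper.

```python
import os
from typing import List, Mapping, Optional

# Path used by the gdown command above, relative to the repository root
FLOWNET2_CKPT = os.path.join("imaginaire", "checkpoints", "flownet2.pth.tar")


def preflight(repo_root: str, env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return a list of problems found; an empty list means the checks passed."""
    env = os.environ if env is None else env
    problems = []
    cuda_home = env.get("CUDA_HOME")
    if not cuda_home:
        problems.append("CUDA_HOME is not set")
    elif not os.path.isfile(os.path.join(cuda_home, "bin", "nvcc")):
        # assumption: Linux layout, nvcc lives in $CUDA_HOME/bin
        problems.append(f"nvcc not found under CUDA_HOME={cuda_home}")
    if not os.path.isfile(os.path.join(repo_root, FLOWNET2_CKPT)):
        problems.append(f"missing FlowNet2 checkpoint: {FLOWNET2_CKPT}")
    return problems


if __name__ == "__main__":
    for problem in preflight(os.getcwd()):
        print("problem:", problem)
```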

Dataset

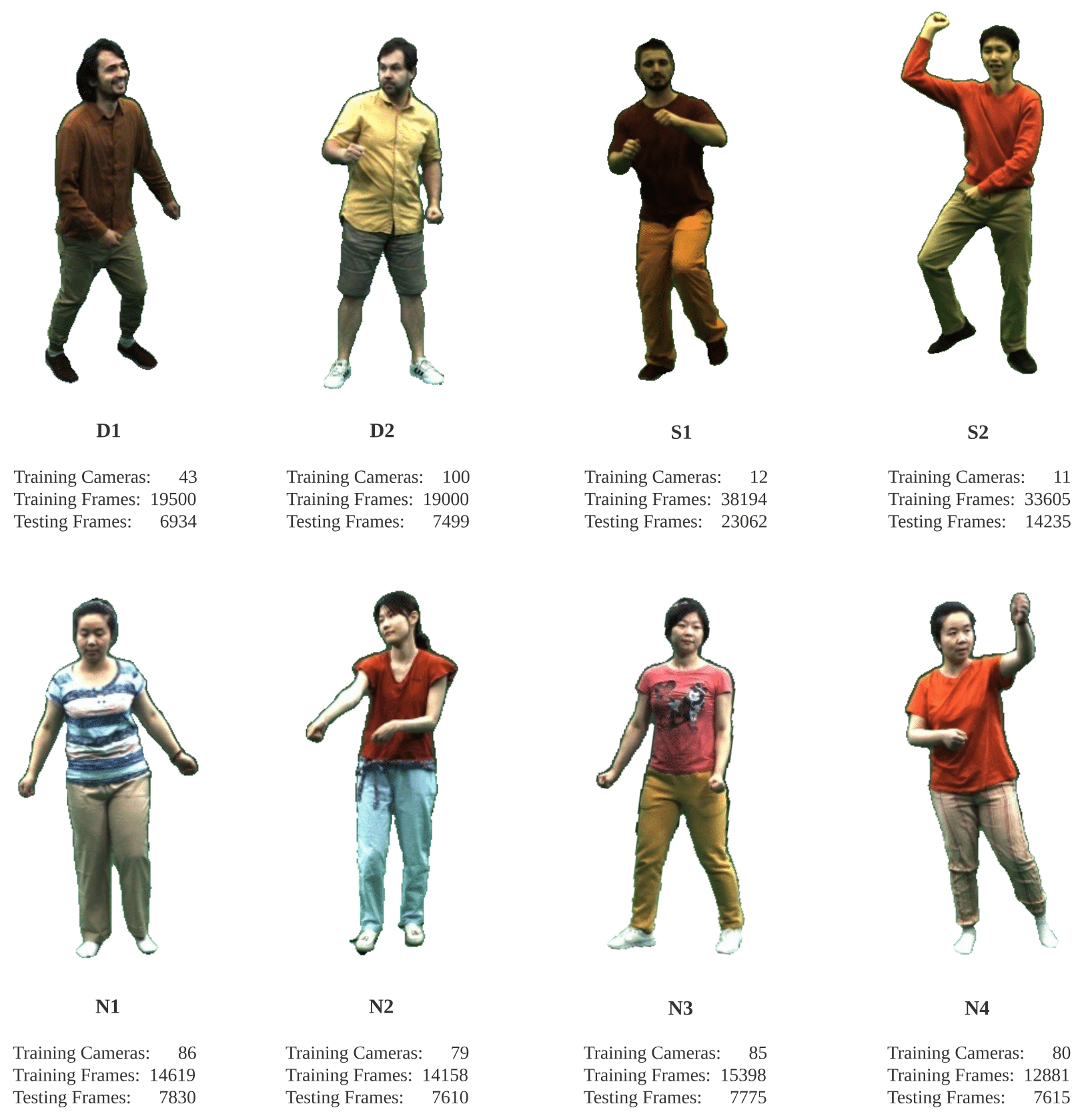

Please find the character mapping in our paper:

To access the full datasets, please register through this link and accept the license agreements.

Each dataset also needs additional files which define the canonical geometry, the UV parameterization and the skinning weights. Please download them from the Google Drive folder.

We also provide the SMPL tracking results (pose and shape parameters) of each sequence. Please download them from the Google Drive folder.
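The pose parameters drive the coarse body model. As an illustration, assuming the tracking results follow the standard SMPL layout of 72 pose values per frame (24 joints, each an axis-angle vector), the sketch below converts one frame into per-joint rotation matrices with Rodrigues' formula. The file format of the provided tracking results is not described here, so loading is left out, and `rodrigues` and `smpl_pose_to_rotations` are hypothetical names.

```python
import math
from typing import List, Sequence

Mat3 = List[List[float]]
NUM_SMPL_JOINTS = 24  # assumption: standard SMPL, 24 joints x 3 = 72 pose values


def rodrigues(axis_angle: Sequence[float]) -> Mat3:
    """Axis-angle vector (direction = axis, norm = angle in radians) -> 3x3 rotation."""
    x, y, z = axis_angle
    theta = math.sqrt(x * x + y * y + z * z)
    if theta < 1e-8:
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = x / theta, y / theta, z / theta
    c, s = math.cos(theta), math.sin(theta)
    C = 1.0 - c
    # R = cos(t) I + sin(t) [k]_x + (1 - cos(t)) k k^T
    return [
        [c + kx * kx * C, kx * ky * C - kz * s, kx * kz * C + ky * s],
        [ky * kx * C + kz * s, c + ky * ky * C, ky * kz * C - kx * s],
        [kz * kx * C - ky * s, kz * ky * C + kx * s, c + kz * kz * C],
    ]


def smpl_pose_to_rotations(pose: Sequence[float]) -> List[Mat3]:
    """Split a 72-value pose vector into 24 per-joint rotation matrices."""
    if len(pose) != 3 * NUM_SMPL_JOINTS:
        raise ValueError(f"expected {3 * NUM_SMPL_JOINTS} pose values, got {len(pose)}")
    return [rodrigues(pose[3 * j: 3 * j + 3]) for j in range(NUM_SMPL_JOINTS)]
```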

Prepare your own dataset

Please refer to a separate repo for detailed steps on pre-processing your own datasets.

Pre-trained Models

| Character | Texture Predictor | Neural Renderer | Character | Texture Predictor | Neural Renderer |
|---|---|---|---|---|---|
| D1 | Download (3.7G) | Download (271M) | D2 | Download (3.7G) | Download (271M) |
| S1 | Download (3.7G) | Download (271M) | S2 | Download (3.7G) | Download (271M) |
| N1 | Download (3.7G) | Download (271M) | N2 | Download (3.7G) | Download (271M) |
| N3 | Download (3.7G) | Download (271M) | N4 | Download (3.7G) | Download (271M) |

You can download the pre-trained texture predictor and neural renderer of each character for use in the rendering pipeline.
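To plan disk space, the sizes in the table can be totalled per character. A minimal sketch, assuming the table's G and M are decimal units (1G = 1000M); `download_size_mb` is a hypothetical helper.

```python
from typing import Iterable

CHARACTERS = ("D1", "D2", "S1", "S2", "N1", "N2", "N3", "N4")
TEXTURE_PREDICTOR_MB = 3700  # "3.7G" per character in the table
NEURAL_RENDERER_MB = 271     # "271M" per character in the table


def download_size_mb(characters: Iterable[str]) -> int:
    """Total download size in MB for both models of the given characters."""
    chars = set(characters)
    unknown = sorted(chars - set(CHARACTERS))
    if unknown:
        raise ValueError(f"unknown characters: {unknown}")
    return len(chars) * (TEXTURE_PREDICTOR_MB + NEURAL_RENDERER_MB)


if __name__ == "__main__":
    print(download_size_mb(["D1"]))      # 3971
    print(download_size_mb(CHARACTERS))  # 31768
```

Under this assumption, both models for all eight characters take roughly 32 GB.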

Free Viewpoint Rendering Pipeline

We provide an example of free-view video synthesis of a pre-trained human actor given arbitrary pose control.
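Free-viewpoint rendering needs a set of virtual cameras. A minimal sketch of one common choice, an orbit of look-at cameras around the actor, assuming a y-up world and OpenCV-style camera axes (x right, y down, z forward). `orbit_cameras` and its 3x4 camera-to-world output are hypothetical and not the repository's camera format.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
WORLD_UP: Vec3 = (0.0, 1.0, 0.0)  # assumption: y-up world coordinates


def _sub(a: Sequence[float], b: Sequence[float]) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Sequence[float], b: Sequence[float]) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def _normalize(v: Sequence[float]) -> Vec3:
    n = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / n, v[1] / n, v[2] / n)


def orbit_cameras(center: Vec3, radius: float, height: float, num_views: int) -> List[List[List[float]]]:
    """Return 3x4 camera-to-world matrices [right | down | forward | eye].

    Cameras circle `center` at `radius`, offset by `height` along the up axis,
    and each one looks at `center`.
    """
    if radius <= 0 or num_views <= 0:
        raise ValueError("radius and num_views must be positive")
    poses = []
    for k in range(num_views):
        phi = 2.0 * math.pi * k / num_views
        eye = (center[0] + radius * math.sin(phi),
               center[1] + height,
               center[2] + radius * math.cos(phi))
        forward = _normalize(_sub(center, eye))
        right = _normalize(_cross(forward, WORLD_UP))
        down = _cross(forward, right)  # unit length: forward and right are orthonormal
        poses.append([[right[i], down[i], forward[i], eye[i]] for i in range(3)])
    return poses
```

Each matrix could then be paired with one frame of a driving pose sequence; how the provided rendering example consumes cameras is not covered by this sketch.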

Train a new model

We provide an example for training the texture prediction and free-view neural rendering.

License

NeuralActor is released under the CC-BY-NC license. The license applies to the pre-trained models as well.

Citation

Please cite as

@article{liu2021neural,
  title = {Neural Actor: Neural Free-view Synthesis of Human Actors with Pose Control},
  author = {Lingjie Liu and Marc Habermann and Viktor Rudnev and Kripasindhu Sarkar and Jiatao Gu and Christian Theobalt},
  year = {2021},
  journal = {ACM Trans. Graph. (ACM SIGGRAPH Asia)}
}

If you use our dataset, please note that D1 and D2 are originally from the paper Real-time Deep Dynamic Characters, and S1 and S2 are originally from the paper DeepCap: Monocular Human Performance Capture Using Weak Supervision. We processed these four datasets into our Neural Actor format. Therefore, please also consider citing the following references:

@article{habermann2021,
  author = {Marc Habermann and Lingjie Liu and Weipeng Xu and Michael Zollhoefer and Gerard Pons-Moll and Christian Theobalt},
  title = {Real-time Deep Dynamic Characters},
  journal = {ACM Transactions on Graphics},
  month = {aug},
  volume = {40},
  number = {4},
  articleno = {94},
  year = {2021},
  publisher = {ACM}
}

@inproceedings{deepcap,
  title = {DeepCap: Monocular Human Performance Capture Using Weak Supervision},
  author = {Habermann, Marc and Xu, Weipeng and Zollhoefer, Michael and Pons-Moll, Gerard and Theobalt, Christian},
  booktitle = {{IEEE} Conference on Computer Vision and Pattern Recognition (CVPR)},
  month = {jun},
  organization = {{IEEE}},
  year = {2020},
}