This is a Terraform toolkit that loads Overture Maps data into BigQuery tables using the BigQuery Data Transfer Service. You can clone this repo and run the terraform immediately; for more information on how it works, read below.

export TF_VAR_AWS_ACCESS_KEY_ID=$AWS_ACCESS_KEY_ID

export TF_VAR_AWS_SECRET_ACCESS_KEY=$AWS_SECRET_ACCESS_KEY

tf apply

This process uses BigQuery Data Transfer Service, which requires an AWS access key.

Create an AWS user with at least AmazonS3ReadOnlyAccess permissions, and then create an access key for that user.

Save the Access Key ID and Secret Access Key. You will need them in step 3.

export TF_VAR_AWS_ACCESS_KEY_ID=$AWS_ACCESS_KEY_ID

export TF_VAR_AWS_SECRET_ACCESS_KEY=$AWS_SECRET_ACCESS_KEY

Overture contains sets of parquet files categorized by theme and type; each type will be loaded in as a BigQuery table.

- base

- buildings

- divisions

- places

- transportation

Terraform will create the tables for you when you run tf apply.

Alternatively, this repo contains the BigQuery schema files for these tables that you can use to create the tables.

bq mk --table $PROJECT:$DATASET.infrastructure schemas/infrastructure.json

bq mk --table $PROJECT:$DATASET.land schemas/land.json

bq mk --table $PROJECT:$DATASET.land_use schemas/land_use.json

bq mk --table $PROJECT:$DATASET.water schemas/water.json

bq mk --table $PROJECT:$DATASET.building schemas/building.json

bq mk --table $PROJECT:$DATASET.building_part schemas/building_part.json

bq mk --table $PROJECT:$DATASET.boundary schemas/boundary.json

bq mk --table $PROJECT:$DATASET.division schemas/division.json

bq mk --table $PROJECT:$DATASET.division_area schemas/division_area.json

bq mk --table $PROJECT:$DATASET.place schemas/place.json

bq mk --table $PROJECT:$DATASET.connector schemas/connector.json

bq mk --table $PROJECT:$DATASET.segment schemas/segment.json

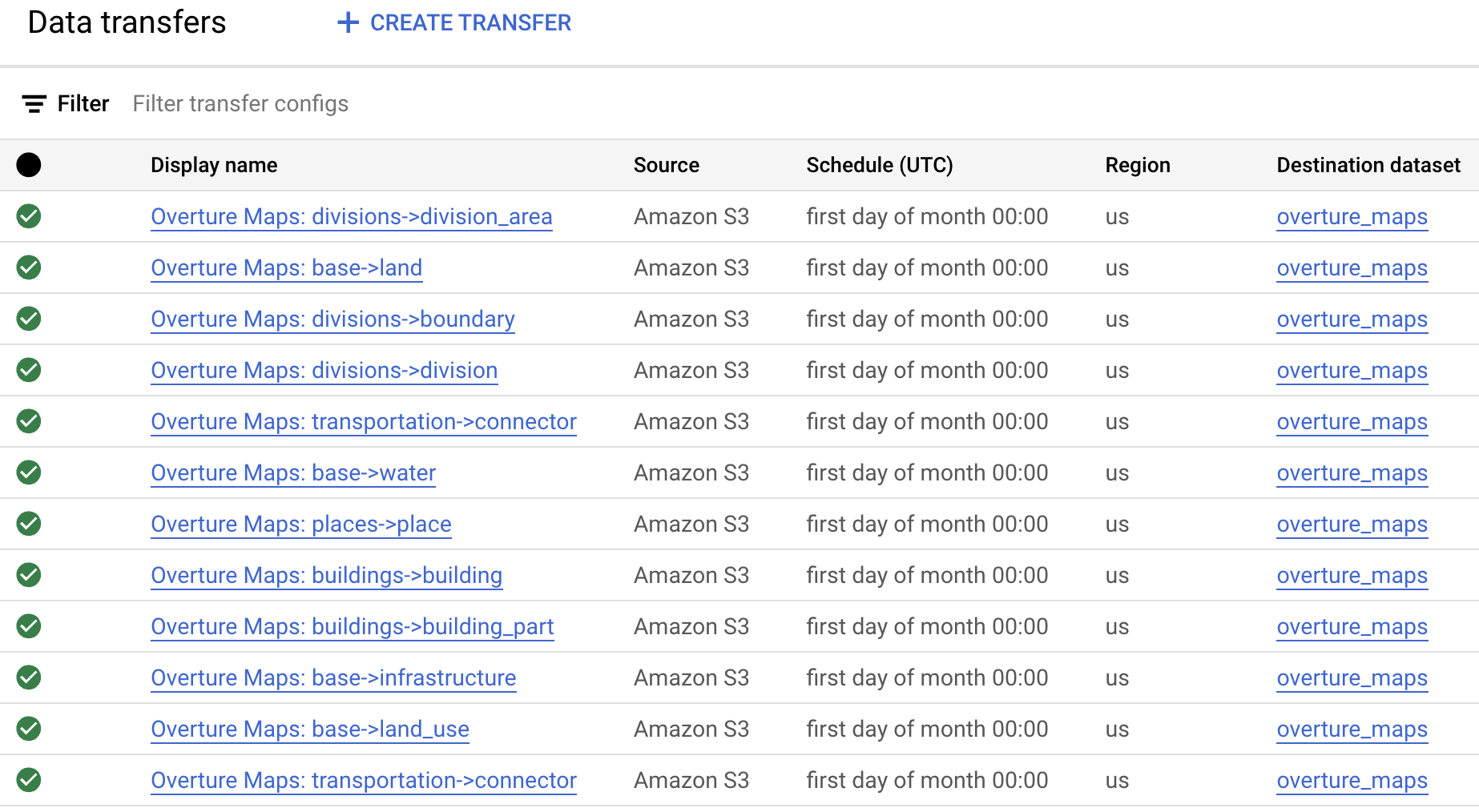

BigQuery Data Transfer service will automatically download the data from the Overture S3 buckets and save it in BigQuery. The terraform included in this repo will create one data transfer job per table, and default to update the tables every 30 days. You can manually create the transfer jobs in the BigQuery Transfers section of the Google Cloud Console, or deploy via Terraform.

tf apply

Now, manually run all transfer jobs now to create tables now without waiting for the monthly schedule. Each job should take about 10 minutes

To run geospatial queries, the table needs a GEOGRAPHY column. Parquet includes the geospatial data in WKB format, which needs to be converted.

Modify the query in create_mv.sql and run it for each table. For example,

create materialized view `<PROJECT>.overture_maps.buildings_mv`

cluster by geometry

as (

SELECT

* except(geometry),

st_geogfromwkb(geometry) as geometry

FROM `<PROJECT>.overture_maps.buildings`

)

The materialized views will automatically remain up-to-date with the base tables as they are refreshed once per month.